Whether you’re scraping data from the internet, conducting statistical studies, or creating dashboards and visualizations, you’ll have to transform the raw data in some way before it becomes usable. This is where Data Wrangling comes into play. Data Wrangling is the process of translating raw data into more usable representations. It’s a prerequisite for good Data Analysis and consists of several distinct steps, of which you’ll get a glimpse below.

This article gives you an overview of different paid and free data wrangling tools that you can use to get better insights from your data. Here’s all you need to know about Data Wrangling, as well as the top 12 Data Wrangling Tools.

What is Data Wrangling?

“Data Wrangling” is a catch-all term for the early stages of the data analytics process. The phrase is also sometimes used to describe individual steps in this process, which can be confusing. For example, you may hear it used interchangeably with Data Mining or Data Cleaning. In reality, the last two are subsets of Data Wrangling.

While the order and number of steps will vary based on the dataset, the following are some of the most common:

- Collecting Data: The first stage is to figure out what data you’ll need, where you’ll get it, and how you’ll get it (or scrape it).

- Exploratory Data Analysis: Conducting an initial analysis helps summarize and clarify the essential properties of a dataset, or reveal what it lacks.

- Organizing the Data: The majority of unstructured data is text-heavy. You’ll have to parse your data (break it down into its syntactic components) and convert it to a more user-friendly format.

- Cleaning your Data: Once your data has some structure, it has to be cleaned. This entails eliminating errors, duplicate records, and undesired outliers, among other things.

- Enriching: After that, you’ll need to improve your data by either filling in missing values or integrating it with other sources to provide more data points.

- Validation: Next, double-check that your data meets all of your requirements and that you’ve carried out all of the previous steps correctly. This frequently entails the use of programming languages such as Python.

- Storing Data: Finally, store and publish your data in a dedicated architecture, database, or warehouse so that end-users, whoever they may be, may access it.
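The organizing, cleaning, enriching, and validation steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production pipeline: the sample data, the column names (`id`, `city`, `temp_c`), the mean-fill strategy, and the plausibility range are all assumptions made up for the example.

```python
import csv
import io

# Hypothetical raw export: stray whitespace, a duplicate row, a missing value.
RAW = """id,city,temp_c
1, Berlin ,21.5
2,Paris,
2,Paris,
3,rome,19.0
"""

def organize(text):
    """Parse CSV text into a list of dicts (the organizing step)."""
    return list(csv.DictReader(io.StringIO(text)))

def clean(rows):
    """Trim whitespace, normalize city casing, and drop exact duplicate rows."""
    seen, out = set(), []
    for row in rows:
        fixed = {k: v.strip() for k, v in row.items()}
        fixed["city"] = fixed["city"].title()
        key = tuple(fixed.items())
        if key not in seen:
            seen.add(key)
            out.append(fixed)
    return out

def enrich(rows):
    """Convert temperatures to floats and fill gaps with the mean of known values."""
    known = [float(r["temp_c"]) for r in rows if r["temp_c"]]
    mean = sum(known) / len(known)
    for r in rows:
        r["temp_c"] = float(r["temp_c"]) if r["temp_c"] else mean
    return rows

def validate(rows):
    """Fail loudly if ids are not unique or a temperature is implausible."""
    ids = [r["id"] for r in rows]
    assert len(ids) == len(set(ids)), "duplicate ids"
    assert all(-90 <= r["temp_c"] <= 60 for r in rows), "temperature out of range"
    return rows

wrangled = validate(enrich(clean(organize(RAW))))
```

Keeping each step in its own function makes it easy to reorder or skip steps, which matters because the order varies from dataset to dataset.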

Hevo is the only real-time ELT No-code Data pipeline platform that cost-effectively automates data pipelines that are flexible to your needs. With integration with 150+ Data Sources (60+ free sources), we help you not only export data from sources & load data to the destinations but also transform & enrich your data, & make it analysis-ready.

Check out what makes Hevo amazing:

- Data Transformation: Hevo provides a simple interface to perfect, modify, and enrich the data you want to transfer.

- Schema Management: Hevo can automatically detect the schema of the incoming data and map it to the destination schema.

- Models: This helps in modifying your data using SQL models. You can schedule the Models as per your required schedule and frequency to have timely access to analysis-ready data.

Top 12 Data Wrangling Tools

1) Talend

Talend is one of the best tools for Data Wrangling, Data Preparation, and Data Cleansing. It’s a browser-based platform with a simple point-and-click interface that’s ideal for businesses. This makes Data Manipulation far simpler than it would be with heavy code-based programs. It is also possible to code from scratch rather than using the built-in Extract, Transform, and Load (ETL) capabilities. As a result, it’s a good choice for people who wish to learn as they go.

Talend’s functionality includes the ability to apply rules to a variety of datasets, save them, and share them across teams. It also has built-in processes for tasks like enrichment and integration, as well as the ability to integrate with a range of different enterprise systems.

Drawbacks of Talend

- Talend’s Machine Learning functionality isn’t always up to par. More complex jobs, such as Fuzzy Matching (finding data points that refer to the same thing but don’t match 100%), may suffer as a result.

- Since it has so much capability, it uses a lot of memory and can be a little buggy at times.

However, these drawbacks are generally outweighed by the level of Data Manipulation that even a novice may do with the platform.
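To make Fuzzy Matching concrete, here is a minimal sketch that uses Python’s standard `difflib` module. It does not reflect how Talend implements matching; the `similarity` and `best_match` helpers, the 0.8 threshold, and the customer names are assumptions chosen for illustration.

```python
from difflib import SequenceMatcher

def similarity(a, b):
    """Return a 0..1 similarity ratio, ignoring case and surrounding whitespace."""
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()

def best_match(name, candidates, threshold=0.8):
    """Return the closest candidate, or None if nothing clears the threshold."""
    scored = [(similarity(name, c), c) for c in candidates]
    score, match = max(scored)
    return match if score >= threshold else None

# Hypothetical master list of customer names.
customers = ["Acme Corporation", "Globex Inc", "Initech LLC"]

typo_match = best_match("acme corporaton", customers)  # misspelled input
no_match = best_match("Umbrella", customers)           # nothing similar enough
```

The threshold is the main tuning knob: set it too low and unrelated records are merged, set it too high and genuine near-duplicates are missed.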

Key Features of Talend

- Integration: Talend enables enterprises to manage any data type from a variety of data sources, whether in the Cloud or on-premises.

- Data Quality: Talend automatically purifies ingested data using Machine Learning capabilities such as Data Deduplication, Validation, and Standardization.

- Flexible: When creating data pipelines from your connected data, Talend goes beyond vendor or platform. Talend allows you to run data pipelines anywhere once you’ve created them from your ingested data.

Discover top Talend alternatives in our latest blog.

2) Alteryx APA

The Alteryx APA platform is one of the best Data Wrangling Tools, providing tools not only for Data Wrangling but also for more general Data Analytics and Data Science needs. If you want everything in one place, this is ideal. Alteryx has over 100 pre-built Data Wrangling Tools that cover everything from data profiling to find-and-replace to fuzzy matching. However, one of its most notable advantages is the large number of sources it supports, all without sacrificing speed. Data may be extracted from almost any spreadsheet or file, as well as platforms like Salesforce, third-party websites, social media, mobile apps, and census databases.

Alteryx also processes various Data Sources far more quickly than MS Excel, which has a tendency to slow down when dealing with huge datasets. It can also export data to any system and works well with other Data Wrangling Tools such as Tableau. This simplifies the process of creating excellent Data Visualizations.

Drawbacks of Alteryx

- On the flip side, Alteryx’s drag-and-drop interface might make things more difficult because each stage of the procedure must be included in the visual workflow. The interface also feels dated, which is unfortunate because it does not reflect the platform’s potential.

- However, the price is by far the most significant stumbling block. It features a costly license-based pricing model, which means that each user must pay a charge. There isn’t any free trial version.

While Alteryx is still one of the great Data Wrangling Tools, open-source competitors provide similar capabilities if you’re willing to forgo the convenience of having everything in one spot.

Key Features of Alteryx

- Collaborate and Discover: Users can search any data asset and cooperate with other users not only to create new analytics tools, but also to utilize models created by others to avoid having to reinvent the wheel.

- Prepare, Analyze, and Model: These are the three steps in the process. Users can prepare their data and create effective models that can be utilized and reused for different datasets.

- Sharing Social/Community Experience: Alteryx encourages users to share information. They’ve taken a few cues from the Open Source movement, such as encouraging complete disclosure of information or analytics tools produced by the community.

3) Altair Monarch

Another one of the leading Data Wrangling Tools, Altair Monarch, converts complex, unstructured data into a more readable format. It claims to be able to extract data from any source, even PDFs and text-based reports, which are challenging and unstructured forms. It then changes the data according to the rules you provide before directly inserting it into your SQL Database. Notably, the platform includes a number of solutions tailored to the accounting and healthcare industries’ reporting requirements. It’s extremely popular in these fields.

Drawbacks of Altair Monarch

- Altair Monarch began as one of the simplest Data Wrangling Tools, but it has since grown in capabilities. While this is wonderful if you have intricate criteria, it makes the product less user-friendly for those who don’t. Most users now need to be trained before they can utilize it.

- With larger datasets, the extra functionality can make it a little sluggish, and the PDF import tool isn’t as reliable as they’d like to admit.

Apart from that, it’s one of the better-performing Data Wrangling Tools.

Key Features of Altair Monarch

- Integrations: Pull data from flat files, relational databases, OLEDB/ODBC systems, web inputs, data models, workspaces, and multi-structured data sources with data integrations. Export data to CSV, MS-Access, and JSON file formats, as well as reporting and analysis applications like IBM Cognos Analytics, Tableau, and Qlik.

- Importing PDFs: Its PDF engine allows you to choose and alter tables from text-heavy PDF files before exporting them to Data Prep Studio. Create grids to which text is aligned by identifying graphical features such as rectangles and lines on produced PDF page pictures. Extract all backgrounds and fonts, including monospace and free-form typefaces, in a single step.

- Trapping in Excel: To construct workbooks or combine numerous worksheets into one, extract specific data fields. To avoid data traps, reuse data models by redacting personal information from templates.

4) Trifacta

Trifacta is a Cloud-based Interactive platform for profiling data and applying Machine Learning and Analytics models to it. Regardless of how chaotic or complex the datasets are, this data engineering tool tries to create intelligible data. Deduplication and Linear Transformation techniques allow users to delete duplicate entries and fill blank cells in datasets.

In any dataset, these types of Data Wrangling Tools look for outliers and erroneous data. With only a few clicks and drags, the data at hand is graded and intelligently transformed using Machine Learning-powered suggestions to speed up Data Preparation. Trifacta’s Data Wrangling is done through visually appealing profiles that can be used by both non-technical and technical personnel. Trifacta takes pride in its user-centric design, which includes visible and intelligent changes.

Drawbacks of Trifacta

- External datastore connectivity is not supported. Backend data storage integrations are not supported. The application must be used to upload and download all files. It is not possible to connect to relational sources.

- The only formats in which the results can be written are CSV and JSON. Compression of outputs is not possible.

Even so, Trifacta is one of the best Cloud-based Data Wrangling Tools available, and these drawbacks are generally outweighed by how easy the platform is to use, even for a novice.

Key Features of Trifacta

- Cloud Integration: Supports preparation workloads in any cloud or hybrid environment, allowing developers to ingest data for wrangling from anywhere.

- Standardization: Trifacta wrangler provides a number of mechanisms for detecting data patterns and standardizing outputs. Data engineers can choose to standardize by pattern, function, or a combination of both.

- Easy Workflow: Trifacta uses flows to arrange data preparation tasks. A flow is made up of one or more datasets as well as the recipes that go with them (defined steps that transform data).
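Standardizing by pattern or by function, as described in the feature list above, can be illustrated in plain Python. This is not Trifacta’s recipe language; the `standardize_phone` and `standardize_name` helpers and the US-style phone format are assumptions used only to show the idea.

```python
import re

def standardize_phone(raw):
    """Pattern-based: normalize 10-digit numbers to NNN-NNN-NNNN, else None."""
    digits = re.sub(r"\D", "", raw)  # keep digits only
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]          # drop a leading country code of 1
    if len(digits) != 10:
        return None                  # does not fit the expected pattern
    return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"

def standardize_name(raw):
    """Function-based: collapse repeated whitespace and apply title case."""
    return " ".join(raw.split()).title()

phones = [standardize_phone(p) for p in ["(415) 555-0132", "+1 415.555.0132", "555-0132"]]
name = standardize_name("  ada   LOVELACE ")
```

Returning `None` for values that do not fit the pattern keeps bad records visible instead of silently passing them through.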

5) Datameer

Datameer is a SaaS Data Transformation platform that helps software engineers ease Data Munging and Integration. Datameer allows you to extract, manipulate, and load datasets into Cloud data warehouses like Snowflake. Engineers can input data in a variety of formats for aggregation using this data wrangling tool, which works well with typical dataset formats like CSV and JSON.

To fulfill all Data Transformation needs, Datameer includes catalog-like Data Documentation, Comprehensive Data Profiling, and discovery. Users can track faulty, missing, or outlying fields and values, as well as the overall form of data, using the tool’s detailed visual data profile. Datameer, which runs on a Scalable Data Warehouse, uses efficient Data Stacks and excel-like functions to transform data for meaningful insights.

Drawbacks of Datameer

- Having a lot of tabs open can make it difficult to concentrate.

- The video tutorials are a little on the long side.

These drawbacks are minor compared to the features it provides, making it one of the best Data Wrangling Tools available.

Key Features of Datameer

- Multi-User Environment: Supports both techies and non-techies with multi-person data transformation settings, low code, code, and hybrid.

- Shared Workspace: To expedite projects, Datameer allows teams to reuse and collaborate on models.

- Extensive Documentation: Datameer uses metadata and wiki-style descriptions, tags, and comments to facilitate both system and user-generated data documentation.

6) Microsoft Power Query

Microsoft Power Query is one of the most popular Data Wrangling Tools. While Microsoft provides a wide range of tools, MS Power Query stands out when it comes to Data Manipulation. It has a lot of the same ETL features as the other Data Wrangling Tools. Power Query, on the other hand, is unique in that it is integrated directly into Microsoft Excel. This makes it the ideal next step for Excel experts who want to take their skills to the next level.

Drawbacks of Power Query

- The fact that Power Query is a Microsoft product is both its greatest strength and its greatest weakness. While it’s a good tool, its main selling point is that it interfaces with other Microsoft technologies like Power BI (their data visualization tool) and Power Automate (their workflow software).

- Although the fact that it does not require any coding is a plus, there may be better solutions available if you do not utilize any of their other software.

Apart from that, it performs well and is one of the most popular Data Wrangling Tools.

Key Features of Power Query

- Connectivity to a Wide Range of Data Sources: Power Query was built to import data from a wide range of sources, including text files, Excel Workbooks, and CSV files, among others.

- Combining Tables: Power Query’s Merge option can replace Excel’s VLOOKUP function. VLOOKUP is a useful way of finding corresponding values in a small dataset, but it becomes troublesome when applied to a large dataset with thousands of rows.

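- Repeatable Imports: When changes to the same source data must be imported on a regular basis, such as once a week or once a month, repeating the preparation by hand becomes difficult. Power Query records the transformation steps in a query, so they can be re-applied whenever the data is refreshed.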
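The idea behind merging two tables instead of looking up values one cell at a time can be sketched in plain Python. This is not Power Query’s M language; the `left_merge` helper and the `orders` and `products` tables are hypothetical.

```python
def left_merge(left, right, key):
    """Left-join two lists of dicts on `key`; unmatched left rows are kept as-is."""
    index = {row[key]: row for row in right}  # build the lookup table once
    merged = []
    for row in left:
        match = index.get(row[key], {})
        merged.append({**match, **row})       # left-hand values win on conflicts
    return merged

orders = [
    {"order_id": 1, "sku": "A1"},
    {"order_id": 2, "sku": "B2"},
    {"order_id": 3, "sku": "Z9"},  # no matching product
]
products = [
    {"sku": "A1", "name": "Widget"},
    {"sku": "B2", "name": "Gadget"},
]

result = left_merge(orders, products, "sku")
```

Because the lookup index is built once, every row is matched in a single pass, which is why a merge tends to scale better than a per-cell lookup formula.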

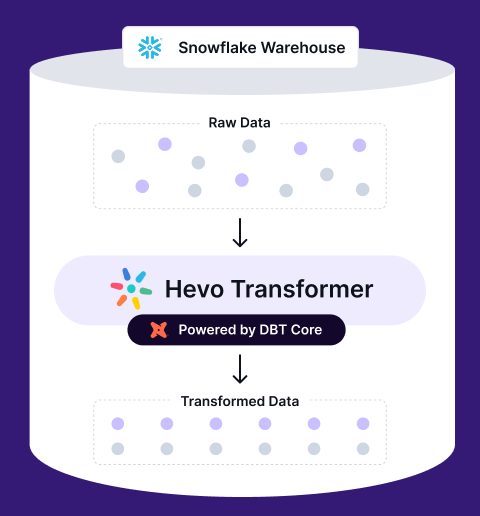

Seamlessly join, aggregate, modify your data on Snowflake. Automate dbt workflows, version control with Git, and preview changes in real-time. Build, test, and deploy transformations effortlessly, all in one place.

🔹 Instant Data Warehouse Integration – Connect in minutes, auto-fetch tables

🔹 Streamlined dbt Automation – Build, test, and run models with ease

🔹 Built-in Version Control – Collaborate seamlessly with Git integration

🔹 Faster Insights – Preview, transform, and push data instantly

7) Tableau Desktop

This list wouldn’t be complete without a data visualization tool. Tableau Desktop is the desktop edition of Tableau and offers a variety of eye-catching visualizations, including Treemaps, Gantt Charts, Histograms, and Motion Charts. It’s important to note that it’s not primarily a Data Wrangling Tool, but it does have some Data Preparation and Cleaning features that aid in the creation of the flashy visuals for which it’s known.

The data preview window allows you to quickly see the key elements of a dataset. You can also use the Data Interpreter to identify columns, headings, and rows. You can split string values into multiple columns and group continuous values into ranges (known as bins) to aid in the creation of Histograms, among other things.
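Splitting a string column and binning continuous values are generic operations, so they can be sketched outside Tableau as well. The following plain-Python example is only an illustration; the `split_column` and `bin_values` helpers and the sample records are assumptions.

```python
def split_column(rows, column, sep, new_names):
    """Split one string column into several named columns."""
    out = []
    for row in rows:
        parts = row[column].split(sep)
        new_row = {k: v for k, v in row.items() if k != column}
        new_row.update(zip(new_names, (p.strip() for p in parts)))
        out.append(new_row)
    return out

def bin_values(values, width):
    """Count values per fixed-width bin; each key is the bin's lower edge."""
    counts = {}
    for v in values:
        edge = (v // width) * width
        counts[edge] = counts.get(edge, 0) + 1
    return dict(sorted(counts.items()))

people = [
    {"name": "Lovelace, Ada", "age": 36},
    {"name": "Turing, Alan", "age": 41},
    {"name": "Hopper, Grace", "age": 45},
]

split = split_column(people, "name", ",", ["last", "first"])
histogram = bin_values([p["age"] for p in people], 10)
```

The bin counts are exactly what a histogram plots: one bar per bin, with the bar height equal to the count.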

Drawbacks of Tableau Desktop

- Tableau’s data prep capabilities aren’t as extensive as those of other Data Wrangling Tools, but they’re ideal for preparing data for great visuals. It can also handle unstructured data. However, you might find it helpful to clean this out first with Alteryx or Python.

Key Features of Tableau Desktop

- Visually Appealing: Tableau generates aesthetically appealing and interactive reports and dashboards in general. This makes the entire data wrangling process a lot clearer.

- High Security: Tableau has numerous authentication techniques and authorization systems for data connection and user access, resulting in a highly secure environment. Tableau can also work with security protocols such as Active Directory and Kerberos. Cryptographic processes are used in security protocols to provide secure communication between two or more parties.

- Real-Time Sharing: Tableau allows users to share their reports, dashboards, workbooks, and other data visualizations in real time with other users or their team. This improves team collaboration, allowing businesses to make quicker decisions.

8) Scrapy

Scrapy is a popular web scraping framework that is more complex than most tools on this list but highly versatile. Based on Python, it is a fast and powerful tool for data wrangling.

Features of Scrapy

- Open-Source: Scrapy is a completely free, open-source web scraping framework built in Python, which makes it readily available to all users.

- High Scalability: Scrapy is fast and scalable, making it suitable for projects of any size.

- GitHub repository: What makes Scrapy unique is its great repository on GitHub as it is open-source. It contains all the code you could need to alter its functionality and remove or extend its modules in a wide variety of ways.

Drawbacks of using Scrapy

- Scrapy’s major drawback is its learning curve. As it is written in Python, you’ll need a good understanding of the language before using it.

- Even though its functionality is wide-range, a drawback of this is that you’ll need to learn what each module can do before deciding whether or not to use it.
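To give a feel for the parsing work a scraper does, here is a minimal sketch that uses only Python’s standard `html.parser` module. It is deliberately not Scrapy code and makes no network requests; the `LinkExtractor` class and the inline `PAGE` snippet are assumptions for illustration. A framework such as Scrapy layers crawling, scheduling, and export on top of this kind of extraction.

```python
from html.parser import HTMLParser

class LinkExtractor(HTMLParser):
    """Collect (text, href) pairs for every <a href=...> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> currently being read, if any
        self._text = []     # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None

# Hypothetical page fragment standing in for a downloaded document.
PAGE = (
    '<ul><li><a href="/p/1">First post</a></li>'
    '<li><a href="/p/2">Second <b>post</b></a></li></ul>'
)

parser = LinkExtractor()
parser.feed(PAGE)
```

After `feed` returns, `parser.links` holds the extracted pairs, ready to be written to CSV or JSON for the later wrangling steps.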

9) Parsehub

One of the first steps in the data analytics process is data collection, which is often done on the web. For those who are not yet comfortable with Python, Parsehub provides a useful alternative.

Features of Parsehub are:

- Easy-to-use Interface: This web scraping and data extraction tool offers an easy-to-use desktop interface that extracts data from a wide range of interactive websites. Without writing any code, you can simply click on the data you would like to collect and then export it as JSON, as an Excel spreadsheet, or through an API.

- Graphical User Interface: Parsehub’s main selling point for beginners is that it has a graphical user interface. It offers similar functionality to other web scraping tools but at a lower price.

Limitations of Parsehub:

- Many websites have anti-scraping protocols, which Parsehub can’t get around.

- Parsehub is not suitable for larger projects, either, although its customer support can help solve most issues. In short, if you’re new to web scraping, Parsehub is great for getting to grips with the fundamentals.

10) Infogix

Infogix provides a robust suite of data governance tools, including metadata management, data lineage, data cataloging, and business glossaries. These tools are complemented by customizable dashboards and zero-code workflows that adjust to the evolving data management needs of an organization. Widely used for managing data governance, risk, compliance, and data value, Infogix is also noted for its flexibility and user-friendly interface, accommodating a range of data analysis tasks from the extensive to the more focused.

Features:

- Data360 Analyze: Offers self-service data preparation and analytics with drag-and-drop functionality, allowing users to quickly acquire, prepare, and analyze data without heavy IT involvement.

- Data360 Govern: Establishes a strong governance framework to manage data effectively, with customizable dashboards and workflows, and metadata harvesting.

- Data360 DQ+: Enhances data quality monitoring, visualization, remediation, and reconciliation across various systems and processes.

- No Coding Required: Infogix provides an easy configuration model for businesses to manage workflows without coding or IT dependency.

- Real-Time Insights: Personalized dashboards and quick data analysis for timely insights and decision-making.

Drawbacks:

- Users have reported that the interface may hang when dealing with large amounts of data.

- Some users have noted that lineage functionality needs improvement.

- Infogix may not support multi-tenancy deployment with a single license.

11) Paxata

Paxata, now a part of DataRobot, stands out in the crowded field of data preparation with its self-service Adaptive Data Preparation platform. The platform is designed to empower business analysts to collect, explore, transform, and combine data rapidly. What sets Paxata apart is its ability to automate data preparation tasks using algorithms and machine learning, significantly reducing the time and effort required for data cleaning and integration.

Features

- Machine Learning Algorithms: Paxata leverages machine learning for intelligent data profiling, standardization, and deduplication, which streamlines the data preparation process.

- Interactive Excel-like Interface: The platform provides an interactive interface that allows users to investigate and discover trends, outliers, and patterns across their entire dataset.

- Smart Recommendations: It detects joins, appends, and overlaps across data sources with smart machine learning recommendations.

- Data Profiling: Paxata profiles uploaded data and generates a downloadable report showing key column statistics, which aids in understanding data quality.

- Collaboration and Governance: The platform supports tagging, annotating, sharing, and collaboration using a central catalog, creating repeatability and ensuring end-to-end data lineage and traceability.
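A data profile of the kind mentioned in the feature list above boils down to a handful of statistics per column. The sketch below is plain Python and says nothing about how Paxata computes its reports; the `profile` helper, the chosen statistics, and the sample rows are assumptions.

```python
def profile(rows):
    """Return per-column count, missing, distinct, min, and max for a list of dicts."""
    columns = {k for row in rows for k in row}
    report = {}
    for col in sorted(columns):
        values = [row.get(col) for row in rows]
        present = [v for v in values if v not in (None, "")]  # treat None/"" as missing
        report[col] = {
            "count": len(values),
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "min": min(present) if present else None,
            "max": max(present) if present else None,
        }
    return report

# Hypothetical uploaded dataset with two gaps.
sample = [
    {"country": "DE", "revenue": 120.0},
    {"country": "FR", "revenue": None},
    {"country": "DE", "revenue": 80.5},
    {"country": "", "revenue": 95.0},
]

report = profile(sample)
```

Even this small report answers the first questions of any cleaning job: how much is missing, and how many distinct values each column really has.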

Drawbacks

- Paxata may struggle with ingesting large XLSX files due to the requirement of loading the entire file into RAM, which can lead to performance issues or errors.

- The platform’s extensive features can be overwhelming for new users, despite its user-friendly interface.

- Finding a pricing model that balances the advanced features with cost-effectiveness has been challenging.

12) Tamr

Tamr is a data integration platform that leverages machine learning to address the challenges of data curation and mastering. It stands out for its ability to connect and enrich a wide variety of internal and external data sources, making underutilized data more accessible for analytics. Tamr’s platform is known for its human-guided machine learning, which cleans, labels, and connects structured data to overcome the obstacles posed by data variety.

Features:

- Active Learning for Categorization: Tamr’s active learning increases the accuracy and efficiency of categorization projects, which is particularly beneficial for handling complex data sets.

- Semantic Comparison with Large Language Models (LLMs): This feature helps in identifying discrete similarities and differences within data, which enhances the matching accuracy.

- Pre-trained Machine Learning Models: Tamr offers a robust library of continuously improving matching models, reducing the need for high upfront investment in machine learning.

- Curation Interface: It engages users to collaborate with the data, provide feedback, and implement changes swiftly.

- Recommendation Engine: Tamr’s engine identifies the most likely matches in data, utilizing Tamr ID to narrow down results to the recommended match.

Drawbacks:

- Some users may find the process of integrating Tamr with existing data systems complex, which could lead to a steep learning curve.

- The cost of ownership might be a concern for some organizations, especially if they are transitioning from traditional rule-based Master Data Management (MDM) systems.

Next, let’s look into the benefits and use cases of data wrangling.

What are the Benefits of Data Wrangling?

Data professionals can spend up to 80% of their time preparing data, which leaves only about 20% for the analysis itself and raises the question, “Is Data Wrangling Worth the Effort?”

Given the numerous advantages that Data Wrangling brings, it’s absolutely worth the effort. Here are some of the benefits Data Wrangling offers your business:

- Easy Analysis: Once raw data has been wrangled and transformed, Business Analysts and Stakeholders can quickly, easily, and efficiently evaluate even the most complicated data.

- Structured Data: The Data Wrangling process converts raw, unstructured, and jumbled data into useful data in clean rows and columns. In addition, the process enriches the data in order to make it more meaningful and deliver additional intelligence.

- Better Targeting: You may better understand your audience when you mix several sources of data, which leads to better targeting for your Ad Campaigns and Content Strategy. Having the right data to understand your audience is critical to your success, whether you’re trying to hold Webinars to highlight what your firm does for your target clients or using an online course platform to design a training course for your own company.

- Making the Most of Your Time: Thanks to the Data Wrangling process, analysts can spend less time struggling to organize unruly data and more time gaining insights that help them make informed decisions based on data that is easy to read and digest.

- Data Visualization: Once you’ve wrangled the data, you can quickly export it to any Analytics Visual Platform of your choosing to begin summarizing, sorting, and analyzing it.

All of this results in more informed decisions. However, this is far from the only advantage of data wrangling. Here are a few noteworthy advantages:

- By transforming data into a format that is compatible with the end system, Data Wrangling helps to improve Data Usability.

- It facilitates the generation of data flows quickly and easily using an intuitive user interface, and the data flow process may be easily planned and automated.

- Data Wrangling can bring together different types of information from different sources, such as databases, files, and web services.

- Users can use data wrangling to process large amounts of data and readily exchange Data Flow Methodologies.

- Reduces variable costs associated with using Third-party APIs or paying for software platforms that aren’t regarded as mission-critical.

What are the Use Cases of Data Wrangling?

- Financial Insights: Financial companies frequently employ data wrangling to uncover hidden insights and numbers in order to predict trends and forecast markets. It aids in the answering of questions so that informed investment decisions can be made.

- Improved Reporting: Various departments within a company need to generate reports about their activity or obtain specialized data. Unstructured data, on the other hand, makes it harder to construct reports. Data wrangling enhances the quality of data and makes it easier to fit information into reports.

- Unified Format: Different departments of the business utilize different systems to collect data in various formats. Data Wrangling aids in the unification of data and the transformation of data into a single format in order to obtain a holistic view.

- Understanding Customer Base: Each consumer has unique personal and behavioral information. You can use Data Wrangling to find patterns in the data as well as similarities across distinct consumers.

- Data Quality: Data wrangling considerably aids in the improvement of data quality. Every sector requires data in order to gain insights and make better data-driven business decisions.
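The Unified Format use case above can be illustrated with dates, which different systems often record in different layouts. This minimal sketch converts several layouts to ISO 8601; the list of formats and the day-first reading of slash-separated dates are assumptions that would need to be confirmed for real data.

```python
from datetime import datetime

# Assumed formats used by different (hypothetical) departments.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")

def to_iso_date(raw):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # try the next format
    return None

unified = [to_iso_date(d) for d in ["2024-03-05", "05/03/2024", " 05.03.2024 ", "March 5th"]]
```

Values that match no known format come back as `None`, so they can be reviewed by hand rather than guessed at.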

Conclusion

As organizations expand their businesses, managing large volumes of data becomes crucial for achieving the desired efficiency. Data Wrangling Tools empower stakeholders and management to handle their data in the best possible way. If you want to export data from a source of your choice into your desired database or destination, then Hevo Data is the right choice for you!

Simplify your data workflows with top-rated extraction tools designed for diverse use cases. Find out more at Extraction Tools for Data.

Hevo Data with its strong integration with 150+ data sources (including 60+ free sources) allows you to not only export data from your desired data sources & load it to the destination of your choice, but also transform & enrich your data to make it analysis-ready so that you can focus on your key business needs and perform insightful analysis using BI tools.

Try a 14-day free trial and experience the feature-rich Hevo suite firsthand. Also, check out our unbeatable pricing to choose the best plan for your organization.

FAQs on Data Wrangling Tools

1. What are data-wrangling tools?

Data wrangling tools are software applications or platforms designed to facilitate the process of data preparation, transformation, cleaning, and exploration.

2. Is ETL part of data wrangling?

ETL (Extract, Transform, Load) is related to data wrangling, but they are not exactly the same.

3. Is data wrangling a skill?

Yes, data wrangling is considered a valuable skill in the fields of data science, analytics, and business intelligence. Data wrangling involves the process of cleaning, transforming, and preparing raw data into a format suitable for analysis and visualization.

4. Which tool can be used for automating the data wrangling process?

There are several tools and platforms that can be used for automating the data-wrangling process, each offering different features and capabilities.