With the BigQuery MySQL Connector, users can perform data analysis on MySQL data stored in BigQuery without the need for complex data migration processes. With MySQL BigQuery integration, organizations can leverage the scalability and power of BigQuery for handling large datasets stored in MySQL.

Migrating MySQL to BigQuery can be a complex undertaking, necessitating thorough testing and validation to minimize downtime and ensure a smooth transition. This blog will provide 2 easy methods to connect MySQL to BigQuery in real time. The first method uses Hevo’s automated Data Pipeline to set up this connection, while the second method involves writing custom ETL Scripts to perform this data transfer from MySQL to BigQuery. Read along and decide which method suits you the best!

Effortlessly integrate data from MySQL to BigQuery using Hevo Data in just two simple steps. Set up your source and destination once, then enjoy automated, hassle-free data synchronisation with Hevo’s powerful platform.

Check out what makes Hevo amazing:

- It has a highly interactive UI, which is easy to use.

- It streamlines your data integration task and allows you to scale horizontally.

- The Hevo team is available round the clock to extend exceptional support to you.

Hevo has been rated 4.7/5 on Capterra. Know more about our 2000+ customers and give us a try.

Get Started with Hevo for FreeTable of Contents

What are the Methods to Connect MySQL to BigQuery?

Following are the 2 methods using which you can set up your MySQL to BigQuery integration:

Alternatively, you can move your data from MySQL to Redshift for scalable analytics.

Method 1: Using Hevo Data to Connect MySQL to BigQuery

With a ready-to-use Data Integration Platform, Hevo, you can easily move data from MySQL to BigQuery with just 2 simple steps. This does not need you to write any code and will provide you with an error-free, fully managed setup to move data in minutes.

Step 1: Connect and configure your MySQL database.

- Click PIPELINES in the Navigation Bar.

- Click + CREATE in the Pipelines List View.

- In the Select Source Type page, select the MySQL as your source.

- In the Configure your MySQL Source page, specify the connection settings for your MySQL Source.

Step 2: Choose BigQuery as your Destination

- Click DESTINATIONS in the Navigation Bar.

- Click + CREATE in the Destinations List View.

- In Add Destination page select Google BigQuery as the Destination type.

- In the Configure your Google BigQuery Warehouse page, specify the following details:

It is that simple. While you relax, Hevo will fetch the data and send it to your destination Warehouse.

Learn how to integrate Airtable with MySQL using Hevo’s no-code platform.

Instead of building a lot of these custom connections, ourselves, Hevo Data has been really flexible in helping us meet them where they are.

– Josh Kennedy, Head of Data and Business Systems

Method 2: Manual ETL Process to Connect MySQL to BigQuery

The manual method of connecting MySQL to BigQuery involves writing custom ETL scripts to set up this data transfer process. This method can be implemented in 2 different forms. Before connecting to BigQuery, ensure you migrate your MySQL database between servers effectively.

1. Full Dump and Load

This approach is relatively simple, where complete data from the source MySQL table is extracted and migrated to BigQuery. If the target table already exists, drop it and create a new table ( Or delete complete data and insert newly extracted data).

Full Dump and Load is the only option for the first-time load even if the incremental load approach is used for recurring loads. The full load approach can be followed for relatively small tables even for further recurring loads. You can also check out MySQL to Redshift integration.

The high-level steps to be followed to replicate MySQL to BigQuery are:

- Step 1: Extract Data from MySQL

- Step 2: Clean and Transform the Data

- Step 3: Upload to Google Cloud Storage(GCS)

- Step 4: Upload to the BigQuery Table from GCS

Let’s take a detailed look at each step to migrate sqlite to mariadb.

Step 1: Extract Data from MySQL

There are 2 popular ways to extract data from MySQL – using mysqldump and using SQL query.

- Export the data from MySQL

Alternatively, you can also use the OUTFILE command while exporting data from MySQL. I have mentioned the command below for your reference.

SELECT * INTO OUTFILE 'filename.csv' CHARACTER SET 'utf8' FIELDS TERMINATED BY '\t' OPTIONALLY ENCLOSED BY '' FROM table <yourtable>Replace <yourtable> field with the name of your table, which contains your data in MySQL Database.

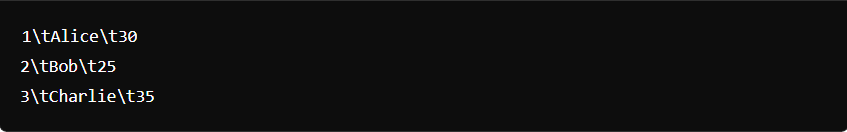

The output will be as shown below:

- Extract data using mysqldump

Mysqldump is a client utility coming with Mysql installation. It is mainly used to create a logical backup of a database or table. Here, is how it can be used to extract one table:

mysqldump -u <db_username> -h <db_host> -p db_name table_name > table_name.sqlHere output file table_name.sql will be in the form of insert statements like

INSERT INTO table_name (column1, column2, column3, ...)

VALUES (value1, value2, value3, ...);This output has to be converted into a CSV file. You have to write a small script to perform this. Here is a well-accepted python library doing the same – mysqldump_to_csv.py

Alternatively, you can create a CSV file using the below command. However, this option works only when mysqldump is run on the same machine as the mysqld server which is not the case normally.

mysqldump -u [username] -p -t -T/path/to/directory [database] --fields-terminated-by=,- Extract Data using SQL query

MySQL client utility can be used to run SQL commands and redirect output to file.

mysql -B -u user database_name -h mysql_host -e "select * from table_name;" >

table_name_data_raw.txtFurther, it can be piped with text editing utilities like sed or awk to clean and format data.

Example:

mysql -B -u user database_name -h mysql_host -e "select * from table_name;" |

sed "s/'/'/;s/t/","/g;s/^/"/;s/$/"/;s/n//g" > table_name_data.csvStep 2: Clean and Transform the Data

Apart from transforming data for business logic, there are some basic things to keep in mind:

- BigQuery expects CSV data to be UTF-8 encoded.

- BigQuery does not enforce Primary Key and unique key constraints. ETL process has to take care of that.

- Column types are slightly different. Most of the types have either equivalent or convertible types. Here is a list of common data types.

| MySQL Data Type | BigQuery Data Type |

| TINYINT | INT64 |

| SMALLINT | INT64 |

| MEDIUMINT | INT64 |

| INT | INT64 |

| BIGINT | INT64 |

| DECIMAL | NUMERIC |

| FLOAT | FLOAT64 |

| DOUBLE | FLOAT64 |

| BIT | BOOL |

| CHAR | STRING |

| VARCHAR | STRING |

| BINARY | BYTES |

| VARBINARY | BYTES |

| TINYTEXT | STRING |

| TEXT | STRING |

| MEDIUMTEXT | STRING |

| LONGTEXT | STRING |

| ENUM | No type for ENUM.Must use any type which can represent values in ENUM |

| SET | No type for SET.Must use any type which can represent values in SET |

| DATE | DATE |

| TIME | TIME |

| DATETIME | DATETIME |

| TIMESTAMP | TIMESTAMP |

Fortunately, the default date format in MySQL is the same, YYYY-MM-DD. Hence, while taking mysqldump there is no need to do any specific changes for this. If you are using a string field to store date and want to convert to date while moving to BigQuery you can use STR_TO_DATE function.

DATE value must be dash(-) separated and in the form YYYY-MM-DD (year-month-day). You can visit their official page to know more about BigQuery data types.

Syntax :

STR_TO_DATE(str,format)

Example :

SELECT STR_TO_DATE('31,12,1999','%d,%m,%Y');

Result :

1999-12-31- The hh:mm: ss (hour-minute-second) portion of the timestamp must use a colon (:) separator.

- Make sure text columns are quoted if it can potentially have delimiter characters.

Step 3: Upload to Google Cloud Storage(GCS)

- Import the data into Google BigQuery

By using the command I have mentioned below, you can import the data that you just exported from MySQL into your BigQuery data warehouse.

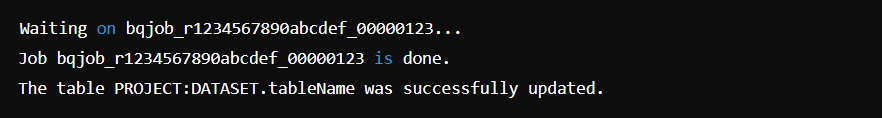

bq load --field_delimiter="\t" --null_marker="\N" --quote="" \ PROJECT:DATASET.tableName gs://bucket/data.csv.gz table_schema.jsThe output of this command will be as shown below:

Gsutil is a command-line tool for manipulating objects in GCS. It can be used to upload files from different locations to your GCS bucket.

To copy a file to GCS:

gsutil cp table_name_data.csv gs://my-bucket/path/to/folder/To copy an entire folder:

gsutil cp -r dir gs://my-bucket/path/to/parent/If the files are present in S3, the same command can be used to transfer to GCS.

gsutil cp -R s3://bucketname/source/path gs://bucketname/destination/pathStorage Transfer Service

Storage Transfer Service from Google cloud is another option to upload files to GCS from S3 or other online data sources like HTTP/HTTPS location. Destination or sink is always a Cloud Storage bucket. It can also be used to transfer data from one GCS bucket to another.

This service is extremely handy when comes to data movement to GCS with support for:

- Schedule one-time or recurring data transfer.

- Delete existing objects in the destination if no corresponding source object is present.

- Deletion of source object after transferring.

- Periodic synchronization between source and sink with advanced filters based on file creation dates, file name, etc.

Upload from Web Console

If you are uploading from your local machine, web console UI can also be used to upload files to GCS. Here are the steps to upload a file to GCS with screenshots.

- Login to your GCP account. In the left bar, click Storage and go to Browser.

2. Select the GCS bucket you want to upload the file. Here the bucket we are using is test-data-hevo. Click on the bucket.

3. On the bucket details page below, click the upload files button and select file from your system.

4. Wait till the upload is completed. Now, the uploaded file will be listed in the bucket:

Step 4: Upload to the BigQuery Table from GCS

You can use the bq command to interact with BigQuery. It is extremely convenient to upload data to the table from GCS.Use the bq load command, and specify CSV as the source_format.

The general syntax of bq load:

bq --location=[LOCATION] load --source_format=[FORMAT] [DATASET].[TABLE] [PATH_TO_SOURCE] [SCHEMA][LOCATION] is your location. This is optional.

[FORMAT] is CSV.

[DATASET] is an existing dataset.

[TABLE] is the name of the table into which you’re loading data.

[PATH_TO_SOURCE] is a fully-qualified Cloud Storage URI.

[SCHEMA] is a valid schema. The schema can be a local JSON file or inline.– autodetect flag also can be used instead of supplying a schema definition.

There are a bunch of options specific to CSV data load :

| CSV option | Description | bq command flag |

| Field delimiter | Field delimiter: Comma, Tab, Pipe, Other | -F or –field_delimiter |

| Header rows | Header rows to skip | –skip_leading_rows |

| Number of bad records allowed | Number of errors allowed | –max_bad_records |

| Newline characters | Allow quoted newlines | –allow_quoted_newlines |

| Custom null values | Specifies a string that represents a null value in a CSV file | –null_marker |

| Trailing optional columns | Allow jagged rows | –allow_jagged_rows |

| Unknown values | Ignore unknown values | –ignore_unknown_values |

| Quote | The value that is used to quote data sections in a CSV file | –quote |

| Encoding | The character encoding of the data | -E or –encoding |

To see the full list options, visit Bigquery documentation on loading data cloud storage CSV.

The following are some example commands to load data:

- Specify schema using a JSON file:

bq --location=US load --source_format=CSV mydataset.mytable gs://mybucket/mydata.csv ./myschema.json- If you want the schema to be auto-detected from the file:

bq --location=US load --autodetect --source_format=CSV mydataset.mytable gs://mybucket/mydata.csvIf you are writing to the existing table, BigQuery provides three options: Write if empty, Append to the table, and Overwrite table. Also, it is possible to add new fields to the table while uploading data. Let us see each with an example.

- To overwrite the existing table:

bq --location=US load --autodetect --replace --source_format=CSV mydataset.mytable

gs://mybucket/mydata.csv- To append to an existing table:

bq --location=US load --autodetect --noreplace --source_format=CSV mydataset.mytable

gs://mybucket/mydata.csv ./myschema.json- To add a new field to the table. Here new schema file with an extra field is given :

bq --location=asia-northeast1 load --noreplace --schema_update_option=ALLOW_FIELD_ADDITION

--source_format=CSV mydataset.mytable gs://mybucket/mydata.csv ./myschema.json2. Incremental Dump and Load

In certain use cases, loading data once from MySQL to BigQuery will not be enough. There might be use cases where, once initial data is extracted from the source, we need to keep the target table in sync with the source. For a small table, doing a full data dump every time might be feasible, but if the volume of data is higher, we should think of a delta approach.

The following steps are used in the Incremental approach to connect MySQL to Bigquery:

Step 1: Extract Data from MySQL

For incremental data extraction from MySQL, use SQL with proper predicates and write output to a file. mysqldump cannot be used here as it always extracts full data.

Eg: Extracting rows based on the updated_timestamp column and converting to CSV.

mysql -B -u user database_name -h mysql_host -e "select * from table_name where

updated_timestamp < now() and updated_timestamp >'#max_updated_ts_in_last_run#'"|

sed "s/'/'/;s/t/","/g;s/^/"/;s/$/"/;s/n//g" > table_name_data.csvNote: In case of any hard delete that happens in the source table, it will not be reflected in the target table.

Step 2: Update Target Table in BigQuery

First, upload the data into a staging table to upsert newly extracted data to the BigQuery table. This will be a full load. Please refer full data load section above. Let’s call it delta_table. Now, there are two approaches to loading data into the final table:

- Update the values of existing records in the final table and insert new rows from the delta table that are not in the final table.

UPDATE data_set.final_table t

SET t.value = s.value

FROM data_set.delta_table s

WHERE t.id = s.id;INSERT data_set.final_table (id, value)

SELECT id, value

FROM data_set.delta_table

WHERE NOT id IN (SELECT id FROM data_set.final_table);2. Delete rows from the final table that are present in the delta table. Then insert all rows from the delta table to the final table.

DELETE data_set.final_table f

WHERE f.id IN (SELECT id from data_set.delta_table);INSERT data_set.final_table (id, value)

SELECT id, value

FROM data_set.delta_table;Disadvantages of Manually Loading Data

Manually loading data from MySQL to BigQuery presents several drawbacks:

- Cumbersome Process: While custom code suits one-time data movements, frequent updates become burdensome manually, leading to inefficiency and bulkiness.

- Data Consistency Issues: BigQuery lacks guaranteed data consistency for external sources, potentially causing unexpected behavior during query execution amidst data changes.

- Location Constraint: The data set’s location must align with the Cloud Storage Bucket’s region or multi-region, restricting flexibility in data storage.

- Limitation with CSV Format: CSV files cannot accommodate nested or repeated data due to format constraints, limiting data representation possibilities.

- File Compression Limitation: Mixing compressed and uncompressed files in the same load job using CSV format is not feasible, adding complexity to data loading tasks.

- File Size Restriction: The maximum size for a gzip file in CSV format is capped at 4 GB, potentially limiting the handling of large datasets efficiently.

What Can Be Migrated From MySQL To BigQuery?

Since the 1980s, MySQL has been the most widely used open-source relational database management system (RDBMS), with businesses of all kinds using it today.

MySQL is fundamentally a relational database. It is renowned for its dependability and speedy performance and is used to arrange and query data in systems of rows and columns.

Both MySQL and BigQuery use tables to store their data. When you migrate a table from MySQL to BigQuery, it is stored as a standard, or managed, table.

Both MySQL and BigQuery employ SQL, but they accept distinct data types, therefore you’ll need to convert MySQL data types to BigQuery equivalents. Depending on the data pipeline you utilize, there are several options for dealing with this.

Once in BigQuery, the table is encrypted and kept in Google’s warehouse. Users may execute complicated queries or accomplish any BigQuery-enabled job.

What are the Advantages of Connecting MySQL To BigQuery?

- BigQuery is intended for efficient and speedy analytics, and it does so without compromising operational workloads, which you will most likely continue to manage in MySQL.

- It improves workflows and establishes a single source of truth. Switching between platforms can be difficult and time-consuming for analysts. Updating BigQuery with MySQL ensures that both data storage systems are aligned around the same source of truth and that other platforms, whether operational or analytical, are constantly bringing in the right data.

- BigQuery increases data security. By replicating data from MySQL to BigQuery, customers avoid the requirement to provide rights to other data engineers on operational systems.

- BigQuery handles Online Analytical Processing (OLAP), whereas MySQL is designed for Online Transaction Processing (OLTP). Because it is a cost-effective, serverless, and multi-cloud data warehouse, BigQuery can deliver deeper data insights and aid in the conversion of large data into useful insights.

Conclusion

The article listed two methods to set up your BigQuery MySQL integration. The first method relies on Hevo’s automated Data Pipeline to transfer data, while the second method requires you to write custom scripts to perform ETL processes from MySQL to BigQuery.

Simplify data migration between BigQuery and MySQL for efficient data processing and reporting. Learn more about BigQuery to MySQL.

Complex analytics on data requires moving data to Data Warehouses like BigQuery. It takes multiple steps to extract data, clean it, and upload it. It requires real effort to ensure there is no data loss at each stage of the process, whether it happens due to data anomalies or type mismatches. Hevo’s data pipeline can elevate your data transfer process and ensure there is no data loss. Sign up for a 14-day free trial and experience the feature-rich Hevo suite firsthand.

FAQ MySQL to BigQuery

1. How to transfer data from MySQL to BigQuery?

Transferring data from MySQL to Google BigQuery involves several steps. Here’s a general outline of the process:

1. Export Data from MySQL

2. Prepare the Data

3. Upload to GCS

4. Load Data into BigQuery

2. How to connect MySQL to Google BigQuery?

To connect MySQL to Google BigQuery, you can use several methods:

1. ETL Tools

2. Google Cloud Dataflow

3. Custom Scripts

3. Is BigQuery Based on MySQL?

No, BigQuery is not based on MySQL. BigQuery is a fully managed, serverless data warehouse developed by Google. It uses a proprietary columnar storage format and a distributed architecture optimized for analytical queries and large-scale data processing.