Unlock the full potential of your Amazon RDS data by integrating it seamlessly with BigQuery. With Hevo’s automated pipeline, get data flowing effortlessly—watch our 1-minute demo below to see it in action!

As data volumes continue exploding, many organizations find their Amazon RDS databases constrained by storage limits, scale challenges, and rising costs. Migrating from Amazon RDS to Google BigQuery offers a tempting path to reap benefits like scalable storage, faster queries, and lower costs. However, moving terabytes of production data from RDS into BigQuery can seem daunting.

This post covers two methods for easy AWS RDS to BigQuery migration: an automated tool like Hevo and a manual method. You can choose the one that suits you best.

Table of Contents

Use Case of Amazon RDS to BigQuery Migration

Migrating from RDS to BigQuery can bring numerous benefits and address various needs. Here are some practical use cases for making the switch:

- Handling Large-Scale Data Analytics

- If your current RDS setup struggles to process large datasets efficiently, BigQuery’s powerful processing capabilities can easily handle petabyte-scale data.

- You’ll experience faster query performance, essential for real-time analytics and quick decision-making.

- Cost Efficiency

- When facing high costs with RDS due to scaling issues or extensive maintenance, moving to BigQuery can reduce your expenses. BigQuery’s pricing model is based on the amount of data processed, which can be more cost-effective.

- You pay for what you use, helping you manage and predict your data costs better.

- Integration with Google Cloud Ecosystem

- If you’re already using other Google Cloud services, BigQuery integrates seamlessly with tools like Google Data Studio, Google Analytics, and TensorFlow, making it easier to build a unified data environment.

- You’ll benefit from streamlined workflows and easier data sharing across different tools and teams.

- Improved Data Accessibility

- With BigQuery’s built-in features, you can make data more accessible to your teams. They can run queries and generate reports without deep technical expertise.

- It democratizes data access, allowing more team members to leverage data for decision-making.

Migrating from RDS to BigQuery can streamline your data operations, reduce costs, and unlock advanced analytics capabilities. If you’re ready to move, let’s dive into the details and plan the migration together!

Method 1: Amazon RDS to BigQuery Migration Using Hevo Data

Effortlessly migrate your Amazon RDS data to BigQuery with Hevo’s no-code platform. Experience automated data transfer, real-time synchronization, and flexible transformations without any coding required.

Method 2: Manual Amazon RDS to BigQuery Migration

You can manually extract data from your Amazon RDS database as CSV files and then load it into Google BigQuery. This method requires manual setup and handling but allows for customized data management.

Method 1: Amazon RDS to BigQuery Migration Using Hevo Data

Hevo’s pre-built integration with databases in Amazon RDS and 150+ Sources (including 60+ free Data Sources) will take full charge of the data transfer process, allowing you to set up Amazon RDS to BigQuery Migration seamlessly and focus solely on key business activities.

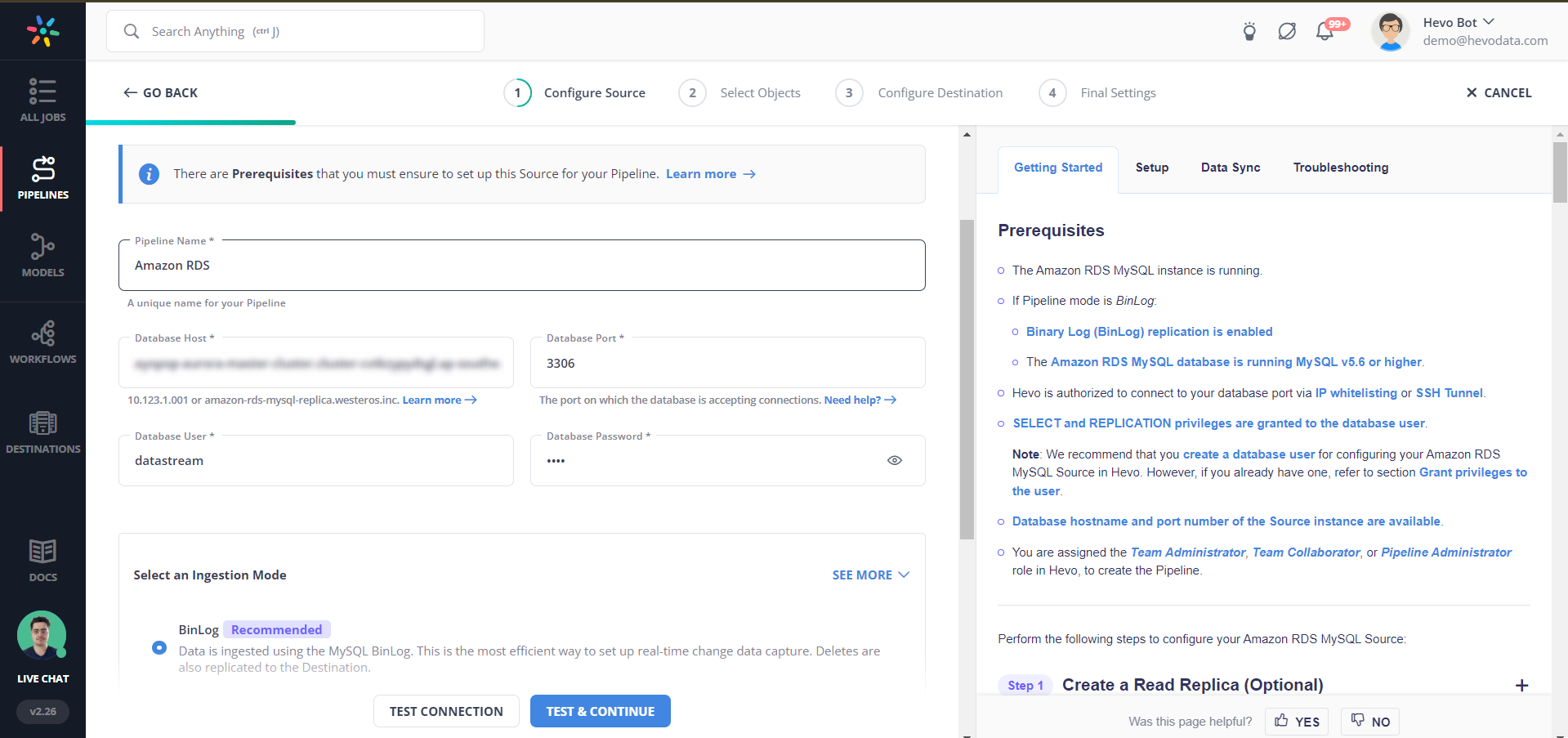

Step 1: Set up Amazon RDS as a Source

There are various connectors through which you can connect Amazon RDS as a Source. In Hevo Data, you can use PostgreSQL to configure Amazon RDS PostgreSQL as a Source, as shown below:

Step 2: Connect Google BigQuery as Destination

You can set up Google BigQuery as Destination as shown below:

To know more about AWS RDS to BigQuery migration, refer to the Hevo Documentation:

Method 2: Manual Amazon RDS to BigQuery Migration

Users can set up manual Amazon RDS to BigQuery Migration by implementing the following steps:

- Step 1: Exporting Data from Amazon RDS

- Step 2: Importing CSV Data into Google BigQuery

- Step 3: Validating the Data Load

Step 1: Exporting Data from Amazon RDS

This blog shows how this step can be implemented for Amazon RDS MySQL. You have to execute corresponding queries for the database in your use case if not using MySQL.

You can export the required data from Amazon RDS MySQL using the mysqldump Unix command. Execute the following query to export the required data:

mysql --password=XXX --user=XXX --host=XXX.amazonaws.com --port=XXX --database=DataBaseName --batch --silent -e "SELECT * FROM TableName" | sed 's/\t/,/g' > /usr/home/TableName.csvThe required data will now be downloaded as a CSV.

Step 2: Importing CSV Data into Google BigQuery

Once the data has been exported from Amazon RDS as CSV, it has to be imported into Google BigQuery. Users can easily perform a batch-load job in Python by running the following code:

from google.cloud import bigquery

# Construct a BigQuery client object.

client = bigquery.Client()

# TODO(developer): Set table_id to the ID of the table to create.

table_id = "your-project.your_dataset.your_table_name"

job_config = bigquery.LoadJobConfig( source_format=bigquery.SourceFormat.CSV,

skip_leading_rows=1,

autodetect=True

)

with open(file_path, "rb") as source_file:

job = client.load_table_from_file(source_file, table_id, job_config=job_config)

job.result() # Waits for the job to complete.

table = client.get_table(table_id) # Make an API request.

print("Loaded {} rows and {} columns to {}".format(table.num_rows, len(table.schema), table_id))

Step 3: Validating the Data Load

You can now use the Query Editor to query & fetch the test data after the manual AWS RDS to BigQuery migration so that you can validate the process.

Additionally, you can validate the count of records in the Amazon RDS tables and import Google BigQuery tables to cross-check whether you have imported all the required records.

Best Practices for Manual Amazon RDS to BigQuery Migration

The best practices that all users should follow while implementing manual Amazon RDS to BigQuery Migration are as follows:

- While using the mysqldump, you can use command filter parameters such as –compress, –compact. COMPACT is a format supported by Antilope. It stores the first 768 bytes of BLOB in case its value doesn’t fit on the page. COMPRESSED is used for compressed tables.

- If you select Auto-Detect schema while creating a table on BigQuery UI, you need to validate it to be on the safe side. If you need to modify or add or remove columns from the table, we can also do that with the EDIT schema option available in Google BigQuery.

- If you don’t choose Auto-Detect schema, you will need to create it manually one by one with the help of the Add Field option.

Limitations of Manual Amazon RDS to BigQuery Migration

- Brittle Processes: The entire mechanism to move data from Amazon RDS to BigQuery is set up manually. This setup is brittle and prone to error as it requires multiple coherent steps to be executed one after the other. Failure of any single process part can cause the entire data pipeline to fail. This can eventually result in data loss.

- Inability to Transform Data: If your use case demands you to clean, transform and enrich data when moving it from Amazon RDS to BigQuery, then you will need to write additional code to accomplish that. This makes the overall process very cumbersome.

- Constant Monitoring and Maintenance: More often than not, schema changes, and scripts break and ultimately result in data inconsistencies. Since you are moving critical transactional data from Amazon RDS to BigQuery, you will need to station dedicated engineering resources to monitor both the infrastructure and flow of data constantly. This adds to the maintenance overhead.

Conclusion

This article provided you with a step-by-step guide on how you can set up Amazon RDS to BigQuery Migration manually or using Hevo. However, there are certain limitations associated with the manual method. If those limitations are not a concern to your operations, then using it is the best option but if it is, then you should consider using automated Data Integration platforms like Hevo. This article also takes you through use cases when you do BigQuery data transformation for AWS data.

Hevo helps you directly transfer data from a source of your choice to a Data Warehouse, Business Intelligence, or desired destination in a fully automated and secure manner without having to write the code. It will make your life easier and make data migration hassle-free. It is User-Friendly, Reliable, and Secure.

Want to try Hevo? Sign up for a 14-day free trial and experience the feature-rich Hevo suite firsthand. Have a look at our unbeatable pricing, which will help you choose the right plan for you.

What are your thoughts on moving data from Amazon RDS to Google BigQuery? Let us know in the comments.

What is the difference between RDS and BigQuery?

1. RDS is a managed relational database service that supports MySQL, PostgreSQL, Oracle, and SQL Server. It is designed for transactional applications with ACID compliance and is ideal for OLTP workloads.

2. BigQuery is a fully managed, serverless data warehouse for large-scale analytics. It supports SQL-like queries on massive datasets and is optimized for fast and scalable OLAP workloads.

Is BigQuery a relational DB?

BigQuery is not a traditional relational database, but it does use SQL-like queries and supports relational data concepts such as tables, rows, and columns. However, it’s optimized for analytical queries rather than transactional processing.

Does BigQuery use Cloud Storage?

BigQuery integrates with Google Cloud Storage (GCS) for data loading and exporting. You can load data into BigQuery from GCS, and export query results from BigQuery to GCS. Additionally, BigQuery can query data directly stored in GCS without loading it into BigQuery first.