Easily move your data from Webhooks to Redshift to enhance your analytics capabilities. With Hevo’s intuitive pipeline setup, data flows in real time.

Streaming data is an essential source for organizations that perform real-time analytics. The first step is loading that streaming data into the warehouse in real time, which is frequently done via Webhooks. In this article, you’ll learn how to load real-time streaming data from Webhooks to Redshift. Read along to learn more!


What are Webhooks?

Webhooks are a means for applications to communicate with one another automatically. They are a cost-effective and resource-efficient technique for reacting to and recording events: whenever a new server-side event occurs, the server notifies the client application by sending an HTTP request to a URL the client has registered.

Reverse API is another name for webhooks. In most APIs, the client-side application calls the server-side application. With a webhook, the direction is reversed: the server triggers the webhook, i.e., the server side calls the client side.

Because the server calls the client application, a webhook removes the need for the client to poll the server continually for new updates.

Key Features of Webhooks

- Efficient: Webhooks are a quick and easy way to transfer data to other applications without setting up elaborate polling processes, and because each event is pushed as it happens, changes are not missed between polling intervals.

- Automation: Webhooks let you automate data transmission and define specific actions to run when a triggering event occurs in a software program or application. Because a webhook fires every time its triggering event occurs and delivers the data immediately, it is excellent for actions you want to repeat each time that event happens.

- Specificity: Webhooks let you make direct connections between specific parts of applications rather than connecting several pieces of code to build a working data transfer system. This can make creating a webhook a lot faster and easier than using other APIs or callbacks, and it keeps your code clean and understandable by reducing the amount of complex code in your software.

- Integration: Many applications support webhook integration, so if you’re building a new app and want to use webhooks to connect with existing apps, it’s usually a simple procedure. Webhooks are a good option if you want to design an app that sends notifications, messages, or events based on the behavior of other apps.

- Low Setup Time: Linking applications with webhooks instead of other methods, such as a polling integration, saves time and effort.


What is Amazon Redshift?

Amazon Redshift is Amazon Web Services’ cloud-based Data Warehousing and Analytics solution. It allows users to load and manage large amounts of data.

By providing a nearly limitless data storage option, Amazon Redshift gives consumers and organizations a platform for analyzing data and gaining fresh insights into their operations. The price of Amazon Redshift scales with the capacity you provision. Because Redshift is cloud-based and can be scaled quickly, consumers who need more capacity as their businesses grow can add it when they need it.

Redshift’s architecture is built to analyze your data swiftly, using Massively Parallel Processing (MPP). It also uses Machine Learning and result caching; cached results let repeated queries return in under a second.

Redshift’s ease of use is its most appealing feature. A cluster can be created using either the AWS Management Console or the API. Users can use virtual private networks (Amazon VPC) to isolate their clusters. Backups and replication are automated. Redshift also employs end-to-end encryption to protect user data.

To learn more about Amazon Redshift, you can visit their official documentation.

Key Features of Amazon Redshift

- Faster Query Speed: Amazon Redshift can easily handle large datasets ranging from gigabytes to exabytes. Redshift uses columnar storage, data compression, and zone maps to reduce the amount of I/O necessary to run queries. It employs the MPP Data Warehouse architecture to parallelize and distribute SQL operations such that all available resources are used. The underlying technology is geared for high-performance data processing with locally connected storage maximizing throughput between CPUs and drives and a high-bandwidth mesh network maximizing throughput between nodes.

- Fault-Tolerant: Amazon Redshift includes several features that make your Data Warehouse cluster more reliable. Redshift continuously monitors the cluster’s health, automatically re-replicates data from failed drives, and replaces nodes as necessary.

- Scalability: Amazon Redshift scales quickly to meet your changing demands. You can adjust the number or type of nodes in your Data Warehouse with a few clicks in the console or a single API call, scaling up or down as your needs change.

- Simple to Set Up: Amazon Redshift is simple to set up and use. You can create a new Data Warehouse with a few clicks in the AWS Management Console, and Redshift takes care of the infrastructure. Most administrative duties, such as backups and replication, are automated, allowing you to focus on your data rather than on management.

- Simple to Use: Redshift provides options for tuning the warehouse to your workloads. New capabilities are rolled out transparently, minimizing the need to plan and execute upgrades and patches.

- Flexible Querying: Users can perform queries directly from the interface or link their preferred SQL client tools, libraries, or business intelligence tools to Amazon Redshift. The Query Editor in the Amazon Web Services UI is a powerful tool for executing SQL queries on Redshift clusters and viewing the query results and execution plan alongside your queries.
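The Faster Query Speed item above mentions zone maps. The idea is easiest to see in a toy model: Redshift keeps the minimum and maximum value of each 1 MB block of a column, so a range filter can skip blocks whose range cannot match. The Python sketch below is only a conceptual illustration of that pruning technique, not Redshift's implementation; the tiny block size and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

BLOCK_SIZE = 4  # rows per block; deliberately tiny for illustration


@dataclass
class ZoneMap:
    min_value: int
    max_value: int


Block = Tuple[ZoneMap, List[int]]


def build_zone_maps(column: List[int], block_size: int = BLOCK_SIZE) -> List[Block]:
    """Split a column into fixed-size blocks and record each block's min/max."""
    blocks: List[Block] = []
    for start in range(0, len(column), block_size):
        block = column[start:start + block_size]
        blocks.append((ZoneMap(min(block), max(block)), block))
    return blocks


def scan_range(blocks: List[Block], low: int, high: int) -> Tuple[List[int], int]:
    """Return values in [low, high] and the number of blocks actually read."""
    matches: List[int] = []
    blocks_read = 0
    for zone, block in blocks:
        # Skip the block when its [min, max] interval cannot overlap [low, high].
        if zone.max_value < low or zone.min_value > high:
            continue
        blocks_read += 1
        matches.extend(v for v in block if low <= v <= high)
    return matches, blocks_read
```

For a sorted column holding 1 to 16, `scan_range(build_zone_maps(list(range(1, 17))), 6, 9)` reads only 2 of the 4 blocks. On unsorted data each block's min/max range tends to be wide, so fewer blocks can be skipped, which is why zone maps pair well with sort keys.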

Hevo Data, a Fully-managed Data Pipeline platform, can help you automate, simplify & enrich your data replication process in a few clicks. With Hevo’s wide variety of connectors and blazing-fast Data Pipelines, you can extract & load data from 100+ Data Sources straight into your Data Warehouse or any database. To further streamline and prepare your data for analysis, you can process and enrich raw granular data using Hevo’s robust & built-in Transformation Layer without writing a single line of code!

Hevo is the fastest, easiest, and most reliable data replication platform that will save your engineering bandwidth and time multifold.

Try our 14-day full access free trial today to experience an entirely automated hassle-free Data Replication!

Why is there a need to integrate Webhooks to Redshift?

Webhooks underpin many modern web applications and have grown increasingly popular for event notifications. Webhooks allow APIs to send streams of events to a specified callback URL via HTTP POST requests (the webhook), so there’s no need to poll for changes all the time. Because this reverses the usual direction of API calls, webhooks are also known as “reverse APIs.”

Integrating Webhooks with Redshift lets you collect and store data centrally. This is an effective technique for combining data from various sources with the rest of your relevant data, for instance, customer data such as internal application usage and payment information. After that, you can use a BI tool to query your customer data and create reports to gain business insights.

Most modern Webhooks simply listen for data changes and then deliver them to another HTTP endpoint. Instead of repeatedly requesting data, the receiving application can wait for what it requires without sending repeated requests to another system.

Webhooks have the advantage of being less resource-intensive and easier to set up than APIs. Creating an API is a complex process that may be as difficult as designing and creating an application, but setting up a webhook is as simple as sending a single POST request, defining a URL to accept the data on the receiving end, and then executing some action on the information once it is received.

How to integrate Webhooks to Redshift using Hevo?

Hevo Data, a No-code Data Pipeline, helps load data from any data source, such as Databases, SaaS applications, Cloud Storage, SDKs, and Streaming Services, and simplifies the ETL process. It supports 100+ data sources (including 40+ free sources). Setup is a 3-step process: selecting the data source, providing valid credentials, and choosing the Destination. Hevo loads the data onto the desired Data Warehouse, enriches it, and transforms it into an analysis-ready form without writing a single line of code.

Its fully automated pipeline delivers data in real time from Source to Destination. Its fault-tolerant and scalable architecture ensures that the data is handled securely and consistently with zero data loss, and it supports different forms of data.

Prerequisites

- Access to the Source and the Destination systems.

Steps for integrating Webhooks to Redshift

The following are the steps to integrate Webhooks to Redshift using Hevo Data:

Step 1: Configure Webhooks as a Source

- In the Asset Palette, click PIPELINES.

- In the Pipelines List View, click + CREATE.

- Select Webhook from the list of Sources. The list is displayed based on the Sources you select while setting up your account with Hevo.

Note: If Webhook is unavailable in the displayed list, click View All, search for Webhook, and then select it on the Select Source Type page.

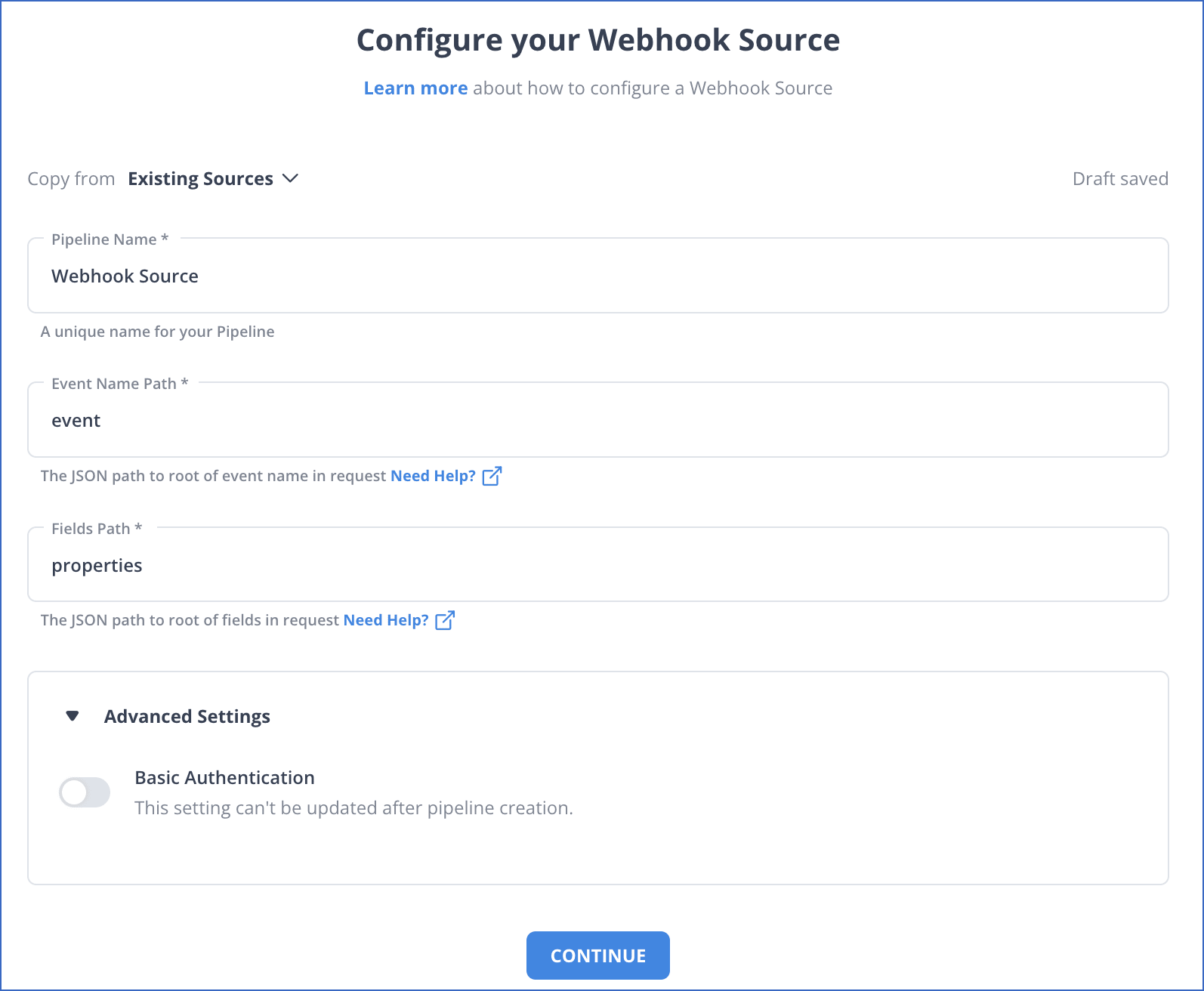

- In the Configure your Webhook Source page, specify the JSON path to the root of the Event name and the root of the fields present in your payload.

- Click CONTINUE.
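To make the two JSON paths on the Configure your Webhook Source page concrete, here is a hypothetical payload and a small Python sketch that resolves dot-separated paths against it. The payload shape, the default paths `$.event` and `$.properties`, and the helper names are assumptions for illustration; this is not Hevo's code, and it handles only a simple subset of JSONPath (no array indexes, wildcards, or filters).

```python
import json
from typing import Any, Dict, Tuple


def resolve_path(document: Any, path: str) -> Any:
    """Resolve a simple dot-separated path such as '$.data.event'."""
    if path == "$":
        return document
    if not path.startswith("$."):
        raise ValueError(f"unsupported path: {path!r}")
    current = document
    for key in path[2:].split("."):
        if not isinstance(current, dict) or key not in current:
            raise KeyError(f"{key!r} not found while resolving {path!r}")
        current = current[key]
    return current


def split_event(raw_body: str,
                event_path: str = "$.event",
                fields_path: str = "$.properties") -> Tuple[Any, Dict[str, Any]]:
    """Return (event_name, fields) from a raw webhook body."""
    payload = json.loads(raw_body)
    event_name = resolve_path(payload, event_path)
    fields = resolve_path(payload, fields_path)
    if not isinstance(fields, dict):
        raise ValueError("the fields root must be a JSON object")
    return event_name, fields
```

For a body like `{"event": "order_created", "properties": {"order_id": 42}}`, the default paths yield the event name `order_created` and the fields `{"order_id": 42}`. If your application nests the data differently, for example under `data`, the paths change to something like `$.data.type` and `$.data.attrs`.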

Step 2: Configure Amazon Redshift as a Destination Type

- On the Select Destination Type page, select Amazon Redshift as the Destination.

- In the Configure your Amazon Redshift Destination page, specify the Amazon Redshift settings to configure your Destination.

- Click SAVE & CONTINUE.

- In the Destination Table Prefix field, provide a prefix if you want to modify the Destination table or partition name. Otherwise, leave the field blank.

- Click CONTINUE.

- A Webhook URL is now generated, along with a sample payload.

Note: To check the connectivity of the Webhook URL to Hevo, copy the Webhook URL generated in Step 2 above and paste it into the application from which you want to push events to Hevo.
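If the application that pushes events is your own code, pushing an event amounts to an HTTP POST with a JSON body to the generated URL. The minimal Python sketch below uses only the standard library and assumes a plain JSON POST is sufficient; the placeholder URL, the payload shape, and the function names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict

# Placeholder: replace with the Webhook URL generated for your Pipeline.
WEBHOOK_URL = "https://example.com/replace-with-your-webhook-url"


def build_event_request(url: str, event: Dict[str, Any]) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request carrying one event."""
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def push_event(url: str, event: Dict[str, Any], timeout: float = 10.0) -> int:
    """Send the event and return the HTTP status code.

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses, so a
    returned value means the request was accepted at the HTTP level.
    """
    request = build_event_request(url, event)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

A connectivity check could then be `push_event(WEBHOOK_URL, {"event": "order_created", "properties": {"order_id": 42}})`, after which the event should appear in the Pipeline.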

Benefits of using Hevo to integrate Webhooks to Redshift

Listed below are the advantages of using Hevo Data over any other Data Pipeline platform:

- Secure: Hevo has a fault-tolerant architecture that ensures that the data is handled securely and consistently with zero data loss.

- Schema Management: Hevo takes away the tedious task of schema management & automatically detects the schema of incoming data and maps it to the destination schema.

- Minimal Learning: Hevo, with its simple and interactive UI, is effortless for new customers to work on and perform operations.

- Hevo Is Built To Scale: As the number of sources and your data volume grows, Hevo scales horizontally, handling millions of records per minute with very little latency.

- Incremental Data Load: Hevo transfers only the data that has been modified, in real time. This ensures efficient utilization of bandwidth on both ends.

- Live Support: The Hevo team is available round the clock to extend exceptional support to its customers through chat, email, and support calls.

- Live Monitoring: Hevo allows you to monitor the data flow and check where your data is at a particular time.


Conclusion

Webhooks are an advanced means of integrating applications that exist independently. The main alternative to using webhooks is for the application that requires the data to poll an API hosted by the source application at regular intervals. This is more complex and more prone to failure. As a result, Webhooks are the preferred method of transferring real-time data between two independently hosted applications.


In this article, you learned how to integrate Webhooks to Redshift. It also provided an overview of Webhooks and Redshift’s key features. You also learned about the key advantages of integrating Webhooks to Redshift.

FAQ on Webhooks to Redshift

How to connect a webhook to a database?

To connect a webhook to a database:

1. Set up an API endpoint to receive the webhook request.

2. Parse the incoming data from the webhook.

3. Insert the parsed data into your database (e.g., MySQL, MongoDB) using the appropriate database query.
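Steps 2 and 3 can be sketched in Python with the standard library's `sqlite3` module standing in for your database. The table name, the columns, and the assumption that each payload carries an `event` key are hypothetical. For Redshift, a DB-API driver such as `redshift_connector` supports the same parameterized-insert pattern (placeholder syntax varies by driver), although AWS recommends the COPY command over row-by-row INSERT statements for loading large volumes.

```python
import json
import sqlite3
from typing import Any, Dict


def init_db(conn: sqlite3.Connection) -> None:
    """Create a table that keeps each event's name and raw JSON payload."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS webhook_events (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               event_name TEXT NOT NULL,
               payload TEXT NOT NULL
           )"""
    )


def store_webhook(conn: sqlite3.Connection, raw_body: bytes) -> int:
    """Parse a webhook body (step 2), insert it (step 3), return the row id."""
    payload: Dict[str, Any] = json.loads(raw_body)
    event_name = payload.get("event", "unknown")
    with conn:  # commits on success, rolls back if the insert fails
        cursor = conn.execute(
            # Parameterized query: never format webhook data into SQL strings.
            "INSERT INTO webhook_events (event_name, payload) VALUES (?, ?)",
            (event_name, json.dumps(payload)),
        )
    return cursor.lastrowid
```

Storing the raw payload alongside a few extracted columns keeps the original event available if you later decide to extract more fields.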

How do I add a webhook to AWS?

To add a webhook to AWS:

1. Create an API Gateway endpoint to receive the webhook.

2. Set up a Lambda function as the backend for the API Gateway to process the incoming webhook data.

3. The Lambda function can then store the data in a database like DynamoDB or trigger other AWS services. Example flow: Webhook → API Gateway → Lambda → DynamoDB.
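The Lambda step in the flow above can be sketched in Python as follows. It assumes API Gateway's Lambda proxy integration, where the request body arrives as a string in `event["body"]` (possibly base64-encoded, flagged by `isBase64Encoded`). The `put_item` callback is injected rather than hard-coded so the parsing logic can run without AWS; `make_handler` and `parse_body` are hypothetical names.

```python
import base64
import json
from decimal import Decimal
from typing import Any, Callable, Dict


def parse_body(event: Dict[str, Any]) -> Dict[str, Any]:
    """Extract the JSON webhook payload from an API Gateway proxy event."""
    body = event.get("body") or ""
    if event.get("isBase64Encoded"):
        # API Gateway may base64-encode the body, e.g. for binary media types.
        body = base64.b64decode(body).decode("utf-8")
    if not body:
        raise ValueError("empty request body")
    # boto3 rejects Python floats for DynamoDB, so parse decimals as Decimal.
    item = json.loads(body, parse_float=Decimal)
    if not isinstance(item, dict):
        raise ValueError("payload must be a JSON object")
    return item


def make_handler(
    put_item: Callable[[Dict[str, Any]], Any],
) -> Callable[[Dict[str, Any], Any], Dict[str, Any]]:
    """Build a Lambda handler around put_item, which persists one item."""

    def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
        try:
            item = parse_body(event)
        except ValueError:  # also covers invalid JSON, base64 and UTF-8
            return {"statusCode": 400, "body": json.dumps({"error": "invalid payload"})}
        put_item(item)
        return {"statusCode": 200, "body": json.dumps({"received": True})}

    return lambda_handler
```

In a deployed function you might wire it up at module level as `lambda_handler = make_handler(lambda item: table.put_item(Item=item))`, with `table = boto3.resource("dynamodb").Table("webhook_events")`. The table name is hypothetical, and every item must contain the table's key attributes.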

How do I receive data from a webhook?

To receive data from a webhook:

1. Set up an HTTP server with an endpoint that can accept POST requests.

2. Configure the webhook source to send data to this endpoint.

3. The server will parse the request body (usually JSON or form data) to access the data.
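The three steps above can be sketched with Python's built-in `http.server` module: an endpoint that accepts POST requests and parses either a JSON or a form-encoded body. The in-memory `RECEIVED` list, the port, and the function names are assumptions for illustration. The Python documentation states that `http.server` is not recommended for production, so treat this as a local test receiver.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, Dict, List
from urllib.parse import parse_qs

RECEIVED: List[Dict[str, Any]] = []  # in-memory store, for illustration only


def parse_webhook_body(content_type: str, raw: bytes) -> Dict[str, Any]:
    """Decode a JSON or form-encoded webhook body into a dict."""
    media_type = content_type.split(";")[0].strip().lower()
    if media_type == "application/json":
        return json.loads(raw)
    if media_type == "application/x-www-form-urlencoded":
        # parse_qs returns a list per field; keep the first value of each.
        return {k: v[0] for k, v in parse_qs(raw.decode("utf-8")).items()}
    raise ValueError(f"unsupported content type: {media_type!r}")


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        try:
            length = int(self.headers.get("Content-Length", 0))
            data = parse_webhook_body(
                self.headers.get("Content-Type", ""), self.rfile.read(length)
            )
        except ValueError:  # bad length, bad JSON, or unsupported type
            self.send_response(400)
            self.end_headers()
            return
        RECEIVED.append(data)
        # Reply with a 2xx quickly; many senders retry when they do not get one.
        self.send_response(204)
        self.end_headers()


def serve(host: str = "127.0.0.1", port: int = 8000) -> None:
    """Run the receiver until interrupted (blocks the calling thread)."""
    HTTPServer((host, port), WebhookHandler).serve_forever()
```

After calling `serve()`, you would configure the webhook source (step 2) to send to `http://<your-host>:8000/`; each accepted payload is appended to `RECEIVED`, where a real receiver would hand it to a database or queue instead.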

Pratibha is a seasoned Marketing Analyst with a strong background in marketing research and a passion for data science. She excels in crafting in-depth articles within the data industry, leveraging her expertise to produce insightful and valuable content. Pratibha has curated technical content on various topics, including data integration and infrastructure, showcasing her ability to distill complex concepts into accessible, engaging narratives.
