Data Challenges

At slice, all mobile application data is stored in MongoDB, and Amazon Redshift serves as the single source of truth. A year ago, slice had no data engineering team: the technology team manually wrote custom Python scripts to migrate MongoDB data to Redshift, and building a single data pipeline took almost 3 days.

As the data grew, they realized they needed an automated data integration solution, primarily for these 3 reasons:

- Manually writing Python scripts was time-consuming

- Reading the changelog stream and maintaining the custom code were challenging

- Building the in-house data infrastructure was a complex and costly affair
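To illustrate the second point, here is a minimal sketch of what hand-written changelog handling can look like. It is not slice's actual script. It assumes the changelog is read as MongoDB change stream events (with the `operationType`, `documentKey`, `fullDocument`, and `updateDescription` fields), applies them to an in-memory dictionary instead of Redshift, and handles only top-level fields. The function name and the sample field names are hypothetical.

```python
from typing import Any, Dict

Row = Dict[str, Any]


def apply_change_event(table: Dict[Any, Row], event: Dict[str, Any]) -> None:
    """Apply one change event to an in-memory copy of a collection keyed by _id."""
    op = event["operationType"]
    key = event["documentKey"]["_id"]

    if op in ("insert", "replace"):
        # The full document is supplied, so overwrite whatever is stored.
        table[key] = dict(event["fullDocument"])
    elif op == "update":
        # Updates only carry the delta: changed fields and removed fields.
        row = table.setdefault(key, {"_id": key})
        description = event["updateDescription"]
        row.update(description.get("updatedFields", {}))
        for field in description.get("removedFields", []):
            row.pop(field, None)
    elif op == "delete":
        table.pop(key, None)
    # Other event types (drop, rename, invalidate, ...) are ignored in this sketch.


# Hypothetical sample events; the field names are illustrative only.
events = [
    {"operationType": "insert", "documentKey": {"_id": 1},
     "fullDocument": {"_id": 1, "name": "Asha", "limit": 1000}},
    {"operationType": "update", "documentKey": {"_id": 1},
     "updateDescription": {"updatedFields": {"limit": 2000},
                           "removedFields": ["name"]}},
    {"operationType": "insert", "documentKey": {"_id": 2},
     "fullDocument": {"_id": 2, "name": "Ravi"}},
    {"operationType": "delete", "documentKey": {"_id": 2}},
]

table: Dict[Any, Row] = {}
for change in events:
    apply_change_event(table, change)
```

Even this simplified version leaves out nested field paths, schema changes, retries, and resuming after a failure, all of which custom code would also have to maintain.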

Running analysis directly on the MongoDB data was too heavy, and we didn’t have a big data engineering team that could take care of the entire infrastructure and pipelines. It was a classic case of build vs. buy. After thorough research, we decided to go with a specialized data integration tool.

The Solution

Based on our requirements and the size of our team, we chose Hevo as our data pipeline platform. It’s a fairly simple product that doesn’t require any training, and anyone can easily create data pipelines without writing any code.

Initially, the credit risk team at slice used Hevo to move its MongoDB data to Redshift. Later, once slice had built a data engineering team, Vivek and his team took over the entire data pipeline setup and maintenance.

Hevo has simplified a lot of our tasks. We have scrapped our entire manual data integration process and switched to automation. We use Hevo’s data pipeline scheduling, Models, and auto-mapping features to seamlessly move our data to the destination warehouse. We flatten certain columns from our incoming data using the Python interface in Hevo, and our risk team uses Models to write SQL queries that pull the data required for reporting.
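As a rough illustration of the flattening step, the sketch below turns a nested MongoDB-style document into a single-level row whose keys can map to warehouse columns. It is plain Python rather than Hevo's transformation API. The `flatten` function, the underscore separator, and the sample field names are assumptions made for the example.

```python
from typing import Any, Dict


def flatten(doc: Dict[str, Any], parent_key: str = "", sep: str = "_") -> Dict[str, Any]:
    """Flatten nested dictionaries into one level, joining keys with `sep`."""
    flat: Dict[str, Any] = {}
    for key, value in doc.items():
        column = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            # Recurse into sub-documents and carry the prefix along.
            flat.update(flatten(value, column, sep))
        else:
            # Scalars (and lists) are kept as-is under the combined name.
            flat[column] = value
    return flat


# Hypothetical incoming document; the field names are illustrative only.
event = {
    "_id": "u1",
    "profile": {"city": "Bengaluru", "kyc": {"status": "verified"}},
    "limit": 5000,
}

row = flatten(event)
```

Here `row` contains the keys `_id`, `profile_city`, `profile_kyc_status`, and `limit`. Arrays are left untouched in this sketch; a real pipeline would need to decide whether to serialize them or split them into a child table.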

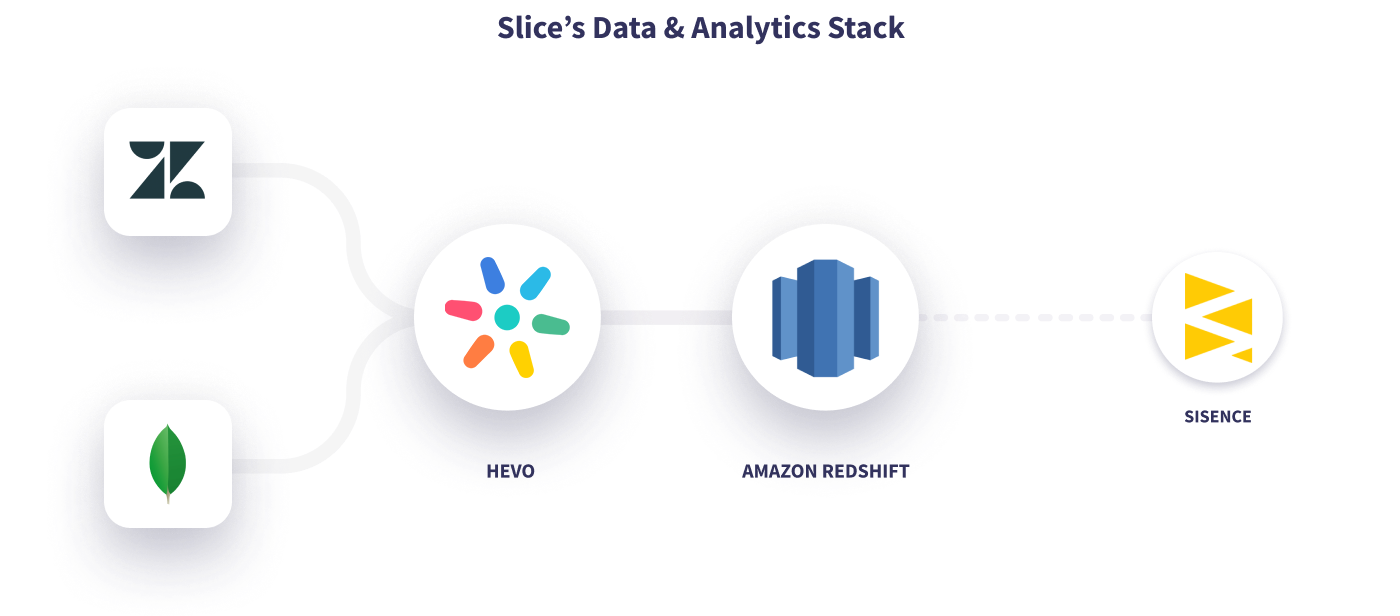

Hevo serves as the backbone of slice’s data and analytics stack, connecting its primary data source, MongoDB, along with Zendesk, to Amazon Redshift. The business teams use Sisense (formerly Periscope) to create their reports.

Key Results

With the earlier manual process, analysts at slice spent almost 3 days creating a single data pipeline. After switching to a modern data stack with Hevo, they have seen a drastic improvement in turnaround time for both pipeline creation and business reporting.

With Hevo’s automated data pipelines, we’ve not just saved a lot of time but also gained unified, accurate, real-time data with zero data loss. We also no longer have to worry about scaling our infrastructure up or down, as Hevo does all the heavy lifting. I would recommend Hevo for 3 main reasons: it is easy to use, reliable, and a time-saver.

Slice aims to expand its offerings to over 50 cities in India and reach the 1 million customer milestone in 2021. Hevo is happy to be a part of slice’s growth journey and looks forward to strengthening this partnership in the years to come.

Excited to see Hevo in action and understand how a modern data stack can help your business grow? Sign up for our 14-day free trial or register for a personalized demo with our product expert.