In today’s fast-paced data landscape, real-time data replication and synchronization are critical for maintaining operational efficiency and making timely decisions. AWS Database Migration Service (DMS) offers a comprehensive database migration and replication solution, including support for Change Data Capture (CDC). This blog will delve into configuring AWS DMS CDC Oracle, providing a step-by-step guide and addressing key considerations to ensure a successful setup.

Table of Contents

What is AWS DMS?

AWS Database Migration Service (AWS DMS) is a tool for moving your data from a source to your target destination. Whether you’re dealing with relational databases, data warehouses, NoSQL databases, or other data sources, AWS DMS can securely migrate your data to AWS Cloud destinations.

We can use AWS DMS for both homogenous migrations (like moving from one Oracle database to another) and heterogeneous migrations (like moving from Oracle to MySQL). The best part? It doesn’t mess with your source database much during the migration, so your applications have minimal downtime.

Key Features of AWS DMS

- Continuous Data Replication: AWS DMS can perform ongoing data replication from the source to the target database, ensuring that the target database is always in sync with your source.

- Minimal Downtime: AWS DMS minimizes the impact on the source database during migration by using replication and change data capture techniques.

- Flexibility: AWS DMS supports both full-load and incremental data migration, allowing for tailored data transfer based on specific needs.

Want to learn more? Take a look at our guide on the CDC with AWS DMS.

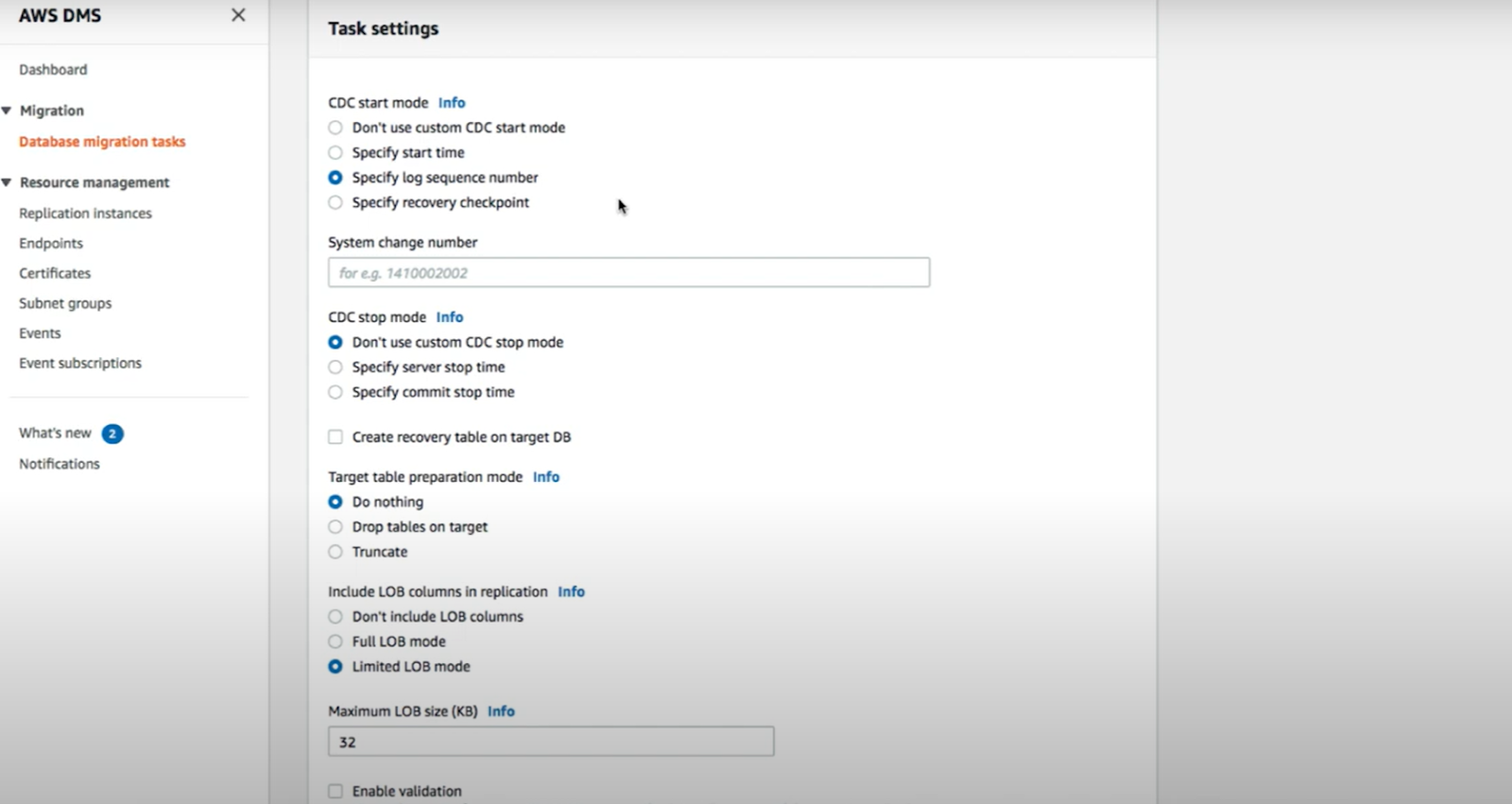

Note: You can use DMS for a one-time migration or set it up to keep your source and target databases in sync by continuously capturing and tracking changes. If you’re only using AWS DMS to handle changes, you’ll need to provide a time or system change number (SCN) so it knows where to look in the database logs. Also, ensure those logs are accessible for a while so AWS DMS can keep track of everything.

Efficiently migrate your Oracle data with Change Data Capture (CDC) using Hevo’s powerful platform. Ensure real-time data synchronization and minimal manual effort.

Effortless Migration: Seamlessly migrate Oracle data with CDC capabilities without coding.

Real-Time Data Sync: Keep your data current with continuous real-time updates.

Flexible Transformations: Utilize built-in transformations or custom Python scripts to prepare your data.

Auto-Schema Mapping: Automatically map schemas to ensure smooth data transfer.

Join over 2000 satisfied customers who trust Hevo and experience a smooth data migration process with us.

Start your Oracle CDC for FreeHow to Migrate From your Database with AWS DMS

The basic process for migrating your database engine that DMS is as follows:

- Convert Your Schema: First, use the AWS Schema Conversion Tool (SCT) to convert your schema syntax from your current database engine to the new one. Load this converted schema into your destination database. At this stage, we’ll focus on loading just the tables.

- Bulk Copy the Data: Next, let AWS DMS do a bulk copy of all your data into the new database. This step is like moving all your furniture into your new house.

- Copy Other Database Objects: After the bulk copy, we’ll need to import other important database objects, such as foreign keys and indexes. Think of these as the finishing touches to ensure smooth operation.

- Use Change Data Capture (CDC): To keep your data in sync, we’ll use Change Data Capture (CDC). This ensures that any new changes in your source database are reflected in the destination until you’re ready to switch over.

- Cut Over to the New Database: When everything is set, and you’re ready to switch, copy over the last few objects, such as triggers. Then, stop the CDC, cut over any applications, and you’re good to go!

Remember, you can always tailor this approach to fit your specific business needs.

What is CDC?

One of the data integration techniques is Change Data Capture, which generates a trace of all the changes in your database. Suppose you are making an update or additions of data or even deletion; CDC follows all these changes and sees that they are mirrored in real-time.

With CDC, this won’t require reloading the whole database every time something changes. It just captures new updates and applies them where they need to go. This CDC benefit is helpful when you need to maintain consistency among different systems, such as a data warehouse or even analytics tools, by keeping it current and precise without any of the overhead a complete refresh would bring.

In summary, the CDC technique helps to keep data fresh and synchronized in all systems, and changes are quickly reflected at any point of their origin, ensuring data consistency.

How Does DMS Support CDC?

AWS DMS supports CDC by continuously monitoring the source database for changes and applying those changes to the target database. This is very useful when real-time data synchronization is required, such as for analytics, reporting, or operational data stores.

So, how does AWS DMS make CDC work for you? Here’s the breakdown:

- Change Data Capture Mechanisms: AWS DMS taps into the built-in CDC features of your source database to catch changes. This means using redo logs and tools like LogMiner to keep track of updates for Oracle databases.

- Replication Instances: AWS DMS uses these instances to grab changes from the source and apply them to the target database. They’re the ones that handle all the data transfer and transformation magic.

- Endpoints: You’ll need to set up source and target endpoints to tell AWS DMS how to connect to your databases and move data around.

What is Oracle?

Oracle Database is a widely used relational database management system (RDBMS) known for its robustness, scalability, and high performance. Due to its reliability and advanced features, many large enterprises employ it for mission-critical applications.

Configure Oracle using AWS DMS

You can set up your Oracle database as a source and destination, both in the AWS ecosystem. Let us look at the configurations one by one.

Oracle as a Source

AWS Database Migration Service (DMS) enables seamless data migration from Oracle databases to various target destinations.

AWS DMS supports the following Oracle database editions:

- Oracle Enterprise Edition

- Oracle Standard Edition

- Oracle Express Edition

- Oracle Personal Edition

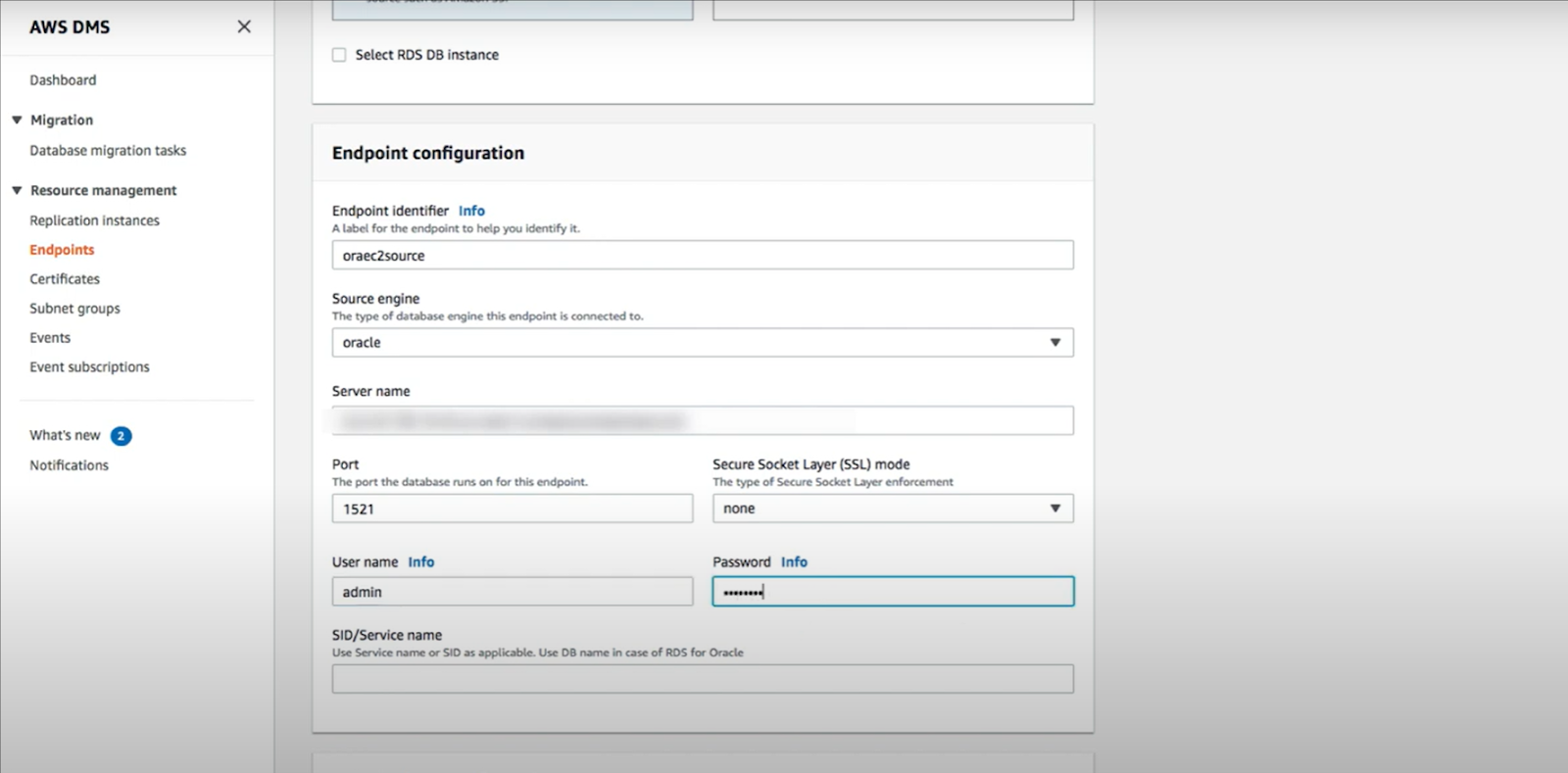

Steps to Configure an Oracle Database as an AWS DMS Source Endpoint:

- Create an Oracle User: Ensure you register an IAM role with the necessary AWS DMS permissions to access your Oracle source database.

- Set Up the Oracle Source Endpoint: Configure it according to your Oracle database setup. No additional configuration is required for a full-load-only task.

- Choose a CDC Method: For tasks involving change data capture (CDC), select Oracle LogMiner or AWS DMS Binary Reader to capture data changes.

What should you choose, Binary Reader or LogMiner?

When using AWS DMS to perform change data capture (CDC) with Oracle as a source, you have two methods: Oracle LogMiner and Binary Reader. LogMiner is an Oracle API that reads online redo logs and archived redo log files. Binary Reader is an AWS DMS method that directly reads and parses the raw redo log files.

Here’s a comparison of their features:

| Feature | LogMiner | Binary Reader |

| Easy to configure | Yes | No |

| Lower impact on source system I/O and CPU | No | Yes |

| Better CDC performance | No | Yes |

| Supports Oracle table clusters | Yes | No |

| Supports all types of Oracle Hybrid Columnar Compression (HCC) | Yes | Partially (does not support QUERY LOW) |

| LOB column support in Oracle 12c only | No (LOB support is not available with LogMiner in Oracle 12c) | Yes |

| Supports UPDATE statements affecting only LOB columns | No | Yes |

| Supports Oracle Transparent Data Encryption (TDE) | Partially (TDE on column level not supported for Amazon RDS for Oracle) | Partially (supports TDE only for self-managed Oracle databases) |

| Supports all Oracle compression methods | Yes | No |

| Supports XA transactions | No | Yes |

| RAC (Real Application Clusters) | Yes (not recommended due to performance reasons and some DMS limitations) | Yes (highly recommended) |

LogMiner is easier to configure but has a higher impact on source system performance and limited features compared to Binary Reader. Binary Reader offers better performance, lower system impact, and support for additional features but requires a more complex setup.

Account Privileges Required to Access Redo Logs

- When using Oracle LogMiner

To access the redo logs using the Oracle LogMiner, grant the following privileges to the Oracle user specified in the Oracle endpoint connection settings.

GRANT EXECUTE on DBMS_LOGMNR to db_user;

GRANT SELECT on V_$LOGMNR_LOGS to db_user;

GRANT SELECT on V_$LOGMNR_CONTENTS to db_user;

GRANT LOGMINING to db_user; -– Required only if the Oracle version is 12c or higher.- When using AWS DMS Binary Reader

To access the redo logs using the AWS DMS Binary Reader, grant the following privileges to the Oracle user specified in the Oracle endpoint connection settings.

-– Grant this privilege if the redo logs are stored in Oracle Automatic Storage Management (ASM) and AWS DMS accesses them from ASM.

GRANT SELECT on v_$transportable_platform to db_user;-– Grant this privilege to allow AWS DMS to use Oracle BFILE read file access in certain cases. This access is required when the replication instance doesn't have file-level access to the redo logs on non-ASM storage.

GRANT CREATE ANY DIRECTORY to db_user;-– Grant this privilege to copy the redo log files to a temporary folder using the CopyToTempFolder method.

GRANT EXECUTE on DBMS_FILE_TRANSFER to db_user;

GRANT EXECUTE on DBMS_FILE_GROUP to db_user;Note: AWS DMS uses LogMiner by default. You don’t have to specify additional connection attributes to use it. But If you change between Oracle LogMiner and AWS DMS Binary Reader, restart the CDC task.

Configuring Binary Reader for Oracle CDC

To use AWS DMS Binary Reader for reading redo logs from Oracle databases, configure the extra connection attributes as follows:

Basic Configuration

For a standard setup:

useLogMinerReader=N;useBfile=Y;ASM Configuration

If your Oracle server uses ASM (Automatic Storage Management), use the following format:

useLogMinerReader=N;useBfile=Y;asm_user=asm_username;asm_server=RAC_server_ip_address:port_number/+ASM;Password Configuration

Set the source endpoint Password parameter to include both the Oracle user password and the ASM password, separated by a comma:

oracle_user_password,asm_user_passwordHigh-Performance Settings

For better performance in environments using ASM, consider adding these settings to improve CDC task efficiency:

useLogMinerReader=N;useBfile=Y;asm_user=asm_username;asm_server=RAC_server_ip_address:port_number/+ASM; parallelASMReadThreads=6;readAheadBlocks=150000;- parallelASMReadThreads: Number of parallel threads for ASM read operations.

- readAheadBlocks: Number of read-ahead buffers.

Note: When configuring CDC, choose an appropriate start point to capture the earliest open transaction. This typically involves setting a CDC native start point based on the Oracle system change number (SCN) to avoid missing earlier open transactions.

Oracle as a Destination

AWS Database Migration Service (DMS) allows you to migrate data to Oracle databases from various sources, including other Oracle databases. Key features and considerations include:

- Encryption:

- Secure Sockets Layer (SSL): Encrypt connections between your Oracle endpoint and the replication instance.

- Oracle Transparent Data Encryption (TDE): Supports data encryption at rest without requiring an encryption key or password.

- Version Support: Oracle versions 11g (versions 11.2.0.3.v1 and higher), 12c, 18c, and 19c for the Enterprise, Standard, Standard One, and Standard Two editions

- Schema Transformation:

By default, data is migrated into the schema used for the target connection. To migrate data to a different schema, use a schema transformation rule. For example, to migrate data from `PERFDATA1` to `PERFDATA2`:

{

"rule-type": "transformation",

"rule-id": "2",

"rule-name": "2",

"rule-action": "rename",

"rule-target": "schema",

"object-locator": {

"schema-name": "PERFDATA1"

},

"value": "PERFDATA2"

}- Tablespace Transformation:

AWS DMS migrates tables and indexes to default tablespaces. To assign them to specific tablespaces, use a tablespace transformation rule. For example, to migrate all tables in the `INVENTORY` schema to the `INVENTORYSPACE` tablespace:

{

"rule-type": "transformation",

"rule-id": "3",

"rule-name": "3",

"rule-action": "rename",

"rule-target": "table-tablespace",

"object-locator": {

"schema-name": "INVENTORY",

"table-name": "%",

"table-tablespace-name": "%"

},

"value": "INVENTORYSPACE"

}Account Privileges Required for Oracle as a Target

To use an Oracle target in an AWS Database Migration Service (DMS) task, grant the following privileges to the user account specified in the Oracle database definitions for AWS DMS:

- Basic Privileges

GRANT SELECT ANY TRANSACTION TO dms_user;

GRANT SELECT ON V$NLS_PARAMETERS TO dms_user;

GRANT SELECT ON V$TIMEZONE_NAMES TO dms_user;

GRANT SELECT ON ALL_INDEXES TO dms_user;

GRANT SELECT ON ALL_OBJECTS TO dms_user;

GRANT SELECT ON DBA_OBJECTS TO dms_user;

GRANT SELECT ON ALL_TABLES TO dms_user;

GRANT SELECT ON ALL_USERS TO dms_user;

GRANT SELECT ON ALL_CATALOG TO dms_user;

GRANT SELECT ON ALL_CONSTRAINTS TO dms_user;

GRANT SELECT ON ALL_CONS_COLUMNS TO dms_user;

GRANT SELECT ON ALL_TAB_COLS TO dms_user;

GRANT SELECT ON ALL_IND_COLUMNS TO dms_user;

GRANT DROP ANY TABLE TO dms_user;

GRANT SELECT ANY TABLE TO dms_user;

GRANT INSERT ANY TABLE TO dms_user;

GRANT UPDATE ANY TABLE TO dms_user;

GRANT CREATE ANY VIEW TO dms_user;

GRANT DROP ANY VIEW TO dms_user;

GRANT CREATE ANY PROCEDURE TO dms_user;

GRANT ALTER ANY PROCEDURE TO dms_user;

GRANT DROP ANY PROCEDURE TO dms_user;

GRANT CREATE ANY SEQUENCE TO dms_user;

GRANT ALTER ANY SEQUENCE TO dms_user;

GRANT DROP ANY SEQUENCE TO dms_user;

GRANT DELETE ANY TABLE TO dms_user;- Additional Privileges for Specific Requirements

- Specific Table List:

GRANT SELECT ON replicated_table TO dms_user;

GRANT ALTER ON replicated_table TO dms_user;- Creating Tables in Default Tablespace:

GRANT UNLIMITED TABLESPACE TO dms_user;- Logon Privilege:

GRANT CREATE SESSION TO dms_user;- Direct Path Load (Full Load):

GRANT LOCK ANY TABLE TO dms_user;- Different Schema (Using “DROP and CREATE” Table Prep Mode):

GRANT CREATE ANY INDEX TO dms_user;

GRANT DROP ANY TABLE TO dms_user;- Storing Changes in Change or Audit Tables:

GRANT CREATE ANY TABLE TO dms_user;

GRANT CREATE ANY INDEX TO dms_user;This set of privileges ensures that the AWS DMS user has the necessary permissions to perform data migration tasks effectively in the Oracle database.

Limitations of AWS DMS CDC

While AWS DMS offers powerful features for database migration and replication, it does have some limitations that are important to consider:

- At Least One Database in AWS

AWS DMS requires that at least one of your databases—either the source or the target—must reside within AWS. This is important to note when planning your migration strategy.

- No Complex Transformations

AWS DMS supports basic data transformations but has limited capabilities for complex transformations during migration. Additional tools or custom solutions may be required for advanced data manipulation.

- CDC Overhead

Capturing and applying changes can introduce additional load on the source database. This overhead might impact its performance, especially in high-transaction environments.

- Compatibility Issues

Certain features and data types in Oracle may not be fully supported by AWS DMS. This can lead to issues with data replication or require additional configuration to work around limitations.

Configuring Oracle using Hevo Data

Hevo Data offers an alternative approach to data integration and replication, focusing on ease of use and automation. With Hevo, you can set up automated pipelines for data replication, including CDC from Oracle, without the need for extensive manual configuration.

Hevo Data supports:

Configure Generic Oracle as Source

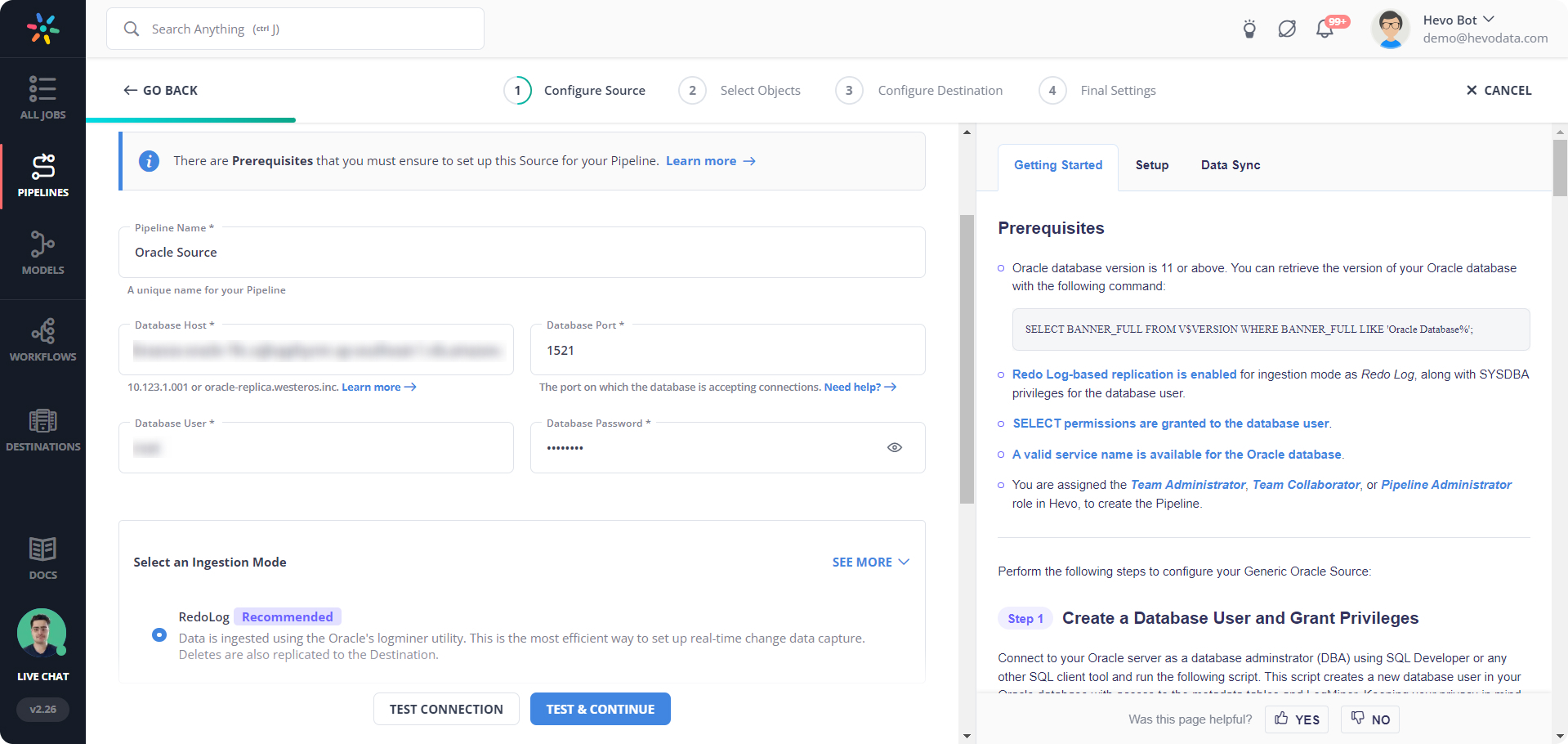

Perform the following steps to configure Oracle as a Source in Hevo:

- Click PIPELINES in the Navigation Bar.

- Click + CREATE in the Pipelines List View.

- In the Select Source Type page, select Oracle.

- In the Configure your Oracle Source page, specify the following:

Configure Amazon RDS Oracle as a Source

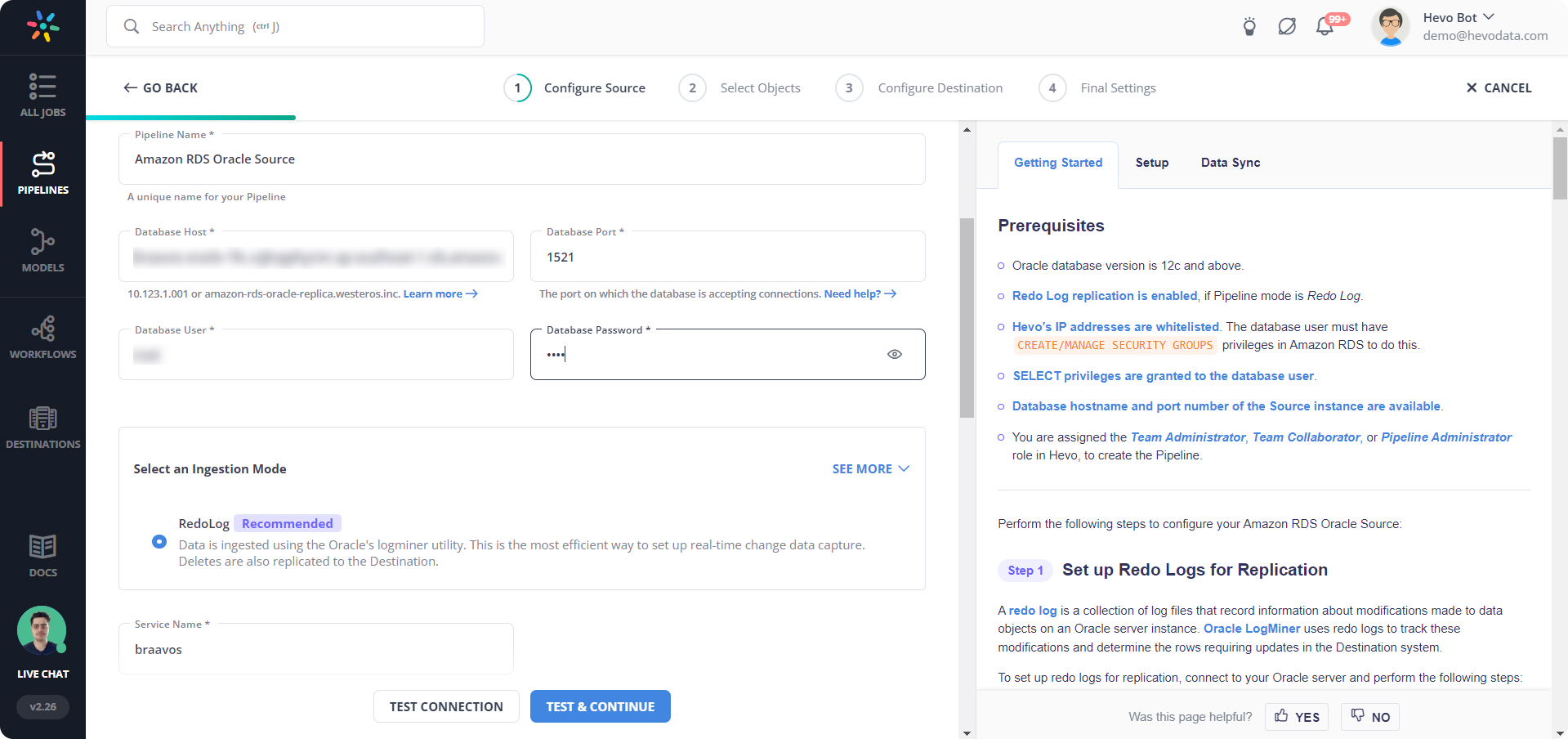

Perform the following steps to configure Amazon RDS Oracle as a Source in Hevo:

- Click PIPELINES in the Navigation Bar.

- Click + CREATE in the Pipelines List View.

- In the Select Source Type page, select Amazon RDS Oracle.

- In the Configure your Amazon RDS Oracle Source page, specify the following:

Why Choose Hevo over AWS DMS for Oracle Migration?

Hevo has a number of advantages over AWS Database Migration Service that make the process smoother and more efficient while migrating your Oracle database:

- Easy to Set Up

Setup with Hevo is easy; you don’t have to worry about complex configurations or long setup procedures. With Hevo, you can get started fast with migration rather than being stuck in the technical details.

- No-Code Solution

Hevo is a no-code platform; hence, you don’t need to write code explicitly for data migration. It helps users with non-technical backgrounds speed up their migration process.

- Real-Time Data Ingestion

Hevo supports the ingesting of real-time data and thus continuously captures changes and synchronizes data between the source and your target databases. This minimizes downtime and keeps your data up to date during migration.

- Auto Schema Mapping

Hevo automatically maps your source schema to the target schema, saving you most of the manual effort required in migration. This feature prevents errors in data transfer and ensures data integrity and consistency.

By choosing Hevo, you get a user-friendly, no-code platform that facilitates real-time data ingestion and automatic schema mapping, making your Oracle migration process straightforward and efficient.

Learn More About

Key Takeaways

- AWS DMS provides a powerful solution for migrating and replicating Oracle databases with CDC capabilities, allowing real-time data synchronization.

- CDC ensures that changes to the source database are captured and applied to the target, maintaining data consistency across systems.

- Configuration involves setting up Oracle as both a source and destination, utilizing redo logs and LogMiner for CDC.

- The limitations of AWS DMS include its support for complex transformations and potential performance impact.

- Hevo offers an alternative with its automated, no-code approach to data replication and integration.

FAQ on AWS DMS CDC Oracle

Does AWS DMS support CDC?

Yes, AWS Database Migration Service (DMS) supports Change Data Capture (CDC), enabling continuous data replication by capturing ongoing changes from the source database and applying them to the target database.

How to check CDC in Oracle?

1. Oracle Streams: Check for Oracle Streams setup using queries on `DBA_STREAMS_T3` or `DBA_CAPTURE`.

2. Oracle GoldenGate: Use GoldenGate views like `GGSCI` commands or query `GG_ADM` views.

3. LogMiner: Use Oracle LogMiner to query the redo log files for changes.

Can AWS DMS be used for replication?

Yes, AWS DMS can be used for database replication. It supports both full load and ongoing replication (CDC) to keep the source and target databases in sync.

What is AWS DMS full load?

AWS DMS full load is the initial phase of data migration, during which the entire dataset from the source database is copied to the target database. It sets up the base dataset before applying ongoing changes through CDC for continuous replication.