Easily move your data from Firebase Analytics to Databricks to enhance your analytics capabilities. With Hevo’s intuitive pipeline setup, data flows in real time. Check out our 1-minute demo below to see the integration in action!

Building an all-new data connector is challenging, especially when you are already overloaded with managing & maintaining your existing custom data pipelines. To fulfill your marketing team’s ad-hoc Firebase Analytics to Databricks connection request, you’ll have to invest a significant portion of your engineering bandwidth.

We know you are short on time & need a quick way out. This can be a piece of cake for you if you just need to download and upload a couple of CSV files. Or you could directly opt for an automated tool that fully handles complex transformations and frequent data integrations for you.

Either way, with this article’s stepwise guide to connect Firebase Analytics to Databricks effectively, you can set all your worries aside and quickly fuel your data-hungry business engines in 7 nifty minutes.

Looking for the best ETL tools to connect your Firebase account? Rest assured, Hevo’s no-code platform helps streamline your ETL process. Try Hevo and equip your team to:

- Integrate data from 150+ sources (60+ free sources).

- Utilize drag-and-drop and custom Python script features to transform your data.

- Rely on a risk management and security framework for cloud-based systems with SOC 2 compliance.

How to connect Firebase Analytics to Databricks?

Exporting & Importing Data as CSV Files

To get started with this approach of connecting Firebase Analytics to Databricks, you can follow the steps given below:

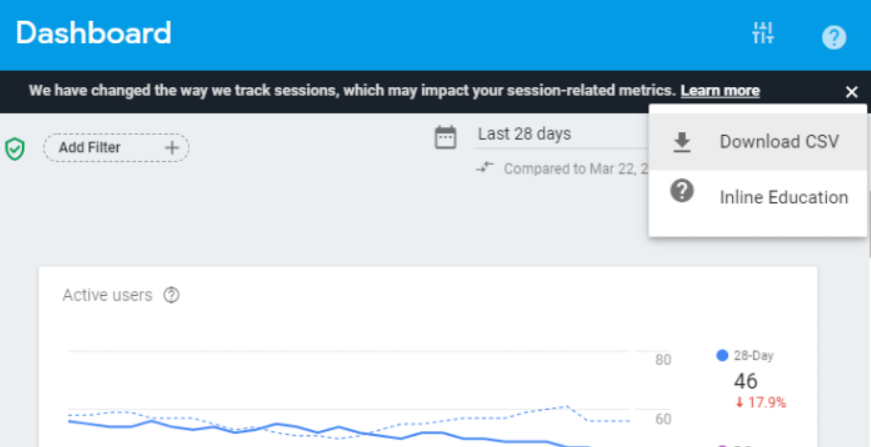

- Step 1: Log in to your Firebase Analytics console and navigate to the report whose data you want to replicate. Click on the ⋮ option next to the date range and select the Download CSV option.

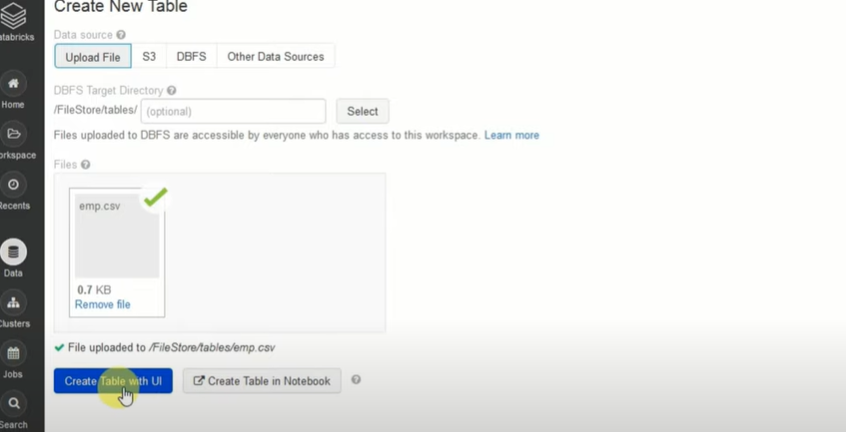

- Step 2: Log in to your Databricks account. On your Databricks homepage, click on the “click to browse” option. A new dialog box will appear on your screen. Navigate to the location on your system where you have saved the CSV file and select it.

- Step 3: In the Create New Table window in Databricks, click on Create Table with UI. Interestingly, while uploading your CSV files from your system, Databricks first stores them in DBFS (Databricks File System). You can observe this in the file path of your CSV file, i.e., in the format “/FileStore/tables/<fileName>.<fileType>”.

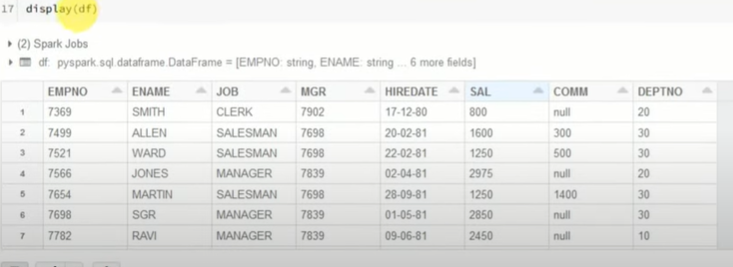

- Step 4: Select the cluster where you want to create your table and save the data. Click on the Preview Table button once you are done.

- Step 5: Finally, you can name the table and select the database where you want to create the table. Click on the Infer Schema check box to let Databricks set the data types based on the data values. Click the Create Table button to complete your data replication from Firebase Analytics to Databricks.
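If you prefer a notebook over the upload UI, the table creation steps above can be approximated in PySpark. The following is a minimal sketch, not Databricks’ own implementation of the UI flow. It assumes the CSV has already been uploaded to “/FileStore/tables/”, that it has a header row, and that `spark` is the session a Databricks notebook provides. The helper names `dbfs_path` and `load_firebase_csv` are hypothetical.

```python
# Sketch only: `spark` is the SparkSession that Databricks notebooks provide.
# The helper names below are hypothetical and exist only for illustration.


def dbfs_path(file_name):
    """Build the DBFS path that the upload UI shows for an uploaded file."""
    return f"/FileStore/tables/{file_name}"


def load_firebase_csv(spark, file_name, table_name):
    """Read an uploaded CSV with schema inference and save it as a table."""
    df = (
        spark.read.format("csv")
        .option("header", "true")       # assumes the first row holds column names
        .option("inferSchema", "true")  # same idea as the Infer Schema check box
        .load(dbfs_path(file_name))
    )
    # "overwrite" replaces any existing table with the same name.
    df.write.mode("overwrite").saveAsTable(table_name)
    return df
```

In a notebook cell, a call such as `load_firebase_csv(spark, "firebase_report.csv", "firebase_report")` would create the table, where both names are placeholders for your own file and table.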

Note: For cases when your Databricks is hosted on Google Cloud, you can replicate data from Firebase Analytics to Google BigQuery. Then, query data from BigQuery on Databricks. However, this Firebase Analytics to Databricks data replication approach is costly as you also need to pay for the data storage charges on BigQuery.
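As a rough illustration of the BigQuery route, the sketch below reads one day of exported events into a Spark DataFrame. It assumes your Firebase project is linked to BigQuery, that the export uses the usual `analytics_<property_id>` dataset with daily `events_YYYYMMDD` tables, and that the Spark BigQuery connector is available on your cluster. The function names and the project and property IDs you pass in are hypothetical.

```python
from datetime import date


def events_table_id(project_id: str, property_id: str, day: date) -> str:
    """Build the fully qualified ID of one daily events table."""
    return f"{project_id}.analytics_{property_id}.events_{day:%Y%m%d}"


def read_firebase_events(spark, project_id, property_id, day):
    """Load one day of exported events through the Spark BigQuery connector.

    `spark` is assumed to be the SparkSession of a Databricks notebook.
    """
    table = events_table_id(project_id, property_id, day)
    return spark.read.format("bigquery").option("table", table).load()
```

Reading one daily table at a time keeps the amount of data scanned in BigQuery small, which matters given the extra cost mentioned above.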

Using the CSV files to provide data for your marketing & product teams is a great option for the following cases:

- Little to No Transformation Required: The above method offers no way to carry out complex data preparation and standardization tasks. Hence, it is an excellent choice if your website or app data is already in an analysis-ready form for your business analysts.

- One-Time Data Transfer: At times, business teams only need this data quarterly, yearly, or once when looking to migrate all the data completely. For these rare occasions, the manual effort is justified.

- Few Reports: Downloading and uploading only a few CSV files is fairly simple and can be done quickly.

A challenge arises when your business teams need fresh data from multiple reports every few hours. For them to make sense of this data present in different formats, it becomes necessary to clean and standardize it. This eventually leads you to spend a significant portion of your engineering bandwidth creating new data connectors. You also have to watch out for any changes in these connectors and fix data pipelines on an ad-hoc basis to ensure a zero data loss transfer. These additional tasks can take up 40-50% of your time, which you could have spent on your primary engineering goals.
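To make the cleaning and standardizing work concrete, here is a minimal sketch that uses only the Python standard library. It normalizes column headers to snake_case and converts dates written in a few different formats to ISO 8601. The column names, the list of date formats, and the function names are assumptions for illustration and are not taken from a real Firebase export.

```python
import csv
import io
import re
from datetime import datetime

# Date formats this sketch accepts; extend the tuple for your own reports.
DATE_FORMATS = ("%Y%m%d", "%Y-%m-%d", "%m/%d/%Y")


def snake_case(name):
    """Turn a header such as 'Active Users' into 'active_users'."""
    return re.sub(r"[^0-9a-z]+", "_", name.strip().lower()).strip("_")


def to_iso_date(value):
    """Try each known format and return the date as YYYY-MM-DD."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date: {value!r}")


def standardize(csv_text, date_column="date"):
    """Return rows with normalized headers, trimmed values, and ISO dates."""
    rows = []
    for raw in csv.DictReader(io.StringIO(csv_text)):
        row = {snake_case(key): (value or "").strip() for key, value in raw.items()}
        row[date_column] = to_iso_date(row[date_column])
        rows.append(row)
    return rows
```

Even a small script like this has to be revisited whenever a report adds a column or changes its date format, which is where the maintenance effort described above comes from.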

So, is there a simpler yet effective alternative to this? You can…

Automate the Data Replication process using a No-Code Tool

Going all the way to write custom scripts for every new data connector request is not the most efficient and economical solution. Frequent breakages, pipeline errors, and lack of data flow monitoring make scaling such a system a nightmare.

You can streamline the Firebase Analytics to Databricks data integration process by opting for an automated tool. To name a few benefits, you can check out the following:

- It allows you to focus on core engineering objectives while your business teams can jump on to reporting without any delays or data dependency on you.

- Your marketers can effortlessly enrich, filter, aggregate, and segment raw Firebase Analytics data with just a few clicks.

- The beginner-friendly UI saves the engineering team hours of productive time lost due to tedious data preparation tasks.

- Without coding knowledge, your analysts can seamlessly standardize timezones or aggregate campaign data from multiple sources for faster analysis.

- Your business teams get to work with near-real-time data with no compromise on the accuracy & consistency of the analysis.

As a hands-on example, you can check out how Hevo, a cloud-based No-code ETL/ELT Tool, makes the Firebase Analytics to Databricks data replication effortless in just 2 simple steps:

- Step 1: To get started with replicating data from Firebase Analytics to Databricks, configure Firebase as a source by providing your Firebase credentials.

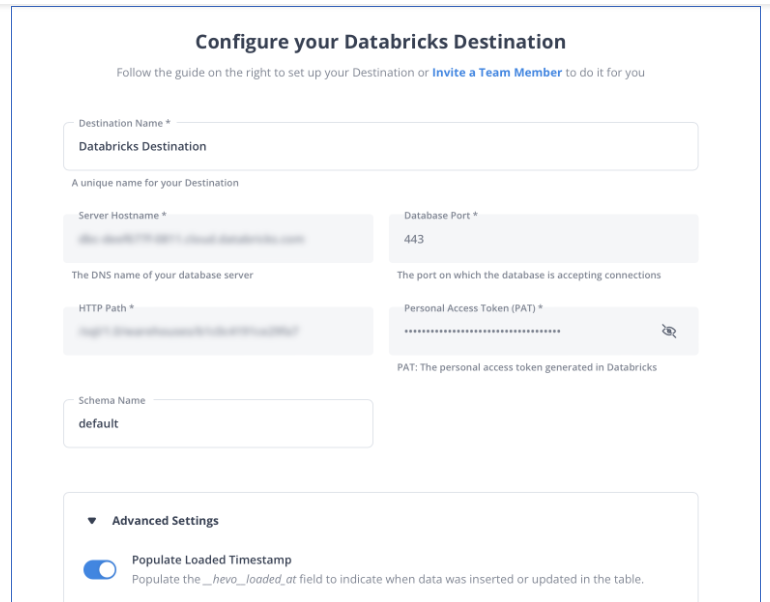

- Step 2: Configure Databricks as your destination and provide your Databricks credentials.

In a matter of minutes, you can complete this No-Code & automated approach of connecting Firebase Analytics to Databricks using Hevo and start analyzing your data.

Hevo’s fault-tolerant architecture ensures that the data is handled securely and consistently with zero data loss. It also enriches the data and transforms it into an analysis-ready form without having to write a single line of code.

Hevo’s reliable data pipeline platform enables you to set up zero-code and zero-maintenance data pipelines that just work. By employing Hevo to simplify your Firebase Analytics to Databricks data integration needs, you get to leverage its salient features:

- Reliability at Scale: With Hevo, you get a world-class fault-tolerant architecture that scales with zero data loss and low latency.

- Monitoring and Observability: Monitor pipeline health with intuitive dashboards that reveal every stat of pipeline and data flow. Bring real-time visibility into your ELT with Alerts and Activity Logs.

- Stay in Total Control: When automation isn’t enough, Hevo offers flexibility for you to have total control: data ingestion modes, ingestion and load frequency, JSON parsing, destination workbench, custom schema management, and much more.

- Auto-Schema Management: Correcting improper schema after the data is loaded into your warehouse is challenging. Hevo automatically maps the source schema with the destination warehouse so that you don’t face the pain of schema errors.

- 24×7 Customer Support: With Hevo, you get more than just a platform; you get a partner for your pipelines. Discover peace with round-the-clock “Live Chat” within the platform. What’s more, you get 24×7 support even during the 14-day full-feature free trial.

- Transparent Pricing: Say goodbye to complex and hidden pricing models. Hevo’s Transparent Pricing brings complete visibility to your ELT spend. Choose a plan based on your business needs. Stay in control with spend alerts and configurable credit limits for unforeseen spikes in the data flow.

What can you achieve by migrating data from Firebase Analytics to Databricks?

Replicating data from Firebase Analytics to Databricks can help your data analysts get critical business insights. Here’s a short list of questions that this Firebase Analytics to Databricks data integration helps answer:

- From which geography do you have the maximum number of iOS users?

- How many app installations were from paid campaigns?

- Which in-app issues have the highest average response time for the corresponding support tickets raised?

- How do paid sessions and goal conversion rates vary with marketing spend and cash flow?

- Which social media channels and content generate traffic, clicks, and CTR?

Using an automated tool allows your data analytics team to combine Firebase data with multiple data sources in Databricks effortlessly. This gives them better sales funnel visibility and accurate attributions to marketing channels.
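As a small illustration of the first question in the list above, the sketch below counts distinct iOS users per country and returns the country with the most. It works on plain Python dictionaries so that it stays self-contained. The field names `user_id`, `platform`, and `country` and the sample rows are hypothetical, and in Databricks you would more likely express the same logic in SQL or PySpark over the replicated table.

```python
from collections import defaultdict


def top_ios_country(events):
    """Return (country, distinct iOS user count) for the leading country."""
    users_by_country = defaultdict(set)
    for event in events:
        if event["platform"].lower() == "ios":
            # A set keeps each user once, however many events they sent.
            users_by_country[event["country"]].add(event["user_id"])
    if not users_by_country:
        return None
    country, users = max(users_by_country.items(), key=lambda item: len(item[1]))
    return country, len(users)


# Hypothetical sample rows, not real Firebase data.
sample_events = [
    {"user_id": "u1", "platform": "IOS", "country": "India"},
    {"user_id": "u1", "platform": "IOS", "country": "India"},
    {"user_id": "u2", "platform": "IOS", "country": "India"},
    {"user_id": "u3", "platform": "IOS", "country": "United States"},
    {"user_id": "u4", "platform": "ANDROID", "country": "United States"},
]
```

Calling `top_ios_country(sample_events)` counts each user once per country, so repeated events from the same user do not inflate the result.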

Putting It All Together

These once-in-a-blue-moon Firebase Analytics data requests from your marketing & product teams can be effectively handled by downloading & uploading CSV files. You will need to shift gears to a custom data pipeline if data replication needs to happen every few hours. This is especially important for marketers as they need continuous updates on the ROI of their marketing campaigns & channels. So instead of putting in months of time and effort to develop & maintain such data integrations, you can hop on a smooth ride with Hevo’s 150+ plug-and-play integrations (40+ free sources like Firebase Analytics).

Hevo’s pre-load data transformations save countless hours of manual data cleaning & standardizing, getting the job done in minutes via a simple drag-and-drop interface or your custom Python scripts. No need to go to your data warehouse for post-load transformations. You can simply run complex SQL transformations from the comfort of Hevo’s interface and get your data in the final analysis-ready form.