Key Takeaways

REST API ETL tools are specialized platforms that extract, transform, and load data from RESTful APIs into target systems like data warehouses. They simplify API data integration, ensuring automation, data quality, and real-time access.

Top 5 REST API ETL tools:

- Hevo Data: Automate API data ingestion with no-code pipelines.

- Airbyte: Flexible open-source tool for custom API connections.

- Fivetran: Reliable, fully managed API data replication.

- Stitch Data: Simple, scalable ETL for multiple API sources.

- Matillion: Cloud-native transformations for API data workflows.

When selecting a REST API ETL tool, focus on API connectivity, real-time access, transformation capabilities, ease of use, pricing, scalability, and security.

Struggling to get live data from all your apps in one place?

Data lives everywhere, in CRMs, SaaS apps, and cloud platforms, but bringing it together shouldn’t slow you down. REST API ETL tools solve this challenge by automating the entire process.

They connect to APIs, extract the data, transform it to match your business needs, and load it into your data warehouse or analytics platform.
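As a rough illustration of that extract, transform, and load flow, here is a minimal Python sketch using only the standard library. The sample payload, the `customers` table, and the function names are assumptions made up for this example; a real pipeline would fetch the body over HTTP and write to an actual warehouse rather than an in-memory SQLite database.

```python
import json
import sqlite3

# Hypothetical API response body; a real pipeline would fetch this over HTTP.
SAMPLE_RESPONSE = (
    '[{"id": 1, "email": "A@Example.com", "plan": "pro"},'
    ' {"id": 2, "email": "b@example.com", "plan": null}]'
)

def extract(raw_body):
    """Parse the JSON body returned by the API into Python objects."""
    return json.loads(raw_body)

def transform(records):
    """Lowercase emails and fall back to a default plan when it is missing."""
    return [(r["id"], r["email"].lower(), r["plan"] or "free") for r in records]

def load(rows, conn):
    """Write the transformed rows into a warehouse-like table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, email TEXT, plan TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()

def run_pipeline(raw_body, conn):
    """Chain the three stages: extract, then transform, then load."""
    load(transform(extract(raw_body)), conn)
```

Calling `run_pipeline(SAMPLE_RESPONSE, sqlite3.connect(":memory:"))` leaves two cleaned rows in the `customers` table. Managed tools wrap these same three stages with scheduling, monitoring, and schema handling.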

In this article, we’ll explore the 10 best REST API ETL tools, highlight their key features, and show how they help businesses integrate, manage, and leverage API data efficiently for smarter decision-making.

What are API ETL Tools?

API ETL tools are software solutions designed to pull data from various APIs, transform it into a usable format, and load it into a central data warehouse, database, or analytics platform. They act as a bridge between multiple data sources and a unified destination.

Businesses today pull data from CRMs, marketing tools, and payment gateways. Without automation, this creates silos and inefficiencies. API ETL tools solve this by unifying and standardizing data through automated API integration, enabling faster and more reliable decisions.

Key benefits of API ETL tools:

- Data integration: API ETL tools consolidate data from multiple APIs into one central system, eliminating silos and ensuring teams work with consistent datasets.

- Automated workflows: Instead of manually extracting data from each source, API ETL tools automate the extraction, transformation, and loading process. This saves time and reduces human errors. Teams can focus more on insights rather than repetitive tasks.

- User-friendly interface: Most API ETL platforms offer no-code or low-code setups. Non-technical users can design and manage data pipelines seamlessly across the organization.

- Scalability: API ETL tools are built to handle large datasets without disrupting workflows, making them future-proof for operations at scale.

What are REST API ETL Tools?

REST API ETL tools are specialized solutions designed to extract, transform, and load data from RESTful APIs into target systems such as databases, data warehouses, or analytics platforms.

Organizations depend on data from SaaS platforms, cloud services, and third-party applications. REST API ETL tools enable seamless integration of live data into internal systems, providing a structured way to connect, manage, and move API-based data efficiently.

Key features of REST API ETL tools:

- Real-time data access: By connecting directly to RESTful web services, these tools keep data as current as the source API and sync schedule allow. Near-real-time access lets organizations track changes soon after they happen, supporting faster responses and more accurate reporting.

- Standardized data handling: Using standard HTTP methods like GET, POST, PUT, and DELETE, REST API ETL tools ensure consistency in data operations. You can work with multiple APIs while maintaining uniformity across systems.

- Improved data quality: These tools include built-in features for cleansing, validating, and enriching data. Businesses get accurate, high-quality information loaded into their systems, reducing errors downstream.

- Business agility: With continuous access to updated, trustworthy data, decision-making becomes faster and more informed. Businesses adapt quickly to market changes and maintain a competitive edge.
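To make the HTTP methods mentioned above concrete, the sketch below builds (but does not send) one request per method with Python’s standard `urllib`. The `api.example.com` endpoint and the `build_request` helper are hypothetical; extraction jobs typically issue GET requests, while POST, PUT, and DELETE matter mainly when a tool writes data back to an API.

```python
import json
import urllib.request

BASE_URL = "https://api.example.com/v1/contacts"  # hypothetical endpoint

def build_request(method, resource_id=None, payload=None):
    """Build an unsent HTTP request for the given method."""
    url = BASE_URL if resource_id is None else f"{BASE_URL}/{resource_id}"
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    headers = {"Accept": "application/json"}
    if data is not None:
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(url, data=data, headers=headers, method=method)

read_req = build_request("GET", resource_id=42)                   # read a record
create_req = build_request("POST", payload={"name": "Ada"})       # create a record
update_req = build_request("PUT", resource_id=42, payload={"name": "Ada L."})
delete_req = build_request("DELETE", resource_id=42)              # delete a record
```

Passing any of these objects to `urllib.request.urlopen` would send it; the sketch stops short of that so it runs without network access.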

Top Public API ETL Tools

1. Hevo

Rating: 4.3 (G2)

Hevo is a no-code data pipeline platform that simplifies data integration from public APIs to data warehouses. With its easy-to-use interface, users can effortlessly connect to REST APIs as a data source and automate data ingestion processes.

What makes Hevo unique?

- No-Code Platform: Hevo requires no coding knowledge, making it accessible for users at all technical levels.

- Real-Time Data Replication: Hevo enables real-time data updates, ensuring that your data warehouse is always current.

- Built-in Data Transformation: Hevo provides data transformation capabilities, allowing users to clean and enrich data during the ETL process.

2. Airbyte

Rating: 4.5 (G2)

Airbyte is an open-source data integration tool designed to sync data from various sources, including public APIs, to data warehouses. It offers a wide range of connectors and allows users to create custom connectors easily.

Pros and Cons:

| Pros | Cons |
| --- | --- |
| Strong community support | Can require technical expertise for setup |
| Open-source and customizable | Limited out-of-the-box connectors |

3. Fivetran

Rating: 4.2 (G2)

Fivetran is a data integration tool that automates data extraction, transformation, and loading from various sources, including public APIs. It provides a set of pre-built connectors that simplify the ETL process.

Pros and Cons:

| Pros | Cons |
| --- | --- |
| Quick setup with pre-built connectors | Pricing can be high for large data volumes |
| Automated schema migrations | Limited customization options |

4. Stitch Data

Rating: 4.4 (G2)

Stitch Data is a cloud-based ETL service that allows users to replicate data from multiple sources, including public APIs, to data warehouses. It is known for its ease of use and robust data integration capabilities.

Pros and Cons:

| Pros | Cons |
| --- | --- |
| User-friendly interface | Limited transformation features |
| Supports a variety of sources | Pricing can be high for larger datasets |

5. Matillion

Rating: 4.4 (G2)

Matillion is a cloud-native ETL tool that enables users to integrate data from public APIs into cloud data warehouses. It offers a rich set of features for data transformation and orchestration.

Pros and Cons:

| Pros | Cons |
| --- | --- |
| Easy integration with cloud platforms | Requires some technical knowledge |
| Robust transformation capabilities | Higher cost for small businesses |

6. Airflow

Rating: 4.3 (G2)

Apache Airflow is an open-source platform designed for programmatically authoring, scheduling, and monitoring workflows. While it is not a dedicated ETL tool, it can orchestrate ETL tasks and connect to various public APIs.

Pros and Cons:

| Pros | Cons |
| --- | --- |
| Highly customizable workflows | Steep learning curve for beginners |
| Strong community and support | Requires technical setup |

7. Talend

Rating: 4.0 (G2)

Talend, now part of Qlik, is an ETL tool that provides a comprehensive suite of data integration and transformation capabilities. It supports connecting to various public APIs for data ingestion.

Pros and Cons:

| Pros | Cons |
| --- | --- |
| Active community and support | Can be complex to set up |
| Extensive data transformation tools | Requires a license for advanced features |

8. Pentaho Data Integration

Rating: 4.3 (G2)

Pentaho is a powerful data integration and analytics platform that offers ETL capabilities for connecting to public APIs. It allows users to create and manage complex data workflows efficiently.

Pros and Cons:

| Pros | Cons |
| --- | --- |
| Good reporting and analytics capabilities | May require technical expertise for advanced use |
| Robust data integration features | UI can be less intuitive |

9. Microsoft SSIS

Rating: 4.3 (G2)

SQL Server Integration Services (SSIS) is a data integration tool from Microsoft that allows users to create data-driven workflows for data extraction, transformation, and loading. It can connect to various public APIs.

Pros and Cons:

| Pros | Cons |
| --- | --- |
| Powerful data transformation capabilities | Steeper learning curve for new users |
| Deep integration with Microsoft products | Requires SQL Server license |

10. Rivery

Rating: 4.7 (G2)

Rivery is a cloud-based ETL platform designed for data integration and management. It enables users to connect to public APIs and automate data workflows efficiently.

Pros and Cons:

| Pros | Cons |
| --- | --- |
| Supports a wide range of data sources | Pricing can be high for small businesses |
| User-friendly interface | Limited customization options |

Factors to consider when selecting the right ETL solution for your public APIs

Here’s a detailed breakdown of each factor:

1. API connectivity

The ETL tool should support connections to a wide range of public APIs and allow configuration for custom endpoints, headers, and authentication methods.
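The kind of per-source configuration described here (endpoint, headers, authentication) can be pictured with a small Python sketch. The `RestSource` class, its field names, and the `api.example.com` URLs are hypothetical and do not reflect any particular tool’s configuration format; bearer tokens are only one of several authentication schemes a tool might support.

```python
from dataclasses import dataclass, field
from typing import Optional
from urllib.parse import urlencode
from urllib.request import Request

@dataclass
class RestSource:
    """Hypothetical description of one REST API source."""
    url: str
    params: dict = field(default_factory=dict)    # query-string parameters
    headers: dict = field(default_factory=dict)   # extra custom headers
    bearer_token: Optional[str] = None            # None means "No Auth"

    def to_request(self):
        """Build an unsent GET request from the configuration."""
        full_url = f"{self.url}?{urlencode(self.params)}" if self.params else self.url
        headers = {"Accept": "application/json", **self.headers}
        if self.bearer_token:
            headers["Authorization"] = f"Bearer {self.bearer_token}"
        return Request(full_url, headers=headers, method="GET")

# An authenticated source with query parameters, and a public one without auth.
orders = RestSource(
    "https://api.example.com/orders",
    params={"page": 1, "per_page": 50},
    bearer_token="t0k3n",
)
status = RestSource("https://api.example.com/status")
```

Keeping the configuration separate from the request logic is what lets a single connector serve many different APIs.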

2. Data transformation capabilities

Look for tools that can cleanse, enrich, and reshape API data before loading it into your target system. Strong transformation features help maintain data quality and align raw API responses with your business schema.
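For a sense of what reshaping a raw API response into a business schema involves, here is a minimal Python sketch. The field names (`contact`, `address`, `customer_id`) and the cleaning rules are assumptions chosen for illustration, not the behavior of any specific tool.

```python
def flatten(record, parent_key="", sep="_"):
    """Flatten nested dicts: {"a": {"b": 1}} becomes {"a_b": 1}."""
    flat = {}
    for key, value in record.items():
        name = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value
    return flat

def clean(record):
    """Map a raw API record onto a hypothetical target schema."""
    row = flatten(record)
    return {
        "customer_id": int(row["id"]),                    # cast string IDs to integers
        "email": row["contact_email"].strip().lower(),    # normalize emails
        "city": row.get("address_city") or "unknown",     # fill missing values
    }

raw = {"id": "17", "contact": {"email": "  Ada@Example.COM "}, "address": {"city": None}}
cleaned = clean(raw)
```

No-code tools expose the same operations (flattening, casting, normalizing, defaulting) through a visual editor or a script step.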

3. Real-time vs. batch processing

Choose an ETL tool that offers both batch and streaming capabilities, so you can adapt to different API data refresh needs.
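The difference between the two modes can be sketched in a few lines of Python. This example contrasts a full batch reload with watermark-based incremental polling, which is one common way to approximate near-real-time sync over REST; it is not true streaming. The `fake_api` function and the `updated_at` field are stand-ins invented for this illustration.

```python
def pull_full(fetch_all):
    """Batch mode: reload every record on each run."""
    return list(fetch_all())

def pull_incremental(fetch_all, watermark):
    """Incremental mode: keep only records updated after the watermark."""
    # A real API would usually filter server-side via a query parameter;
    # filtering client-side here keeps the sketch self-contained.
    fresh = [r for r in fetch_all() if r["updated_at"] > watermark]
    new_watermark = max((r["updated_at"] for r in fresh), default=watermark)
    return fresh, new_watermark

def fake_api():
    """Stand-in for an API call. Timestamps are same-format ISO 8601 UTC
    strings, so plain string comparison orders them correctly."""
    return [
        {"id": 1, "updated_at": "2024-01-01T09:00:00Z"},
        {"id": 2, "updated_at": "2024-01-01T10:30:00Z"},
        {"id": 3, "updated_at": "2024-01-01T11:15:00Z"},
    ]

fresh, watermark = pull_incremental(fake_api, "2024-01-01T10:00:00Z")
```

Running the incremental pull on a short interval and storing the returned watermark between runs keeps each sync small, while the full pull remains useful for initial loads and backfills.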

4. Ease of use

A no-code or low-code interface allows teams to build and manage ETL pipelines without deep technical expertise. It simplifies connecting to APIs, mapping data, and setting up transformations.

5. Transparent pricing

Transparent pricing lets you see how costs scale with data volume, API calls, or pipeline executions. With no hidden fees, budgets stay predictable and teams can scale ETL operations confidently.

6. Scalability

As data volume grows, your ETL solution should handle increased loads without bottlenecks. Scalable tools ensure that API extractions remain fast and reliable even as usage expands.
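One technique that helps extractions cope with growing volumes is pagination: reading a large result set page by page instead of in a single response. The Python sketch below assumes a page-number style API; `fake_fetch_page` and the 250-record dataset are stand-ins for a real endpoint, and many APIs use cursors or offsets instead.

```python
def paginate(fetch_page, page_size=100):
    """Yield records one page at a time until the API returns a short page."""
    page = 1
    while True:
        batch = fetch_page(page, page_size)
        yield from batch
        if len(batch) < page_size:  # a short (or empty) page marks the end
            break
        page += 1

# Stand-in for a paged endpoint holding 250 records.
DATA = [{"id": i} for i in range(250)]

def fake_fetch_page(page, page_size):
    start = (page - 1) * page_size
    return DATA[start:start + page_size]

ids = [record["id"] for record in paginate(fake_fetch_page, page_size=100)]
```

Because `paginate` is a generator, only one page is held in memory at a time, so the same loop works whether the source holds hundreds of records or millions.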

7. Security & compliance

Ensure the ETL tool encrypts data, supports secure authentication, and adheres to regulations like GDPR or HIPAA. This safeguards sensitive API data and maintains customer trust.
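On the authentication side, two habits apply to almost any pipeline: keep credentials out of source code and keep them out of logs. The Python sketch below illustrates both; the `API_TOKEN` variable name and the helper functions are assumptions for this example, and it does not cover encryption or regulatory compliance.

```python
import os

def load_token(var_name="API_TOKEN"):
    """Read the API token from the environment instead of hardcoding it."""
    token = os.environ.get(var_name)
    if not token:
        raise RuntimeError(f"Set the {var_name} environment variable")
    return token

def redact(headers):
    """Return a copy of the headers that is safe to write to logs."""
    return {
        name: ("***" if name.lower() == "authorization" else value)
        for name, value in headers.items()
    }
```

A pipeline would call `load_token()` when building the `Authorization` header and pass headers through `redact()` before logging them.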

Step-by-Step Guide to Pull Data from Public APIs within Minutes:

Prerequisites:

- Access to Hevo’s product. You can go to Hevo and create a new account for free.

- The URL of the API that you want to access.

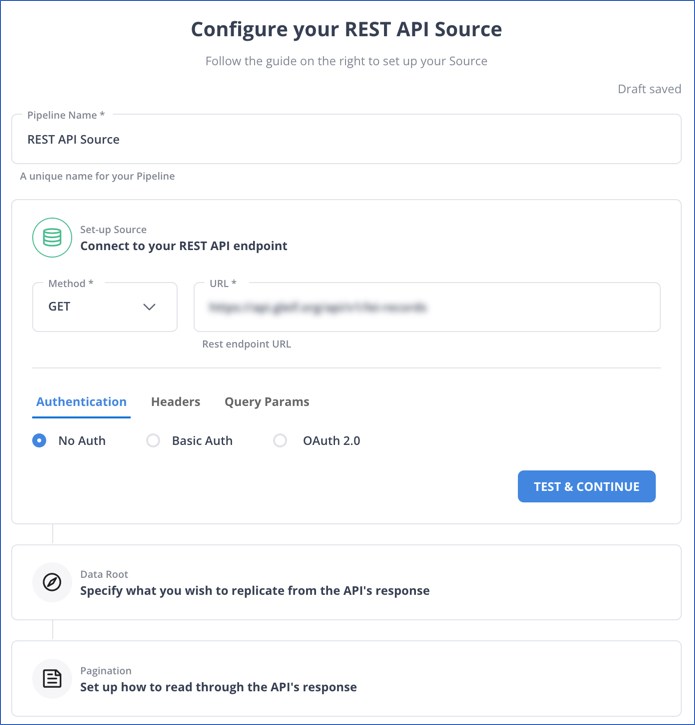

Step 1: Set up your Public API as a source

Step 1. a) Create a new Pipeline

Step 1. b) From the list of sources, search for REST API and configure your REST API source by filling in the required credentials.

Note: If you want to access a public API, you can keep the Authentication as No Auth.

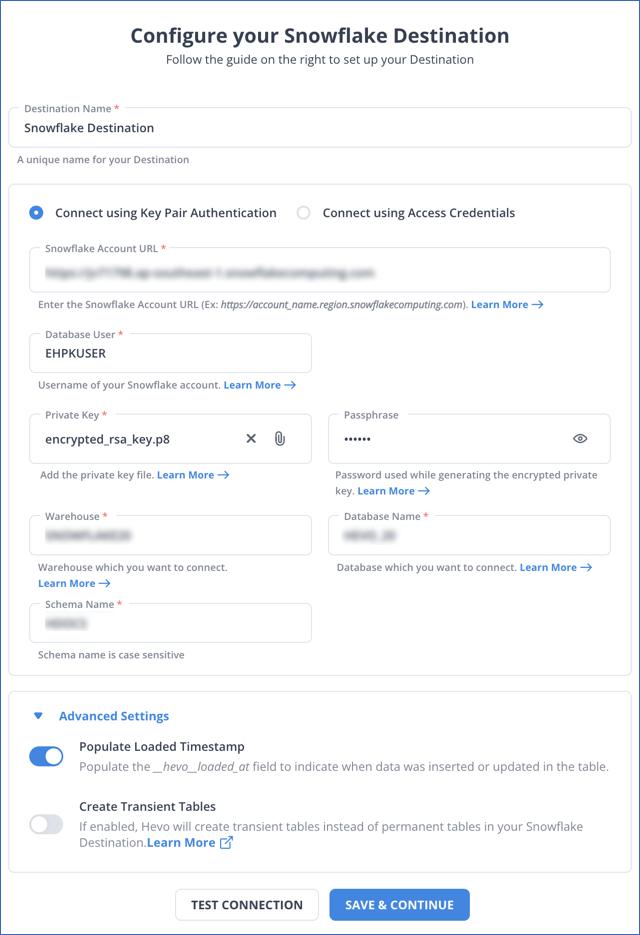

Step 2: Configure your Destination

Step 2. a) Next, configure your destination.

Note: For this guide, I’ll use Snowflake as the destination, but you can pick any of the many destinations that Hevo supports.

Step 2. b) Search for Snowflake in the destinations window, and fill in the required credentials.

With these two simple steps, you have successfully connected your public API to your destination.

Looking for the best ETL tools to connect your data sources? Rest assured, Hevo’s no-code platform helps streamline your ETL process. Try Hevo and equip your team to:

- Integrate data from 150+ sources (60+ free sources).

- Utilize drag-and-drop and custom Python script features to transform your data.

Why should you migrate from Public APIs to a Data Warehouse?

- Centralized Data: Migrating to a data warehouse lets organizations bring information from multiple APIs into a single source, making it more accessible and easier to analyze.

- Enhanced Data Analysis: A data warehouse supports advanced analytics, which helps businesses gain actionable insights from large datasets efficiently.

- Data Quality and Consistency: ETL helps maintain the quality and consistency of data, which makes it easier to keep accurate records and reports.

Conclusion

Moving data from public APIs to a data warehouse is important for data management and analysis. Advanced ETL tools, such as Hevo, Airbyte, and Fivetran, can help organizations streamline their data integration processes and ensure access to the most up-to-date information.

When selecting an ETL solution, weigh compatibility, scalability, and user-friendliness so that the tool fits your overall data strategy. Investing in the right tools enables businesses to extract more value from their data assets as the data landscape continues to evolve.

FAQ on Best API ETL Tools

1. What is ETL in API?

ETL for APIs means extracting data from different APIs, transforming it into a suitable format, and loading it into a target system such as a data warehouse, so that data from multiple sources can be consolidated and analyzed effectively.

2. What are the 4 types of ETL tools?

a. On-Premises ETL Tools

b. Cloud-Based ETL Tools

c. Open-Source ETL Tools

d. Real-Time ETL Tools

3. What is the difference between API and ETL tools?

APIs are interfaces that let different software systems communicate and exchange data, while ETL tools extract data from different sources, transform it, and load it into a centralized repository where it can be analyzed.