Easily move your data from Twilio to Redshift to enhance your analytics capabilities. With Hevo’s intuitive pipeline setup, data flows in real time.

Managing Twilio data effectively can be challenging without the right setup. Integrating Twilio with Redshift can be a game-changer.

In this article, you will learn how to integrate Twilio with Redshift using APIs and Twilio Studio, as well as an automated method for connecting the two. The article also gives a brief introduction to Twilio and Redshift.


What is Twilio?

- Founded in 2008, Twilio is a customer engagement platform that many businesses use to build unique, personalized customer experiences. Twilio makes channels such as chat, video, SMS, email, and voice available through APIs, so businesses can interact with customers on the channels they prefer.

- Twilio provides complete telephony-based communication solutions and is used by over a million developers and leading brands to build innovative communications products. Its Communications APIs let web and mobile apps add voice, messaging, and video conversations, which makes it easier for developers to build communication into their apps.

- Twilio Frontline is a programmable mobile application that improves sales efficiency and outcomes by enabling digital relationships with customers over messaging and voice. It can be integrated with any CRM or customer database.

Key Features of Twilio

- Reliable connections: Twilio enables organizations to provide seamless connections with customers, partners, and employees, and it backs its services with a 99.95% uptime SLA.

- Cost-effective: Twilio offers pay-as-you-go pricing for its communication APIs, so organizations pay only for the services they use. This makes Twilio a cost-effective platform that lets organizations control their communication budgets.

Method 1: Using Hevo Data to Connect Twilio to Redshift

Hevo Data, an Automated Data Pipeline, provides you with a hassle-free solution to connect Twilio to Redshift within minutes with an easy-to-use no-code interface. Hevo is fully managed and completely automates the process of loading data from Twilio to Amazon Redshift and enriching the data and transforming it into an analysis-ready form without having to write a single line of code.

Method 2: Using Custom Code to Move Data from Twilio to Redshift

This method is time-consuming and somewhat tedious to implement. You have to write custom code for two processes: exporting data from Twilio and importing it into Redshift. It is suitable for users with a technical background.

Moving data from Twilio into Amazon Redshift can solve some of the biggest data problems businesses face. Hevo offers a 14-day free trial, allowing you to explore real-time data processing and fully automated pipelines firsthand. With a 4.3 rating on G2, users appreciate its reliability and ease of use, making it worth trying to see if it fits your needs.


What is Amazon Redshift?

- Introduced in 2012, Amazon Redshift is a popular, reliable, and fully scalable data warehousing service. It helps organizations manage exabytes of data and run complex analytical queries without worrying about administrative tasks.

- Since Amazon Redshift is fully managed, administrative tasks such as memory management, resource allocation, and configuration management are handled automatically.

- To start using Amazon Redshift, you launch a set of nodes called a cluster. You can manage clusters with the AWS Command Line Interface (AWS CLI) or the Amazon Redshift console, or programmatically with the Amazon Redshift Query API or an AWS Software Development Kit (SDK).

- Amazon Redshift is a fully managed, petabyte-scale cloud data warehouse for storing and analyzing large data sets. One of its key advantages is its ability to process large amounts of structured and semi-structured data, up to exabytes.
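To illustrate the programmatic route, here is a minimal Python sketch that summarizes your clusters. It assumes a boto3 Redshift client (for example `boto3.client("redshift")`) whose `describe_clusters` call returns a `Clusters` list and, when more results exist, a `Marker` for the next page. The function name `summarize_clusters` is hypothetical; the client is passed in so the logic can be tried against a stub without AWS credentials.

```python
from typing import Any, Dict, List, Optional


def summarize_clusters(redshift_client: Any) -> List[Dict[str, Any]]:
    """Return identifier, status, node count, and endpoint for each cluster.

    `redshift_client` is assumed to be a boto3 Redshift client; any object
    with a compatible describe_clusters method works.
    """
    summaries: List[Dict[str, Any]] = []
    marker: Optional[str] = None
    while True:
        # describe_clusters is paginated; Marker carries the next page token.
        kwargs = {"Marker": marker} if marker else {}
        page = redshift_client.describe_clusters(**kwargs)
        for cluster in page.get("Clusters", []):
            # Endpoint can be missing, e.g. while a cluster is still being created.
            endpoint = cluster.get("Endpoint", {})
            summaries.append({
                "id": cluster["ClusterIdentifier"],
                "status": cluster.get("ClusterStatus"),
                "nodes": cluster.get("NumberOfNodes"),
                "endpoint": f'{endpoint["Address"]}:{endpoint["Port"]}' if endpoint else None,
            })
        marker = page.get("Marker")
        if not marker:
            return summaries
```

Passing the client in, rather than creating it inside the function, keeps credentials and region configuration with the caller.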

Key Features of Amazon Redshift

- ANSI SQL: Amazon Redshift is based on ANSI SQL and supports industry-standard ODBC and JDBC connections, so you can use existing SQL clients and BI tools. With SQL, you can also load and query data in formats such as CSV, JSON, ORC, Avro, Parquet, and more.

- Fault tolerance: Fault tolerance is a system's ability to keep working even when some of its components fail. Amazon Redshift continuously monitors the health of your clusters, which makes your data warehouse clusters more fault-tolerant.

- Robust security: Amazon Redshift lets users secure their data warehouses at no additional cost. You can configure firewalls to control network access to a specific data warehouse cluster, and set up column-level and row-level security controls so that users can only view data they are authorized to access. Redshift also provides features such as end-to-end encryption, network isolation, tokenization, and auditing to protect your data.

- Result caching: Amazon Redshift’s result caching can deliver sub-second response times for repeated queries. When a query runs, Redshift checks its cache for a valid result from a previous run of the same query and, if one exists, returns it without re-executing the query.

- Fast performance: Amazon Redshift provides fast performance due to its features such as massively parallel processing, columnar data storage, result caching, data compression, query optimizer, and compiled code.

Connecting Twilio to Redshift

Method 1: Using Hevo Data to Connect Twilio to Redshift

Using Hevo Data, you can connect Twilio to Amazon Redshift in the following 2 steps:

Step 1.1: Configure Twilio as the Source

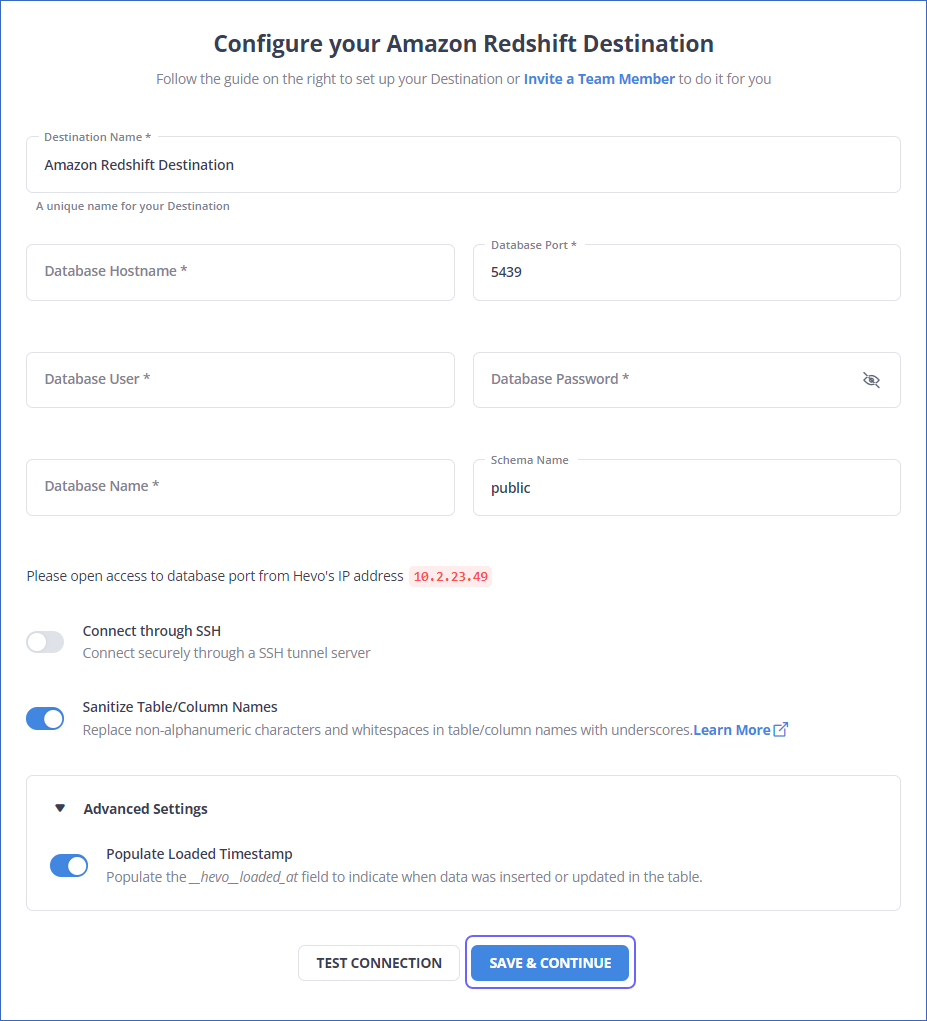

Step 1.2: Configure Redshift as the Destination

That’s it, literally! You have connected Twilio to Redshift in just 2 steps. These were just the inputs required from your end. Now, everything will be taken care of by Hevo. It will automatically replicate new and updated data from Twilio to Redshift.

Why is the Hevo Method Recommended?

- Smooth Schema Management: Hevo takes away the tedious task of schema management, automatically detecting the schema of incoming data and mapping it to the schema in your desired data warehouse.

- Exceptional Data Transformations: Hevo offers best-in-class native support for complex data transformations, with both code and no-code options designed for every user.

- Quick Setup: Thanks to its automated features, Hevo can be set up in minimal time, and its simple, interactive UI makes it easy for new customers to start working and performing operations.

- Built To Scale: As the number of sources and the volume of your data grows, Hevo scales horizontally, handling millions of records per minute with very little latency.

- Live Support: The Hevo team is available round the clock to extend exceptional support to its customers through chat, email, and support calls.

Method 2: Using Custom Code to Move Data from Twilio to Redshift

You can connect Twilio to Amazon Redshift using Twilio APIs, Twilio Studio, or third-party ETL tools. In this section, you will learn to connect Twilio to Redshift by exporting data from Twilio and importing it into Redshift.

Step 2.1: Exporting Twilio Data

You can export Twilio data in two ways: using APIs or using Twilio Studio.

Step 2.2: Exporting Twilio Data Using APIs

BulkExport is a Twilio feature that allows you to access and download files containing records of all your incoming and outgoing messages.

With BulkExport, you can:

- Keep a data warehouse up to date with the state of all your messages.

- Check the status of your messages without going back to the Twilio API.

You can get the final state of all your messages with the BulkExport file. BulkExport allows you to get a single zipped JSON file containing records of each message you sent or received on a given day.

When you enable BulkExport, you can download a file each day that includes the messages from the previous day.

BulkExport is useful for:

- Checking the delivery status of your messages.

- Loading message data into a data store.

- Checking how many messages are sent and received.

- Archiving your activity.

When you get the BulkExport file, you can view the messages and load them to another system. You must fetch the resulting file from the Twilio API to use these messages.
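As a sketch of fetching a day file, the Python below assumes Twilio’s BulkExport endpoint `https://bulkexports.twilio.com/v1/Exports/Messages/Days/{day}`, which, per Twilio’s documentation, redirects to the actual file; verify the URL against the current docs before relying on it. The helper names `day_file_url` and `download_day_file` are hypothetical, and authentication uses HTTP Basic auth with your Account SID and Auth Token.

```python
import base64
import urllib.request
from datetime import date

# Assumed endpoint based on Twilio's BulkExport docs; one file per day.
BULK_EXPORT_BASE = "https://bulkexports.twilio.com/v1/Exports/Messages/Days"


def day_file_url(day: date) -> str:
    """Build the BulkExport URL for one day of message records."""
    return f"{BULK_EXPORT_BASE}/{day.isoformat()}"


def download_day_file(account_sid: str, auth_token: str, day: date) -> bytes:
    """Fetch the day file and return its raw (still compressed) bytes."""
    token = base64.b64encode(f"{account_sid}:{auth_token}".encode()).decode()
    request = urllib.request.Request(day_file_url(day))
    # An unredirected header is sent to Twilio only; urllib does not forward
    # it to the storage URL the response redirects to.
    request.add_unredirected_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(request, timeout=60) as response:
        return response.read()
```

Calling `download_day_file(sid, token, date(2017, 8, 3))` would return the file for that day, ready to be decompressed and parsed.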

The following is an example of a message record in a BulkExport file.

```json
{
  "date_updated": "2017-08-03T03:57:34Z",
  "date_sent": "2017-08-03T03:57:33Z",
  "date_created": "2017-08-03T03:57:32Z",
  "body": "Sent from your Twilio trial account - woot woot!!!!",
  "num_segments": 1,
  "sid": "SMandtherestofthemessagesid",
  "num_media": 0,
  "messaging_service_sid": "MGandtherestofthemessagesid",
  "account_sid": "ACandtherestoftheaccountsid",
  "from": "+14155551212",
  "error_code": null,
  "to": "+14155552389",
  "status": "delivered",
  "direction": "outbound-api"
}
// a lot of other messages
```

You can read more about BulkExport.
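Once downloaded, the file has to be decompressed and parsed. The sketch below assumes the file is gzip-compressed with one JSON message record per line (the layout hinted at by `// a lot of other messages` in the example above); the function names are hypothetical. It also counts messages by direction and status, which covers the delivery-status and message-count use cases listed earlier.

```python
import gzip
import json
from collections import Counter
from typing import Dict, List


def parse_day_file(raw: bytes) -> List[Dict]:
    """Decompress a day file and parse one JSON record per line.

    Assumes the one-object-per-line layout; blank lines are skipped.
    """
    text = gzip.decompress(raw).decode("utf-8")
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def status_counts(messages: List[Dict]) -> Counter:
    """Count messages by (direction, status), e.g. ("outbound-api", "delivered")."""
    return Counter((m.get("direction"), m.get("status")) for m in messages)
```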

Step 2.3: Export Twilio Data Using Twilio Studio

You can export Twilio data using Twilio Studio by following the steps below.

- Create a Flow

The first step in Twilio Studio is to create a Flow that represents the workflow you want to build for your project.

Follow these steps to create a Flow.

- Log into your Twilio account in the Twilio Console.

- Go to the Studio Flows section.

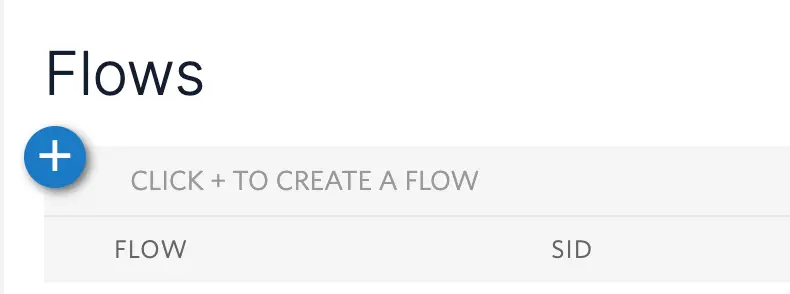

- Click Create new Flow. If you have already created a Flow before, you will see your list of existing Flows instead; click the ‘+’ sign to create a new Flow.

- Give a name to your Flow and click on Next.

- After naming your Flow, you can see the list of possible templates you can use. You can also start with an empty template by clicking on the Start from scratch option and then clicking on Next.

- After you create the Flow, you are taken to the Flow’s Canvas, where you build the rest of the logic for your project.

- On the Canvas, you add Widgets, the building blocks of Twilio Studio. Widgets let you handle incoming actions and respond immediately by performing tasks such as sending a message, making a phone call, capturing information, and more.
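As a rough illustration of how Widgets chain together, the sketch below walks a Flow definition from its starting Widget, assuming you have exported the Flow’s JSON definition from Studio. It assumes the definition has an `initial_state` name and a `states` list whose entries carry `name`, `type`, and `transitions` (each with an optional `next` target). The helper `widget_path` and the sample Flow in the test are hypothetical.

```python
from typing import Dict, List


def widget_path(flow_definition: Dict) -> List[str]:
    """List 'name (type)' for Widgets reached from the initial state,
    following the first transition of each Widget that has a 'next' target."""
    states = {s["name"]: s for s in flow_definition.get("states", [])}
    path: List[str] = []
    seen = set()
    current = flow_definition.get("initial_state")
    while current in states and current not in seen:
        seen.add(current)  # stop if the Flow loops back on itself
        state = states[current]
        path.append(f'{state["name"]} ({state["type"]})')
        current = next(
            (t["next"] for t in state.get("transitions", []) if t.get("next")),
            None,
        )
    return path
```

Following only the first outgoing transition keeps the sketch short; a real audit of a Flow would visit every branch.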

Step 2.4: Importing Data to Amazon Redshift

There are several methods to import data to Amazon Redshift:

- Importing data to Amazon Redshift using the COPY command.

- Importing data to Amazon Redshift using the ETL tools.

- Importing data to Amazon Redshift using AWS Data Pipeline.

- Importing data to Amazon Redshift using Amazon S3.

The COPY command specifies the location of the files from which data is fetched. It can also point to a manifest file that lists the file locations to load. The files can be in several formats, such as CSV, JSON, Avro, and more.
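A manifest is a JSON file with an `entries` list, where each entry has an S3 `url` and an optional `mandatory` flag. A minimal Python sketch that generates one for a set of files could look like this; the helper name `build_manifest` and the S3 paths are hypothetical.

```python
import json
from typing import List


def build_manifest(s3_urls: List[str], mandatory: bool = True) -> str:
    """Return a Redshift COPY manifest listing the given S3 objects.

    With mandatory=True, COPY fails if a listed file is missing
    instead of silently loading only part of the data.
    """
    entries = [{"url": url, "mandatory": mandatory} for url in s3_urls]
    return json.dumps({"entries": entries}, indent=2)
```

Upload the resulting text to S3 and pass its URL to COPY together with the MANIFEST option.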

In this tutorial, you will learn to load data from a .csv file into Amazon Redshift using Amazon S3. The process has two steps: uploading the CSV file to an S3 bucket, and then loading it into Amazon Redshift from Amazon S3.
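The first step can be scripted. The Python sketch below turns parsed message records into a header-less, gzip-compressed CSV and uploads it. The column selection is a hypothetical choice, and the upload assumes a boto3 S3 client (`boto3.client("s3")`) whose `put_object` accepts `Bucket`, `Key`, and `Body`; bucket and key names are placeholders.

```python
import csv
import gzip
import io
from typing import Any, Dict, List

# Hypothetical selection of BulkExport keys, in the order they are written.
COLUMNS = ["sid", "account_sid", "from", "to", "status", "direction",
           "num_segments", "error_code", "date_created", "date_sent"]


def messages_to_gzip_csv(messages: List[Dict[str, Any]]) -> bytes:
    """Write one CSV row per message (no header) and gzip the result."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for m in messages:
        # Missing keys and JSON nulls (None) are written as empty fields.
        writer.writerow([m.get(col) for col in COLUMNS])
    return gzip.compress(buffer.getvalue().encode("utf-8"))


def upload_to_s3(s3_client: Any, data: bytes, bucket: str, key: str) -> str:
    """Upload bytes with an S3 client and return the object's s3:// URL."""
    s3_client.put_object(Bucket=bucket, Key=key, Body=data)
    return f"s3://{bucket}/{key}"
```

Because `from` and `to` are reserved words in Redshift SQL, name the corresponding table columns differently (for example `from_number` and `to_number`) and list the table columns in the same order as `COLUMNS` in the COPY command. Since the file is compressed, the COPY command also needs the GZIP option.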

Follow these steps to load a CSV file into Amazon Redshift.

- Upload the CSV file you want to import into Amazon Redshift to your Amazon S3 bucket. You can compress it with gzip first; if you do, add the GZIP option to the COPY command.

- When the file is in the S3 bucket, you can use the COPY command to load it to the desired table.

```sql
COPY <schema-name>.<table-name> (<ordered-list-of-columns>)
FROM '<manifest-file-s3-url>'
CREDENTIALS 'aws_access_key_id=<key>;aws_secret_access_key=<secret-key>'
GZIP MANIFEST;
```

- Add the CSV keyword to the COPY command so that Amazon Redshift parses the file as CSV (without it, COPY expects pipe-delimited text), as shown below.

```sql
COPY table_name (col1, col2, col3, col4)
FROM 's3://<your-bucket-name>/load/file_name.csv'
CREDENTIALS 'aws_access_key_id=<Your-Access-Key-ID>;aws_secret_access_key=<Your-Secret-Access-Key>'
CSV;

-- Same load, but skip the file's header row
COPY table_name (col1, col2, col3, col4)
FROM 's3://<your-bucket-name>/load/file_name.csv'
CREDENTIALS 'aws_access_key_id=<Your-Access-Key-ID>;aws_secret_access_key=<Your-Secret-Access-Key>'
CSV
IGNOREHEADER 1;
```

Limitations of Using Custom Code to Move Data from Twilio to Redshift

- Although exporting data from Twilio to Redshift with APIs might seem effortless, it takes time and technical expertise.

- You can also export Twilio data manually with Twilio Studio, but this approach cannot handle real-time data.

- As a result, to eliminate such problems, you can use third-party ETL tools like Hevo, which provides seamless and autonomous integration between Twilio and Amazon Redshift.

Conclusion

- In this article, you learned to connect Twilio to Redshift using two different methods.

- Twilio allows organizations to communicate with their customers by using several APIs that provide interactive and personalized communication.

- Organizations can also export their campaign data to Amazon Redshift, Snowflake, and more to understand and optimize their business operations while reaching out to customers.

- Hevo Data offers a No-code Data Pipeline that can automate your data transfer process, hence allowing you to focus on other aspects of your business like Analytics, Marketing, Customer Management, etc.

Take Hevo’s 14-day free trial to experience a better way to manage your data pipelines.

You can also check out the unbeatable pricing, which will help you choose the right plan for your business needs.

FAQs on Twilio to Redshift

1. How do I transfer data to Redshift?

Transfer data to Redshift using the COPY command. This command loads data from files in Amazon S3, DynamoDB, or other sources into Redshift tables.

2. How do I push data to AWS Redshift?

You can push data to AWS Redshift using the COPY command for bulk loading from S3 or tools like AWS Data Pipeline, AWS Glue, or third-party ETL tools.

3. Can we load data from S3 to Redshift?

Yes, you can load data from S3 to Redshift using the COPY command, which is efficient for bulk data loading.
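As a sketch of driving that load from code, the Python below composes a COPY for a gzipped CSV and submits it through the Amazon Redshift Data API. It assumes a boto3 `redshift-data` client, whose `execute_statement` takes `ClusterIdentifier`, `Database`, `DbUser`, and `Sql` and returns an `Id`. It also swaps inline access keys for an `IAM_ROLE` ARN, so no secrets end up in SQL text. The helper names and the table, column, bucket, and role names in the test are hypothetical.

```python
from typing import Any, List


def build_copy_sql(table: str, columns: List[str], s3_url: str, iam_role_arn: str) -> str:
    """Compose a COPY for a gzipped, header-less CSV object in S3."""
    return (
        f"COPY {table} ({', '.join(columns)}) "
        f"FROM '{s3_url}' "
        f"IAM_ROLE '{iam_role_arn}' "
        "CSV GZIP;"
    )


def run_copy(data_client: Any, cluster_id: str, database: str, db_user: str, sql: str) -> str:
    """Submit SQL through a Redshift Data API client and return the statement Id."""
    response = data_client.execute_statement(
        ClusterIdentifier=cluster_id, Database=database, DbUser=db_user, Sql=sql
    )
    return response["Id"]
```

The Data API runs statements asynchronously, so poll `describe_statement(Id=...)` to see whether the COPY finished or failed.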

4. How do I connect to the Redshift Database?

Provide the cluster endpoint, port, database name, username, and password to connect to Redshift using JDBC or ODBC drivers, psql, or SQL clients like pgAdmin.
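For example, the connection details can be assembled into a JDBC URL or a libpq-style URI that `psql` accepts. This minimal sketch assumes Redshift’s default port 5439 and requires SSL in the URI; the helper names and the endpoint, database, and user values are placeholders. The password is deliberately left out of the URI so it can be supplied separately.

```python
from urllib.parse import quote

DEFAULT_REDSHIFT_PORT = 5439  # Redshift's default port; your cluster may use another


def jdbc_url(endpoint: str, database: str, port: int = DEFAULT_REDSHIFT_PORT) -> str:
    """Build a Redshift JDBC URL: jdbc:redshift://host:port/database."""
    return f"jdbc:redshift://{endpoint}:{port}/{database}"


def libpq_uri(endpoint: str, database: str, user: str, port: int = DEFAULT_REDSHIFT_PORT) -> str:
    """Build a postgresql:// URI for psql, with SSL required and no password."""
    return f"postgresql://{quote(user, safe='')}@{endpoint}:{port}/{database}?sslmode=require"
```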

5. How do I migrate from RDS to Redshift?

You can migrate from RDS to Redshift by exporting data from RDS (for example, with mysqldump for MySQL), uploading it to S3, and loading it into Redshift with the COPY command. AWS DMS can also migrate data from RDS to Redshift.