As more and more businesses move their data to the public cloud, one of the most pressing issues is how to protect it from unauthorized access and exfiltration.

With a tool like HashiCorp Vault, you can gain greater control over your sensitive credentials and meet cloud security requirements.

This article introduces HashiCorp Vault and Vault High Availability, and gives a few examples of how Vault can be used to enhance cloud security.

What is HashiCorp Vault?

When it comes to keeping secrets secure, HashiCorp Vault is a great tool to have on hand: it helps businesses manage access to secrets and distribute them securely within their organization. A “secret” is any sensitive credential that must be tightly controlled and monitored because it can be used to unlock confidential data. Passwords, API keys, SSH keys, RSA tokens, and one-time passwords (OTPs) are all examples of secrets.

HashiCorp Vault’s unified interface for managing every secret in your infrastructure makes it easy to control and manage access. It also keeps track of who accessed what and produces detailed audit records.

Key Features of Vault

HashiCorp Vault is a secret management tool designed specifically to control access to sensitive credentials in a low-trust environment. It can store sensitive data and also dynamically generate access to specific services or applications on a lease basis.

Clients can authenticate with static credentials such as passwords, or use dynamically generated values to obtain temporary tokens that grant access to a specific path. Policies written in HashiCorp Configuration Language (HCL) determine who gets what access.
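To make path-based policies concrete, here is a minimal Python sketch of how a policy might decide whether a request is allowed. It is a simplified, hypothetical model rather than Vault's actual policy engine: a dict stands in for HCL blocks such as `path "secret/data/app/*" { capabilities = ["read", "list"] }`, a trailing `*` acts as a prefix glob, an exact path beats any glob, the longest glob wins, and `deny` overrides everything. The paths are invented for illustration.

```python
# Hypothetical, simplified model of a Vault-style ACL policy. Each key stands in
# for an HCL `path` block; each value for that block's `capabilities` list.
POLICY = {
    "secret/data/app/*": ["read", "list"],
    "secret/data/app/admin": ["deny"],
    "secret/data/shared": ["read"],
}


def matching_rule(policy, path):
    """Return the policy key that governs `path`, or None if nothing matches."""
    if path in policy:  # in this model an exact path always beats a glob
        return path
    best = None
    for pattern in policy:
        # A trailing "*" is treated as a prefix match in this simplified model
        if pattern.endswith("*") and path.startswith(pattern[:-1]):
            if best is None or len(pattern) > len(best):
                best = pattern  # keep the longest (most specific) glob
    return best


def is_allowed(policy, path, capability):
    """Default deny: access requires a matching rule that grants the capability."""
    rule = matching_rule(policy, path)
    if rule is None or "deny" in policy[rule]:
        return False
    return capability in policy[rule]


print(is_allowed(POLICY, "secret/data/app/db", "read"))     # True
print(is_allowed(POLICY, "secret/data/app/admin", "read"))  # False
```

The default-deny return for unmatched paths mirrors the idea that a token can do nothing a policy does not explicitly grant.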

To grant access to systems and secrets, HashiCorp Vault employs identity-based access. Humans and machines are the two major actors when it comes to identity authentication.

Human access is managed with Role-based Access Control (RBAC): depending on the identity a user logs in with, Vault grants permission to create and manage secrets, or to manage other users’ access, and restricts everything else.

Managing machine access, on the other hand, means granting servers and applications access to specific secrets. Because Vault can generate secrets dynamically, you can issue temporary secrets and revoke access in the event of a breach.

Vault offers “encryption as a service” and encrypts data both in transit (using TLS) and at rest (using 256-bit AES in GCM mode). This protects sensitive information both while it travels across your network and while it is stored in your cloud and data centers.
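As an illustration of encryption as a service, the sketch below builds (but does not send) a request to the Transit secrets engine's encrypt endpoint, which expects the plaintext to be base64-encoded. It assumes the engine is enabled at its default `transit/` path and that a key named `orders` exists; the Vault address, key name, and token are hypothetical placeholders.

```python
import base64
import json
import urllib.request


def build_encrypt_request(vault_addr, token, key_name, plaintext):
    """Build (without sending) a Transit encrypt request for `plaintext` bytes."""
    # The Transit engine expects base64-encoded plaintext in the JSON body
    body = json.dumps(
        {"plaintext": base64.b64encode(plaintext).decode("ascii")}
    ).encode("utf-8")
    return urllib.request.Request(
        url=f"{vault_addr}/v1/transit/encrypt/{key_name}",
        data=body,
        headers={"X-Vault-Token": token, "Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical address, token, and key name
req = build_encrypt_request(
    "https://vault.example.com:8200", "hvs.EXAMPLE-TOKEN", "orders", b"card=4111"
)
print(req.get_method(), req.full_url)
```

Sending it with `urllib.request.urlopen(req)` against a real server would return a ciphertext prefixed with `vault:v1:`, which the application stores instead of the plaintext; the encryption key itself never leaves Vault.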

It’s simple to update and deploy new keys across the distributed infrastructure with centralized key management.

Software like Vault is vital when deploying applications that use secrets or other sensitive data. Vault protects sensitive data through its UI, CLI, or HTTP API with high-level policy administration, secret leasing, audit logging, and automatic revocation. Because every secret has a lease attached to it, Vault can audit key usage, roll keys over, and revoke secrets automatically.
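The lease mechanism can be sketched as a small Python model: every secret gets an expiry, renewals extend it only up to a maximum TTL, and revocation invalidates it immediately. This is a hypothetical illustration of the idea, not Vault's lease manager; the lease ID and numbers are invented, and time is passed in explicitly so the behavior is deterministic.

```python
from dataclasses import dataclass


@dataclass
class Lease:
    """Hypothetical lease on a dynamic secret; times are plain seconds."""
    lease_id: str
    expires_at: float
    max_expires_at: float  # hard ceiling set by the max TTL at issue time
    revoked: bool = False

    def is_valid(self, now):
        return not self.revoked and now < self.expires_at

    def renew(self, now, increment):
        """Extend the lease by `increment`, but never past the max TTL."""
        if not self.is_valid(now):
            raise ValueError(f"lease {self.lease_id} is expired or revoked")
        self.expires_at = min(now + increment, self.max_expires_at)
        return self.expires_at

    def revoke(self):
        """Invalidate immediately, e.g. as a 'break glass' step after a breach."""
        self.revoked = True


def issue(lease_id, now, ttl, max_ttl):
    return Lease(lease_id, now + ttl, now + max_ttl)


lease = issue("database/creds/app/abc123", now=0, ttl=60, max_ttl=120)
print(lease.renew(now=50, increment=60))   # 110
print(lease.renew(now=100, increment=60))  # 120 (capped by the max TTL)
lease.revoke()
print(lease.is_valid(now=101))             # False
```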

Vault provides clear “break glass” procedures for employees in the event of a compromise through several revocation options. Alongside a consistent interface for storing secrets, it offers strong access control and a complete audit trail. It does not require any specialized hardware, such as a physical Hardware Security Module (HSM).

Understanding Vault High Availability

- Vault High Availability: Intuitive Accessibility

- Vault High Availability: Cluster Architecture

- Vault High Availability: HA Mode

Vault High Availability: Intuitive Accessibility

In production environments, secrets are typically managed with Vault, so any Vault outage can directly affect the clients that depend on it. Vault High Availability provides a highly available deployment that minimizes the impact of a machine or process failure.

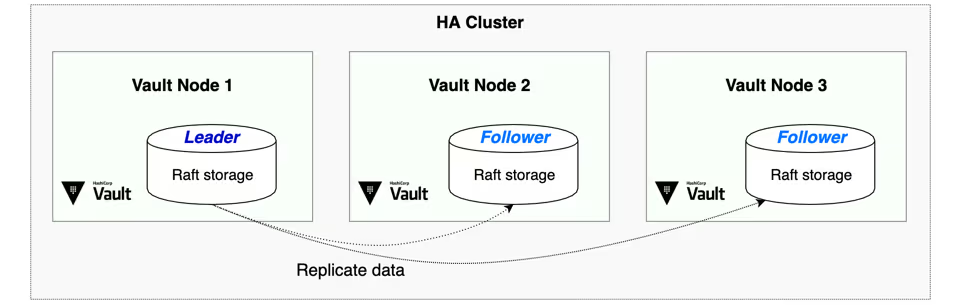

When running in HA mode, Vault servers have two additional states: standby and active. A single active node in the cluster handles all requests (reads and writes), and all standby nodes forward their requests to the active node.

Note that starting with Version 0.11, Vault Enterprise offers Performance Standby Nodes, which can handle most read-only requests and act as read replicas. High-volume Encryption as a Service (Transit Secrets Engine) workloads may benefit from this. HashiCorp’s Performance Standby tutorial and documentation cover the feature in more detail.

Vault High Availability: Cluster Architecture

The fundamental goal of this architecture is to reduce downtime by making Vault highly available (HA).

As a first step, you built a small proof of concept with Vault’s development mode and ran it locally to understand how the system works.

For HA mode, HashiCorp suggests running Vault with a multi-node Consul cluster as the storage backend. Rather than administer a Consul cluster yourself, however, you opted for a generic, managed storage backend.

MySQL on Amazon RDS was chosen as the backend database because you needed a solution that could easily be migrated to another cloud in the future.

For the final configuration, you went with EC2 instances running behind an internal Application Load Balancer (ALB). With this setup, the ALB routes requests only to the active Vault instance and treats the standby nodes as offline (as shown in the diagram, an unsealed standby node reports HTTP status 429). In this design, Vault High Availability provides availability, not extra capacity: a single Vault instance serves all requests while the others are hot standbys. If the active node fails, one of the standby nodes is automatically promoted to active.
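The ALB behavior described above relies on Vault's health endpoint, `GET /v1/sys/health`, returning a different status code for each node state. The sketch below assumes the target group's health check uses that path with a success matcher of HTTP 200 only (a configuration assumption, not something stated in this article) and shows why only the active node receives traffic. The status codes listed are Vault's defaults; the node names are hypothetical.

```python
# Default status codes returned by Vault's GET /v1/sys/health endpoint
HEALTH_STATUS = {
    200: "initialized, unsealed, and active",
    429: "unsealed and standby",
    472: "disaster recovery replication secondary and active",
    473: "performance standby",
    501: "not initialized",
    503: "sealed",
}


def alb_routes_to(status_code, healthy_codes=frozenset({200})):
    """Mimic an ALB health check whose success matcher is HTTP 200 only (assumed)."""
    return status_code in healthy_codes


def routable_targets(node_status):
    """Given {node name: health status code}, return the nodes the ALB would use."""
    return sorted(node for node, code in node_status.items() if alb_routes_to(code))


# Hypothetical three-node cluster: one active node, two unsealed standbys
cluster = {"vault-1": 200, "vault-2": 429, "vault-3": 429}
print(routable_targets(cluster))  # ['vault-1']
```

Vault also accepts query parameters such as `standbyok=true` on this endpoint to make standbys report 200; that would defeat the active-only routing used in this design, which is why the sketch treats 429 as unhealthy.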

Vault High Availability: HA Mode

The following sections describe in more depth how servers communicate with one another and how each type of request is handled. At a minimum, the requirements for redirection mode must be met for an HA cluster to function.

Server-to-Server Communication

In both modes of request handling, the active node advertises information about itself to the other nodes. Rather than travelling over the network, this information is exchanged through Vault’s encrypted storage: the active node writes it, and unsealed standby nodes read it.

With client redirection, that is the extent of server-to-server communication: the servers never connect to each other directly, and state is conveyed only through encrypted entries in the data store.

Request forwarding, by contrast, requires direct communication between the servers. To do this securely, the active node generates a new private key and a new self-signed certificate and advertises them through the encrypted data store entry.

The standby nodes use the advertised cluster address to establish TLS 1.2 connections to the active node. When a client sends a request to a standby node, the standby serializes the request and sends it over this TLS-protected channel. The active node acts on it and returns a response to the standby, which passes the response back to the requesting client.
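The forwarding round trip can be simulated in-process. In this hypothetical sketch, JSON serialization stands in for Vault's wire format and a direct method call stands in for the TLS channel, so it illustrates only the flow: client to standby, standby to active, and back again.

```python
import json


class ActiveNode:
    """Hypothetical active node with a tiny in-memory secret store."""

    def __init__(self, store):
        self.store = store

    def handle(self, request):
        if request["method"] != "GET":
            return {"status": 405, "body": None}
        body = self.store.get(request["path"])
        return {"status": 200 if body is not None else 404, "body": body}

    def serve_forwarded(self, wire_request):
        # Deserialize the forwarded request, act on it, serialize the response
        return json.dumps(self.handle(json.loads(wire_request)))


class StandbyNode:
    """Hypothetical standby that forwards every client request to the active node."""

    def __init__(self, active):
        self.active = active  # stands in for the TLS connection to the cluster address

    def handle(self, request):
        wire_response = self.active.serve_forwarded(json.dumps(request))
        return json.loads(wire_response)  # relay the active node's answer to the client


active = ActiveNode({"secret/app": {"password": "example"}})
standby = StandbyNode(active)
print(standby.handle({"method": "GET", "path": "secret/app"}))
```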

Request Forwarding

If request forwarding is enabled (the default since 0.6.2), clients can still force the fallback redirection behavior by setting the X-Vault-No-Request-Forwarding header to any non-empty value.

A successful cluster setup requires a few configuration parameters, although some of them can be determined automatically.

Redirecting Clients

If a request’s X-Vault-No-Request-Forwarding header is set to a non-empty value, the standby node redirects the client to the active node’s redirect address with a 307 status code.

This is also the fallback mechanism when request forwarding is disabled or an error occurs while forwarding a request. For that reason, every Vault High Availability (HA) setup needs a redirect address.
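Putting forwarding and redirection together, a standby's decision can be sketched as follows. This is a hypothetical Python model, not Vault's request handler: `forward` is a caller-supplied function standing in for the cluster connection, and the address is a placeholder. It forwards by default, answers with a 307 when the client sets `X-Vault-No-Request-Forwarding` to a non-empty value, and falls back to redirection if forwarding fails or is disabled.

```python
def handle_on_standby(path, headers, forward, active_api_addr, forwarding_enabled=True):
    """Hypothetical standby logic: return (status, payload) for a client request."""
    # HTTP header names are case-insensitive, so normalize before looking up
    lowered = {name.lower(): value for name, value in headers.items()}
    client_opted_out = bool(lowered.get("x-vault-no-request-forwarding"))

    if forwarding_enabled and not client_opted_out:
        try:
            return forward(path)
        except ConnectionError:
            pass  # forwarding failed: fall back to client redirection

    # 307 tells the client to repeat the same request at the active node's address
    return 307, {"Location": active_api_addr.rstrip("/") + path}


ACTIVE = "https://active.vault.example.com:8200"  # hypothetical redirect address


def forward_ok(path):
    return 200, {"data": "answered by the active node"}


def forward_broken(path):
    raise ConnectionError("cluster connection unavailable")


print(handle_on_standby("/v1/secret/app", {}, forward_ok, ACTIVE))
print(handle_on_standby("/v1/secret/app", {"X-Vault-No-Request-Forwarding": "1"}, forward_ok, ACTIVE))
```

A 307 (rather than a 302) is what lets the client replay a write with the same method and body at the active node.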

Some HA storage drivers can autodetect the redirect address, but in most cases it must be configured explicitly through the top-level api_addr setting in the configuration file. The value can also be supplied through the VAULT_API_ADDR environment variable, which takes precedence over api_addr.

The right api_addr value depends on how Vault is set up: clients may access the Vault servers either directly or through a load balancer.

In both cases, the value must be a complete URL (http:// or https://), not just an IP address and port.
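The precedence and format rules for the redirect address fit in a few lines. This hypothetical helper resolves the value a node would advertise: `VAULT_API_ADDR` from the environment wins over `api_addr` from the configuration (represented here as a plain dict rather than a parsed HCL file), and anything that is not a complete `http://` or `https://` URL is rejected. The load balancer hostname is invented.

```python
from urllib.parse import urlparse


def resolve_api_addr(config, env):
    """Return the redirect address a node advertises (hypothetical helper)."""
    # VAULT_API_ADDR in the environment takes precedence over api_addr in the file
    value = env.get("VAULT_API_ADDR") or config.get("api_addr")
    if not value:
        raise ValueError("a redirect address (api_addr) is required for HA setups")
    parsed = urlparse(value)
    # Require a full URL such as https://host:8200, not a bare host:port
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"api_addr must be a full URL, got {value!r}")
    return value


config = {"api_addr": "https://a.vault.mycompany.com:8200"}
print(resolve_api_addr(config, {}))
print(resolve_api_addr(config, {"VAULT_API_ADDR": "https://vault-lb.mycompany.com:8200"}))
```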

Direct Access

- When clients can access Vault directly, each node’s api_addr should be that node’s own address. For instance, suppose there are two Vault nodes:

- A, available at https://a.vault.mycompany.com:8200

- B, available at https://b.vault.mycompany.com:8200

- In this case, node A would set its api_addr to https://a.vault.mycompany.com:8200, while node B would set its api_addr to https://b.vault.mycompany.com:8200.

- Then, while node A is the active node, any request received by node B is redirected to node A’s api_addr at https://a.vault.mycompany.com:8200, and vice versa.

Load Balancers

Clients may initially reach one of the Vault servers through a load balancer. If the clients can also access each Vault node directly, redirection can point them straight at the nodes, so each node should be configured as described in the preceding section.

If, on the other hand, the load balancer is the only way to reach the Vault servers, the api_addr for every node should be identical: the load balancer’s address. A client that reaches a standby node will be redirected back to the load balancer, whose configuration should by then have been updated to know the address of the current leader. Because this can cause a redirect loop, it is not a recommended setup when it can be avoided.
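A small simulation shows why the load-balancer-only setup can loop. In this hypothetical model, each attempt lands wherever the load balancer sends it; because every node's `api_addr` is the load balancer, a standby's 307 simply sends the client back to the load balancer. If the load balancer keeps choosing a standby, the client gives up after a maximum number of redirects (the limit of 5 is an arbitrary assumption), and the node names are invented.

```python
from itertools import cycle


def hops_until_active(lb_choices, active_node, max_redirects=5):
    """Follow 307s back to the load balancer until the active node answers.

    lb_choices: iterator yielding the node the load balancer picks on each attempt.
    Returns the number of redirects followed, or None once max_redirects is exceeded.
    """
    for redirects in range(max_redirects + 1):
        node = next(lb_choices)
        if node == active_node:
            return redirects
        # Every node's api_addr is the load balancer, so this standby's 307
        # points the client straight back at the load balancer.
    return None


# A stale load balancer that still routes everything to a standby: redirect loop
print(hops_until_active(cycle(["vault-2"]), "vault-1"))             # None
# A load balancer that reaches the new leader on its second pick
print(hops_until_active(cycle(["vault-2", "vault-1"]), "vault-1"))  # 1
```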

Per-Node Cluster Listener Addresses

Each listener block in Vault’s configuration file has an address value on which Vault listens for requests. Similarly, each listener block can have a cluster_address on which Vault listens for server-to-server cluster requests. If this value is left blank, its IP address defaults to that of the address value and its port to the address value’s port plus one (so, by default, port 8201).

Note that only active nodes run cluster listeners: a node starts listening for cluster requests when it becomes active and stops when it switches to standby.
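The default cluster address rule is simple arithmetic on the listener address: same host, port plus one. A minimal Python sketch of that derivation, assuming the listener address is written as `host:port` (including bracketed IPv6 hosts); the helper name is hypothetical.

```python
def default_cluster_address(listener_address):
    """Same host as the listener's address, port + 1 (hypothetical helper)."""
    # Split on the last colon so bracketed IPv6 hosts like [::] stay intact
    host, sep, port = listener_address.rpartition(":")
    if not sep or not port.isdigit():
        raise ValueError(f"expected host:port, got {listener_address!r}")
    return f"{host}:{int(port) + 1}"


print(default_cluster_address("0.0.0.0:8200"))  # 0.0.0.0:8201
print(default_cluster_address("[::]:8200"))     # [::]:8201
```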

Cluster Address for Each Node

Like api_addr, cluster_addr is a top-level value in the configuration file: it is the address that each node, when active, advertises to the standbys for server-to-server communication. On each node, it should be set to a hostname or IP address, including port, that a standby can use to reach one of the cluster_address values in that node’s listener blocks. Note that the scheme is always forced to HTTPS, since only TLS connections are used between servers.

Alternatively, you can provide this value through the VAULT_CLUSTER_ADDR environment variable.
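Resolving `cluster_addr` can be sketched much like `api_addr`. This hypothetical helper assumes, by analogy with `VAULT_API_ADDR`, that `VAULT_CLUSTER_ADDR` takes precedence over the configuration value, and it forces the scheme to `https` because only TLS is used between servers. Configuration is again a plain dict, and the addresses are placeholders.

```python
from urllib.parse import urlparse


def resolve_cluster_addr(config, env):
    """Return the address this node advertises for server-to-server traffic."""
    # Assumption: like VAULT_API_ADDR, the environment variable wins over the file
    value = env.get("VAULT_CLUSTER_ADDR") or config.get("cluster_addr")
    if not value:
        return None
    parsed = urlparse(value)
    if not parsed.netloc:
        raise ValueError(f"cluster_addr must be a full URL, got {value!r}")
    # Only TLS is used between servers, so the scheme is forced to https
    return parsed._replace(scheme="https").geturl()


print(resolve_cluster_addr({"cluster_addr": "http://10.0.0.5:8201"}, {}))
```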

Storage Support

Several storage backends, including Consul and ZooKeeper, currently support high availability mode. Because this list may change over time, consult the storage configuration documentation.

For new Vault installations, HashiCorp recommends Vault Integrated Storage as the HA backend for Vault High Availability. The Consul storage backend is a viable alternative that is widely used in production. You can use the chart to help decide which option best suits your needs.

HashiCorp welcomes contributions that add new backends or extend high availability support to existing ones. Adding HA support requires implementing the physical.HABackend interface for the storage backend.
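HA support in a storage backend boils down to a shared lock that nodes contend for: whichever node holds it is active, and every other node stays on standby until the lock is released. Vault's real `physical.HABackend` interface is written in Go; the following is only a conceptual Python analog with a hypothetical in-memory backend, showing election and failover.

```python
import threading


class InMemoryHABackend:
    """Hypothetical stand-in for a storage backend that offers an HA lock."""

    def __init__(self):
        self._mutex = threading.Lock()  # guards the holder field itself
        self._holder = None

    def try_lock(self, node_id):
        """Acquire the HA lock if it is free; return True if node_id holds it."""
        with self._mutex:
            if self._holder is None:
                self._holder = node_id
            return self._holder == node_id

    def unlock(self, node_id):
        """Release the lock, but only if node_id is the current holder."""
        with self._mutex:
            if self._holder == node_id:
                self._holder = None


def elect(backend, node_ids):
    """Every node tries the lock; the holder is active, the rest are standby."""
    return {node: "active" if backend.try_lock(node) else "standby" for node in node_ids}


backend = InMemoryHABackend()
print(elect(backend, ["vault-1", "vault-2", "vault-3"]))
# vault-1 fails and its lock is released, so a standby takes over
backend.unlock("vault-1")
print(elect(backend, ["vault-2", "vault-3"]))
```

In a real deployment the lock lives in the shared storage backend rather than in process memory, so a standby on another machine can take it over when the active node goes away.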

Scalability

As more teams and applications use Vault High Availability, you need a way to increase the system’s capacity. Horizontal scaling isn’t a viable option, because adding nodes to the cluster only adds more standby nodes, which forward requests rather than serve them. In addition, because Performance Standby Nodes are available only in Vault Enterprise, you cannot use standby nodes to serve read-only requests.

Because Vault is more often constrained by the storage backend’s I/O limits than by its computing needs, it’s important to consider increasing the storage backend’s I/O capacity.

Since you are running MySQL on RDS in Multi-AZ mode, you are confident in the scalability of the backend. You can also raise the open-file limit and add RAM to the Vault nodes so that they can handle more requests.

Conclusion

This article covered High Availability in HashiCorp Vault in detail, along with a brief introduction to HashiCorp Vault itself.

Hevo Data, a no-code data pipeline, provides a consistent and reliable way to manage data transfer between a wide variety of sources and destinations with a few clicks. With its strong integration with 100+ sources (including 40+ free sources), Hevo Data lets you not only export data from your desired sources and load it into the destination of your choice, but also transform and enrich it to make it analysis-ready, so that you can focus on your key business needs and perform insightful analysis using BI tools.

Want to take Hevo for a spin?

Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

FAQ

1. What is HashiCorp Vault?

HashiCorp Vault is a tool designed to securely store and access secrets, such as API keys, passwords, and certificates.

2. What is Vault High Availability?

Vault High Availability ensures continuous accessibility of secrets and secure data even if one server node fails.

3. Why is High Availability important in Vault?

High Availability minimizes downtime, enhances reliability, and ensures data remains accessible during server failures.