Data Challenges

DocOn was founded in 2015 with the aim of providing technology solutions to improve the Doctor-Patient experience in an Out-Patient Department (OPD) and help them transition from a pen-paper state to a fully digitized clinic. Initially, as their data volume was low, Navneet and the team were just using Google Sheets for data aggregation and reporting. Even they were not using a data warehouse and all their data was stored in MongoDB in the unstructured form.

Most of our use cases were on MongoDB, and querying MongoDB was a difficult task. Writing a basic query on MongoDB used to take a lot of time and also there were multiple limitations as the complex joins and stored procedures weren’t possible. To move our data from MongoDB to Google Sheets, we had to take a lot of help from our backend engineering team and it was impacting our product development.

To create insightful business reports for various stakeholders, business analysts at DocOn were struggling to get data beyond L0-metrics, many times they had to just rely on customer feedback and they used to take almost 2 to 3 days for creating a single report.

The absence of a data warehouse and limitations of the MongoDB database led to computational complexities as well as slow turnaround time on business reporting. As our business grew, our business teams started asking for more data points for analyzing multiple use cases. To provide data beyond L0 metrics, it was important for us to move away from the Google Sheets setup and implement a concrete data engineering process.

The Solution

As the volume of data grew, the data & analytics team at DocOn realized the importance of automating their entire data integration process and having a data warehouse that can easily be used for querying any data for business reporting.

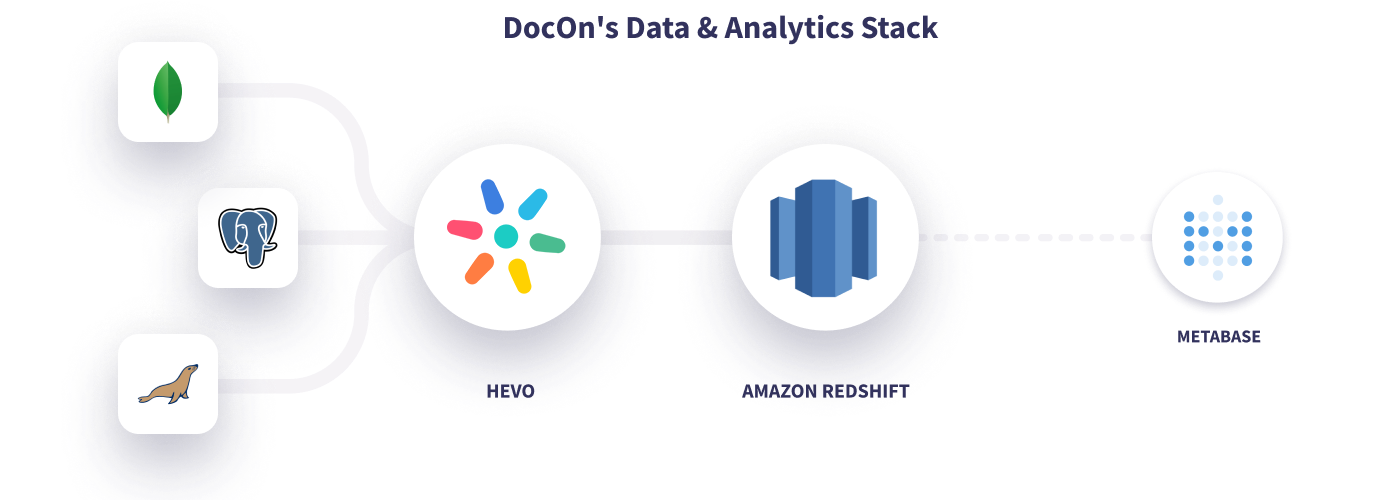

DocOn’s technology stack was already on AWS, hence Navneet and his team decided to set up their data warehouse on Amazon Redshift. After successfully setting up the warehouse, they started focusing on building the data engineering stack. They were primarily looking for a data integration tool that can help them connect and transform their unstructured MongoDB data into a structured form and store it in Redshift.

After trying Alooma and Hevo for about 2 weeks, we decided to go ahead with Hevo. We were highly impressed with some of the features like advanced transformations, real-time data flow, end-to-end data encryption, and Replay Queue. As we deal with healthcare data, we have to be very cautious about selecting the right tool that can help us comply with the industry data standards. We were highly impressed with Hevo as it meets all our expectations.

Using Hevo, DocOn streamlined their data integration process and easily created a single source of truth for their entire business data. They connected MongoDB, PostgreSQL, and MariaDB with their Redshift data warehouse. DocOn uses Metabase as its primary reporting tool which pulls the required data directly from Redshift.

Hevo has immensely helped us in simplifying our data engineering process. It smartly catches all the exceptions that arise while transforming or mapping the data from source to destination, stores that in the Replay Queue, and notifies us via slack and email. This way we never lose our data and spend negligible time in maintaining our data pipelines.

Key Results

After implementing Hevo, Navneet and Animesh were able to generate their entire data at the production layer without any latency and it was easily made available to all the business teams for reporting and analytics. Switching to this new data engineering stack has helped DocOn significantly reduce their reporting turnaround time from 2 days to 30 mins.

Creating and maintaining data pipelines on Hevo is super easy. After setting up our data stack, we were able to aggregate our data in the data warehouse from day 1 and the data accuracy was well above 99%. This has helped us bring more data visibility within the team and catalyze a critical ‘data-first’ paradigmatic shift in the company.

With Hevo, we were able to process over 50 million events every month and it saved almost 90 hours of our engineering efforts. Our business teams were able to track performance beyond L0-metrics across multiple use cases and that helped us make better business decisions and improve customer retention.

After using Hevo for more than 2 years, Navneet, Animesh, and their entire business team were extremely happy with the data quality and data security. They found Hevo to be the most Robust, Reliable & Efficient

tool for creating data pipelines.

Excited to see Hevo in action and understand how a modern data stack can help your business grow? Sign up for our 14-day free trial or register for a personalized demo with our product expert.