When it comes to migrating data from MongoDB to PostgreSQL, I’ve had my fair share of trying different methods and even making rookie mistakes, only to learn from them. The migration process can be relatively smooth if you have the right approach, and in this blog, I’m excited to share my tried-and-true methods with you to move your data from MongoDB to PostgreSQL.

I’ll walk you through two methods: a manual method for more granular control, and Hevo’s automated pipeline for a faster and simpler approach. Choose the one that works for you. Let’s begin!


What is MongoDB?

MongoDB is a modern, document-oriented NoSQL database designed to handle large amounts of rapidly changing, semi-structured data. Unlike traditional relational databases that store data in rigid tables, MongoDB uses flexible JSON-like documents with dynamic schemas, making it an ideal choice for agile development teams building highly scalable and available internet applications.

At its core, MongoDB features a distributed, horizontally scalable architecture that allows it to scale out easily across multiple servers as data volumes grow. Data is stored in flexible, self-describing documents instead of rigid tables, enabling faster iteration of application code.

You can also take a look at MongoDB’s key use cases to get a better understanding of how it works.

Ditch the manual process of writing long commands to connect MongoDB to PostgreSQL and choose Hevo’s no-code platform to streamline your data migration.

With Hevo:

- Easily migrate different data types like CSV, JSON, etc.

- Choose from 150+ connectors like PostgreSQL and MongoDB (including 60+ free sources).

- Eliminate the need for manual schema mapping with the auto-mapping feature.

Experience Hevo and see why 2000+ data professionals, including customers such as Thoughtspot, Postman, and many more, have rated us 4.3/5 on G2.

What is PostgreSQL?

PostgreSQL is a powerful, open-source object-relational database system that has been actively developed for over 35 years. It combines SQL capabilities with advanced features to store and scale complex data workloads safely.

One of PostgreSQL’s core strengths is its proven architecture focused on reliability, data integrity, and robust functionality. It runs on all major operating systems, has been ACID-compliant since 2001, and offers powerful PostgreSQL extensions like the popular PostGIS for geospatial data.

What are the Benefits of Migrating MongoDB Data to PostgreSQL?

- Enhanced Data Consistency: Synchronizing data ensures consistency across MongoDB and PostgreSQL, eliminating discrepancies that could affect analytics, reporting, or application performance.

- Improved Data Availability: Real-time replication provides up-to-date data in both databases, ensuring uninterrupted access to critical information for applications and users.

- Real-Time Decision-Making: By maintaining synchronized data, organizations can enable real-time analytics and faster decision-making, which is critical for dynamic business environments.

- Scalability: Synchronization supports distributed systems, allowing businesses to scale operations effectively by leveraging the strengths of both databases—MongoDB’s document-based architecture and PostgreSQL’s relational capabilities.

- Operational Efficiency: With synchronized databases, teams can optimize workflows, streamline ETL processes, and reduce redundancy in data management.

- Enhanced Security: Synchronization tools often come with built-in encryption and access controls, ensuring secure data transfer between databases.

Also, you can check out how you can migrate data from MongoDB to MySQL easily and explore other destinations where you can sync your MongoDB data.

Differences between MongoDB & PostgreSQL

I have found that MongoDB is a distributed database that excels in handling modern transactional and analytical applications, particularly for rapidly changing and multi-structured data. On the other hand, PostgreSQL is an SQL database that provides all the features I need from a relational database.

- Data Model: MongoDB uses a document-oriented data model, but PostgreSQL uses a table-based relational model.

- Query Language: MongoDB uses its own JSON-style query syntax, but PostgreSQL uses SQL.

- Scaling: MongoDB scales horizontally through sharding, but PostgreSQL scales vertically on powerful hardware.

- Community Support: PostgreSQL has a large, mature community, but MongoDB’s is still growing.

If you want a deeper comparison, check out this blog: MongoDB vs PostgreSQL

Method 1: Manually Migrating MongoDB Data To PostgreSQL

To manually transfer data from MongoDB to PostgreSQL, you can follow a straightforward ETL (Extract, Transform, Load) approach. Here’s how you can do it:

Prerequisites and Configurations

- MongoDB Version: For this demo, I am using MongoDB version 4.4.

- PostgreSQL Version: Ensure you have PostgreSQL version 12 or higher installed.

- MongoDB and PostgreSQL Installation: Both databases should be installed and running on your system.

- Command Line Access: Make sure you have access to the command line or terminal on your system.

- CSV File Path: Ensure the CSV file path specified in the COPY command is accurate and accessible from PostgreSQL.

Step 1.1: Extract the Data from MongoDB

First, I use the mongoexport utility to export data from MongoDB, making sure the exported data is in CSV format. Here’s the command I run from a terminal:

mongoexport --host localhost --db bookdb --collection books --type=csv --out books.csv --fields name,author,country,genre

This command will generate a CSV file named books.csv. It assumes that I have a MongoDB database named bookdb with a books collection and the specified fields.
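When more than one collection has to be exported, the same command can be assembled in a script instead of being typed by hand. The following is a minimal sketch, not a definitive tool: the helper name `build_mongoexport_args` and the second collection `authors` with its fields are hypothetical, and it uses only the flags that appear in the command above. It builds and prints the commands without running them.

```python
import shlex

def build_mongoexport_args(db, collection, fields, host="localhost"):
    """Return the mongoexport argument list for one collection (CSV output)."""
    return [
        "mongoexport",
        "--host", host,
        "--db", db,
        "--collection", collection,
        "--type=csv",
        "--out", f"{collection}.csv",
        "--fields", ",".join(fields),
    ]

# Hypothetical export plan: collection name -> fields to export.
EXPORT_PLAN = {
    "books": ["name", "author", "country", "genre"],
    "authors": ["name", "country"],  # assumed collection, for illustration only
}

commands = [
    build_mongoexport_args("bookdb", collection, fields)
    for collection, fields in EXPORT_PLAN.items()
]

for args in commands:
    # shlex.join gives a shell-safe string for review or logging.
    # To execute, pass the list itself to subprocess.run(args, check=True).
    print(shlex.join(args))
```

Keeping the arguments as a list rather than a single string avoids quoting problems if a collection or field name ever contains a space.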

Step 1.2: Create the PostgreSQL Table

Next, I create a table in PostgreSQL that mirrors the structure of the data in the CSV file. Here’s the SQL statement I use to create a corresponding table:

CREATE TABLE books (
    id SERIAL PRIMARY KEY,
    name VARCHAR NOT NULL,
    author VARCHAR NOT NULL,
    country VARCHAR NOT NULL,
    genre VARCHAR NOT NULL
);

This table structure matches the fields exported from MongoDB, with an additional auto-incrementing id column as the primary key.

Step 1.3: Load the Data into PostgreSQL

Finally, I use the PostgreSQL COPY command to import the data from the CSV file into the newly created table. Here’s the command I run:

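COPY books(name,author,country,genre)
FROM 'C:/path/to/books.csv' DELIMITER ',' CSV HEADER;

This command loads the data into the PostgreSQL books table. The HEADER option tells COPY to skip the first line of the file; values are assigned to the listed columns by position, so the column list must follow the same order as the fields in the CSV. COPY reads the file on the database server, so the path must be readable by the PostgreSQL server process. If the file lives on your client machine, psql’s \copy meta-command accepts the same options.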
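Because COPY assigns values to columns by position rather than by header name, a swapped column in the CSV would load silently into the wrong field. A quick pre-flight check can catch that. The sketch below is illustrative only: the function name `check_csv_header` is my own, and the sample rows are made-up stand-ins for books.csv so the example runs without a file.

```python
import csv
import io

def check_csv_header(csv_file, expected_columns):
    """Compare the first CSV row with the column list used in COPY.

    Returns a list of human-readable problems; an empty list means the
    header matches the expected columns in the same order.
    """
    reader = csv.reader(csv_file)
    header = next(reader, None)
    if header is None:
        return ["file is empty"]
    problems = []
    if len(header) != len(expected_columns):
        problems.append(
            f"expected {len(expected_columns)} columns, found {len(header)}"
        )
    pairs = zip(header, expected_columns)
    for position, (found, expected) in enumerate(pairs, start=1):
        if found.strip() != expected:
            problems.append(
                f"column {position}: expected {expected!r}, found {found!r}"
            )
    return problems

COPY_COLUMNS = ["name", "author", "country", "genre"]

# Made-up sample data standing in for the exported file.
good = io.StringIO("name,author,country,genre\nBook A,Author A,Country A,Genre A\n")
swapped = io.StringIO("name,country,author,genre\nBook A,Country A,Author A,Genre A\n")

print(check_csv_header(good, COPY_COLUMNS))
print(check_csv_header(swapped, COPY_COLUMNS))
```

With a real export, open the file with `open("books.csv", newline="", encoding="utf-8")` and pass the file object in place of the in-memory sample.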

Pros and Cons of the Manual Method

Pros:

- It’s easy to perform migrations for small data sets.

- I can use the existing tools provided by both databases without relying on external software.

Cons:

- The manual nature of the process can introduce errors.

- For large migrations with multiple collections, this process can become cumbersome quickly.

- It requires expertise to manage effectively, especially as the complexity of the requirements increases.

Method 2: Using Third-Party Tools Like Hevo Data

As someone who has leveraged Hevo Data for migrating between MongoDB and PostgreSQL, I can attest to its efficiency as a no-code ELT platform. What stands out for me is the seamless integration with transformation capabilities and auto schema mapping. Let me walk you through the easy 2-step process:

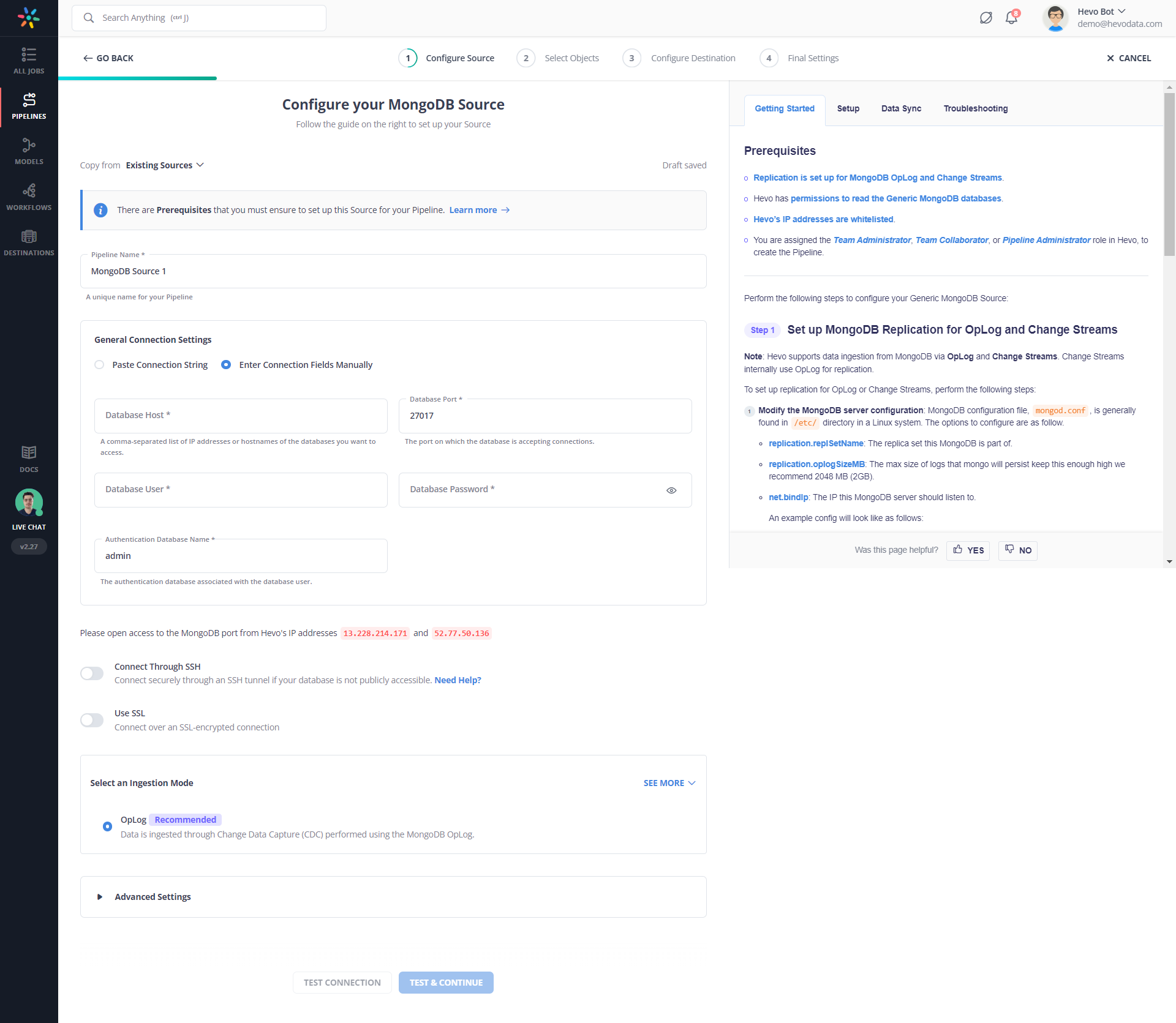

Step 2.1: Configure MongoDB as your Source

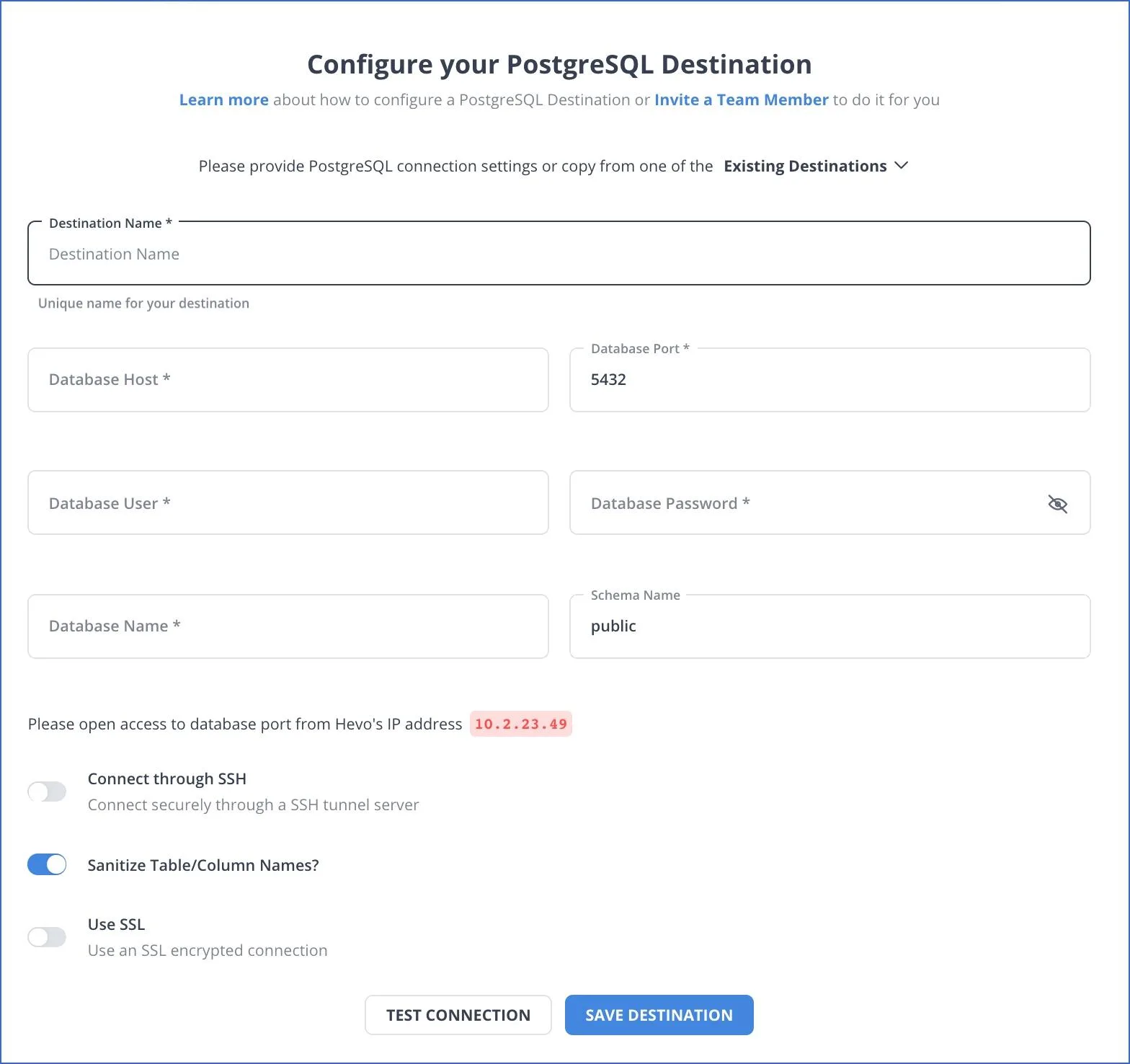

Step 2.2: Setup PostgreSQL as your Destination

You have successfully synced your data between MongoDB and PostgreSQL. It is that easy!

If you need to move data in the opposite direction, check out how you can Sync Data from PostgreSQL to MongoDB to load data into MongoDB.

Performance Monitoring and Verification Post-Migration

Ensuring a smooth transition during data migration involves not only moving data but also maintaining its accuracy, performance, and reliability in the new environment. Here are key aspects to focus on:

1. Real-Time Monitoring and Error Handling

- Why It Matters: Real-time monitoring helps identify bottlenecks and errors during the migration process, ensuring issues are addressed promptly.

- Best Practices: Utilize tools that provide detailed logs and alert mechanisms for migration tasks, allowing for quick error resolution.

2. Verifying Data Integrity

- Why It Matters: Ensuring that the migrated data matches the source data is crucial for maintaining trust in the system and supporting decision-making.

- Action Steps:

- Perform row-level and column-level data comparisons.

- Use checksum or hash functions to validate data consistency.

- Conduct spot checks for high-priority data.
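The checksum step above can be sketched in a few lines. This is one possible approach under stated assumptions, not a prescribed method: it assumes both sides have already been read into dictionaries keyed by column name (for example from a MongoDB cursor and a PostgreSQL query), and the function names `row_digest` and `dataset_fingerprint` are hypothetical. The sample rows are made up.

```python
import hashlib

def row_digest(row, columns):
    """Hash one record using a fixed column order and explicit separators."""
    hasher = hashlib.sha256()
    for column in columns:
        value = row.get(column)
        # Encode a missing/None value differently from an empty string.
        token = "\x00NULL" if value is None else str(value)
        hasher.update(token.encode("utf-8"))
        hasher.update(b"\x1f")  # unit separator between fields
    return hasher.hexdigest()

def dataset_fingerprint(rows, columns):
    """Return (row count, order-independent digest) for an iterable of dict rows."""
    # Sorting the per-row digests makes the result independent of row order
    # while still being sensitive to duplicated or missing rows.
    digests = sorted(row_digest(row, columns) for row in rows)
    combined = hashlib.sha256("".join(digests).encode("ascii")).hexdigest()
    return len(digests), combined

COLUMNS = ["name", "author", "country", "genre"]

# Made-up rows standing in for the source and the migrated target.
mongo_rows = [
    {"name": "Book A", "author": "Author A", "country": "Country A", "genre": "Genre A"},
    {"name": "Book B", "author": "Author B", "country": "Country B", "genre": "Genre B"},
]
postgres_rows = list(reversed(mongo_rows))  # same data, different order

print(dataset_fingerprint(mongo_rows, COLUMNS) == dataset_fingerprint(postgres_rows, COLUMNS))
```

Note that values are compared through `str()`, so an integer 1 and the text "1" produce the same digest. If type fidelity matters, include the type name in the token.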

3. Post-Migration Performance Adjustments

- Why It Matters: Migrated systems often require tuning to achieve optimal performance in the new environment.

- Action Steps:

- Monitor query execution times and resource usage.

- Adjust indexing, caching, and partitioning strategies.

- Leverage performance monitoring tools to identify and resolve inefficiencies.

Best Practices For a Seamless Migration

- Data Compatibility Assessment: Differences between MongoDB’s schema-less JSON-compatible storage and PostgreSQL’s relational schema should be reviewed in order to conduct transformations appropriately.

- Schema Definition: Define the PostgreSQL schema deliberately, with relational constraints, indexes, and appropriate normalization, so that it supports both data integrity and performance.

- Use ETL Tools: Leverage ETL tools like Hevo Data, Talend, or custom scripts to carry out suitable data transformation and migration.

- Batch Data Migration: Migrate large amounts of data in batches for better performance and error recovery.

- Indexing and Performance Tuning: Optimize indexes, queries, and partitioning in PostgreSQL for better performance post-migration.

- Data Validation: Compare record counts, data types, and integrity between MongoDB and PostgreSQL to validate the completed migration.

- Handle Nested Data: MongoDB’s nested documents should be flattened or restructured into relational tables, or PostgreSQL’s JSON/JSONB can be used to carry semi-structured data.
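Two of the practices above, flattening nested documents and migrating in batches, lend themselves to a short illustration. The sketch below is a simplified example under my own assumptions: the function names `flatten_document` and `batched`, the underscore separator, and the sample documents are all hypothetical. Lists are deliberately left untouched, since whether they become a child table or a JSONB column is a schema decision.

```python
from itertools import islice

def flatten_document(document, parent_key="", separator="_"):
    """Flatten nested dicts into a single-level dict with joined keys.

    Lists are left as-is; they usually belong in a child table or a
    JSONB column, which this sketch does not decide for you.
    """
    flat = {}
    for key, value in document.items():
        full_key = f"{parent_key}{separator}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten_document(value, full_key, separator))
        else:
            flat[full_key] = value
    return flat

def batched(items, batch_size):
    """Yield lists of at most batch_size items from any iterable."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch

# Made-up documents with a nested "author" sub-document.
documents = [
    {"name": "Book A", "author": {"name": "Author A", "country": "Country A"}},
    {"name": "Book B", "author": {"name": "Author B", "country": "Country B"}},
    {"name": "Book C", "author": {"name": "Author C", "country": "Country C"}},
]

rows = (flatten_document(doc) for doc in documents)
for batch in batched(rows, 2):
    # Each batch would be written to PostgreSQL in one transaction,
    # so a failure only requires retrying that batch.
    print(len(batch), sorted(batch[0]))
```

Because `rows` is a generator, documents are flattened lazily and only one batch is held in memory at a time.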

What’s your pick?

When deciding how to migrate your data from MongoDB to PostgreSQL, the choice largely depends on your specific needs, technical expertise, and project scale.

- Manual Method: If you prefer granular control over the migration process and are dealing with smaller datasets, the manual ETL approach is a solid choice. This method allows you to manage every step of the migration, ensuring that each aspect is tailored to your requirements.

- Hevo Data: If simplicity and efficiency are your top priorities, Hevo Data’s no-code platform is perfect. With its seamless integration, automated schema mapping, and real-time transformation features, Hevo Data offers a hassle-free migration experience, saving you time and reducing the risk of errors.

Conclusion

Migrating data from MongoDB to PostgreSQL can be seamless with the right approach. Whether you opt for the manual ETL method for greater control or Hevo’s automated no-code platform for efficiency, planning, schema mapping, and performance optimization are key. By ensuring data integrity, monitoring post-migration performance, and following best practices, you can unlock PostgreSQL’s full potential.

Want to simplify your data migration? Sign up for a free trial of Hevo today and experience effortless, automated data transfers!

FAQ

1. How to convert MongoDB to Postgres?

Step 1: Extract Data from MongoDB using the mongoexport Command.

Step 2: Create a Table in PostgreSQL to Hold the Incoming Data.

Step 3: Load the Exported CSV from MongoDB to PostgreSQL.

2. Is Postgres better than MongoDB?

Choosing between PostgreSQL and MongoDB depends on your specific use case and requirements.

3. How to sync MongoDB and PostgreSQL?

Syncing data between MongoDB and PostgreSQL typically involves implementing an ETL process or using specialized tools like Hevo, Stitch, etc.

4. How to transfer data from MongoDB to SQL?

1. Export Data from MongoDB

2. Transform Data (if necessary)

3. Import Data into SQL Database

4. Handle Data Mapping