With 2024 nearing its end, it is time to examine the trends and advancements that have shaped data engineering this year. If you’re like me, you’ve been riding the data wave, watching tools, technologies, and strategies evolve at breakneck speed. If there’s one thing I’ve learned as a data enthusiast, it’s that this field never stops evolving. New technologies, shifting priorities, and ever-expanding possibilities make data engineering one of the most exhilarating professions today, and this year brought some remarkable trends that are reshaping how we think about and handle data.

From the glitzy buzzwords like GenAI and LLMs to the underappreciated heroes like FinOps and Data Governance, there’s a lot to unpack. So, let’s dive into the biggest data engineering trends of 2024 and see what the experts have to say about them.

1. Data Consolidation: Combining Everything

Raise your hand if your organization is also drowning in a sea of siloed data! Yeah, I thought so. Organizations today have data flowing in from many sources, and consolidating that data into one destination is crucial.

Recent surveys have estimated that US enterprises, on average, access over 400 data sources in their operations; furthermore, 20% of these enterprises rely on over 1,000 sources, emphasizing the need for a comprehensive data consolidation management plan.

Why was it trending in 2024?

- Teams waste less time stitching together datasets.

- Real-time analytics becomes more of a reality.

My take on Data Consolidation?

Consolidation is like cleaning out a messy garage. It’s boring at first, but when you’re done, you feel unstoppable. You should integrate disparate systems into unified platforms, ensuring consistency and accessibility. Platforms like Snowflake, Databricks, and BigQuery dominate this space, making it easier to merge transactional and analytical data into a single source of truth.
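To make "a single source of truth" a bit more concrete, here is a minimal sketch of what consolidation looks like in miniature. Everything in it is an assumption for illustration: the two source systems (a CRM export and a billing export), their field names, and the rule of keeping the first record seen per email. Real platforms do this at scale with SQL and connectors, not hand-written Python.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    """Unified record shape; the field names are hypothetical."""
    customer_id: str
    email: str
    source: str


def from_crm(row: dict) -> Customer:
    # The hypothetical CRM export uses "id" and "Email".
    return Customer(str(row["id"]), row["Email"].strip().lower(), "crm")


def from_billing(row: dict) -> Customer:
    # The hypothetical billing export uses "cust_no" and "contact_email".
    return Customer(str(row["cust_no"]), row["contact_email"].strip().lower(), "billing")


def consolidate(crm_rows: list, billing_rows: list) -> list:
    """Map both sources to one schema, keeping the first record seen per email."""
    merged = {}
    unified = [from_crm(r) for r in crm_rows] + [from_billing(r) for r in billing_rows]
    for record in unified:
        merged.setdefault(record.email, record)
    return list(merged.values())


crm = [{"id": 1, "Email": "Ana@Example.com"}, {"id": 2, "Email": "bo@example.com"}]
billing = [
    {"cust_no": "B-9", "contact_email": "ana@example.com "},
    {"cust_no": "B-10", "contact_email": "cy@example.com"},
]

for customer in consolidate(crm, billing):
    print(customer)
```

The point of the sketch is the shape of the work: normalize each source into one schema first, then deduplicate on a shared key. The "first record wins" rule is only one possible choice; a real pipeline would need an explicit survivorship policy.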

According to a report by Forrester, 55% of IT professionals report data silos as a significant hurdle in achieving consolidation goals, and over 73% of organizations aim to consolidate data sources by 2025.

2. Snowflake: A Rising Star in Data Warehousing

Snowflake is the name in data engineering, and 2024 proved that. What started as a cloud data warehouse has evolved into a full-blown ecosystem. If you’re not using Snowflake yet, you’ve probably been hearing about it at every conference and meeting.

This year, Snowflake is getting even cooler (pun intended). They’re enhancing Snowpark’s developer environment to make working with data pipelines a breeze. Their unstructured data support means you can do even more with video, images, and logs.

Why was it trending in 2024?

- Snowflake heavily invested in enabling AI-driven applications. The platform experienced a surge in adopting AI-friendly tools like Python (571% growth) and witnessed over 33,000 AI applications being developed, emphasizing its role in the AI ecosystem.

- You can now build apps inside Snowflake itself, which is perfect for simplifying workflows.

My take on Snowflake?

Snowflake has not only refined its own product plans but has also read the trends shaping the future of enterprise data, and it continues to innovate to shape that future. Recent additions include Horizon, its built-in governance and discovery layer, and Polaris Catalog, an open catalog for Apache Iceberg tables. You can also power data engineering for AI and ML, apps, and analytics, with a reported 4.6x faster performance while maintaining full governance and control. The Snowflake ecosystem is so well-rounded now that it’s hard to find a reason not to use it.

According to the Snowflake Data Trends for 2024, over 9,000 enterprises globally utilize the Snowflake Data Cloud, demonstrating its widespread adoption for advanced data operations.

Looking for the best ETL tools to connect your Snowflake account? Rest assured, Hevo’s no-code platform seamlessly integrates with Snowflake to streamline your ETL process. Try Hevo and equip your team to:

- Integrate data from 150+ sources (60+ free sources).

- Simplify data mapping with an intuitive, user-friendly interface.

- Instantly load and sync your transformed data into Snowflake.

Choose Hevo and see why Deliverr says: “The combination of Hevo and Snowflake has worked best for us.”

3. Streaming Data: Real-Time is the Prime Time

Batch processing is still one of the most effective ways to move data, but 2024 was all about streaming data, whether that means tracking user actions, stock prices, or IoT sensor readings. Teams have too much to do and too little time to wait, so the need for faster, real-time analytics to support decision-making is very apparent.

This is where streaming data platforms like Apache Flink and Hevo come in. These tools offer faster analysis and real-time data synchronization. Streaming data feels like having a live feed into the pulse of your business. It’s dynamic, exciting, and just a little addictive. Once you get a taste of real-time insights, there’s no going back to the slow version.

Why was it Trending in 2024?

- Immediate insights mean faster decision-making and improved business operations.

- The availability of scalable cloud platforms and Data-as-a-Service (DaaS) models makes it easier for businesses of all sizes to handle and derive value from real-time data.

My Take on Streaming Data?

Streaming data is like the caffeine boost your data pipelines need—instant energy and action. It’s a must-have if your business needs to react quickly, but it can get pricey if not managed well. Imagine a retail company dynamically adjusting inventory based on real-time sales data or a healthcare provider spotting anomalies in patient vitals as they happen. That’s the power of streaming. Yes, it comes with complexities (and costs), but the benefits far outweigh the challenges.
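Here is a rough sketch of the "spotting anomalies in patient vitals as they happen" idea. It assumes a simple rolling-window z-score rule, which is my own illustrative choice, not how any particular streaming platform works; the window size, threshold, and sample heart-rate readings are all made up.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Yield (index, value) for readings far from the recent rolling mean."""
    recent = deque(maxlen=window)  # only the last `window` readings are kept
    for index, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), stdev(recent)
            # Flag a reading more than `threshold` standard deviations from the window mean.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield index, value
        recent.append(value)


# Hypothetical heart-rate stream with one obvious spike.
heart_rate = [72, 74, 71, 73, 72, 75, 73, 140, 74, 72]
print(list(detect_anomalies(heart_rate)))  # [(7, 140)]
```

Because the function is a generator over any iterable, each reading is judged the moment it arrives using only a small fixed-size buffer. That is the essential difference from a batch job that waits for the whole dataset.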

According to a report by Markets and Markets, the streaming analytics market is anticipated to grow from USD 29.53 billion in 2024 to USD 125.85 billion in 2029, at a CAGR of 33.6% during the forecast period.
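If you want to sanity-check growth figures like these yourself, the compound annual growth rate is a one-line formula. The sketch below plugs in the two market sizes quoted above; the function name `cagr` is just my own label.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: USD 29.53 billion (2024) to USD 125.85 billion (2029).
rate = cagr(29.53, 125.85, years=5)
print(f"{rate:.1%}")
```

Over the five years from 2024 to 2029 this works out to roughly 33.6% per year, which lines up with the figure in the report.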

4. FinOps: Making Cost Optimization a Breeze

Okay, let’s talk about money. FinOps, or Financial Operations, became a must-know in 2024. We have already established that we are drowning in a sea of data, and managing that much data gets expensive fast. FinOps is like bringing accountants and engineers together to ensure every dollar spent on the cloud delivers value.

Why was it trending in 2024?

- As companies grew their cloud infrastructure, especially with the rise of AI/ML and newer technologies such as containers and serverless, managing and optimizing cloud spend became imperative.

- Constrained budgets made controlling cloud waste and ensuring optimal resource utilization top-of-mind for companies, helped along by improvements to reserved instances and savings plans.

My Take on FinOps?

FinOps is highly beneficial to organizations adopting cloud services and wishing to manage their cloud expenditure effectively. Trust me—FinOps isn’t just about saving money; it’s about using your resources effectively. And hey, who doesn’t like having a little extra cash to reinvest in shiny new tools?
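To show what "using your resources effectively" can mean day to day, here is a small sketch that flags underused resources and adds up what they cost. The resource names, prices, and the 10% CPU cutoff are all hypothetical; in practice these numbers would come from your cloud provider's billing and monitoring exports.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    monthly_cost_usd: float
    avg_cpu_utilization: float  # fraction between 0.0 and 1.0


def find_waste(resources, min_utilization=0.10):
    """Return resources whose average CPU use falls below the cutoff."""
    return [r for r in resources if r.avg_cpu_utilization < min_utilization]


# Hypothetical fleet; names and costs are invented for illustration.
fleet = [
    Resource("etl-worker-1", 420.0, 0.62),
    Resource("staging-db", 310.0, 0.04),
    Resource("legacy-api", 150.0, 0.02),
]

idle = find_waste(fleet)
potential_savings = sum(r.monthly_cost_usd for r in idle)
print([r.name for r in idle], potential_savings)
```

A report like this is only a starting point for a conversation between finance and engineering: a low-CPU database may still be critical, so the output is a list of candidates to review, not a list of things to delete.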

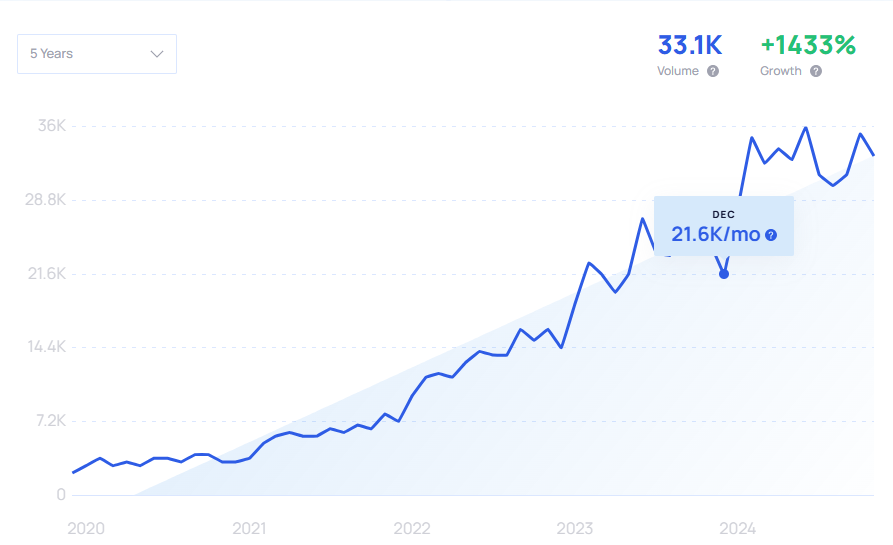

This graph shows how many people searched for “FinOps” from 2020 to 2024. The number went up to 36k/mo in June.

5. Data Lakes and Lakehouses: From Swamps to Smart Lakes

Data Lakes have been known to us for a while, but let’s be honest: they weren’t that helpful to any of us until the concept of Data Lakehouse came to light. A Data Lakehouse is an architectural approach that integrates the capabilities of data lakes and data warehouses.

Scalable storage solutions like Azure Databricks, Amazon S3, Snowflake, etc., have become a go-to choice for users. Many papers, like one by Michael Armbrust, have argued that the data warehouse architecture as we know it today will wither in the coming years and be replaced by a new architectural pattern, the Lakehouse.

Why was it trending in 2024?

- Increased demand is attributed to unlimited scalability and flexibility.

- They handle structured and unstructured data, so you can store everything from transaction logs to video files.

- Rising demand from data scientists, data developers, and business analysts.

My Take on Data Lakes and Lakehouses?

With the rise of data lakes and lakehouses, businesses don’t have to choose between flexibility and performance. In 2025, modern data lakes will incorporate advanced features such as cataloging, lineage tracking, and automated data quality checks, ensuring they are valuable to organizations looking for scalable data storage combined with data governance and management.

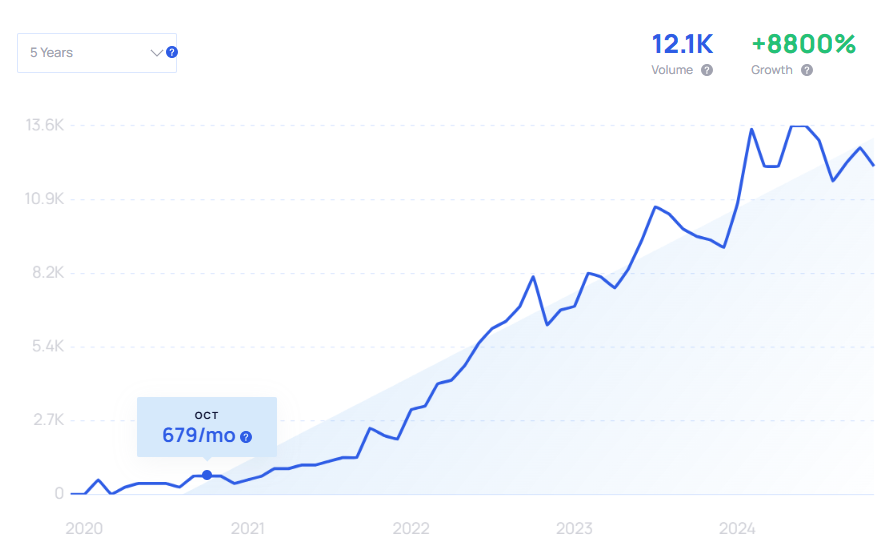

This graph shows how many people searched for “Data Lakehouse” from 2020 to 2024. The global search volume increased to 12.1k/mo in November, with +8800% growth from 2020 to 2024.

According to a report by Research and Markets, the data lake market grew from USD 10.02 billion in 2023 to USD 12.12 billion in 2024. It is expected to grow at a CAGR of 21.38%, reaching USD 38.90 billion by 2030.

6. GenAI: Meet Your New Data Superhero

Generative AI is the talk of the town. It is best known for generating text, images, and code, but in the data world, it’s transforming workflows. It can automate ETL processes, design schemas, and even debug pipelines. The biggest promise of GenAI is that it makes technology available to less technical users as well.

OpenAI’s release of ChatGPT and then GPT-4, Meta’s decision to open source Llama and Llama 2, and a host of other announcements and innovations around the application of advanced AI have stirred more excitement and driven real progress in the development and enterprise adoption of large language models. Innovative ChatGPT application development is pushing the boundaries of AI integration across various industries, transforming how businesses leverage conversational AI technologies.

Why was it trending in 2024?

- Tools like Google Vertex AI and Snowflake’s ML integrations make GenAI accessible for data teams.

- AI assistants are getting better at understanding natural language commands.

- Businesses are embracing AI as a way to boost productivity without adding headcount.

My take on GenAI?

GenAI feels like having a personal assistant who works 24/7 and never complains. It can personalize content based on individual preferences, making it more engaging and relevant to users. Many AI tools can already generate stunning images and videos, write polished content, and rewrite and edit like pros, and tools like an AI humanizer can refine content to better match human tone and style. It’s not perfect yet, but the potential is mind-blowing. If your organization isn’t already exploring it, you are missing out on potentially substantial productivity gains.

The global generative AI market size was valued at $10.5 billion in 2022 and is projected to reach $191.8 billion by 2032, growing at a CAGR of 34.1% from 2023 to 2032.

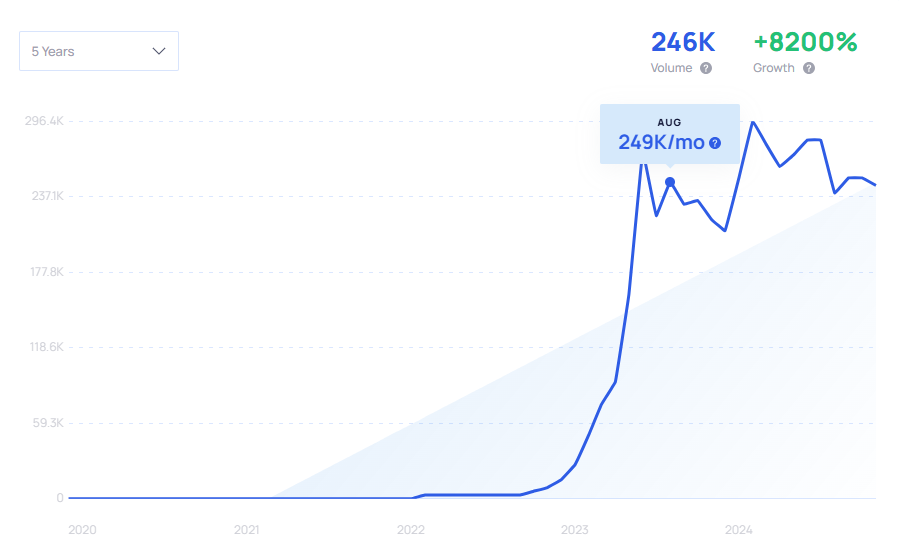

This graph shows how many people searched for “Generative AI” from 2020 to 2024. The global search volume increased to 246k/mo in November, with a +8200% growth from 2020 to 2024.

7. Iceberg: The Table Format You Definitely Need

Iceberg is a high-performance format for huge analytic tables. It is designed to make data lakes more reliable. It brings ACID transactions, schema evolution, and time travel to your lakes and turns chaos into order.

It brings the robustness and simplicity of SQL tables to big data. It ensures that engines like Spark, Trino, Flink, Presto, Hive, and Impala can safely and efficiently work with the same table simultaneously.
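The ideas of atomic commits and time travel are easier to picture with a toy model. The class below is not Iceberg's API and skips almost everything that makes the real format work (metadata files, manifests, schema evolution, concurrent writers). It only assumes the core idea that every commit publishes a new, complete snapshot and old snapshots stay readable.

```python
import copy
from typing import Optional


class SnapshotTable:
    """Toy append-only table that keeps every committed version."""

    def __init__(self) -> None:
        self._snapshots = [[]]  # snapshot 0 is the empty table

    def commit(self, new_rows: list) -> int:
        """Publish a new snapshot containing the old rows plus new_rows; return its id."""
        candidate = copy.deepcopy(self._snapshots[-1]) + copy.deepcopy(new_rows)
        self._snapshots.append(candidate)  # readers only ever see complete snapshots
        return len(self._snapshots) - 1

    def scan(self, snapshot_id: Optional[int] = None) -> list:
        """Read the latest snapshot, or an older one for time travel."""
        chosen = self._snapshots[-1] if snapshot_id is None else self._snapshots[snapshot_id]
        return copy.deepcopy(chosen)


table = SnapshotTable()
first = table.commit([{"order_id": 1, "amount": 20.0}])
second = table.commit([{"order_id": 2, "amount": 35.5}])

print(len(table.scan()))       # rows in the latest snapshot
print(len(table.scan(first)))  # rows as of the first commit
```

Copying the whole table on every commit would be hopeless at real data volumes. Actual table formats track which data files belong to each snapshot instead, but the reader-facing behavior (a consistent view per snapshot, queryable by id) is the part this sketch tries to capture.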

Why was it Trending in 2024?

- The increased popularity of data lakes and the rise in the need to improve them.

- Integration with tools like Spark, Hive, and Flink makes Iceberg the standard for structured data in lakes.

- Iceberg simplifies complex data pipelines by ensuring consistency and reliability.

My Take on Iceberg

Iceberg is the main character that your Data Lakes didn’t know they needed to be a hit. It turns chaotic, unstructured lakes into well-oiled machines. I love how it simplifies version control and makes querying large datasets painless. If you are serious about modernizing your lakes, then Apache Iceberg should be at the top of your list.

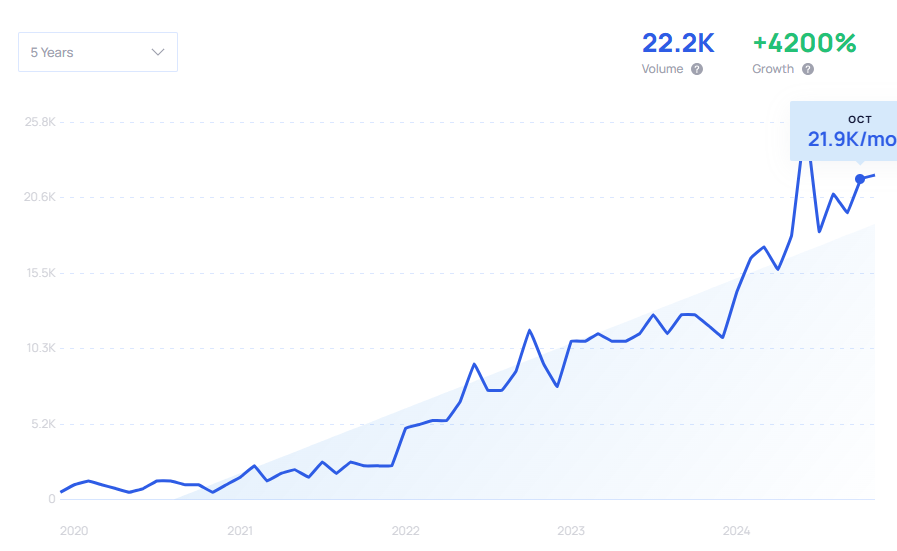

This graph shows how many people searched for “Apache Iceberg” from 2020 to 2024. The global search volume increased to 22.4k/mo in November, with a +4200% growth from 2020 to 2024.

8. Data Governance & Observability: The Data Police is Here

According to the Amazon Web Services (AWS) CDO Survey, CDOs spend a substantial part of their time focusing on data governance activities (63 percent in 2023 vs. 44 percent in 2022). Governance is crucial because it ensures your data is secure, accurate, and compliant. Observability tracks pipeline performance, detects issues, and provides lineage to understand where problems originate.
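As a small illustration of what an observability check does, the sketch below tests one batch for two common problems: stale data and too many missing values. The column name, thresholds, and sample rows are assumptions made up for this example, and dedicated observability tools go far beyond this (lineage, alerting, anomaly detection).

```python
from datetime import datetime, timedelta, timezone


def check_batch(rows, loaded_at, now, column="amount",
                max_age=timedelta(hours=1), max_null_rate=0.05):
    """Return a list of human-readable issues; an empty list means healthy."""
    issues = []
    # Freshness: how long ago did the pipeline last deliver data?
    if now - loaded_at > max_age:
        issues.append(f"stale: last load was {now - loaded_at} ago")
    if not rows:
        issues.append("empty batch")
        return issues
    # Completeness: what share of rows is missing the monitored column?
    null_rate = sum(1 for row in rows if row.get(column) is None) / len(rows)
    if null_rate > max_null_rate:
        issues.append(f"null rate for '{column}' is {null_rate:.0%}")
    return issues


now = datetime(2024, 12, 1, 12, 0, tzinfo=timezone.utc)
batch = [{"amount": 10.0}, {"amount": None}, {"amount": 7.5}, {"amount": 3.0}]
print(check_batch(batch, loaded_at=now - timedelta(hours=3), now=now))
```

Passing `now` in explicitly, instead of calling the clock inside the function, keeps the check deterministic and easy to test.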

Why was it trending in 2024?

- Stricter regulations like GDPR updates are driving governance adoption.

- Observability tools like Monte Carlo help teams proactively fix pipeline issues.

- As pipelines grow more complex, visibility is critical to avoid downtime. Hence, it is imperative to implement strong governance strategies.

My Take on Data Governance and Observability

Governance and observability may not be flashy concepts, but they’re the foundation of a reliable data strategy. Without them, you’re flying blind. Imagine driving a car without a dashboard—you wouldn’t get far. These tools might not generate excitement, but they’re non-negotiable in today’s data-driven landscape.

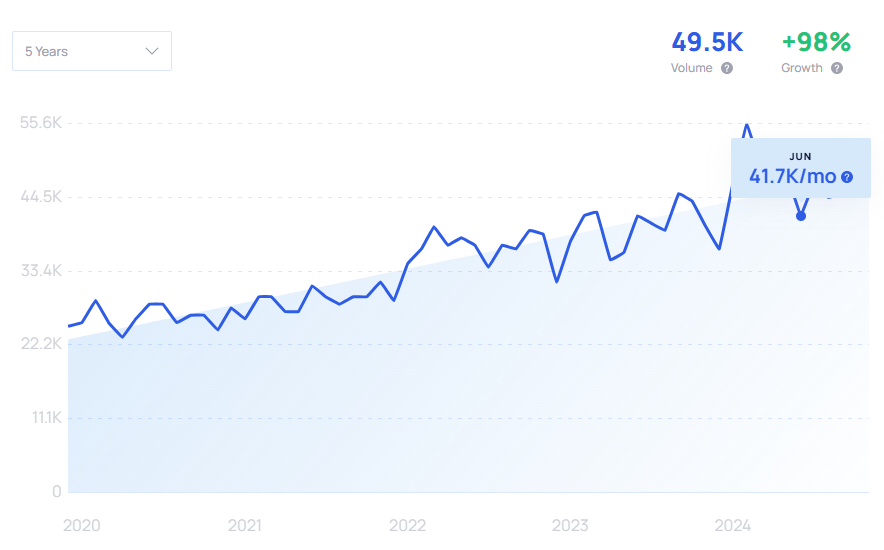

This graph shows how many people searched for “Data Governance” from 2020 to 2024. The global search volume increased to 49.5k/mo in November, with a +98% growth from 2020 to 2024.

9. LLMs and RAG: Smart AI for Smarter Use Cases

The introduction of Large Language Models (LLMs) has transformed the field of GenAI drastically. These models have shown remarkable abilities in understanding and generating human-like text and supporting decisions. Pairing them with Retrieval-Augmented Generation (RAG) has been a groundbreaking development: RAG enables LLMs to pull up-to-date information from external sources at query time, making their responses more accurate and relevant.
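The retrieval half of RAG can be sketched without any AI libraries at all. The example below swaps in plain word-overlap (cosine similarity over word counts) where a real system would use embeddings and a vector store, and it stops at building the prompt instead of calling a model. The documents and the prompt wording are invented for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: list, k: int = 1) -> list:
    """Return the k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list) -> str:
    """Put the retrieved context in front of the question for the model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical knowledge base.
docs = [
    "Refunds are processed within 5 business days of approval.",
    "Our warehouse ships orders Monday through Friday.",
    "Premium support is available 24/7 for enterprise plans.",
]
print(build_prompt("How long do refunds take?", docs))
```

The resulting prompt is what would be sent to the LLM. Because the context is fetched at question time, updating the documents changes the answers without retraining anything.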

Why were they trending in 2024?

- Businesses started using RAG for smarter chatbots and customer support.

- It bridges the gap between static AI models and dynamic, up-to-date data.

- Smarter BI Tools: LLMs can fetch real-time analytics and contextualize insights.

My Take on LLMs and RAG

LLMs are already incredible, but RAG takes them to the next level by making them dynamic and context-aware. Think of it as turning a static encyclopedia into a live, interactive research assistant.

Picture this: your AI chatbot not only understands your customer’s tone but also pulls up their purchase history to suggest the perfect upsell. Or imagine an AI agent that can scan internal company documents on the fly to deliver precise answers during meetings.

From May 2023 through January 2024, chatbots in the Streamlit community went from 18% of LLM apps to 46%, and climbing. Within the Streamlit developer community, between April 27, 2023, and Jan. 31, 2024, Snowflake saw 20,076 unique developers work on 33,143 LLM-powered apps (including apps still in development). Source: Snowflake Data Trends 2024

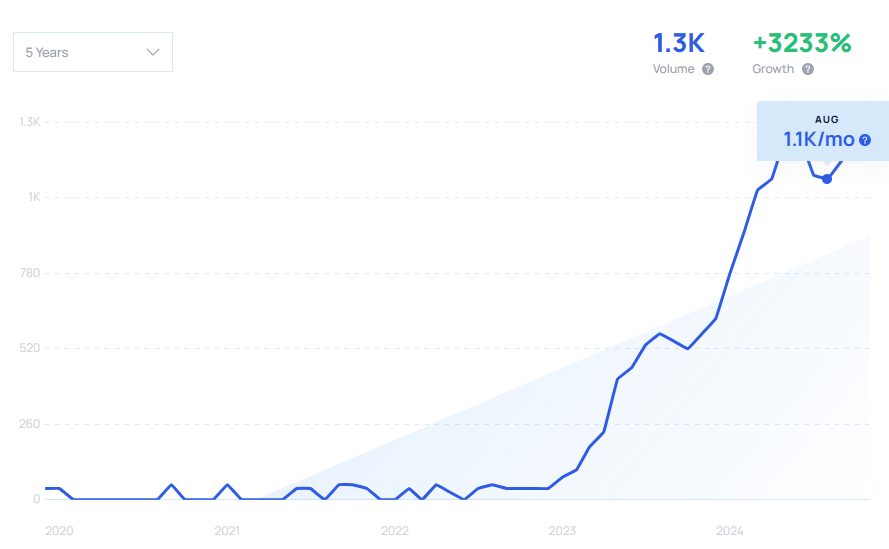

This graph shows how many people searched for “LLMs” from 2020 to 2024. The global search volume increased to 1.3k/mo in November, with a +3233% growth from 2020 to 2024.

Explore our blog on AI in data engineering for deeper insights and practical applications.

10. S3 Tables: Unleash Your Data’s Potential

S3 Tables are revolutionizing data analytics by bringing the power of Apache Iceberg to Amazon S3. Experience blazing-fast queries, unmatched scalability, and dramatically reduced costs.

Imagine a world where your data is instantly accessible, your analyses are lightning-fast, and your costs are significantly lower. S3 Tables make this a reality.

Why was it trending in 2024?

- Blazing Fast: Experience significantly faster query performance and increased transaction throughput.

- Scalable & Durable: Leverage the power and reliability of S3 for seamless scaling and robust data protection.

- Cost-Effective: Reduce storage and analytics costs by utilizing the cost-efficiency of S3.

My Take on S3 Tables

S3 Tables are a game-changer for data analytics. By seamlessly integrating the power of Apache Iceberg with the cost-effectiveness and scalability of Amazon S3, they offer a compelling solution for businesses of all sizes. We’re excited about the potential of S3 Tables to accelerate data-driven decision-making, reduce costs, and unlock new levels of business agility.

Summing It Up

2024 has been an incredible year for data engineering, full of innovation and breakthroughs. These trends aren’t just shaping how we work—they’re redefining what’s possible. Whether you’re building smarter search tools, scaling AI models, or transforming customer experiences, these technologies are where the action is. So, what’s your favorite trend? Let’s connect and geek out together!

If you want to incorporate these trends in your data strategy and stay ahead of your competitors, sign up for Hevo and easily experience the power of streaming data.

Frequently Asked Questions

1. What is the difference between streaming data and normal data?

Unlike static data, which is stored in databases and files, streaming data is dynamic and unbounded; it is continuously updated.

2. What does LLM stand for in ChatGPT?

LLM stands for Large Language Model.

3. What is Apache Iceberg used for?

Apache Iceberg is used for managing large datasets in data lakes, supporting ACID transactions, schema evolution, and efficient query performance in lakehouse architectures.

4. What are the 3 pillars of FinOps?

The three pillars of FinOps are Inform, Optimize, and Operate.