As modern technology makes it ever easier for organizations to create and store data, data volumes have exploded. A steady stream of new technology services lets users work with data on Cloud Service Platforms whenever and wherever they want. However, when working with Cloud Service Platforms, security should be a primary concern for any organization. To provide a secure environment to users and organizations, Databricks offers the Secret Scopes feature, which works as a safe storage space for the credentials and sensitive information that an application or code needs. A Databricks Secret Scope can also be backed by a third-party secret management system, such as Azure Key Vault, from which users can store and retrieve secrets whenever needed.

In this article, you will learn about Secret Scopes, Secrets, different ways to Create Secret Scopes and Secrets, and Working with Secret Scopes.

Prerequisites

- Fundamental Knowledge of Authentication.

What is Databricks?

Databricks is an Apache Spark-based analytics platform that unifies Data Science and Data Engineering across Machine Learning tasks. It is an industry-leading Analytics service provider as its collaborative features allow professionals from different teams to work together to build data-driven products and services. Instead of working with different environments for different levels of Data Operations, Databricks allows developers to use Databricks as a one-stop-shop for all data operations, from data collection to application development.

What are Secret Scopes?

A Secret Scope lets you manage the secret identities or information used for API authentication or other connections in a workspace. Each secret you store needs a unique name, because that name is how you later retrieve the actual secret value. Scope names follow one main criterion: they should be non-sensitive and easily readable by the users of the workspace.
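As a rough illustration of the naming guidance above, the sketch below checks a candidate scope name before it is used. The allowed characters (alphanumerics, dashes, underscores, `@`, periods) and the 128-character cap are assumptions based on the Databricks documentation and should be verified for your workspace; `is_valid_scope_name` is a hypothetical helper, not a Databricks API.

```python
import re

# Assumed rule set: letters, digits, '-', '_', '@', '.', at most 128 characters.
_SCOPE_NAME = re.compile(r"[A-Za-z0-9\-_@.]{1,128}")


def is_valid_scope_name(name: str) -> bool:
    """Return True if `name` fits the assumed scope-name rules."""
    return _SCOPE_NAME.fullmatch(name) is not None


print(is_valid_scope_name("BlobStorage"))      # readable, non-sensitive name
print(is_valid_scope_name("prod key vault!"))  # spaces and '!' are rejected
```

A check like this only covers syntax. Whether a name is non-sensitive and readable remains a judgment call for the team.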

    val storageAccountName = "mystorageaccount"
    val containerName = "demo"
    val accessKey = "fuiqweXSNAeuyn8wqehd78"

    spark.conf.set(
      "fs.azure.account.key." + storageAccountName + ".blob.core.windows.net",
      accessKey
    )

For example, consider the above code. Here the access key is present directly in the code. Storing credentials and sensitive information inside code is not advisable in terms of Data Security and Privacy, since anyone with access to the code can get hold of the authentication keys and use them to fetch or update data without your permission.

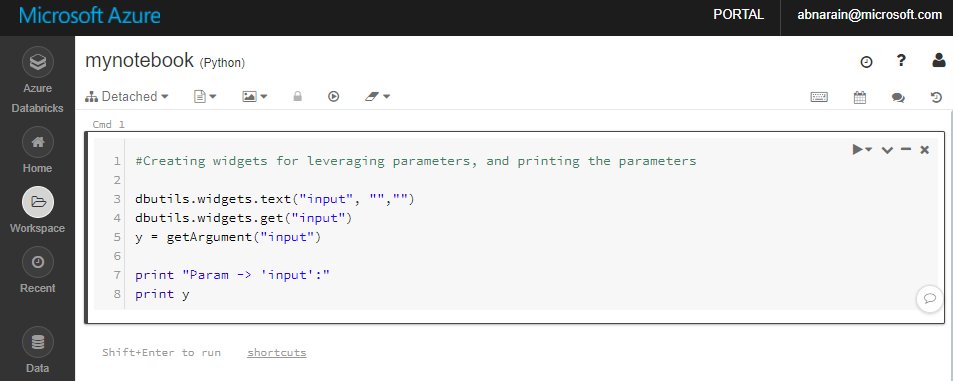

Secret Scopes can be created and managed using either Azure Key Vault or the Databricks CLI. The example shown above manages Secret Scopes with the Databricks CLI method and reads them through the Databricks Utilities (dbutils) library. This library lets developers access credentials or sensitive information safely and securely, so code can be shared with different teams for different purposes without exposing the secrets themselves.
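To make the contrast with the hardcoded key concrete, here is a minimal Python sketch in which the access key is supplied by a secret lookup rather than a literal. `storage_account_conf` and the in-memory `_fake_store` are hypothetical names used only so the sketch runs outside Databricks; the scope and key names are borrowed from later in this article.

```python
from typing import Callable, Tuple


def storage_account_conf(
    storage_account_name: str,
    get_secret: Callable[[str, str], str],
    scope: str,
    key: str,
) -> Tuple[str, str]:
    """Build the Spark conf entry for a Blob Storage account key.

    The access key comes from `get_secret`, never from a literal in the code.
    """
    conf_key = f"fs.azure.account.key.{storage_account_name}.blob.core.windows.net"
    return conf_key, get_secret(scope, key)


# Stand-in secret store so the sketch runs outside Databricks (illustrative value).
_fake_store = {("BlobStorage", "BLB_Strg_Access_Key"): "not-a-real-key"}

conf_key, access_key = storage_account_conf(
    "mystorageaccount",
    lambda scope, key: _fake_store[(scope, key)],
    scope="BlobStorage",
    key="BLB_Strg_Access_Key",
)
print(conf_key)
```

In a Databricks notebook, the lookup would presumably be `lambda scope, key: dbutils.secrets.get(scope=scope, key=key)`, followed by `spark.conf.set(conf_key, access_key)`.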

What is a Secret?

Inside a Secret Scope, Secrets are stored as Key-value Pairs that hold secret information such as an access key, access ID, or reference key. In a Key-value Pair, the key is the identifiable Secret name, and the value is arbitrary data interpreted as a string or bytes.

Secret Management in Databricks

In Databricks, every Workspace has Secret Scopes within which one or more Secrets are present to access third-party data, integrate with applications, or fetch information. Users can also create multiple Secret Scopes within the workspace according to the demand of the application. However, a Workspace is limited to a maximum of 100 Secret Scopes, and a Secret Scope is limited to a maximum of 1000 Secrets.
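The hierarchy described above (workspace, then scopes, then key-value secrets) can be pictured with a toy in-memory model. This is only an illustration of the structure and of the limits as stated in this article; `ToyWorkspace` is a hypothetical class and says nothing about how Databricks stores secrets internally.

```python
from dataclasses import dataclass, field
from typing import Dict, Union

MAX_SCOPES_PER_WORKSPACE = 100  # limit as stated in this article
MAX_SECRETS_PER_SCOPE = 1000    # limit as stated in this article


@dataclass
class ToyWorkspace:
    """Illustrative model: scope name -> {secret key -> secret value}."""

    scopes: Dict[str, Dict[str, Union[str, bytes]]] = field(default_factory=dict)

    def create_scope(self, scope: str) -> None:
        if scope in self.scopes:
            raise ValueError(f"scope {scope!r} already exists")
        if len(self.scopes) >= MAX_SCOPES_PER_WORKSPACE:
            raise ValueError("workspace scope limit reached")
        self.scopes[scope] = {}

    def put_secret(self, scope: str, key: str, value: Union[str, bytes]) -> None:
        secrets = self.scopes[scope]
        # Overwriting an existing key does not count against the limit.
        if key not in secrets and len(secrets) >= MAX_SECRETS_PER_SCOPE:
            raise ValueError("scope secret limit reached")
        secrets[key] = value


ws = ToyWorkspace()
ws.create_scope("BlobStorage")
ws.put_secret("BlobStorage", "BLB_Strg_Access_Key", "not-a-real-key")
print(sorted(ws.scopes["BlobStorage"]))
```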

Types of Databricks Secret Scopes

Since security is the primary concern when working with Cloud services, instead of storing passwords or access keys in Notebook or Code in plaintext, Databricks provides two types of Secret Scopes to store and retrieve all the secrets when and where they are needed.

The two types of Databricks Secret Scopes are:

1) Azure Key Vault-Backed Scope

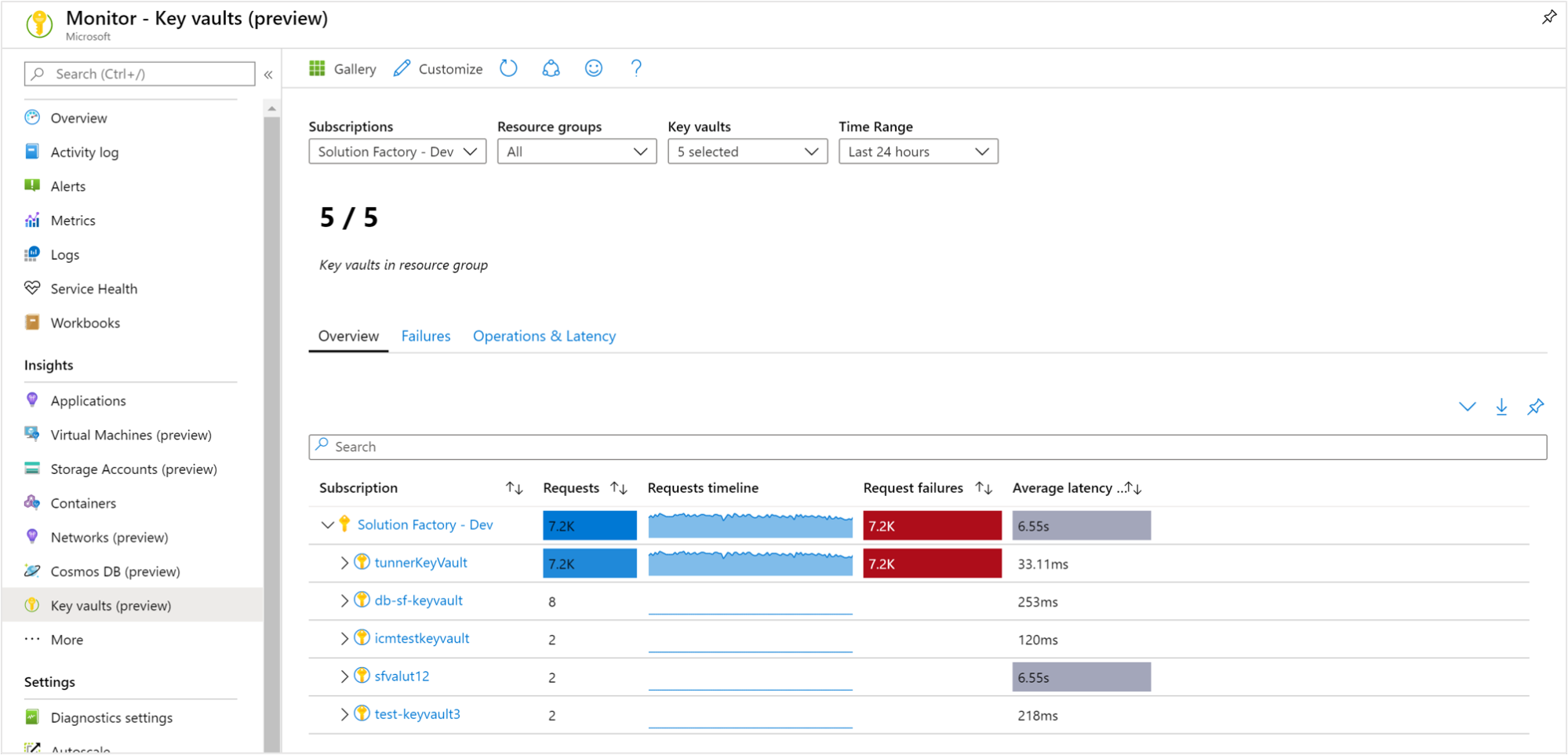

To reference and access Secrets, users can create a Secret Scope backed by Azure Key Vault. It allows users to leverage all the Secrets in the corresponding Key Vault instance from a particular Secret Scope. However, the Azure Key Vault-Backed Scope is only supported for the Azure Databricks Premium plan. Users can manage Secrets in the Azure Key Vault using the Azure Set Secret REST API or the Azure portal UI.

2) Databricks-Backed Scope

In this method, the Secret Scopes are managed with an internally encrypted database owned by the Databricks platform. Users can create a Databricks-backed Secret Scope using the Databricks CLI version 0.7.1 and above.

Permission Levels of Secret Scopes

There are three levels of permissions that you can assign while creating each Secret Scope. They are:

- Manage: This permission is used to manage everything about the Secret Scopes and ACLs (Access Control Lists). By using ACLs, users can configure fine-grained permissions for different people and groups to access different Scopes and Secrets.

- Write: This allows you to read, write, and manage the keys of the particular Secret Scope.

- Read: This allows you to read the secret scope and list all the secrets available inside it.
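One way to picture the three levels is as an ordered set in which each level includes the ones below it. The sketch below assumes that ordering (Manage over Write over Read), which matches the descriptions above; the `acl` mapping and the principal names are hypothetical.

```python
from enum import IntEnum


class Permission(IntEnum):
    """Permission levels, ordered so a higher level includes the lower ones."""

    READ = 1
    WRITE = 2
    MANAGE = 3


def is_allowed(granted: Permission, required: Permission) -> bool:
    """Return True if the granted level covers the required level."""
    return granted >= required


# Hypothetical ACL for one scope: principal -> granted permission.
acl = {"data-engineers": Permission.MANAGE, "analysts": Permission.READ}

print(is_allowed(acl["analysts"], Permission.READ))
print(is_allowed(acl["analysts"], Permission.WRITE))
```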

Working with Databricks Secret Scopes

You can create and manage Secret Scopes by using both Azure Key Vault and Databricks CLI methods.

1) How to Create a Secret Scope?

To begin with, let’s look at the requirements for creating Databricks Secret Scopes:

- Azure Subscription

- Azure Databricks Workspace

- Azure Key Vault

- Azure Databricks Cluster (Runtime 4.0 or above)

- Python 3 (3.6 and above)

For creating Azure Key Vault-Backed Secret Scopes, you should first have access to Azure Key Vault.

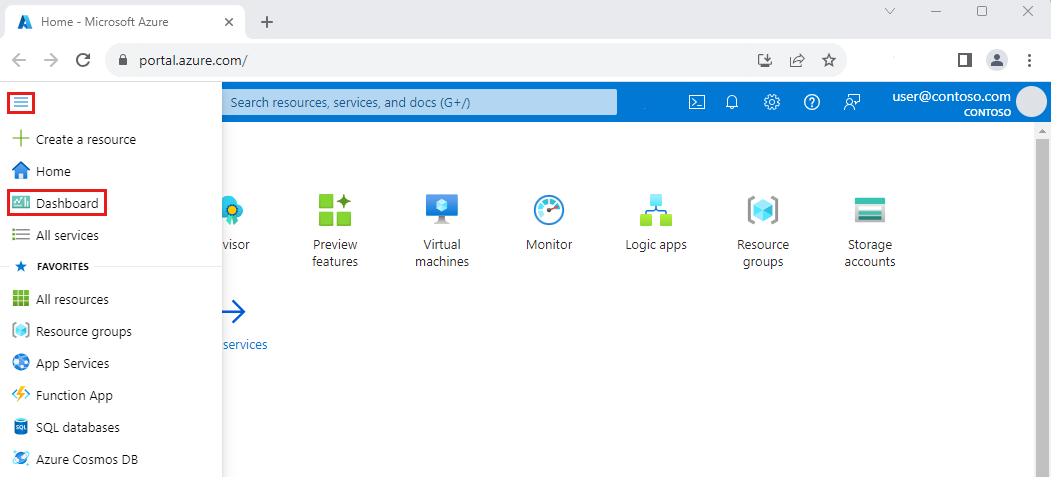

- To create an Azure Key Vault, open the Azure Portal in your browser.

- Log in to your Azure account.

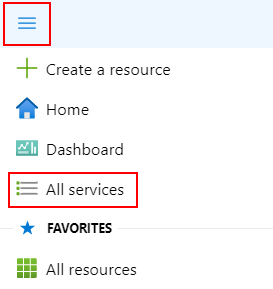

- Click on All Services in the top left corner and select the Key Vault from the given options.

- After clicking on add, the Create Key Vault tab will open.

- Enter all the required fields.

- Click Create and refresh the page.

- Now, the created key vault is displayed on your “All Resources” section.

- Right-click on the created key vault, select Properties, and note the DNS Name and Resource ID, which will be used later.
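For orientation, the two values noted in the last step usually have the shapes sketched below. This assumes the Azure public cloud (other clouds use different DNS suffixes); the helper names, subscription ID, resource group, and vault name are all placeholders.

```python
def key_vault_dns_name(vault_name: str) -> str:
    """DNS name (vault URI) of a Key Vault in the Azure public cloud."""
    return f"https://{vault_name}.vault.azure.net/"


def key_vault_resource_id(subscription_id: str, resource_group: str, vault_name: str) -> str:
    """Azure Resource Manager ID of a Key Vault."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.KeyVault/vaults/{vault_name}"
    )


# Placeholder identifiers for illustration only.
print(key_vault_dns_name("demo-vault"))
print(key_vault_resource_id("00000000-0000-0000-0000-000000000000", "demo-rg", "demo-vault"))
```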

A) Creating Azure Key Vault-Backed Secret Scopes with Azure UI

- In your web browser, visit the Azure Portal and log in to your Azure account.

- Now, open your Azure Databricks Workspace and click on Launch Workspace.

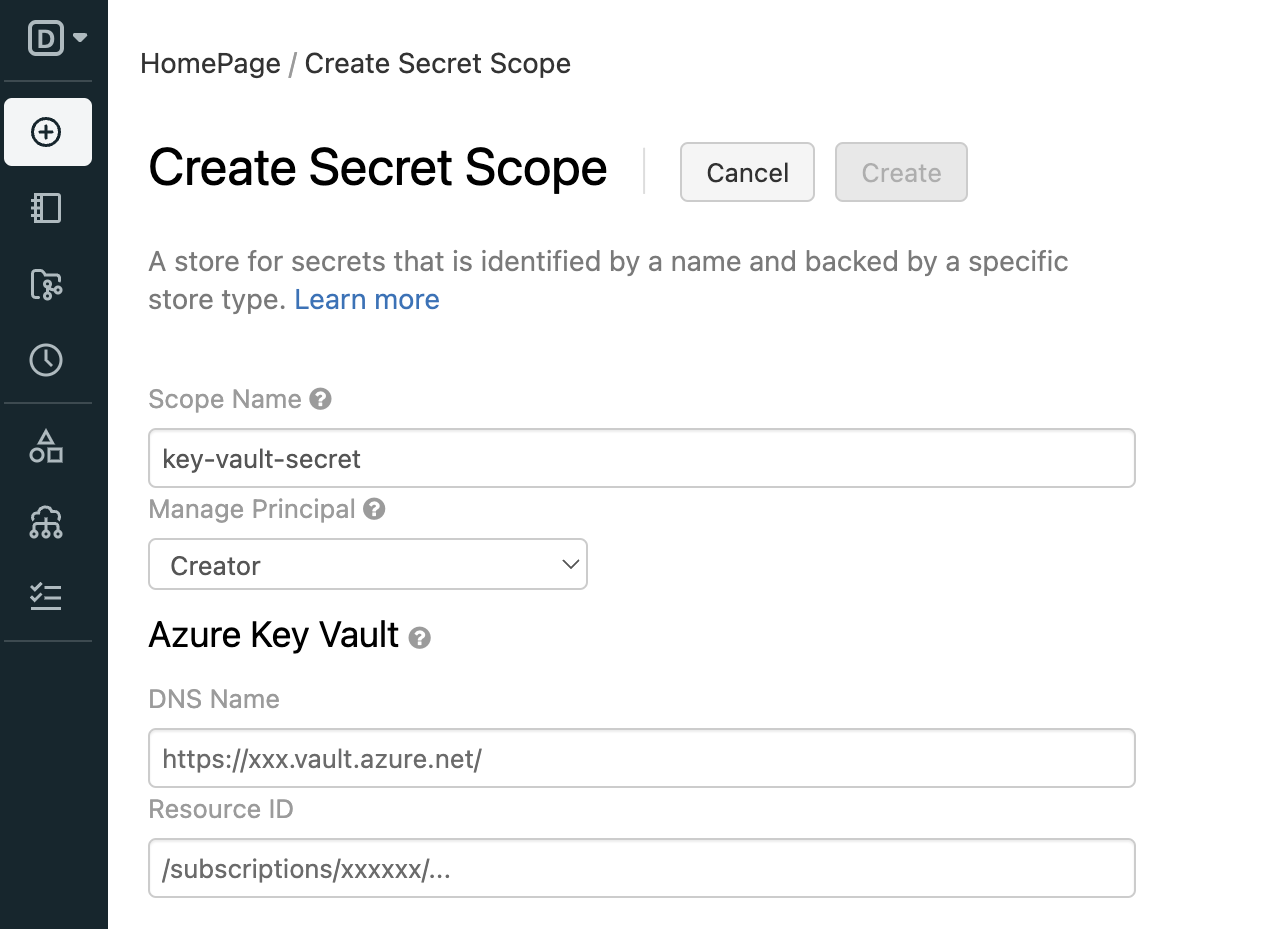

- By default, you will have your Azure Databricks Workspace URL present in the address bar of your web browser. Now, to create Secret Scopes, you have to add the extension “#secrets/createScope” to the default URL (ensure S in scope is uppercase).

- Now, enter all the required information: the scope name, and the DNS name and resource ID that you noted earlier.

- Then, click on the Create button. By this, we have created and connected the Azure Key Vault secret scope with Databricks.
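The URL change in the steps above can be sketched as a small helper that sets the `#secrets/createScope` fragment on a workspace URL. `create_scope_url` is a hypothetical name and the workspace URL is a placeholder.

```python
from urllib.parse import urlsplit


def create_scope_url(workspace_url: str) -> str:
    """Return the workspace URL with the Create Secret Scope fragment set."""
    parts = urlsplit(workspace_url)
    # The fragment is case-sensitive: the 'S' in createScope must be uppercase.
    return parts._replace(fragment="secrets/createScope").geturl()


print(create_scope_url("https://adb-1234567890123456.7.azuredatabricks.net/"))
```

Using `urlsplit` keeps any query string already present in the workspace URL and replaces whatever fragment was there before.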

Now, to create secrets in the Azure Key Vault Secret Scope, follow the below steps.

Creating Secret in Azure Key Vault

- Click on “Secrets” under settings in the left panel on the Microsoft Azure home page.

- Then select Generate/Import to create a Secret.

- Fill in the name of the Secret and Value to be given inside the secret, which is confidential.

- Now, click on create, and the Secret is created.

- Note down the name of the Secret, which will be used further in the Notebook or Code.

To check whether Databricks Secret Scope and Secret are created correctly, we use them in a notebook:

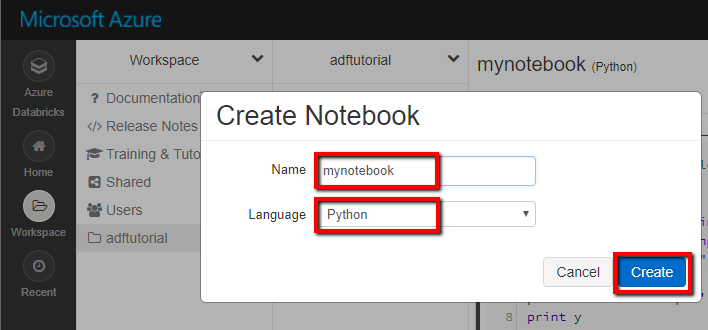

- In your Databricks workspace, click on Create Notebook.

- Enter the Name of the Notebook and preferred programming language.

- Click on the Create button, and the Notebook is created.

Enter the code in the notebook:

    dbutils.secrets.get(scope = "azurekeyvault_secret_scope", key = "BlobStorageAccessKey")

In the above code, azurekeyvault_secret_scope is the name of the secret scope you created in Databricks, and BlobStorageAccessKey is the name of the secret that you created in Azure Key Vault.

    val storageAccountName = "mystorageaccount"
    val containerName = "demo"
    // Read the access key from the secret scope instead of hardcoding it
    val accessKey = dbutils.secrets.get(scope = "azurekeyvault_secret_scope", key = "BlobStorageAccessKey")

    spark.conf.set(
      "fs.azure.account.key." + storageAccountName + ".blob.core.windows.net",
      accessKey
    )

After you execute the dbutils.secrets.get call in a notebook, the output should show “[REDACTED]” in place of the secret value. If it appears, then you have successfully created a Secret Scope and a Secret.
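The redaction seen in the notebook output can be pictured with a toy function that masks literal occurrences of known secret values. This is only a sketch of the idea, not Databricks' actual implementation; `redact` is a hypothetical helper and the key is a dummy value.

```python
from typing import Iterable


def redact(output: str, secret_values: Iterable[str]) -> str:
    """Replace every literal occurrence of a secret value with [REDACTED]."""
    for value in secret_values:
        if value:  # skip empty strings, which would match everywhere
            output = output.replace(value, "[REDACTED]")
    return output


print(redact("accessKey = not-a-real-key", ["not-a-real-key"]))
```

Masking of this kind protects what is displayed, not what the code can do with the value, so access to a scope should still be limited through the permission levels described earlier.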

B) Creating Secret Scopes and Secrets using Databricks CLI

- Open a command prompt on your computer.

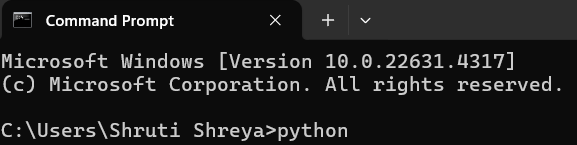

- Check whether Python is installed.

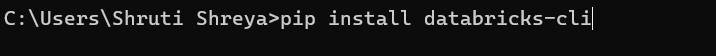

- Then, install Databricks CLI by entering the following command:

    pip install databricks-cli

- After the execution of the command, Databricks CLI gets installed.

- Now, open the Databricks Workspace.

- To access information through CLI, you have to authenticate.

- For authenticating and accessing the Databricks REST APIs, you have to use a personal access token.

- To generate the access token, click on the user profile icon in the top right corner of the Databricks Workspace and select user settings.

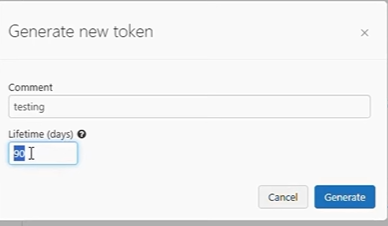

- Select Generate New Token

- Enter a comment that describes the token and its lifetime (total validity days of the token).

- Click on generate.

- Now, the Personal Access Token is generated; copy the generated token.

- In the command prompt, type databricks configure --token and press Enter.

- When prompted to enter the Databricks Host URL, provide your Databricks Host Link.

- Then, you will be asked to enter the token.

- Enter your generated token and authenticate.

- Now, you are successfully authenticated and all set for creating Secret Scopes and Secrets using CLI.
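After the steps above, the CLI keeps the host and token in a profile file (`~/.databrickscfg` for the legacy `databricks-cli` package). The sketch below renders an equivalent DEFAULT profile as text so the layout is visible; `render_profile`, the host, and the token are placeholders, and nothing is written to disk.

```python
import configparser
import io


def render_profile(host: str, token: str) -> str:
    """Render a DEFAULT profile in the INI layout used by ~/.databrickscfg."""
    config = configparser.ConfigParser()
    config["DEFAULT"] = {"host": host, "token": token}
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


# Placeholder host and token for illustration only.
print(render_profile("https://adb-1234567890123456.7.azuredatabricks.net", "dapi-EXAMPLE-ONLY"))
```

Because the token sits in that file in plain text, the file deserves the same care as any other credential.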

Creating Scope using Databricks CLI

You can enter the following command to create a Scope:

    databricks secrets create-scope --scope BlobStorage --initial-manage-principal users

After executing the command, a Databricks Secret Scope will be successfully created.

Creating Secrets Inside the Secret Scope using Databricks CLI

You can enter the following command to create a Secret inside the Scope:

    databricks secrets put --scope BlobStorage --key BLB_Strg_Access_Key

- BlobStorage > Name of the Secret scope

- BLB_Strg_Access_Key > Name of the secret

Hence, the secret called BLB_Strg_Access_Key is created inside the scope called BlobStorage.

After you run this command, a text editor such as Notepad opens, where you enter the value of the Secret. Save and close the file after entering the value of the Databricks Secret. If no error is reported, the process is successful.

This is how you can create Databricks Secret Scope and Secret using Databricks CLI.
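The two CLI commands above correspond to calls on the Databricks Secrets REST API (`/api/2.0/secrets/scopes/create` and `/api/2.0/secrets/put`). As a hedged sketch, the Python below builds those requests without sending them; `secrets_api_request` is a hypothetical helper, and the host, token, and secret value are placeholders.

```python
import json
import urllib.request


def secrets_api_request(host: str, token: str, endpoint: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request to a Secrets API endpoint."""
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.0/secrets/{endpoint}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


HOST = "https://adb-1234567890123456.7.azuredatabricks.net"  # placeholder workspace URL
TOKEN = "dapi-EXAMPLE-ONLY"  # placeholder personal access token

create_scope = secrets_api_request(
    HOST, TOKEN, "scopes/create",
    {"scope": "BlobStorage", "initial_manage_principal": "users"},
)
put_secret = secrets_api_request(
    HOST, TOKEN, "put",
    {"scope": "BlobStorage", "key": "BLB_Strg_Access_Key", "string_value": "not-a-real-key"},
)
print(create_scope.full_url)
print(put_secret.full_url)
# To actually send a request: urllib.request.urlopen(create_scope)
```

Building the request separately from sending it makes it easy to inspect the payload first. In real use the secret value should come from a secure prompt or environment variable, not a literal.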

2) How to List the Secret Scope?

This command lists all the Secret Scopes that were created before:

    databricks secrets list-scopes

Listing the Secrets inside Secret Scopes

This command lists the Secrets present inside the Secret Scope named BlobStorage.

    databricks secrets list --scope BlobStorage

3) How to Delete the Secret Scope?

This command deletes the Secret Scope named BlobStorage that was created before:

    databricks secrets delete-scope --scope BlobStorage

Conclusion

Using Key Vaults, Secret Scopes, and Secrets, developers can give people at any level of an organization access to view and edit their work safely and securely, without exposing credentials in the code. In this article, you have learned about the basic implementation of creating and working with Databricks Secrets and Secret Scopes.

Extracting complex data from a diverse set of data sources can be challenging and requires immense Engineering Bandwidth. All of this can be effortlessly automated by a Cloud-Based ETL tool like Hevo Data.

If you are using Databricks as a Data Lakehouse and Analytics platform in your business and searching for a stress-free alternative to Manual Data Integration, then Hevo can effectively automate this for you. Hevo with its strong integration with 150+ Data Sources & BI tools (Including 40+ Free Sources), allows you to not only export & load data but also transform & enrich your data & make it analysis-ready.

Give Hevo a shot! Try Hevo’s 14-day free trial and experience the feature-rich Hevo suite first hand. Check out the pricing details to get a better understanding of which plan suits you the most.

Share with us your experience of learning about Databricks Secret. Let us know in the comments section below!

FAQs

1. What is Databricks’ secret?

Databricks’ secret refers to its ability to manage sensitive information securely using its Secrets API.

2. What is unique about Databricks?

Databricks is unique for its unified data analytics platform, combining data engineering, machine learning, and data science in a collaborative environment.

3. How to read secret value in Databricks?

Use the dbutils.secrets.get(scope, key) command to read a secret value in Databricks securely.