dbt is a data transformation tool that data engineers use to process raw data within data warehouses. While dbt is a robust tool for transformation, you need to know its commands to get the most out of it. Mastering dbt commands will let you run complex tasks on dbt projects.

This article will list some of the widely used dbt commands you should be familiar with.

dbt Cloud and dbt Core

dbt commands are instructions for managing dbt projects. They are used to run models, fetch information, and modify projects. dbt can be used through either dbt Cloud or dbt Core, and most commands work in both. However, not every command is supported in both dbt Cloud and dbt Core.

dbt Cloud

dbt Cloud is a browser-based cloud solution for transforming data in your data warehouse. It is an alternative to dbt Core, an open-source command line interface tool. To run commands in dbt Cloud, you must set up the project and start editing with the cloud IDE. The cloud IDE has a dedicated feature to run dbt commands.

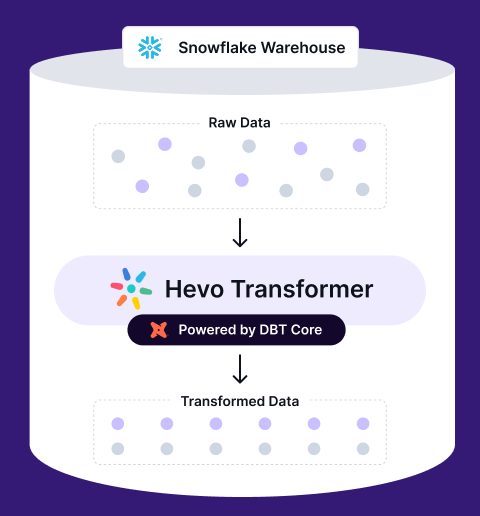

dbt Command Line Interface (CLI)

Also known as dbt Core, the dbt command line interface (CLI) allows you to run dbt commands from your local machine. The dbt CLI supports more commands than dbt Cloud. Unlike dbt Cloud, where buttons can trigger tasks, dbt projects on your local machine can only be managed and executed with commands.

13 dbt Commands That You Should Know

dbt seed

dbt seeds are CSV files referenced in the downstream models using the <strong>ref</strong> function. However, you must first add the data to the data warehouse from your locally hosted project. This is where the dbt seed command helps you with loading data. Once the data is loaded, it can be referenced while building dbt data models.

Suppose a CSV file named country_codes is located at seeds/country_codes.csv. You can load this file into the data warehouse by running the dbt seed command from the project root.
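As a minimal sketch of this step, the shell snippet below creates a small seeds/country_codes.csv and loads it. The column names and the two rows are made-up sample data, not taken from a real project, and the dbt call is guarded so that it only runs from the root of a configured dbt project.

```shell
# Create the seed file (the sample rows are illustrative assumptions).
mkdir -p seeds
cat > seeds/country_codes.csv <<'EOF'
country_code,country_name
US,United States
IN,India
EOF

# Load the seed into the warehouse. This runs only when dbt is installed
# and the current directory is a dbt project root.
if command -v dbt >/dev/null 2>&1 && [ -f dbt_project.yml ]; then
  dbt seed --select country_codes
fi
```

Passing --select limits the load to this one seed; a plain dbt seed would load every CSV in the seeds folder.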

The output of the dbt seed command:

The loaded file can then be referenced with the following code:

select * from {{ ref('country_codes') }}

dbt snapshot

dbt snapshots are SQL SELECT statements used to track changes in data over time. This is highly beneficial for businesses whose records change as a process moves forward. For instance, an e-commerce company updates an order's status from pending to shipped and onward. Understanding the time taken in various processes, from accepting to delivering orders, is crucial for e-commerce companies. Recording each change in the order status preserves that history, which you can then use to optimize your business operations.

For snapshots, you need to use a snapshot block.

You can further configure the snapshot block with a config block as follows:

The config block includes target_database and target_schema, which determine where the table created by the dbt snapshot command is stored.
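A snapshot file in the block style described above might look like the following sketch. The snapshot name orders_snapshot, the jaffle_shop source, the analytics database, the snapshots schema, and the id and updated_at columns are all assumptions chosen for illustration; the snippet only writes the file and does not run anything against a warehouse.

```shell
# Write a hypothetical snapshot definition into the snapshots/ folder.
mkdir -p snapshots
cat > snapshots/orders_snapshot.sql <<'EOF'
{% snapshot orders_snapshot %}

{{
    config(
      target_database='analytics',
      target_schema='snapshots',
      unique_key='id',
      strategy='timestamp',
      updated_at='updated_at'
    )
}}

select * from {{ source('jaffle_shop', 'orders') }}

{% endsnapshot %}
EOF
```

With the timestamp strategy, dbt compares the updated_at column between runs to decide which rows have changed and need a new snapshot record.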

dbt snapshot

The output of the dbt snapshot command:

dbt run

dbt run executes the SQL SELECT statements in your models using each model's configured materialization strategy. By default, it runs all the models in the project. You can also pass flags such as --full-refresh, which makes dbt treat incremental models as table models and rebuild them from scratch. This is useful when the schema of an incremental model has changed and you want to recreate the transformation.
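To make the incremental case concrete, here is a sketch of an incremental model that --full-refresh would rebuild. The model name incremental_orders, the upstream stg_orders model, and the order_id, status, and updated_at columns are hypothetical.

```shell
# Write a hypothetical incremental model into the models/ folder.
mkdir -p models
cat > models/incremental_orders.sql <<'EOF'
{{ config(materialized='incremental', unique_key='order_id') }}

select order_id, status, updated_at
from {{ ref('stg_orders') }}

{% if is_incremental() %}
  -- On normal runs, only pick up rows newer than what is already loaded.
  where updated_at > (select max(updated_at) from {{ this }})
{% endif %}
EOF
```

When the model is run with --full-refresh, is_incremental() evaluates to false, so the filter is skipped and the table is rebuilt from all rows.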

dbt run --full-refresh

dbt run-operation

The dbt run-operation command invokes a macro, a reusable function written in Jinja and defined in the dbt project. It lets you run or test a macro directly, without running any models, which avoids unnecessary compute. If the macro takes arguments, pass them with the --args flag of the dbt run-operation command.

Syntax:

dbt run-operation {macro} --args '{args}'

Example:

dbt run-operation grant_select --args '{role: reporter}'

In the aforementioned command, grant_select is the macro and role: reporter is the argument.
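For context, a grant_select macro that this command could invoke might be defined as in the sketch below. The article does not show the macro body, so the grant statements (written in a Snowflake-like dialect) and the log message are assumptions.

```shell
# Write a hypothetical grant_select macro into the macros/ folder.
mkdir -p macros
cat > macros/grant_select.sql <<'EOF'
{% macro grant_select(role) %}
  {% set sql %}
    grant usage on schema {{ target.schema }} to role {{ role }};
    grant select on all tables in schema {{ target.schema }} to role {{ role }};
  {% endset %}

  {% do run_query(sql) %}
  {% do log("Privileges granted to " ~ role, info=True) %}
{% endmacro %}
EOF
```

The role parameter in the macro signature is what the role key in --args fills in, so '{role: reporter}' renders the grants for the reporter role.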

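dbt run --models

The --models flag specifies which models to run, so you do not have to run every model in the project. In current dbt versions, --select is the preferred name for this flag, and --models is kept as an alias.

dbt run --models my_model cust_order

In the aforementioned command, you are running only two models (my_model and cust_order) instead of all the models in the dbt project. Separate the model names with spaces, not commas.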

dbt run with tags

Tags are set to describe or add metadata to models. These tags can be later used as a part of resource selection syntax to filter models while using the dbt run command.

dbt run --select tag:hourly

The aforementioned command will run all the models that have been tagged hourly.
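A model receives the hourly tag through its config. The sketch below writes a hypothetical model file, models/hourly_orders.sql, whose name and upstream stg_orders reference are assumptions, and then lists what the tag selector matches when run inside a dbt project.

```shell
# Write a hypothetical model that carries the "hourly" tag.
mkdir -p models
cat > models/hourly_orders.sql <<'EOF'
{{ config(tags=["hourly"]) }}

select * from {{ ref('stg_orders') }}
EOF

# Preview which resources the tag selector matches. This runs only when
# dbt is installed and the current directory is a dbt project root.
if command -v dbt >/dev/null 2>&1 && [ -f dbt_project.yml ]; then
  dbt ls --select tag:hourly
fi
```

Listing with dbt ls before running is a cheap way to confirm that a selector matches what you expect.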

dbt init

<a href="https://hevodata.com/data-transformation/dbt-init/" target="_blank" rel="noreferrer noopener">dbt init</a> is used to get started with dbt projects. When you wish to create a project, you run the dbt init command. Running the dbt init for the first time will prompt you to provide different information. You might be asked to name the project, mention the database adapter, and input the username and password. Eventually, it will create a folder with the necessary files and folders to get you started. However, if you are using dbt init on an existing dbt project, it will connect your profile with the project so that you can start making changes.

dbt init

dbt clean

The <a href="https://hevodata.com/data-transformation/dbt-clean/" target="_blank" rel="noreferrer noopener">dbt clean</a> command deletes the folders specified in the clean-targets list in the dbt_project.yml file. It is typically used to clear out generated folders that fill up again as you work, since running dbt models creates files in several folders.

For instance, when you compile or run models, the compiled folder inside target accumulates files. Packages you import from other dbt projects also create files you might not need over time. List these folders under clean-targets, and running dbt clean will delete them.
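The relevant part of dbt_project.yml could look like the excerpt below. It is written to a scratch file, clean_targets_example.yml (a made-up name), so that the snippet does not overwrite a real project file. The target and dbt_packages folders are commonly listed, but your project may use different paths.

```shell
# Write an example clean-targets excerpt to a scratch file.
cat > clean_targets_example.yml <<'EOF'
# Excerpt to merge into dbt_project.yml
clean-targets:
  - "target"
  - "dbt_packages"
EOF

# Delete the folders listed under clean-targets in the real dbt_project.yml.
# This runs only when dbt is installed and the current directory is a
# dbt project root.
if command -v dbt >/dev/null 2>&1 && [ -f dbt_project.yml ]; then
  dbt clean
fi
```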

dbt compile

<a href="https://hevodata.com/data-transformation/dbt-compile/" target="_blank" rel="noreferrer noopener">dbt compile</a> works somewhat like a compiler in a programming language: it renders your models, tests, and analyses into executable SQL and writes the result to the target/ directory without running the models. This makes it useful for inspecting and debugging code before executing it. It is not mandatory to run dbt compile before dbt run, but it is a good way to check that the code is correct.

dbt compile

dbt docs

<a href="https://hevodata.com/data-transformation/dbt-docs/" target="_blank" rel="noreferrer noopener">dbt docs</a> command helps you generate your project documentation. It has two subcommands—generate and serve.

dbt docs generate

This command allows you to generate your project’s documentation website. It is done in three steps:

- Copy the website index.html file into the target/ directory.

- Compile the project to target/manifest.json.

- Produce the target/catalog.json file. The file contains metadata about the views and tables produced by models.

You can use the --no-compile flag with dbt docs generate to skip the second of the steps above.

dbt docs serve

This command starts a local web server that hosts the documentation website. By default, the server listens on port 8080, but you can specify a different port using the --port flag. The site is served from the target/ directory. Run dbt docs generate before dbt docs serve, since the server relies on the catalog metadata artifact that the generate step produces.

dbt docs serve --port 8001

dbt list

dbt list or dbt ls is used to list the resources included in your dbt project. By default, dbt ls lists all the models, snapshots, seeds, tests, and sources. However, you can filter the output using several arguments like --resource-type, --output, --models, and more.

- --resource-type: Restricts the listing to the given resource types instead of the default set.

- --output: Controls the format of the output from the dbt ls command.

- --models: Lists only models.

dbt source

<a href="https://hevodata.com/learn/dbt-sources/" target="_blank" rel="noreferrer noopener">dbt source</a> enables you to work with source data. A general use case of dbt source is understanding the trend of updated sources over time. It includes a subcommand, dbt source freshness, to calculate the freshness of information of all the sources.
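Freshness checks are driven by a freshness block on the source, typically together with a loaded_at_field that tells dbt which timestamp column to inspect. A sketch of such a declaration follows; the jaffle_shop source, raw database, orders table, _etl_loaded_at column, and the 12 and 24 hour thresholds are all hypothetical.

```shell
# Write a hypothetical source definition with freshness thresholds.
mkdir -p models
cat > models/sources.yml <<'EOF'
version: 2

sources:
  - name: jaffle_shop
    database: raw
    loaded_at_field: _etl_loaded_at
    freshness:
      warn_after: {count: 12, period: hour}
      error_after: {count: 24, period: hour}
    tables:
      - name: orders
EOF

# Check freshness for all sources. This runs only when dbt is installed
# and the current directory is a dbt project root.
if command -v dbt >/dev/null 2>&1 && [ -f dbt_project.yml ]; then
  dbt source freshness
fi
```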

On execution of the dbt source freshness command, the freshness of source data will be stored in target/sources.json. However, you can override the destination of sources.json by using the --output argument.

dbt source freshness --output target/source_freshness.json

How to Use Operators to Run Dynamic dbt Commands

As you keep building your dbt projects, you might end up with numerous models. However, you would not always be required to run all the models at once. To select only specific models, you can use different selection arguments like --select, --exclude, --selector, and --defer. To further extend the capabilities of these selection arguments, you can use operators like +, @, *, union, and intersection.

Plus (+) Operator

Plus operator is used before or after the model name. It helps select the parents and children of the specified models. Plus operator is useful when you build models that are dependent on other models. + at the beginning would include all parents of the selected model and + at the end would select all the children.

Some examples of + operator:

dbt run --select my_model+

dbt run --select +my_model

dbt run --select +my_model+

At (@) Operator

The @ operator is similar to the plus operator, but it will also include the parents of the children of the selected model.

For example, dbt run --select @my_model would select my_model, its children, and the parents of those children.

Star (*) Operator

The star operator is used to match all the models in the selected package or directory.

Some examples of * operator:

dbt run --select snowplow.*

The aforementioned command runs all the models in the snowplow package.

dbt run --select finance.base.*

This command runs all the models in the models/finance/base directory.

Unions

A union selects every resource matched by any of the listed selectors. It is written by separating the selectors with a space.

dbt run --select +snowplow_sessions +fct_orders

The aforementioned command would run snowplow_sessions, fct_orders, and all the parents of both models.

Intersections

An intersection selects only the resources that are matched by all of the specified selectors. Intersections are denoted with a comma (,) and no spaces:

dbt run --select +snowplow_sessions,+fct_orders

The aforementioned command would only run the resources that are common to both selections, typically the shared parents of snowplow_sessions and fct_orders.

Conclusion

dbt commands are highly beneficial for handling both simple and complex tasks on dbt projects. While there are many dbt commands, we have listed some of the most commonly used ones. The commands listed in this article come up in almost any dbt project. Therefore, you should be familiar with them to enhance your data transformation workflows.

In case you want to integrate data into your desired Database/destination, then Hevo Data is the right choice for you! It will help simplify the ETL and management process of both the data sources and the data destinations. Sign up for a 14-day free trial today.

FAQ on dbt Commands

What does the dbt run command do?

The dbt run command executes the models defined in your dbt project, transforming your raw data into structured tables in your data warehouse. It compiles the SQL statements for each model and runs them against the target database, creating or updating tables as specified in your model definitions.

How to run the dbt test command?

To run the command, navigate to your dbt project directory in your command line and execute the command: dbt test

This command will run all tests defined in your project, including schema tests, data tests, and any custom tests you have implemented. This will help you ensure the quality and accuracy of your data models.
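As an illustration of what dbt test picks up, the sketch below declares two built-in generic tests, unique and not_null, on a hypothetical orders model and order_id column. The file name models/schema.yml is also an assumption; any YAML file under the models folder can hold these definitions.

```shell
# Write a hypothetical schema file with two generic tests.
mkdir -p models
cat > models/schema.yml <<'EOF'
version: 2

models:
  - name: orders
    columns:
      - name: order_id
        tests:        # dbt 1.8+ also accepts the key data_tests
          - unique
          - not_null
EOF

# Run only the tests attached to the orders model. This runs only when
# dbt is installed and the current directory is a dbt project root.
if command -v dbt >/dev/null 2>&1 && [ -f dbt_project.yml ]; then
  dbt test --select orders
fi
```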

What is dbt CLI?

The dbt CLI (Command Line Interface) is the command-line tool used to interact with dbt. It provides a way to run dbt commands, manage your dbt project, and execute tasks such as compiling models, running tests, and generating documentation directly from your terminal. This interface allows for automation and integration into data workflows.

What are the options for dbt?

dbt offers several options that can be used with its commands. Some common options include:

- --target: Specify the target profile to use from your profiles.yml.

- --models: Run specific models or a group of models using tags or paths.

- --exclude: Exclude specific models from being run.

- --full-refresh: Force a complete refresh of tables instead of incremental loads.

- --threads: Set the number of threads to run models in parallel.

- --vars: Pass variables to the dbt run.