Data has become the foundation of any successful business. The ability to efficiently extract, transform, and load data for analysis is crucial for making informed data-driven decisions. Therefore, the tools you choose for managing your business data are also extremely important.

This blog will discuss two such tools: dbt and Airflow. We will provide a comprehensive dbt vs airflow comparison, their features, pros and cons, and pricing, among other details. So, without further ado, let’s get started!

Table of Contents

What is dbt?

dbt is a transformation workflow that helps you complete more work while producing higher-quality results. You can use dbt to centralize analytics code and equip your data team with guardrails usually found in software engineering workflows. This allows for effective, collaborative work on data models, versioning them, testing and documenting your queries, and safely deploying them to production with monitoring and visibility.

Key Features

- Materialization: Handle boilerplate code to materialize queries as relations.

- Implement Macros: Users can implement Jinja and repeated SQL through code compilers.

- Auto-generate documentation: In dbt Cloud, you can auto-generate the documentation when your dbt project runs.

- Model documentation: dbt provides a mechanism to write, version-control, and share documentation for your dbt models.

- Snapshot data: dbt provides a mechanism for snapshotting raw data at a point in time.

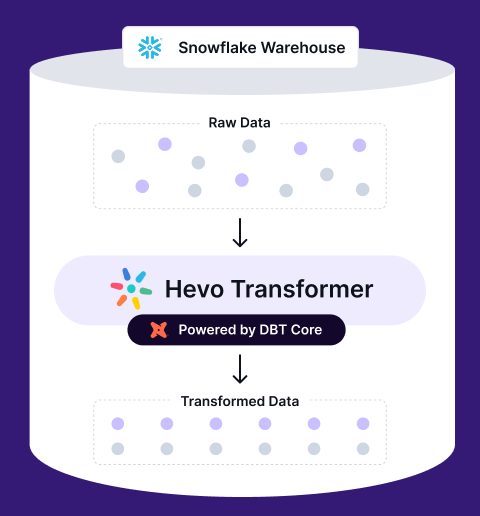

Harness the full power of dbt Core in a no-code solution with Hevo Transformer. Effortlessly join, modify, and aggregate data, while automating your transformations and tracking changes with Git.

✅ Instant Data Integration – Connect and auto-fetch tables in minutes

✅ Simplified dbt Workflows – Build, test, and deploy models without hassle

✅ Git Version Control – Collaborate and manage changes seamlessly

✅ Real-Time Previews – Transform, test, and deploy faster than ever

Use cases

dbt is designed for analysts. It is best for organizations with data warehouses that need to transform raw data into clean, usable datasets. For example, if your team often creates reports from data in BigQuery, Snowflake, or Redshift, dbt will make these transformations efficient.

- Data Modeling: dbt is used mainly to transform raw data into models used later for analytics and reporting.

- Incremental Loads: You can use dbt to build incremental models that update only changed rows in the data, saving processing time and resources.

- Testing: dbt comes with native testing, allowing data teams to ensure data quality through simple tests written and run as part of the transformation process.

dbt caters to customers in various industries, such as:

- Financial Services

- Healthcare

- IT & Software

- Oil & Gas

- Transportation

What is Apache Airflow?

Apache Airflow is an open-source platform for authoring, scheduling, and monitoring workflows through programming. It empowers users to set tasks and dependencies as code and run elaborate data pipes. No matter how complex, almost any workflow can be implemented using Python code within Apache Airflow. The tool’s flexibility has Airflow used at hundreds of companies worldwide for various use cases.

Use Cases

- Business Operations: Apache Airflow’s tool agonist and extensible quality make it a preferred solution for many business operations.

- ETL/ELT: Airflow allows you to schedule your DAGs in a data-driven way. It also uses Path API, simplifying interaction with storage systems such as Amazon S3, Google Cloud Storage, and Azure Blob Storage.

- Infrastructure Management: Setup/teardown tasks are a special type of task that can be used to manage the infrastructure needed to run other tasks.

- MLOps: Airflow has built-in features that include simple features like automatic retries, complex dependencies, and branching logic, as well as the option to make pipelines dynamic.

dbt vs Airflow: Key comparisons

| Aspect | dbt (Data Build Tool) | Apache Airflow |

| Primary Function | Data transformations within the warehouse. | Data orchestration and migration. |

| Ease of Use | Moderate, requires technical knowledge about SQL. | Complex setup and steep learning curve. |

| Key Capabilities | Data modeling, testing, documentation, Version Control, and CI/CD. | ETL orchestration, task scheduling, monitoring. |

| Use Cases | Data transformation, incremental loading. | ETL processes, complex workflows, automation. |

| Integration | Strong integration with data warehouses like BigQuery, Snowflake, and Redshift. Databricks etc. | Extensive integration with a wide range of services and systems. |

| Scalability | Scales well with the data warehouse but is limited by SQL performance. | Highly scalable, handles complex workflows across multiple environments. |

| Community & Support | Dedicated dbt Support team (dbt Cloud users), the Community Forum, dbt Community Slack, and documentation. | Slack community, newsletter, documentation, dev list. |

| Performance | High performance for SQL-based and Jinja transformations. | Performance depends on task complexity and infrastructure. |

| Cost | Provides three pricing models. | Free and open-source, with enterprise support available |

| Best For | Analysts and data teams focusing on data transformation. | Data engineers managing complex workflows and automation. |

Head-to-Head Comparison

Ease of Use

- dbt: dbt is relatively easy for someone who already knows SQL. Setup is easy, and it provides detailed documentation and Slack community support.

- Airflow: Apache Airflow has a steeper learning curve. It requires a deeper understanding of Python and workflow orchestration.

Functionality

- dbt: dbt transforms data inside a data warehouse. It’s modeled to turn raw data into a clean, usable dataset, so it’s a great fit for any analytics team. dbt also provides testing and documentation layers to help keep the data clean.

- Airflow: Airflow is a data migration tool that can handle ETL processes, automate tasks, and integrate with various services. Thus, it becomes the perfect solution for managing end-to-end data pipelines.

Scalability

- dbt: Debt’s scalability depends on the scalability of the underlying data warehouse. However, dbt’s scalability focuses more on data transformations than on orchestrating entire workflows.

- Airflow: Given enough computing power, Airflow can be scaled to handle infinite numbers of tasks and workflows.

Cost

- dbt: It offers three pricing plans:

- Enterprise: Contact Sales

- Team: $100/developer seat per month

- Developer: $0, free.

- Airflow: It is an open-source platform.

Performance

- dbt: Its performance depends on the workflow and underlying data warehouse. If the workflow and SQL query are optimized, dbt can effectively process large datasets.

- Airflow: Airflow’s performance is tied to the complexity of the tasks and the infrastructure it’s running on. It is designed to handle large-scale workflows, but its performance can vary based on how well the tasks are optimized and the resources available.

Integrations

- dbt: dbt integrates well with modern data warehouses like Redshift, BigQuery, Snowflake, Databricks, etc.

- Airflow: Airflow provides extensive integration capabilities to connect databases, cloud services, or APIs.

Use cases: When to Choose dbt vs When to Choose Airflow

Choose dbt if:

- You are mainly performing data transformation within a warehouse.

- Your team comprises of analysts with SQL knowledge who develop and maintain data models.

- You need to maintain quality data through built-in testing and documentation.

- You have relatively simple workflows that are mainly dedicated to transformation for analysis.

Choose Airflow if:

- You need to orchestrate complex workflows involving several tasks and systems.

- Need an organization-wide quick solution for automating repetitive tasks, like ETL jobs or machine learning pipelines.

- You want a tool to help you run large-scale data pipelines across different environments.

- You want to be able to integrate with a large number of data sources and services.

Limitations of dbt and Airflow

dbt

Although dbt provides extensive data transformation capabilities, it also has a few limitations.

- dbt focuses heavily on data transformation. Therefore, it is less useful in ETL processes due to its lack of extraction and loading capabilities.

- dbt heavily relies on SQL for transformations. While that is highly beneficial to teams acquainted with SQL, it can sometimes be a limitation if some complex transformation requires a programming language—like Python—that dbt doesn’t natively support.

- dbt is batch-oriented, making it less suitable for real-time or streaming data transformations.

- dbt lacks built-in orchestration capabilities, meaning it cannot manage or schedule jobs independently. For data orchestration, you will have to add tools such as Airflow.

Airflow

- Airflow can be challenging to set up and configure, especially for beginners, as it requires significant infrastructure and DevOps expertise.

- It has a steep learning curve. Learning how to write DAGs (Directed Acyclic Graphs) in Python and understanding the various operators and tasks can be daunting for people with no technical background.

- Unlike dbt, which includes built-in data testing and documentation features, Airflow doesn’t natively provide data quality checks or documenting models.

- It doesn’t solve the pain of multi-step pipelines that move data between several tables and require synchronization across steps.

Introducing Hevo- A Better and Easier Way to Migrate and Transform Your Data

While looking for an ETL tool that fits your business needs, dbt and Airflow have pros and cons. dbt is an extensive tool that provides excellent data transformation capabilities but lacks migration capabilities. On the other hand, Airflow is a highly flexible and customizable open-source tool, but it can be complex and have a steep learning curve.

Meet Hevo, an automated data pipeline platform that provides the best of both tools. Hevo offers:

- A user-friendly interface.

- Robust data integration and seamless automation.

- It supports 150+ connectors, providing all popular sources and destinations for your data migrations.

- The drag-and-drop feature and custom Python code transformation allow users to make their data more usable for analysis.

- A transparent, tier-based pricing structure.

- Excellent 24/7 customer support.

These features combine to place Hevo at the forefront of the ELT market.

Hevo just made data transformation easier than ever! Hevo Transformer integrates with dbt Core and Git, allowing you to transform data directly in your warehouse with speed, automation, and precision. No more inefficiencies—just seamless transformations.

Get Early Access for FreeConclusion

To summarize, both dbt and Airflow are effective tools for their specific use cases, but both lack in some way or another.

The choice between dbt and Airflow will depend on your specific needs, the complexity of your workflows, and your team’s skills. In many cases, using both tools together can provide a comprehensive solution that leverages each of their strengths.

If you are looking for a solution to streamline your data integration and transformation processes, consider exploring Hevo. Try Hevo’s 14-day free trial and experience seamless data migration.

Frequently Asked Questions

1. Is Airflow an ETL?

Airflow is not an ETL tool itself, but it is often used to orchestrate ETL processes. It schedules and manages the execution of tasks in data pipelines, including those involving ETL operations.

2. What Are the Problems with Airflow in Real-Time?

Airflow may face challenges in real-time scenarios, such as high latency in task execution, difficulty in handling dynamic workflows, limited real-time monitoring capabilities, and complexities in scaling for large workloads.

3. Is dbt an Orchestration Tool?

No, dbt (data build tool) is not an orchestration tool. It is a data transformation tool that uses SQL to transform data within a data warehouse. However, it is often used in conjunction with orchestration tools like Airflow.