Data engineers are the foundation of any data-driven initiative in an organization. However, the rapid growth in data collection is burdening them with several challenges. The most significant is streamlining the entire data flow at the pace at which data is collected.

Other problems include managing data pipelines and keeping downstream application workflows running without errors. This is where dbt helps data engineers eliminate major data handling problems. But first, let’s look at what dbt is and walk through its main use cases.

dbt Overview

dbt (data build tool) is a data transformation tool that allows you to transform, test, and document data within your data warehouse. It comes in two flavors: dbt Core and dbt Cloud. dbt Core is an open-source command-line tool for running your projects locally. dbt Cloud is a managed service that adds features such as job scheduling and documentation hosting to streamline data transformation workflows.

For transformation, dbt supports SQL and Python, enabling a broader range of professionals to work with quality data. You transform data by building dbt models. SQL-based models are select statements that define the transformation logic. Python-based models rely on a function named model that houses the underlying transformation logic. dbt also supports version control and documentation, so you can keep track of every change and help others collaborate effectively.
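To make the shape of a Python model concrete, here is a minimal sketch. The `model(dbt, session)` signature, `dbt.config`, and `dbt.ref` follow dbt's Python model interface. The upstream model name `stg_orders`, the `status` column, and the pandas-style filter are assumptions for illustration; the DataFrame type you actually receive depends on your data platform.

```python
# Sketch of a dbt Python model file, e.g. models/completed_orders.py
# (hypothetical file name). dbt calls model() and materializes the
# DataFrame that the function returns.


def model(dbt, session):
    # Ask dbt to materialize the result as a table.
    dbt.config(materialized="table")

    # dbt.ref() returns the upstream model as a DataFrame.
    # "stg_orders" is a hypothetical upstream model.
    orders = dbt.ref("stg_orders")

    # Assumption: a pandas-style DataFrame. Other DataFrame APIs
    # may need a different filter expression.
    return orders[orders["status"] == "completed"]
```

The equivalent SQL model would be a single select statement with a where clause; the Python form becomes useful once the logic needs libraries that SQL cannot reach.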

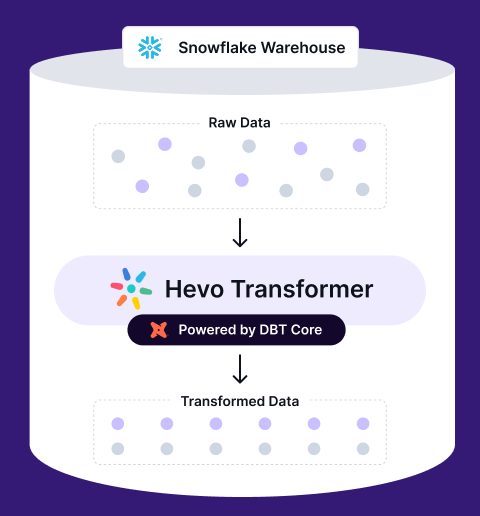

Data Engineering dbt Use Cases

Data engineers prefer dbt as it simplifies the transformation process in the data warehouse. Here are some of the critical roadblocks dbt can eliminate for users.

Eliminates Data Silos

Data silos are isolated pockets of data stored in disparate sources, and today they are common across industries. Silos often arise because organizations collect more data than they can use for analysis. As data grows in an organization, centralizing the information becomes critical for analysis. However, consolidating data is a complex process: you need to provide data access to every stakeholder while ensuring security.

Data from different departments, like sales and marketing, resides in various sources. Without centralization, marketing professionals will struggle to access sales data and vice versa. This reduces data engineers’ productivity, as they often have to build ad-hoc data models for different teams. Being reactive rather than proactive hurts the efficiency of the entire organization.

You can become proactive by creating centralized logic for different use cases within a data warehouse. dbt helps you define all your transformation logic as models that can be reused across multiple downstream processes.

Data Quality

In the digital world, data quality is one of the biggest challenges for data engineers. While data is available in plenty, the complexity of raw data makes it difficult for companies to make it fit for analysis. Data engineers spend most of their time updating and maintaining the data pipeline and identifying quality issues in it. Since data comes from multiple sources, the same records are often stored in different forms and structures. This makes it challenging to clean and enrich data to create a single source of truth.

dbt allows you to clean data with simple SQL statements or with Python. You can build complex models to filter, transform, and automate data cleaning tasks. The quality of data also depends heavily on its source. Since data collection sources keep changing, you might end up with a broken workflow whenever a source changes.

To avoid this, you can standardize source references with dbt. Once you declare dbt sources, a change in an underlying table only requires updating the source definition. You do not have to change code in the downstream processes, because their reference to the source stays the same.
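The indirection described here can be sketched in a few lines of Python. This is an analogy only, not how dbt is implemented: in dbt, sources are declared in YAML and referenced in models with `{{ source('source_name', 'table_name') }}`. The source name `crm`, the table names, and the helper functions below are hypothetical.

```python
# Illustrative analogy only: this mimics the indirection that dbt
# sources provide. It is not dbt's implementation.

# Hypothetical source definitions: (source, table) -> physical table.
SOURCES = {
    ("crm", "customers"): "raw_crm.customers_v1",
}


def source(source_name: str, table_name: str) -> str:
    """Resolve a logical source reference to its physical table name."""
    return SOURCES[(source_name, table_name)]


def active_customers_sql() -> str:
    """Downstream query that only knows the logical reference."""
    table = source("crm", "customers")
    return f"select id, email from {table} where is_active"


before = active_customers_sql()

# The upstream table moves: only the definition changes,
# while the downstream query code stays untouched.
SOURCES[("crm", "customers")] = "raw_crm_v2.customers"
after = active_customers_sql()
```

Every downstream query picks up the new location automatically, which is the property that keeps workflows from breaking when a source changes.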

Machine Learning Data Preparation

Data preparation for machine learning goes beyond basic data quality checks. Usually, data engineers perform feature engineering, which includes adding, deleting, and combining datasets. Creating new variables through feature engineering helps data engineers better represent the underlying business problem. The new variables are then used to train machine learning models, so that decisions draw on a broader range of variables.

With dbt, data engineers can easily split data or create new variables using Python’s rich ecosystem for data manipulation. dbt with Python lets data engineers explore new possibilities in existing data to improve the accuracy of machine learning models.
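As a rough sketch of what "splitting data" and "creating new variables" can look like, the example below derives one feature and assigns a reproducible train/test label. It uses plain dictionaries as a stand-in for the DataFrame a dbt Python model would receive, and the column names (`customer_id`, `order_count`, `total_spend`) and the 20 percent test share are assumptions.

```python
import zlib


def assign_split(record_id: str, test_percent: int = 20) -> str:
    """Deterministically assign a record to 'train' or 'test' by its id."""
    # crc32 is stable across runs, so the same id always lands
    # in the same bucket.
    bucket = zlib.crc32(record_id.encode("utf-8")) % 100
    return "test" if bucket < test_percent else "train"


def add_features(row: dict) -> dict:
    """Return a copy of the row with a derived variable and a split label."""
    enriched = dict(row)
    orders = row["order_count"]
    # New variable: average order value, guarding against division by zero.
    enriched["avg_order_value"] = row["total_spend"] / orders if orders else 0.0
    enriched["split"] = assign_split(str(row["customer_id"]))
    return enriched


# Hypothetical input rows.
rows = [
    {"customer_id": 1, "order_count": 4, "total_spend": 200.0},
    {"customer_id": 2, "order_count": 0, "total_spend": 0.0},
]
prepared = [add_features(r) for r in rows]
```

Hashing the id rather than sampling randomly keeps each record in the same split every time the model is rebuilt, which matters when a pipeline runs on a schedule.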

Brings Transparency

Analysts are often skeptical of the data they use to generate insights, usually because they are not aware of its source. They need to understand where the data comes from and what kinds of transformation it has gone through. Answers to these questions allow analysts to carry out business intelligence processes and generate reports confidently. Analysts can also persuade decision-makers to trust the insights when they know the ins and outs of the data source.

dbt eliminates guesswork and builds trust among stakeholders by providing data lineage and documentation. Data lineage enables you to track the data’s history, origin, and transformations. In dbt, lineage is represented by a Directed Acyclic Graph (DAG). The DAG also shows the dependencies among models, so you can build dbt projects with complete transparency.
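To illustrate what a lineage DAG gives you, the sketch below models a small graph with Python's standard `graphlib` module (Python 3.9+). It is not dbt's internal representation, and the model names are hypothetical. It shows two things a DAG makes possible: a valid build order, and the full set of upstream models behind a report.

```python
from graphlib import TopologicalSorter

# Hypothetical lineage: each model maps to the models it depends on.
lineage = {
    "stg_orders": set(),
    "stg_customers": set(),
    "customer_orders": {"stg_orders", "stg_customers"},
    "revenue_report": {"customer_orders"},
}

# static_order() yields every model after all of its dependencies.
build_order = list(TopologicalSorter(lineage).static_order())


def upstream(model: str) -> set:
    """Collect every model that feeds into `model`, directly or indirectly."""
    seen = set()
    stack = list(lineage[model])
    while stack:
        parent = stack.pop()
        if parent not in seen:
            seen.add(parent)
            stack.extend(lineage[parent])
    return seen


report_lineage = upstream("revenue_report")
```

Because the graph is acyclic, the build order always exists, and tracing `upstream("revenue_report")` answers the analyst's question of where a number came from.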

dbt documentation, in turn, helps you identify the types of transformations, the logic associated with each model, and more. It also includes information about tests, which helps you catch data discrepancies. Every business needs to make decisions based on accurate insights, and because data engineers can streamline the generation of documentation and data lineage with dbt, decision-makers gain trust in the data.

Test & Debug Data Pipelines

Data pipelines are an effective way to move data among different applications. But managing and maintaining them is a tedious task. Any change in an upstream process can impact the entire downstream process, so it is essential that issues in data pipelines are caught and resolved. Failing to address testing and debugging bottlenecks can hurt overall business performance.

dbt helps you test and debug data pipelines in near real time. You can use built-in dbt features to check the freshness of sources and to add tests, which come in two kinds: singular tests and generic tests. Source freshness checks and generic tests are configured in YAML, while singular tests are SQL statements saved as .sql files in the tests directory of your project. Data engineers can also catch edge cases by writing custom tests with Jinja and SQL.
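The core idea behind both kinds of test is the same: a test is a query that selects failing rows, and it passes when the query returns nothing. The sketch below demonstrates that idea with an in-memory SQLite database standing in for the warehouse. The `orders` table, its data, and the helper functions are hypothetical, and in dbt the reusable template would be written in SQL and Jinja rather than Python.

```python
import sqlite3

# In-memory SQLite stands in for the data warehouse.
conn = sqlite3.connect(":memory:")
conn.execute("create table orders (order_id integer, amount real)")
conn.executemany(
    "insert into orders values (?, ?)",
    [(1, 20.0), (2, 35.5), (2, 35.5), (3, -4.0)],
)


def failing_rows(sql: str) -> list:
    """Run a test query; the test passes when no rows come back."""
    return conn.execute(sql).fetchall()


# Singular-style test: a hand-written query for one specific rule.
negative_amounts = failing_rows(
    "select order_id from orders where amount < 0"
)


# Generic-style test: a reusable check parameterized by table and column.
def unique_test(table: str, column: str) -> list:
    return failing_rows(
        f"select {column} from {table} "
        f"group by {column} having count(*) > 1"
    )


duplicate_ids = unique_test("orders", "order_id")
```

Here both checks fail: `negative_amounts` contains order 3 and `duplicate_ids` contains order 2, which is exactly the information needed to start debugging.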

Simplifies Data Pipeline Workflows

As businesses scale, deploying new data pipelines and updating existing ones becomes difficult. A new deployment can cause problems in downstream applications and may break all the dependent models, halting the entire project. Before deploying, it is vital to check which models might be impacted. To address this problem, data engineers use CI/CD pipelines. However, writing custom CI/CD pipelines can be challenging.

With dbt Cloud, you can use built-in CI/CD features that don’t require extensive maintenance. You can set up CI/CD with GitHub to automate runs and check your data on pull requests. CI jobs can also be triggered through APIs to check the impacted models before deployment. In addition, you can standardize the formatting of the SQL code that gets pushed. This is beneficial for growing teams, as consistent code becomes vital when you build complex models.

All changes made to a dbt project are checked against the impacted models before they are deployed to production. Knowing exactly what each modification affects lets teams apply CI/CD principles and build iteratively. These features enable data engineers to build robust data pipelines with little to no downtime.
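Finding the impacted models amounts to walking the dependency graph downstream from whatever changed. The sketch below shows that traversal on a hypothetical project graph. It illustrates the idea only and is not dbt Cloud's implementation; dbt itself exposes this kind of selection through its node selection syntax, for example `state:modified+`.

```python
from collections import deque

# Hypothetical project graph: each model maps to the models
# that select from it.
children = {
    "stg_orders": ["customer_orders"],
    "stg_customers": ["customer_orders"],
    "customer_orders": ["revenue_report", "churn_features"],
    "revenue_report": [],
    "churn_features": [],
}


def impacted_models(changed: set) -> set:
    """Return the changed models plus everything downstream of them."""
    impacted = set(changed)
    queue = deque(changed)
    while queue:
        current = queue.popleft()
        for child in children.get(current, []):
            if child not in impacted:
                impacted.add(child)
                queue.append(child)
    return impacted


# A pull request that edits stg_customers affects these models.
to_rebuild = impacted_models({"stg_customers"})
```

A CI job only needs to build and test the models in `to_rebuild`, which keeps checks fast while still covering everything the change could break.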

What Does the Future of dbt Look Like

dbt is becoming central to how data-driven companies simplify data transformation tasks. Its adoption is poised to grow further with the advancements in dbt Cloud, which includes features like scheduling, documentation hosting, and a cloud IDE that extend the capabilities of dbt Core. dbt Cloud also integrates with different platforms to implement reverse ETL, monitor data feeds, create data catalogs, and orchestrate data pipelines.

In addition, dbt relies on popular languages like SQL and Python, which reduces the learning curve for data professionals. Its modular workflow also breaks complex transformations into smaller, more manageable pieces. As companies seek to speed up their data transformation strategies, dbt is becoming the go-to choice for reducing data engineers’ workloads. With the volume of raw data continuing to grow, dbt could become the core of every data engineering team that has to support complex transformations.

Conclusion

dbt is eliminating major roadblocks for data engineers so that they can focus on building a better foundation for data-driven initiatives. As dbt enables data engineers to transform data and centralize it for better collaboration, it is being deployed in many data-driven companies. The open-source community is further helping the dbt Core project progress and simplify data engineers’ lives.

Eager to dive into dbt Python models? Check out our guide to learn how Python can be leveraged within dbt for advanced data modeling and transformations. And if you’re looking for an effortless way to integrate and transform your data, Hevo’s no-code data pipeline seamlessly integrates with dbt, enabling automated, scalable, and real-time transformations—without the engineering overhead. Sign up for a 14-day free trial.

FAQ

1. Is dbt an ETL tool?

dbt (data build tool) is not traditionally considered an ETL (Extract, Transform, Load) tool but rather a tool that focuses specifically on the “Transform” part of the ETL process.

2. What is the main purpose of dbt?

The main purpose of dbt is to transform data inside the data warehouse, with testing and documentation built into the same workflow.

3. Who needs dbt?

The following groups benefit from dbt:

- Data analysts and data scientists

- Data engineers

- Data teams in organizations

- Business intelligence teams