Snowflake launched its new open-source, “state-of-the-art” large language model, Snowflake Arctic, in April 2024. The data cloud company announced that the primary idea behind this innovation was to simplify adopting AI on enterprise data. Alongside the LLM, the Arctic family includes a collection of embedding models that help you extract valuable insights from your data efficiently.

This blog serves as a practical guide to Snowflake Arctic. It gives you an overview of the model and its architecture, walks through setup with a real-world use case, shows how to integrate Arctic with Streamlit, and closes with a look at future developments and some frequently asked questions.

What is Snowflake Arctic?

Snowflake Arctic is a set of comprehensive tools designed to facilitate the implementation and integration of AI in the Snowflake Data Cloud. It provides a range of embedding models that enable us to extract meaningful insights from our data.

It also includes an adaptable general-purpose large language model (LLM) that can handle a variety of tasks, such as generating programming code and SQL queries and following intricate instructions.

One of its best qualities is its smooth integration with the Snowflake Data Cloud. Thanks to this tight coupling, you can use AI directly within your current data architecture, which keeps the experience both secure and efficient.

Components of Arctic’s Architecture

Three design choices define the Snowflake Arctic architecture:

- Arctic is based on a Dense-MoE hybrid transformer design, which combines a dense transformer with a Mixture of Experts (MoE) component. This combination is what enables efficient scaling and adaptability.

- Arctic has about 480 billion total parameters, most of them distributed across 128 experts. The experts are learned during training, and a router decides which of them process each input.

- Arctic uses a top-2 gating technique that selects the two most pertinent experts for each input, so only about 17 billion parameters are active at a time. This design maintains top-tier performance across a broad spectrum of enterprise-focused operations while dramatically reducing computational overhead.
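To make top-2 gating more concrete, here is a minimal sketch in plain Python. It is a toy illustration, not Arctic's actual implementation: the four "experts" are simple scaling functions, the router scores are made up, and renormalizing the two selected weights is one common variant that we assume here. The parameter arithmetic at the end assumes the split Snowflake has described publicly (a dense model of roughly 10B parameters plus 128 experts of roughly 3.66B each), so treat the numbers as approximate.

```python
import math


def top2_gate(router_logits):
    """Return [(expert_index, weight), (expert_index, weight)] for the two best experts."""
    # Softmax over all experts (subtracting the max keeps exp() numerically stable).
    peak = max(router_logits)
    exps = [math.exp(x - peak) for x in router_logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Keep only the two most probable experts.
    top2 = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:2]
    # Assumption: renormalize so the two selected weights sum to 1.
    norm = probs[top2[0]] + probs[top2[1]]
    return [(i, probs[i] / norm) for i in top2]


def moe_layer(x, experts, router_logits):
    """Weighted sum of the outputs of the two selected experts only."""
    return sum(weight * experts[i](x) for i, weight in top2_gate(router_logits))


# Toy setup: expert k simply multiplies its input by k (hypothetical experts).
experts = [lambda x, k=k: k * x for k in range(4)]
router_logits = [0.1, 2.0, 0.3, 1.5]  # made-up router scores for one input

selected = top2_gate(router_logits)
output = moe_layer(1.0, experts, router_logits)
print("selected experts and weights:", selected)
print("layer output:", output)

# Rough parameter arithmetic (approximate figures, in billions).
DENSE_B = 10.0      # dense transformer part
EXPERT_B = 3.66     # one expert
NUM_EXPERTS = 128
total_b = DENSE_B + NUM_EXPERTS * EXPERT_B  # all experts counted
active_b = DENSE_B + 2 * EXPERT_B           # only the two selected experts
print(f"total ~{total_b:.2f}B, active ~{active_b:.2f}B")
```

The point of the sketch is that the other experts are never evaluated for a given input, which is where the savings in compute come from.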

Key Features of Arctic

- Intelligent: Arctic leverages advanced machine learning and AI capabilities to provide critical insights and predictions. It enables businesses to derive actionable insights from their data with minimal manual intervention.

- Efficient: Arctic is optimized for high performance and scalability, allowing users to process and analyze large datasets quickly. It supports seamless integration with various data sources, reducing the time and effort required for data preparation and analysis.

- Enterprise AI: Arctic is designed specifically to meet the demands of commercial enterprises, providing excellent outcomes for activities such as data analysis, process automation, and decision support.

- Open Source: The platform embraces open-source technologies, enabling users to leverage various open-source tools and libraries for data processing, analysis, and machine learning. It’s available under an Apache 2.0 license, granting everyone access to its code and model weights.

Setup with a real-world Use Case

To use Arctic, you must ensure your Snowflake account is based in one of the regions where Snowflake Cortex LLM functions are available. You can check the list of supported regions in the official Snowflake documentation.

To understand Arctic’s functionalities, we will utilize synthetic call transcript data, mimicking text sources commonly overlooked by organizations, including customer calls/chats, surveys, interviews, and other text data generated by marketing and sales teams.

Step 1: Create a table and load the data

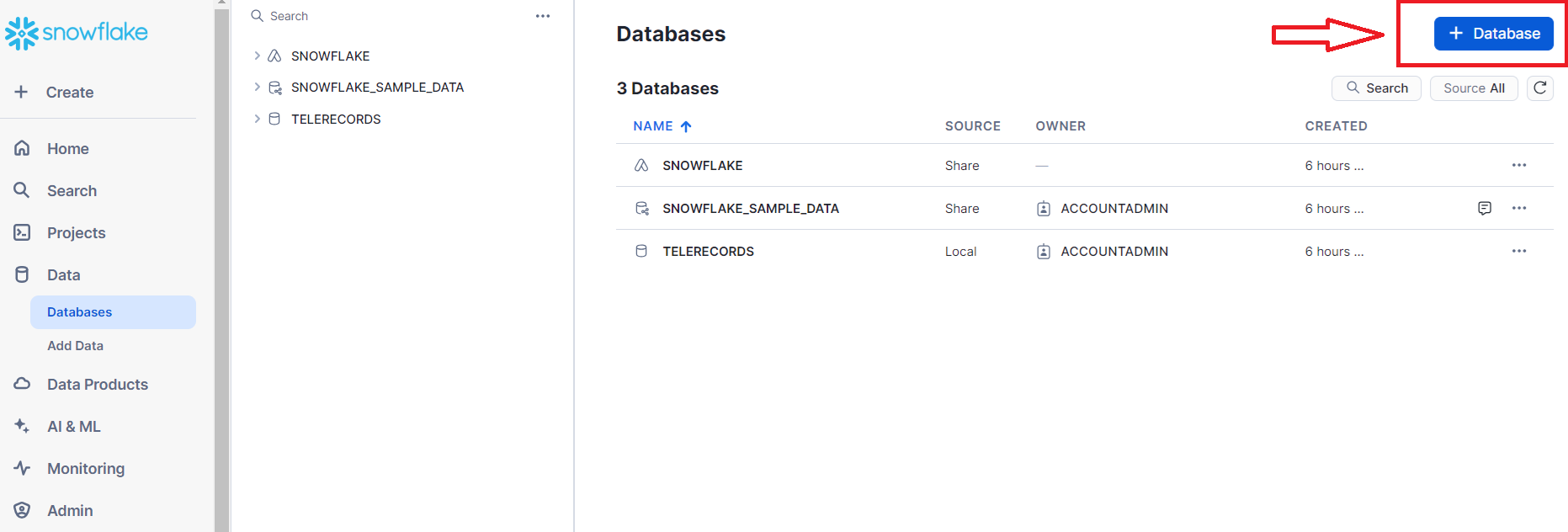

Before running the steps below, create a new database: log in to your Snowflake account, go to Data → Database, and click the button for creating a database.

Then execute the following steps in the newly created database.

1. a) Define the file format

    CREATE OR REPLACE FILE FORMAT csvformat
    TYPE = 'CSV'
    FIELD_OPTIONALLY_ENCLOSED_BY = '"'
    SKIP_HEADER = 1
    NULL_IF = ('NULL', 'null');

1. b) Create or Replace the stage

    CREATE OR REPLACE STAGE call_transcripts_data_stage
    FILE_FORMAT = csvformat
    URL = 's3://sfquickstarts/misc/call_transcripts/';

1. c) Create or Replace a new table to load the data into

    CREATE OR REPLACE TABLE CALL_TRANSCRIPTS (
        date_created date,
        language varchar(60),
        country varchar(60),
        product varchar(60),
        category varchar(60),
        damage_type varchar(90),
        transcript varchar
    );

1. d) Copy the data from the stage into the newly created table

    COPY INTO CALL_TRANSCRIPTS
    FROM @call_transcripts_data_stage;

Once you load the data successfully into the table, you should be able to preview your data in the following manner:
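If you prefer to check the load from Python rather than the UI, a small helper along these lines could work. This is a sketch under assumptions: `preview_sql` and `preview_table` are hypothetical helper names, and `session` is expected to be a Snowpark session object whose `sql(...).collect()` call returns rows. The identifier check is deliberately strict because table names cannot be passed as bind values.

```python
import re

# Assumption: plain unquoted identifiers only (letters, digits, _ and $).
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def preview_sql(table_name, limit=5):
    """Build a SELECT that returns the first few rows of a table."""
    if not _IDENTIFIER.match(table_name):
        raise ValueError(f"unexpected table name: {table_name!r}")
    if not isinstance(limit, int) or limit < 1:
        raise ValueError("limit must be a positive integer")
    return f"SELECT * FROM {table_name} LIMIT {limit}"


def preview_table(session, table_name, limit=5):
    """Run the preview query on a Snowpark session and return the rows."""
    return session.sql(preview_sql(table_name, limit)).collect()


# The generated statement for the table created in step 1c.
print(preview_sql("CALL_TRANSCRIPTS", 5))
```

Inside Snowflake, the `session` argument would typically come from `get_active_session()`, the same call used in the Streamlit code later in this post.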

Step 2: Using Arctic’s functionalities

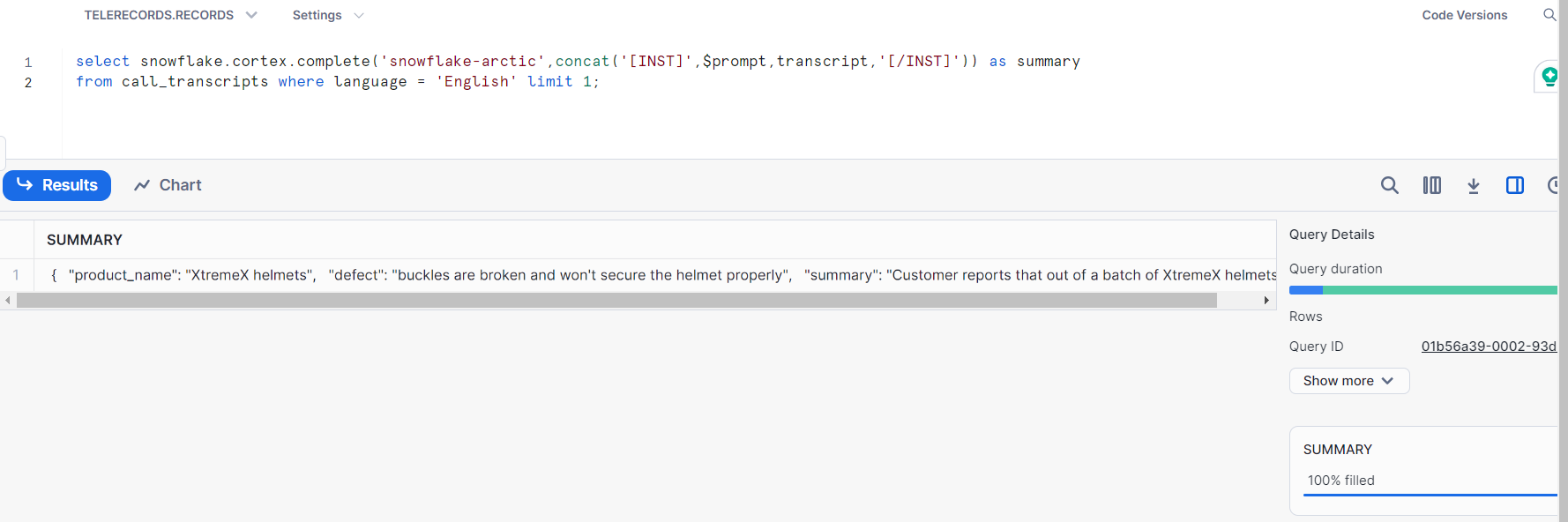

To explore Arctic’s functionality, we are going to take a call transcript record, pull out the product name, identify which part of the product was defective, and produce a summary of fewer than 200 words.

To accomplish this, we will be using the snowflake.cortex.complete function.

We will select an Arctic model and prompt it to customize the output.

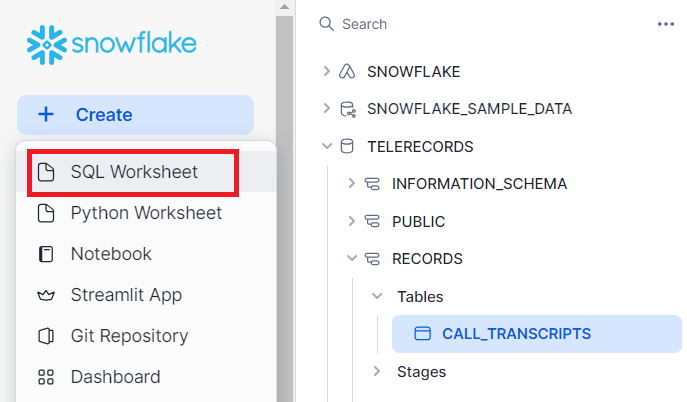

2. a) Go to +Create → SQL Worksheet

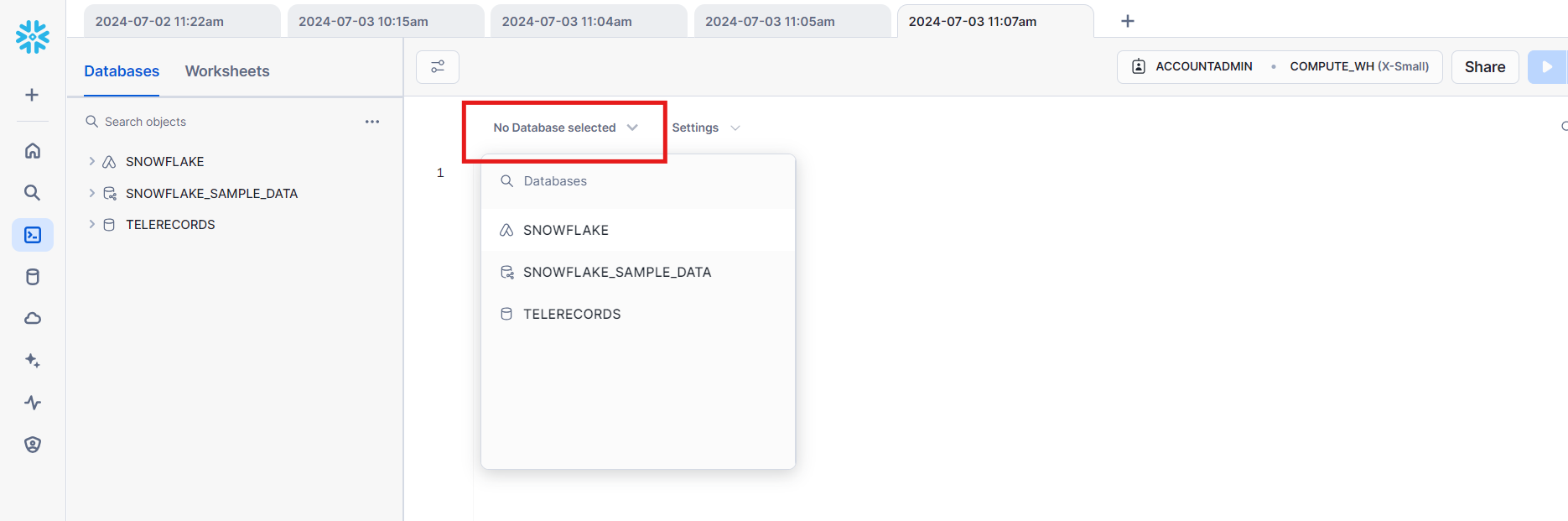

2. b) Select your Database → Table from the dropdown menu.

2. c) Set the Prompt according to your requirement using the following syntax

    SET prompt =
    '###
    Summarize this transcript in less than 200 words.
    Put the product name, defect and summary in JSON format.
    ###';

2. d) Write the query to execute using the snowflake.cortex.complete function

    select snowflake.cortex.complete('snowflake-arctic', concat('[INST]', $prompt, transcript, '[/INST]')) as summary
    from call_transcripts where language = 'English' limit 1;

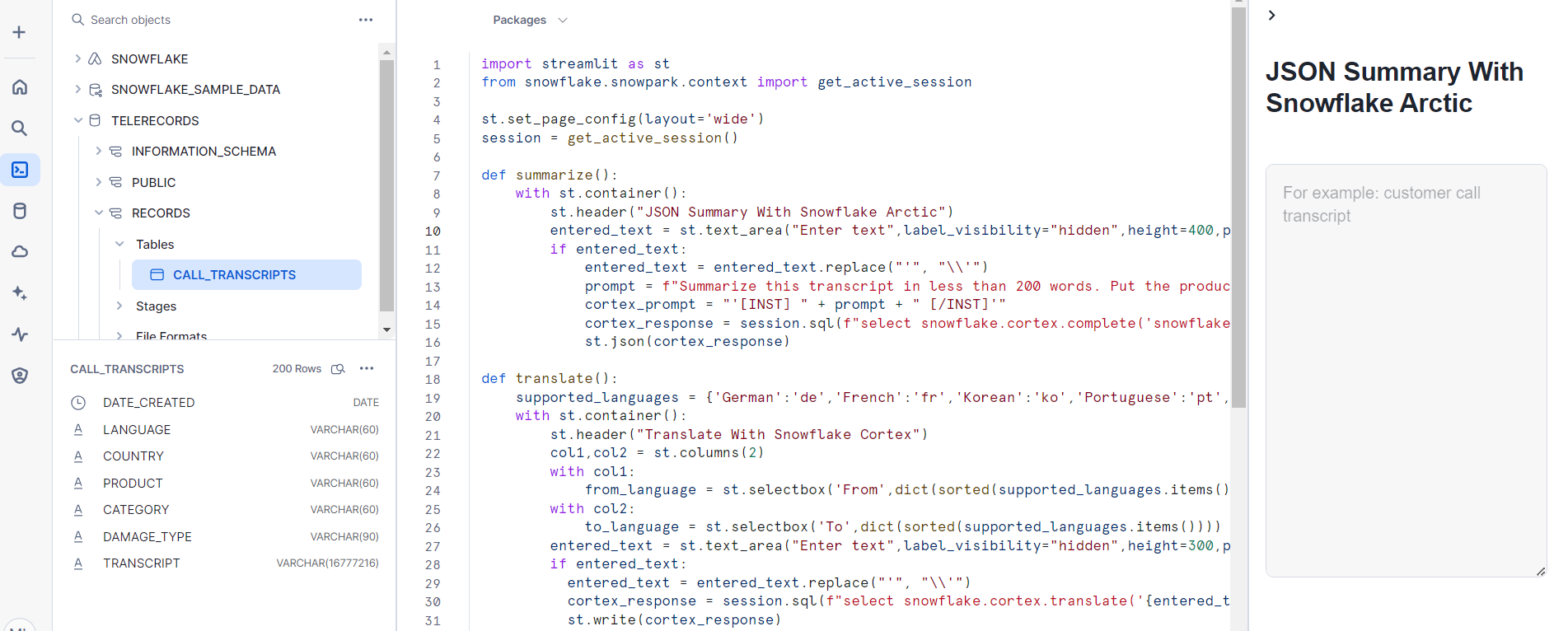
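Because the prompt asks for JSON, it is worth parsing the model's answer defensively: LLMs sometimes wrap the JSON in extra sentences. The sketch below mirrors the `concat('[INST]', $prompt, transcript, '[/INST]')` pattern from the query above and then extracts the first JSON object from a response. The helper names and the sample response text are made up for illustration; real Arctic output may look different.

```python
import json


def build_prompt(instruction, transcript):
    """Wrap the instruction and transcript in [INST] tags, like the SQL concat above."""
    return f"[INST]{instruction}{transcript}[/INST]"


def extract_json(response_text):
    """Return the first JSON object found in the response, or None if there is none."""
    decoder = json.JSONDecoder()
    start = response_text.find("{")
    while start != -1:
        try:
            # raw_decode parses one JSON value starting at `start` and ignores trailing text.
            obj, _end = decoder.raw_decode(response_text, start)
            if isinstance(obj, dict):
                return obj
        except json.JSONDecodeError:
            pass  # not valid JSON from this brace; try the next one
        start = response_text.find("{", start + 1)
    return None


# Hypothetical response text, invented for this example.
sample_response = (
    "Here is the summary you asked for:\n"
    '{"product": "Example Widget", "defect": "broken strap", '
    '"summary": "Customer reported a broken strap and asked for a replacement."}\n'
    "Let me know if you need anything else."
)

parsed = extract_json(sample_response)
print(parsed["product"], "|", parsed["defect"])
```

Returning `None` instead of raising keeps the caller in control of what to do with a malformed answer, for example retrying the request or logging the raw text.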

Integrating Arctic with Streamlit

In this section, we will use Streamlit, a Python library that facilitates the development of interactive web applications, to create an interface for our search queries. We will use the Streamlit app feature, which is already available within the Snowflake environment.

- First, go to +Create → Streamlit app.

- Then, use the following code for your Streamlit application.

    import streamlit as st
    from snowflake.snowpark.context import get_active_session

    st.set_page_config(layout='wide')
    session = get_active_session()

    def summarize():
        with st.container():
            st.header("JSON Summary With Snowflake Arctic")
            entered_text = st.text_area("Enter text",label_visibility="hidden",height=400,placeholder='For example: customer call transcript')
            if entered_text:
                # Escape single quotes so the text can sit inside a SQL string literal.
                entered_text = entered_text.replace("'", "\\'")
                prompt = f"Summarize this transcript in less than 200 words. Put the product name, defect if any, and summary in JSON format: {entered_text}"
                cortex_prompt = "'[INST] " + prompt + " [/INST]'"
                cortex_response = session.sql(f"select snowflake.cortex.complete('snowflake-arctic', {cortex_prompt}) as response").to_pandas().iloc[0]['RESPONSE']
                st.json(cortex_response)

    def translate():
        supported_languages = {'German':'de','French':'fr','Korean':'ko','Portuguese':'pt','English':'en','Italian':'it','Russian':'ru','Swedish':'sv','Spanish':'es','Japanese':'ja','Polish':'pl'}
        with st.container():
            st.header("Translate With Snowflake Cortex")
            col1,col2 = st.columns(2)
            with col1:
                from_language = st.selectbox('From',dict(sorted(supported_languages.items())))
            with col2:
                to_language = st.selectbox('To',dict(sorted(supported_languages.items())))
            entered_text = st.text_area("Enter text",label_visibility="hidden",height=300,placeholder='For example: customer call transcript')
            if entered_text:
                entered_text = entered_text.replace("'", "\\'")
                cortex_response = session.sql(f"select snowflake.cortex.translate('{entered_text}','{supported_languages[from_language]}','{supported_languages[to_language]}') as response").to_pandas().iloc[0]['RESPONSE']
                st.write(cortex_response)

    def sentiment_analysis():
        with st.container():
            st.header("Sentiment Analysis With Snowflake Cortex")
            entered_text = st.text_area("Enter text",label_visibility="hidden",height=400,placeholder='For example: customer call transcript')
            if entered_text:
                entered_text = entered_text.replace("'", "\\'")
                cortex_response = session.sql(f"select snowflake.cortex.sentiment('{entered_text}') as sentiment").to_pandas()
                st.caption("Score is between -1 and 1; -1 = Most negative, 1 = Most positive, 0 = Neutral")
                st.write(cortex_response)

    # Map each sidebar entry to the function that renders that page.
    page_names_to_funcs = {
        "JSON Summary": summarize,
        "Translate": translate,
        "Sentiment Analysis": sentiment_analysis,
    }

    selected_page = st.sidebar.selectbox("Select", page_names_to_funcs.keys())
    page_names_to_funcs[selected_page]()

- Your output screen should look something like this after running the Streamlit application successfully.

Learn how to create Streamlit apps on Snowflake with this step-by-step guide, and explore how it complements Snowflake Arctic for building advanced LLM applications.
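One detail worth a second look in the app above is how user text ends up inside the SQL statement. The app escapes single quotes with a backslash, which leaves backslashes in the input untouched. A slightly more defensive sketch, assuming Snowflake's standard rules for single-quoted string constants (a quote is doubled, a backslash is written as two backslashes), could look like this. Both helper names are hypothetical.

```python
def sql_string_literal(text):
    """Quote arbitrary text as a single-quoted SQL string constant."""
    # Escape backslashes first, then double any single quotes.
    escaped = text.replace("\\", "\\\\").replace("'", "''")
    return f"'{escaped}'"


def complete_query(model, prompt):
    """Build the cortex.complete statement used by the summarize page."""
    wrapped = "[INST] " + prompt + " [/INST]"
    return (
        "select snowflake.cortex.complete("
        f"{sql_string_literal(model)}, {sql_string_literal(wrapped)}) as response"
    )


# A transcript containing both a quote and a backslash.
print(complete_query("snowflake-arctic", "The customer's path was C:\\temp"))
```

Recent Snowpark versions also appear to accept bind parameters through `session.sql(query, params=[...])`; if your version supports that, passing the user text as a bind value is usually the safer choice than building the literal yourself.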

Future of Snowflake Arctic

Arctic’s strong performance and cost efficiency have raised the bar for language models. Future versions may bring more sophisticated natural language comprehension, better multitask learning, and stronger support for domain-specific applications. As Snowflake continues to innovate, users can anticipate even more capable and adaptable features.

Frequently Asked Questions

- Is Snowflake Arctic free?

The Arctic model itself is free to use: its code and model weights are available under an Apache 2.0 license. Running it through Snowflake Cortex is a different matter, since Cortex LLM functions consume Snowflake credits based on usage. Current rates can be checked on Snowflake’s official website or by contacting their sales team.

- Differences Between Snowflake Arctic and DBRX

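Both are open Mixture of Experts (MoE) large language models released in spring 2024. Snowflake Arctic has about 480 billion total parameters spread across 128 experts and activates roughly 17 billion of them per input through top-2 gating. DBRX, from Databricks, has 132 billion total parameters, of which 36 billion are active for any input, and routes each token to 4 of its 16 experts. Arctic is released under an Apache 2.0 license, while DBRX uses the Databricks Open Model License.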

- Does Snowflake Use AI?

Yes, Snowflake integrates AI capabilities into its platform. It provides tools and features that enable the development and deployment of machine learning models, including data preparation, model training, and inference. Snowflake Cortex and Snowpark are examples of Snowflake’s efforts to support AI and machine learning within its platform.

- Differences Between Snowflake Arctic and Llama3

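Both are openly available large language models, but they differ in architecture and licensing. Snowflake Arctic is a Mixture of Experts model with about 480 billion total parameters and roughly 17 billion active per input, released under Apache 2.0 and aimed at enterprise tasks such as SQL generation, coding, and instruction following. Llama 3, from Meta, is a family of dense transformer models (8 billion and 70 billion parameters at launch) released under Meta’s own community license.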