Easily move your data from GA4 to Databricks to enhance your analytics capabilities. With Hevo’s intuitive pipeline setup, data flows in real-time. Check out our 1-minute demo below to see the seamless integration in action!

Replicating your Google Analytics 4 data to Databricks can help you integrate advanced user interaction data and the powerful processing capabilities of Databricks to enhance your data analysis. While this integration comes with numerous benefits, the process of integrating these tools can often be confusing for users who are new to data analytics. Therefore, in this blog, we have provided an easy step-by-step guide on how you can integrate your data from Google Analytics 4 to Databricks.

Table of Contents

Benefits of Replicating Data From Google Analytics 4 to Databricks

- Get more insights into Customer Journeys: Including Google Analytics 4 data sources in other sources within Databricks gives a much more complete view of customer journeys. You would be able to combine ad revenue income with CRM data for more comprehensive KPI analysis.

- Get a Clear View of the Attribution Model: You will be able to measure and then tune how the best model affects your customer touchpoints through your Google Analytics 4 data sitting inside of Databricks.

- Multiple Cloud Support: Native use of BigQuery with Google Analytics 4 allows for free data transfer to Google BigQuery. But again, that works only in the context of Google Cloud. Google Analytics 4 to Databricks allows you to migrate data on multiple cloud stacks, such as AWS, Azure, and Google Cloud, with seamless integration and eliminates rebuilding processes on different platforms.

- Support for Autoscaling: BigQuery has some limitations on data scaling and controlled slot allocation; this slows down the query-processing speed. Autoscaling would aid Databricks in automatically resizing the clusters according to the workload demands to increase the speed of query execution with the growing workloads.

- Build Machine Learning Models: Databricks provides broad support for a range of ML tools, including Apache Spark, Python, and scikit-learn. This capability gives you a much higher-level, customizable, and more powerful predictive analytics, which really elevates your data analytics capabilities.

Looking for a no-code platform to connect your source with the destination? Hevo is here to save you from the task of coding manually with 150+ integrations(60+ free) and a no-code platform. Here’s why you should try Hevo:

- Map and match your data fields hassle-free with Hevo’s automapping feature.

- Customize your transformations to fit your business needs.

- Rely on real engineers with Hevo’s Live Chat support available 24/5.

Explore Hevo’s features and discover why it is rated 4.3 on G2 and 4.7 on Software Advice for its seamless data integration. Try out the 14-day free trial today to experience hassle-free data integration.

Get Started with Hevo for FreeReplicate Data from Google Analytics 4 to Databricks Using Hevo

Step 1: Configure Google Analytics 4 as your Source

Note: You can select from the “Historical Sync Duration” according to your requirements, where the default duration is six months. You can enable the “Pivot Report” option if you want to create an aggregated report based on the dimensions and metrics selected.

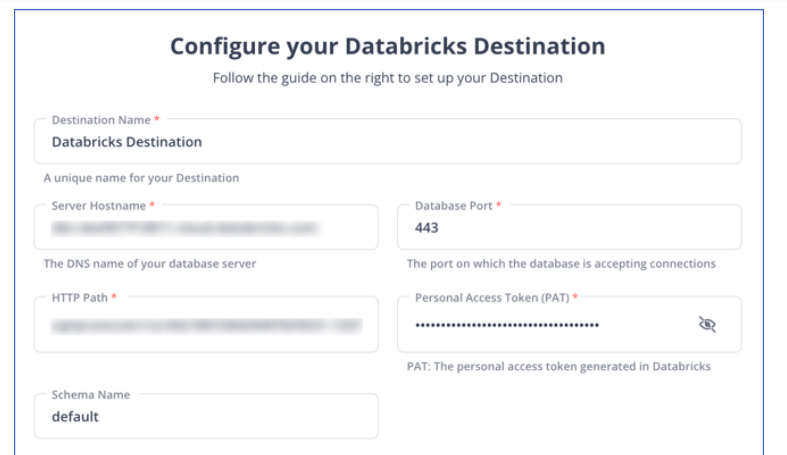

Step 2: Configure Databricks as your Destination

All Done to Setup Your ETL Pipeline!

You can also learn more about:

Conclusion

In this article, we have provided you with a guide for replicating data from Google Analytics 4 to Databricks. Including Google Analytics 4 data sources in other sources within Databricks gives a much more complete view of customer journeys. You would be able to combine ad revenue income with CRM data for more comprehensive KPI analysis. In Databricks clusters, size will automatically be resized based on workflow demand. As a result, your query processing time is now optimized with support from ML-powered predictive analytics tools. Google Analytics 4 to Databricks integration will complement your decision-making capabilities with the much-needed finesse.

Want to take Hevo Data for a ride? Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

Frequently Asked Questions

1. How do you access Google Analytics 4 data?

Access your data from Google Analytics 4 through:

-Log into Google Analytics

-Click “All Web Site Data” to locate your GA4 property

-Click to select the GA4 property to view new data

2. How does Google Analytics 4 collect data?

Shortline codes of Javascript or HTML called tags are used by Google Analytics 4 to collect data.

3. What is the difference between Google Analytics and GA4?

Google Analytics, using the Universal Analytics approach, employs a session-based tracking model based on sessions and page views. In contrast, GA4 employs an event-based model that tracks all user interactions in a much more holistic way.