Integrating data from HubSpot to Amazon Aurora enables businesses to streamline their customer data management and enhance reporting capabilities. This blog will explore:

- Why integrating HubSpot with Amazon Aurora is beneficial.

- Different methods to set up this integration.

- Step-by-step guidance on using CSV files and automation tools.

By the end, you’ll know how to efficiently transfer your HubSpot data to Amazon Aurora for optimized database management.

Overview of HubSpot

HubSpot is an all-in-one inbound marketing and sales software platform that helps companies attract visitors, convert leads, and close customers. It is a cloud-based platform that provides tools for marketing, sales, service, web content, and operations teams to work together seamlessly. Key features of HubSpot include:

- Customer service tools for ticketing, self-service, and support analytics

- A centralized CRM to manage customer data and interactions

- Tools for creating and managing websites, blogs, landing pages, and email campaigns

- Functionality for social media posting, ad campaigns, and marketing analytics

- Sales enablement features like lead engagement, deal management, and reporting

Method 1: Integrate Data from HubSpot to Amazon Aurora Using an Automated ETL Tool

Hevo Data, an Automated Data Pipeline, provides you with a hassle-free solution to connect HubSpot to Amazon Aurora within minutes with an easy-to-use no-code interface. Hevo is fully managed and completely automates the process of not only loading data from HubSpot but also enriching the data and transforming it into an analysis-ready form without having to write a single line of code.

Method 2: Using HubSpot APIs to Move Data from HubSpot to Amazon Aurora

This method requires you to write custom code against the HubSpot APIs, so it is more time-consuming and tedious to implement. It is suitable for users with a technical background.

How to Connect HubSpot to Amazon Aurora?

Method 1: Integrate Data from HubSpot to Amazon Aurora Using an Automated ETL Tool

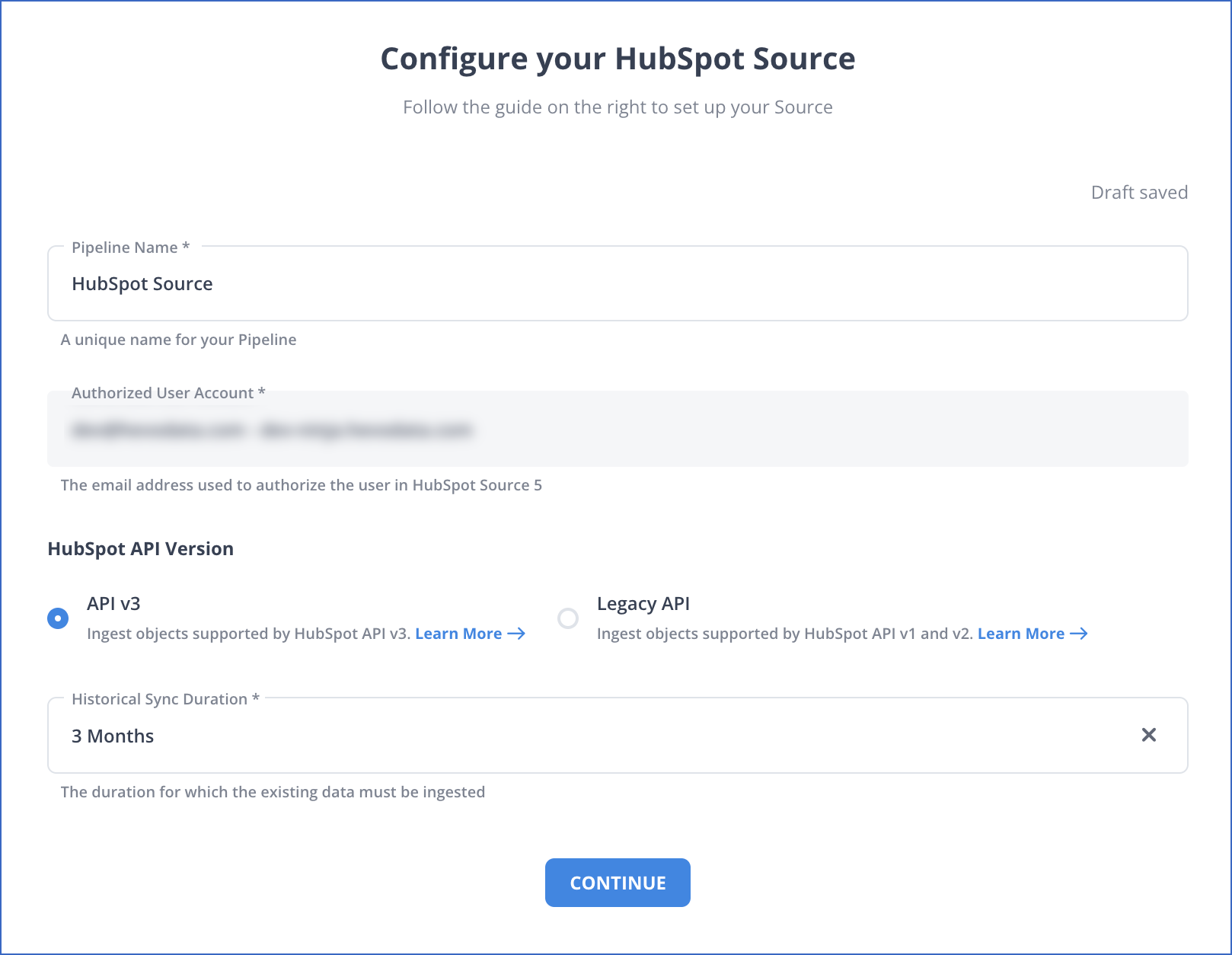

Step 1.1: Configure HubSpot as Your Source

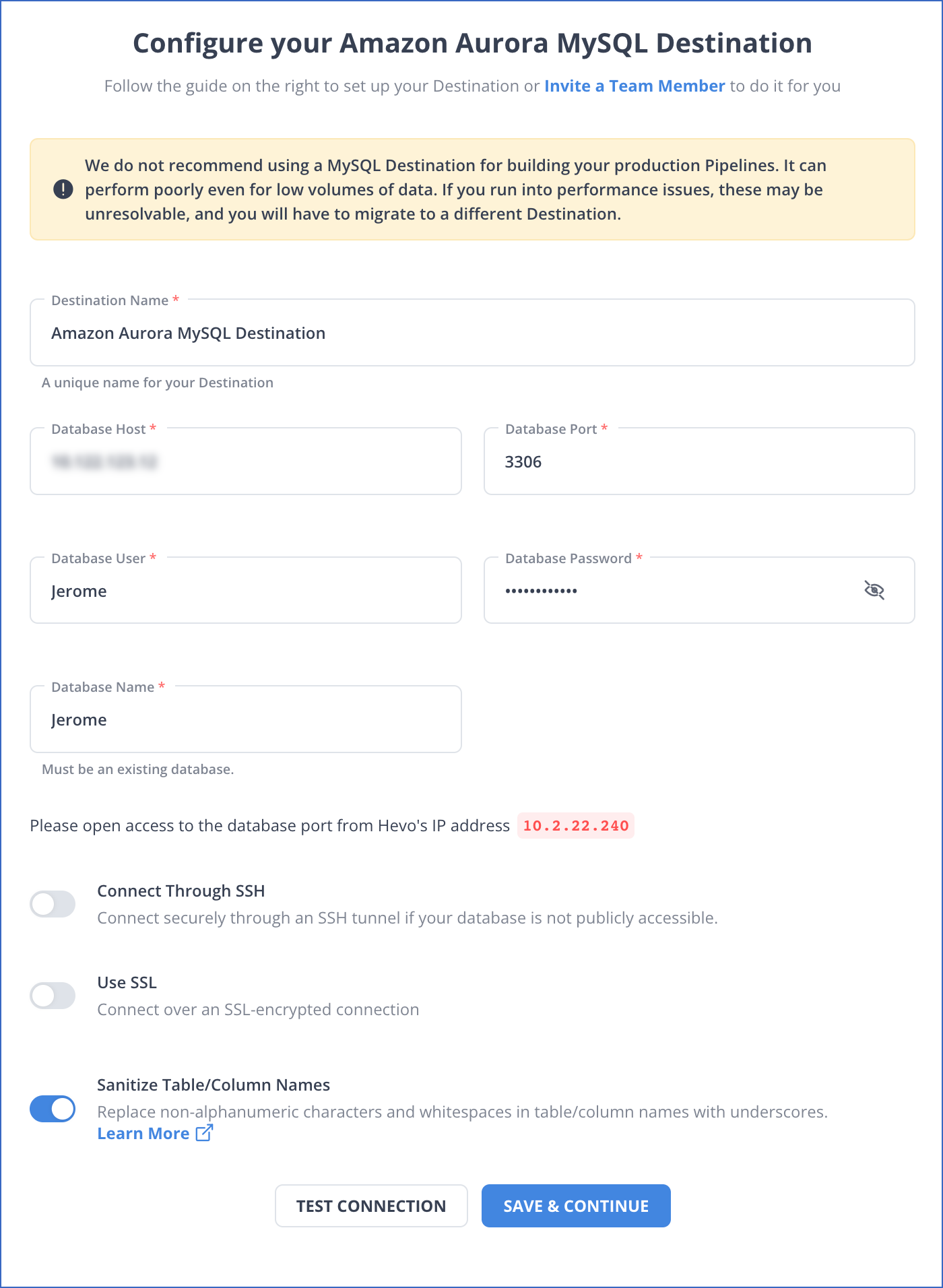

Step 1.2: Configure Amazon Aurora as Your Destination

That’s it. Your ETL pipeline is now set up!

Salient Features of Hevo

- Fully Managed: Hevo requires no management and maintenance as it is a fully automated platform.

- Data Transformation: Hevo provides a simple interface to perfect, modify, and enrich the data you want to transfer.

- Faster Insight Generation: Hevo offers near real-time data replication, so you have access to real-time insight generation and faster decision making.

- Schema Management: Hevo can automatically detect the schema of the incoming data and map it to the destination schema.

- Scalable Infrastructure: As your sources and the volume of data grow, Hevo scales horizontally, handling millions of records per minute with very little latency.

- Live Support: The Hevo team is available round the clock to extend exceptional support to its customers through chat, email, and support calls.

Method 2: Using HubSpot APIs

You can migrate data from HubSpot to Amazon Aurora using HubSpot APIs. Follow these steps for a successful migration:

Step 2.1: Create a HubSpot developer account.

- Go to the HubSpot Developer website and click the Create Account button.

- Provide your Name, email address, and password, and create an Account.

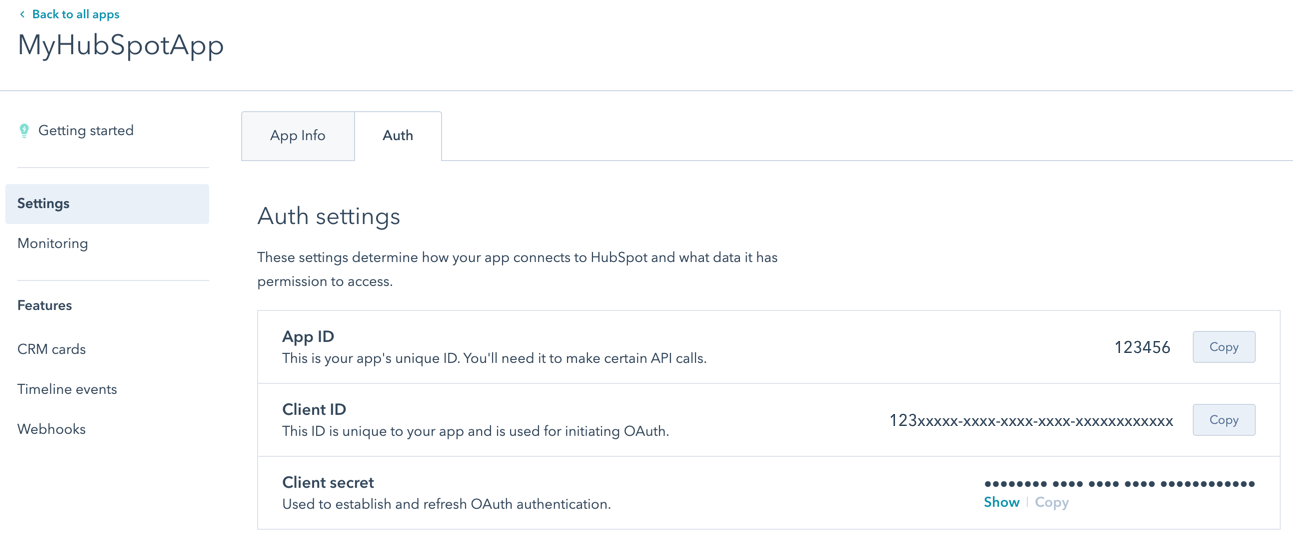

Step 2.2: Choose the authentication method and create an access token.

- The HubSpot API requires authentication before you can access it. You can choose to use OAuth 2.0 or a private app access token.

- OAuth 2.0 is more secure, but it is also more complex to set up. A private app access token is less secure, but it is easier to set up.

To create an access token:

- Go to the Settings tab on the HubSpot Developer website.

- Click on the API tab.

- If you use a private app access token, click Create a private app access token.

- If you choose to use OAuth 2.0, follow the instructions on the HubSpot Developer website to generate an access token.

Once you have created an access token, make sure you save it at a secure location.
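
One common way to keep the token out of your source code is to read it from an environment variable. The sketch below assumes a variable named `HUBSPOT_ACCESS_TOKEN`; that name and both helper functions are illustrative and not part of any HubSpot library. HubSpot expects the token to be sent as a bearer token in the `Authorization` header.

```python
import os


def load_access_token(env=None, var_name="HUBSPOT_ACCESS_TOKEN"):
    """Return the token stored in an environment variable (the name is an assumption)."""
    env = os.environ if env is None else env
    token = env.get(var_name, "").strip()
    if not token:
        raise RuntimeError(f"Set {var_name} before running the migration")
    return token


def auth_headers(token):
    """Build the HTTP headers for a bearer-token request."""
    return {"Authorization": f"Bearer {token}", "Content-Type": "application/json"}
```

Failing early when the variable is missing keeps a misconfigured job from sending unauthenticated requests.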

Step 2.3: Install the HubSpot API Client Library

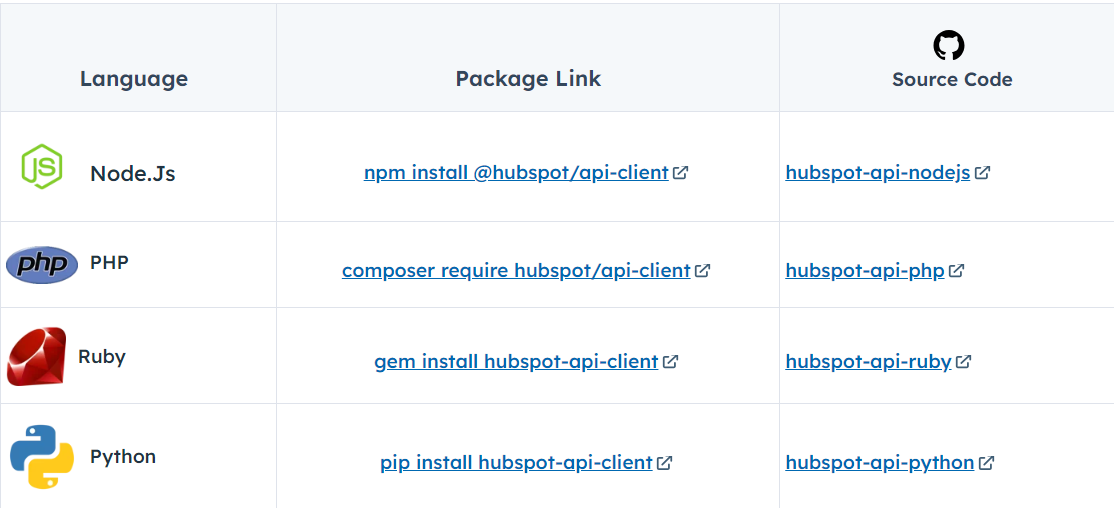

- Find the client library for your programming language on the HubSpot Developer website. The site lists client libraries for a variety of programming languages, including Python, Java, and JavaScript.

- Install the client library according to the instructions on the HubSpot Developer website.
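
After installation, a quick check can confirm that the modules the migration script imports are available. This sketch assumes the Python client was installed from PyPI as `hubspot-api-client` (imported as `hubspot`) together with the `psycopg2` PostgreSQL driver; `missing_modules` is a hypothetical helper name.

```python
import importlib.util


def missing_modules(module_names):
    """Return the names from module_names that cannot be imported."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(["hubspot", "psycopg2"])
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are installed")
```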

Step 2.4: Create a Staging Table in Your Amazon Aurora Database

The staging table is a temporary table that you can use to store your migrated data before it is loaded into your production database. The staging table should have the same schema as the production database table that you are migrating data to.

To create a staging table:

- Connect to your Amazon Aurora database with a SQL client. You can look up the cluster endpoint in the AWS Management Console or with the AWS CLI.

- Run the SQL command to create the staging table.

Here’s an example:

    CREATE TABLE staging_table (
        name VARCHAR(255),
        email VARCHAR(255),
        phone VARCHAR(255),
        deal_name VARCHAR(255),
        deal_stage VARCHAR(255),
        deal_value VARCHAR(255)
    );
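
To see why a staging table is useful, the pattern can be tried locally before touching Aurora. The sketch below uses Python's built-in `sqlite3` module as a stand-in for the database, a simplified three-column version of the tables, and an assumed validation rule (rows without an email are not promoted). The `promote_staged_rows` helper and the sample rows are illustrative only.

```python
import sqlite3

COLUMNS = "name VARCHAR(255), email VARCHAR(255), phone VARCHAR(255)"


def promote_staged_rows(conn):
    """Copy staged rows that have an email into production, then clear staging.

    Returns the number of rows now in production_table.
    """
    conn.execute(
        "INSERT INTO production_table (name, email, phone) "
        "SELECT name, email, phone FROM staging_table WHERE email IS NOT NULL"
    )
    conn.execute("DELETE FROM staging_table")
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM production_table").fetchone()[0]


# sqlite3 stands in for Aurora so the sketch runs without a cluster.
conn = sqlite3.connect(":memory:")
conn.execute(f"CREATE TABLE staging_table ({COLUMNS})")
conn.execute(f"CREATE TABLE production_table ({COLUMNS})")
conn.executemany(
    "INSERT INTO staging_table (name, email, phone) VALUES (?, ?, ?)",
    [("Ada Lovelace", "ada@example.com", "555-0100"), ("No Email", None, "555-0101")],
)
promoted = promote_staged_rows(conn)
```

Because bad rows are filtered while they are still in staging, the production table never sees them.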

Step 2.5: Write a Script to Retrieve the Data From HubSpot and Load It into the Staging Table

Here is an example of a script that you can use to migrate contacts and deals from HubSpot to Amazon Aurora:

    import hubspot
    import psycopg2


    def migrate_data():
        """Migrates contacts and deals from HubSpot to Amazon Aurora (PostgreSQL-compatible)."""
        # Create a HubSpot API client (from the hubspot-api-client package).
        client = hubspot.Client.create(access_token="my_access_token")

        # Connect to the Aurora database. Replace the placeholders with your
        # cluster endpoint and credentials.
        conn = psycopg2.connect("host=localhost dbname=mydb user=myuser password=mypassword")
        cursor = conn.cursor()

        # Retrieve the data from HubSpot, requesting the properties the staging table needs.
        contacts = client.crm.contacts.get_all(properties=["firstname", "lastname", "email", "phone"])
        deals = client.crm.deals.get_all(properties=["dealname", "dealstage", "amount"])

        # Load the data into the staging table.
        for contact in contacts:
            props = contact.properties
            name = f"{props.get('firstname') or ''} {props.get('lastname') or ''}".strip()
            cursor.execute(
                "INSERT INTO staging_table (name, email, phone) VALUES (%s, %s, %s)",
                (name, props.get("email"), props.get("phone")),
            )
        for deal in deals:
            props = deal.properties
            cursor.execute(
                "INSERT INTO staging_table (deal_name, deal_stage, deal_value) VALUES (%s, %s, %s)",
                (props.get("dealname"), props.get("dealstage"), props.get("amount")),
            )

        # Commit the staged rows.
        conn.commit()

        # Load the data from the staging table into your production table.
        # Contact rows have no deal_name; deal rows do.
        cursor.execute("INSERT INTO production_table (name, email, phone) SELECT name, email, phone FROM staging_table WHERE deal_name IS NULL;")
        cursor.execute("INSERT INTO production_table (deal_name, deal_stage, deal_value) SELECT deal_name, deal_stage, deal_value FROM staging_table WHERE deal_name IS NOT NULL;")
        conn.commit()

        cursor.close()
        conn.close()
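
The mapping from HubSpot records to staging rows is easier to test when it lives in small, pure functions. The sketch below assumes each record exposes a dictionary of properties keyed by HubSpot's default property names (`firstname`, `lastname`, `email`, `phone`, `dealname`, `dealstage`, `amount`). The helper names `contact_to_row` and `deal_to_row` are hypothetical.

```python
def contact_to_row(properties):
    """Map a contact's properties dict to a (name, email, phone) tuple."""
    first = properties.get("firstname") or ""
    last = properties.get("lastname") or ""
    name = f"{first} {last}".strip() or None
    return (name, properties.get("email"), properties.get("phone"))


def deal_to_row(properties):
    """Map a deal's properties dict to a (deal_name, deal_stage, deal_value) tuple."""
    return (properties.get("dealname"), properties.get("dealstage"), properties.get("amount"))


sample_contact = {"firstname": "Ada", "lastname": "Lovelace", "email": "ada@example.com"}
sample_deal = {"dealname": "Renewal", "dealstage": "closedwon", "amount": "1200"}
contact_row = contact_to_row(sample_contact)
deal_row = deal_to_row(sample_deal)
```

Missing properties become `None`, which the database driver stores as NULL instead of raising a `KeyError` partway through the load.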

Advantages of Connecting HubSpot to Amazon Aurora Manually

- Small data: If your data requirement from HubSpot is small, you can use this method to migrate data from HubSpot to Amazon Aurora one record at a time.

- More control over the migration process: This method will allow you more control in customizing the migration process to meet your specific needs.

Limitations of Connecting HubSpot to Amazon Aurora Manually

- When you need to migrate a large amount of data on a regular basis, this method is not scalable as it’s time-consuming, error-prone, and requires specialized skills.

- Reloading every record on each run does not scale. Instead, identify key fields that your script can use to bookmark its progress through the data and return to as it searches for updated data. Timestamp fields that only move forward, like updated_at or created_at, work best for this.

- As with any code, you must maintain it once you’ve written it. You may need to change the script if HubSpot changes its API or if the API sends a field with a datatype your code doesn’t recognize. You will also need to change it whenever your users require slightly different information.
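
The bookmarking idea from the list above can be sketched in a few lines. This example assumes each record carries an ISO 8601 `updated_at` string; the field name, the sample records, and the `records_since` helper are illustrative and not taken from the HubSpot API.

```python
from datetime import datetime, timezone


def parse_timestamp(value):
    """Parse an ISO 8601 timestamp such as '2024-01-02T03:04:05Z' into an aware datetime."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def records_since(records, bookmark):
    """Return the records updated after bookmark, plus the new bookmark value."""
    fresh = [r for r in records if parse_timestamp(r["updated_at"]) > bookmark]
    new_bookmark = max((parse_timestamp(r["updated_at"]) for r in fresh), default=bookmark)
    return fresh, new_bookmark


records = [
    {"id": "1", "updated_at": "2024-01-01T00:00:00Z"},
    {"id": "2", "updated_at": "2024-03-01T12:30:00Z"},
]
bookmark = datetime(2024, 2, 1, tzinfo=timezone.utc)
fresh, bookmark = records_since(records, bookmark)
```

Persisting the returned bookmark between runs (for example, in a small table in Aurora) lets the next run pick up where the previous one stopped.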

What Can You Achieve by Migrating Your Data From HubSpot to Amazon Aurora?

By replicating data from HubSpot to Amazon Aurora, you can gain insights into your business that you may not be able to see with just HubSpot’s native reporting tools. Here are five questions that you can answer by replicating data from HubSpot to Amazon Aurora:

- What are the top sources of leads for my business?

- What are the most popular pages on my website?

- What are my most effective marketing campaigns?

- What are my most profitable customers?

- What are the trends in my industry?
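
Once the data is in a SQL database, the first question above becomes a simple aggregate query. The sketch below runs against an in-memory `sqlite3` database so it works without a cluster. The `contacts` table, its `lead_source` column, and the sample rows are hypothetical; your replicated schema will differ.

```python
import sqlite3


def top_lead_sources(conn, limit=3):
    """Return (lead_source, contact_count) pairs, most common first."""
    return conn.execute(
        "SELECT lead_source, COUNT(*) AS contact_count "
        "FROM contacts GROUP BY lead_source "
        "ORDER BY contact_count DESC, lead_source ASC LIMIT ?",
        (limit,),
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE contacts (email TEXT, lead_source TEXT)")
conn.executemany(
    "INSERT INTO contacts VALUES (?, ?)",
    [
        ("a@example.com", "organic_search"),
        ("b@example.com", "paid_social"),
        ("c@example.com", "organic_search"),
    ],
)
result = top_lead_sources(conn)
```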

Final Thoughts

- There are two ways to migrate data from HubSpot to Amazon Aurora: using HubSpot APIs or a third-party ETL tool.

- Using HubSpot APIs involves creating a HubSpot developer account, getting an access token, installing the HubSpot API Client Library, creating a staging table, writing a script, and loading the data. This method can be a great option if your data requirements are small and infrequent.

- But what if you need to migrate large amounts of data on a regular basis? Will you still be dependent on the manual and laborious tasks of exporting data from different sources?

- In that case, you can free yourself from the hassle of writing code and manual tasks by opting for an automated ETL Tool like Hevo Data.

A custom ETL solution becomes necessary for real-time data demands such as monitoring email campaign performance or viewing the sales funnel. You can free your engineering bandwidth from these repetitive & resource-intensive tasks by selecting Hevo Data’s 150+ plug-and-play integrations (including 60+ accessible sources).

Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

FAQ

1. How do I connect HubSpot to AWS?

To connect HubSpot to AWS, you can use third-party tools like Fivetran or Hevo to integrate HubSpot with AWS services such as Amazon Redshift or S3, enabling seamless data transfer.

2. What two databases is Amazon Aurora compatible with?

Amazon Aurora is compatible with MySQL and PostgreSQL, offering enhanced performance for both database engines.

3. Is Amazon Aurora more expensive?

Amazon Aurora can be more expensive than standard MySQL or PostgreSQL due to its high-availability and performance features, though it’s optimized for better cost-performance efficiency at scale.