Apache Airflow is a powerful tool for managing and automating workflows, and it can be installed on multiple operating systems. It is one of the most trusted and widely used workflow orchestration platforms among data engineers.

Apache Airflow provides many features, including detailed visualizations of data pipelines and workflows, workflow status, task logs, and the code behind each pipeline.

This article provides a clear, step-by-step guide to installing Airflow on your system, whichever platform you use.

What is Apache Airflow?

Apache Airflow is a workflow engine for scheduling and running complex data pipelines. It ensures that every step of a pipeline runs in its predefined order and that each task gets the resources it requires.
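To make "predefined order" concrete, here is a minimal plain-Python sketch of the ordering rule. It does not use Airflow's own API: the task names are hypothetical, and the standard-library `graphlib` module (Python 3.9+) stands in for Airflow's scheduler. Each task lists the tasks it depends on, and the sorter returns an order in which no task runs before its upstream tasks.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline (not Airflow's API): each task maps to the set of
# tasks that must finish before it can start.
PIPELINE = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"transform"},
    "load": {"transform", "validate"},
}


def execution_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return an order in which every task comes after all of its upstream tasks."""
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    print(execution_order(PIPELINE))
```

For this strictly chained pipeline, the only valid order is extract, transform, validate, load. Airflow's scheduler also handles schedules, retries, and task state, which this sketch leaves out.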

How to Install Airflow?

- Installing Airflow can be challenging, but because it is mostly a one-time process, the benefits it provides can outweigh the effort.

Method 1: Installing Airflow on Linux

Installing Airflow on Linux takes four steps:

- Step 1.1: Installing Ubuntu

- Step 1.2: Installing pip

- Step 1.3: Install Airflow Dependencies

- Step 1.4: Install Airflow

As you learn about Airflow, it is also worth knowing the best platforms for data integration. Hevo Data, a no-code data pipeline platform, helps you replicate data from any data source, such as databases, SaaS applications, cloud storage, SDKs, and streaming services, and simplifies the ETL process.

It supports 150+ data sources (including 60+ free data sources), such as Asana, and setup is an easy three-step process. With Hevo's transformation feature, you can modify your data and make it analysis-ready.

Check out some of the cool features of Hevo:

- Completely Automated: Set up in minutes with minimal maintenance.

- Real-Time Data Transfer: Get analysis-ready data with zero delays.

- 24/5 Live Support: Round-the-clock support via chat, email, and calls.

- Schema Management: Automatic schema detection and mapping.

- Live Monitoring: Track data flow and status in real-time.

Let's walk through each installation step in detail.

Step 1.1: Installing Ubuntu

Ubuntu is a Linux distribution that gives you fine-grained control over the system. On Windows, you can run it through the Windows Subsystem for Linux (WSL), as the following steps show.

- Before installing Ubuntu, turn on Developer Mode in the Windows settings.

- Enable the Windows Subsystem for Linux option in Windows Features.

- Download the Visual C++ module.

- Install Ubuntu from the Microsoft Store (or from an ISO file) and start the installation.

- A terminal opens and prompts you for a username and password. The terminal does not display the password as you type it.

Installing, this may take a few minutes...

Please create a default UNIX user account. The username does not need to match your Windows username.

For more information visit: https://aka.ms/wslusers

Enter new UNIX username: bull87

- If you closed the terminal after the last step, reopen it and run the bash command. It starts a Bash shell, which you use to run commands on the system.

C:\Users\jacks>bash

To run a command as administrator (user "root"), use "sudo <command>".

See "man sudo_root" for details.

bull87@DESKTOP-G50VTBF:/mnt/c/Users/jacks

Step 1.2: Installing pip

pip is Python's package installer: it installs and manages packages written in and for Python. You need it to download and install Apache Airflow. Run the following commands to install it:

sudo apt-get install software-properties-common

sudo apt-add-repository universe

sudo apt-get update

sudo apt-get install python3-setuptools

sudo apt install python3-pip

sudo -H pip3 install --upgrade pip

Step 1.3: Install Airflow Dependencies

For Airflow to work properly, you need to install its system dependencies. Without them, Airflow cannot function to its full potential: some features may be missing, and you may run into errors. Run the following commands to install them:

sudo apt-get install libmysqlclient-dev

sudo apt-get install libssl-dev

sudo apt-get install libkrb5-dev

Airflow uses SQLite as its default database.

Step 1.4: Install Airflow

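Finally, run the following commands to install Airflow on your system. On recent Ubuntu releases, pip may refuse to install packages system-wide; if it does, create and activate a Python virtual environment first and run the pip commands inside it.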

export AIRFLOW_HOME=~/airflow

pip3 install apache-airflow

pip3 install typing_extensions

# initialize the metadata database (Airflow 2.7 and later; Airflow 1.x used "airflow initdb")

airflow db migrate

# create an admin account for the web UI (Airflow 2.x)

airflow users create --role Admin --username admin --email admin@example.com --firstname admin --lastname admin --password admin

# start the web server; the default port is 8080 (Airflow 3.x replaces this command with "airflow api-server")

airflow webserver -p 8080

# start the scheduler; I recommend running it in a separate terminal window

airflow scheduler

# visit localhost:8080 in the browser and enable the example DAG on the home page

Once these commands have run, Airflow is installed on your system and ready to use.
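To confirm the installation from a script instead of the browser, you can query the webserver's health endpoint. The sketch below assumes an Airflow 2.x webserver on localhost:8080, which serves a JSON health report at `/health`; Airflow 3.x serves health information at a different path. The helper names `fetch_health` and `is_healthy` are hypothetical.

```python
import json
from urllib.request import urlopen

# Assumption: an Airflow 2.x webserver listening on the default port 8080.
HEALTH_URL = "http://localhost:8080/health"


def is_healthy(report: dict) -> bool:
    """Return True if both the metadata database and the scheduler report 'healthy'."""
    components = ("metadatabase", "scheduler")
    return all(report.get(name, {}).get("status") == "healthy" for name in components)


def fetch_health(url: str = HEALTH_URL, timeout: float = 5.0) -> dict:
    """Download and decode the JSON health report from the webserver."""
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    try:
        report = fetch_health()
    except (OSError, ValueError) as exc:
        # OSError covers URLError, refused connections, and timeouts;
        # ValueError covers a response that is not valid JSON.
        print(f"Airflow webserver not reachable: {exc}")
    else:
        print("healthy" if is_healthy(report) else f"unhealthy: {report}")
```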

Method 2: Installing Airflow on a Windows PC

In this method, you install Airflow on a Windows PC using Docker. You need the following prerequisites:

- Docker Desktop

- Visual Studio Code

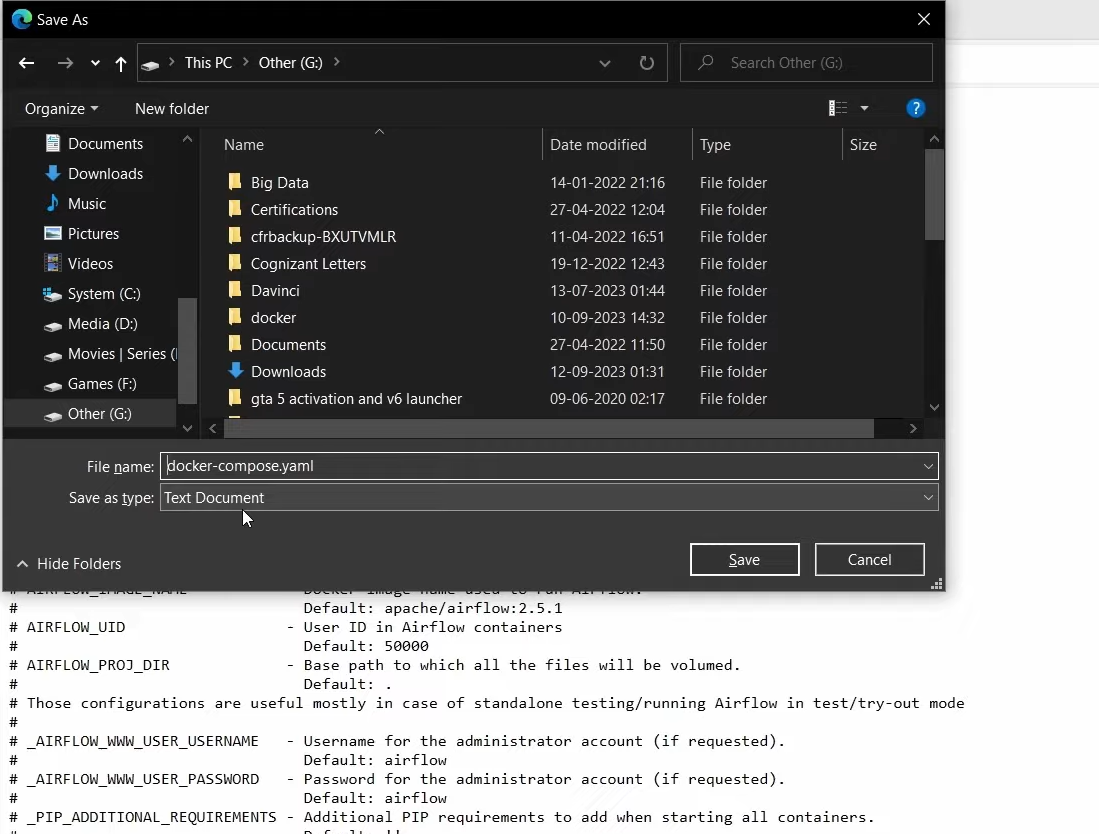

Step 1 – Save Airflow's docker-compose .yaml file in a separate folder. Docker Compose uses this file to start Apache Airflow.

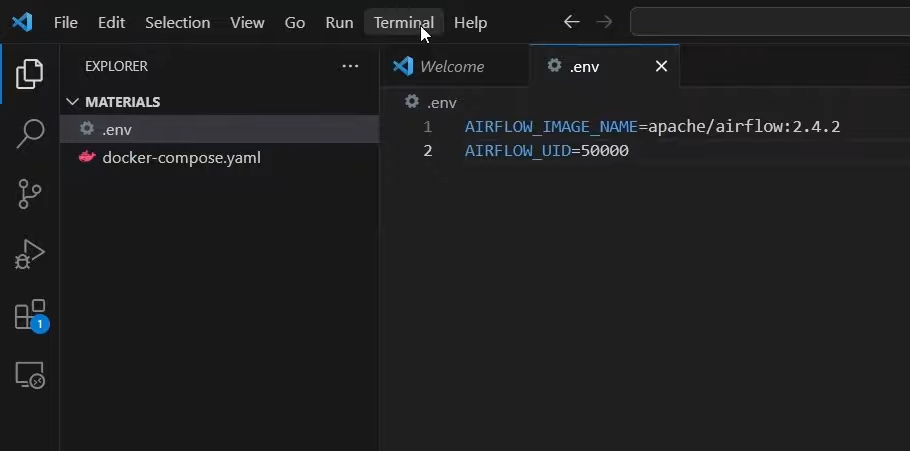

Step 2 – Create a .env file to define configuration variables. Open Visual Studio Code, open the folder that contains your .yaml file, and create a new file named .env in it. Define AIRFLOW_IMAGE_NAME and AIRFLOW_UID in the file, then save it.
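If you would rather generate the .env file from a script than type it by hand, the sketch below writes both variables. It assumes the variable names used by Airflow's official docker-compose file, `AIRFLOW_IMAGE_NAME` and `AIRFLOW_UID`. The image tag is an example value, and 50000 is the UID that the official compose file falls back to; replace both as needed. The helpers `render_env` and `write_env` are hypothetical.

```python
from pathlib import Path

# Example values (assumptions): choose the Airflow image tag you want to run.
# 50000 is the UID that Airflow's official docker-compose file falls back to.
DEFAULT_IMAGE = "apache/airflow:2.9.3"
DEFAULT_UID = 50000


def render_env(image: str = DEFAULT_IMAGE, uid: int = DEFAULT_UID) -> str:
    """Return the contents of a .env file for Airflow's docker-compose setup."""
    return f"AIRFLOW_IMAGE_NAME={image}\nAIRFLOW_UID={uid}\n"


def write_env(folder: Path, image: str = DEFAULT_IMAGE, uid: int = DEFAULT_UID) -> Path:
    """Write .env into `folder` (next to docker-compose.yaml) and return its path."""
    env_path = folder / ".env"
    # Write bytes so Windows does not convert the line endings to CRLF.
    env_path.write_bytes(render_env(image, uid).encode("utf-8"))
    return env_path


if __name__ == "__main__":
    # Preview the file contents; call write_env(Path("your-folder")) to save it.
    print(render_env())
```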

Step 3 – Start the services defined in the docker-compose file. To do so, open a new terminal and run the following command:

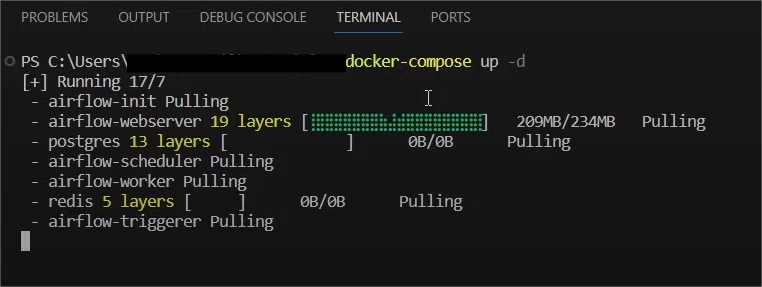

docker-compose up -d

Step 4 – When you run the command, Docker starts pulling the required images and starting the Airflow services.

Step 5 – The following output shows that Apache Airflow has been installed successfully.

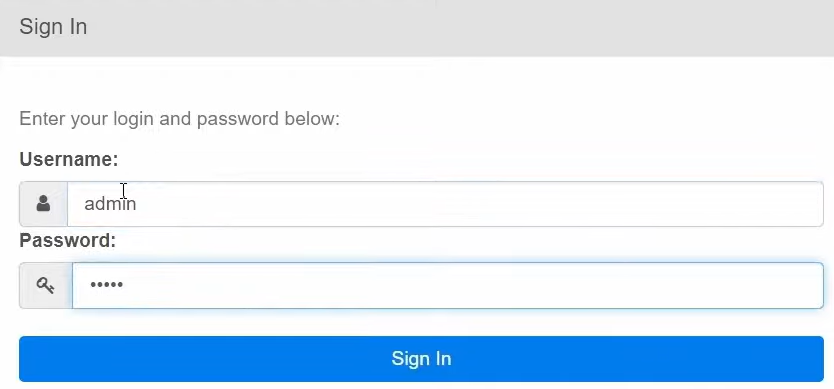

Step 6 – Go to localhost:8080 to access the Airflow login page. To create an admin username and password, run the following command in the Visual Studio Code terminal:

docker-compose run airflow-worker airflow users create --role Admin --username admin --email admin@example.com --firstname admin --lastname admin --password admin

Step 7 – Now enter these credentials to log in to Apache Airflow.

You have now set up Apache Airflow on a Windows PC.

Other Methods to Install Airflow

Using Released Sources

- This is suitable if you want to build the software from source and verify its integrity.

- You have to build, install, configure, and manage all components of Airflow yourself.

Using PyPI

- This is useful when you want to install Airflow on a physical or virtual machine and are not familiar with Docker and containers.

- Installation is officially supported only with pip, using Airflow's constraint mechanism.

- Use this method if you are comfortable with Python and with running your own custom deployment mechanisms.
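The constraint mechanism pins every Airflow dependency to versions tested with a specific release. Airflow publishes one constraint file per Airflow version and Python version; the sketch below builds the constraint-file URL following the pattern in Airflow's installation documentation and prints the matching pip command. The Airflow version shown is an example value, and the helper names are hypothetical.

```python
import sys

# Example value (assumption): the Airflow release you want to install.
AIRFLOW_VERSION = "2.9.3"

CONSTRAINT_TEMPLATE = (
    "https://raw.githubusercontent.com/apache/airflow/"
    "constraints-{airflow}/constraints-{python}.txt"
)


def constraint_url(airflow_version: str, python_version: str) -> str:
    """Build the constraint-file URL for an Airflow/Python version pair."""
    return CONSTRAINT_TEMPLATE.format(airflow=airflow_version, python=python_version)


def pip_command(airflow_version: str, python_version: str) -> str:
    """Return a pip command that installs Airflow pinned by its constraint file."""
    url = constraint_url(airflow_version, python_version)
    return f'pip install "apache-airflow=={airflow_version}" --constraint "{url}"'


if __name__ == "__main__":
    # Use the major.minor version of the interpreter running this script.
    current_python = f"{sys.version_info.major}.{sys.version_info.minor}"
    print(pip_command(AIRFLOW_VERSION, current_python))
```

Run the printed command inside a virtual environment. Constraint files exist only for the Python versions that the chosen Airflow release supports, so the download fails if your interpreter is not one of them.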

Using Production Docker Images

- This is useful if you are familiar with the Docker stack and know how to build container images.

- You should understand how to install providers and dependencies from PyPI.

- You should know how to create Docker deployments and link multiple containers together.

Using Official Airflow Helm Chart

- This is helpful if you know how to manage infrastructure using Kubernetes and applications on Kubernetes using Helm Charts.

Using Managed Airflow Services

- This is suitable when you want someone else to manage your Airflow installation and are willing to pay for it.

Using Third-Party Images, Charts, and Deployments

- Consider this if you have tried the other installation methods and found them insufficient.

Overview of the Apache Airflow Platform

- Apache Airflow is an open-source platform for scheduling, executing, and monitoring complex workflows, which makes it useful for designing workflow architectures.

- Airflow is one of the most powerful open-source data pipeline platforms on the market. It uses DAGs (directed acyclic graphs) to structure and represent workflows: each node of a DAG is a task, and each edge is a dependency between two tasks.

- Airflow is designed around the idea that every data pipeline can be expressed as code. This code-first approach lets teams iterate on their workflows quickly.
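The "acyclic" part of the name matters: if task A depends on B and B depends on A, no valid execution order exists, and Airflow refuses to load such a DAG. The following plain-Python sketch (not Airflow's API; the task names are hypothetical) uses the standard-library `graphlib` module to detect such a cycle before anything runs.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Optional


def find_cycle(dependencies: dict[str, set[str]]) -> Optional[list[str]]:
    """Return the tasks forming a cycle, or None if the graph is a valid DAG."""
    try:
        TopologicalSorter(dependencies).prepare()
    except CycleError as err:
        # graphlib stores the detected cycle as the second exception argument;
        # its first and last entries are the same task.
        return err.args[1]
    return None


if __name__ == "__main__":
    valid = {"extract": set(), "load": {"extract"}}
    cyclic = {"extract": {"load"}, "load": {"extract"}}
    print(find_cycle(valid))  # None
    print(find_cycle(cyclic))  # prints the tasks forming the cycle
```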

Features of Apache Airflow

- Ease of use: Deploying Airflow requires only basic knowledge of Python.

- Open Source: It is free to use and open source, which has helped it attract a large, active user community.

- Good Integrations: It has ready-made integrations for platforms such as Google Cloud, Amazon Web Services (AWS), and many more.

- Standard Python for coding: Workflows are written in standard Python, so relatively basic Python knowledge is enough to build complex workflows.

- User Interface: Airflow's UI helps you monitor and manage workflows and shows the status of each task.

- Dynamic: Because pipelines are defined in Python, you can use anything Python offers, such as loops that generate tasks, when building them.

- Highly Scalable: Airflow allows the execution of thousands of different tasks per day.

Final Thoughts

- Airflow is one of the most powerful workflow management tools on the market. Used well, it helps companies run their data workflows reliably and on schedule.

- This article introduced Airflow and walked through installing it step by step. Because it is open source, Airflow is trusted and used by many companies.

- However, creating, deploying, and monitoring pipelines in Airflow can be difficult, because it is a code-first platform that requires considerable expertise to run well. A platform that builds data pipelines without any code can solve this issue.

Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

Frequently Asked Questions

1. How can I install Airflow?

You can install Airflow by running `pip install apache-airflow` in your terminal, ideally together with Airflow's constraint file for your Airflow and Python versions. Set up a virtual environment first.

2. How do you install an Airflow worker?

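To set up an Airflow worker, install Airflow with the Celery extra by running `pip install 'apache-airflow[celery]'`, set the executor to `CeleryExecutor` in your Airflow configuration, and start the worker with `airflow celery worker`.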

3. Is Airflow an ETL tool?

Yes, Airflow can be used as an ETL tool to orchestrate and automate data extraction, transformation, and loading workflows.