You live in a digital era, surrounded by data. Every online marketing or advertising agency generates a huge amount of data daily. This data holds information about potential customers, sales, trends, markets, products, and more. But it is not easy to study and analyze this data on its own.

You need to transfer app data to a database where it can be analyzed and visualized to reach future targets. Thorough, accurate data analysis and visualization point businesses in the right direction and help increase ROI (Return on Investment).

In this article, you will learn two methods to integrate one such app, Outbrain, with PostgreSQL. With a correct integration, data can be loaded into the database easily and analyzed further for the benefit of the organization.

What is PostgreSQL?

PostgreSQL is an open-source object-relational database system known for reliability, feature robustness, data integrity, and high performance. It was originally named Postgres and was later renamed PostgreSQL to reflect its support for SQL. It scales from single machines with a few concurrent users to data warehouses and web services. PostgreSQL runs on all major operating systems, including Windows, Linux, FreeBSD, and OpenBSD, and it has shipped as the default database of macOS Server.

PostgreSQL helps developers build complex applications, helps administrators run their tasks, and supports environments that protect data integrity. Many web applications, as well as mobile and analytics apps, use PostgreSQL as their primary database.

PostgreSQL was designed to be extensible. You can define your own data types, index types, procedural languages, and other features. If you don’t like something about the system, you can write a custom plugin to improve it, for example by adding a new optimizer.

Key Features Of PostgreSQL

These features make PostgreSQL stand out and drive its high demand. Some important features of PostgreSQL are:

- Data Integrity: PostgreSQL transactions follow the ACID properties (Atomicity, Consistency, Isolation, and Durability), which keep the database consistent across every read and write operation and maintain rock-solid transactional integrity.

- High Performance: PostgreSQL is a fast, high-performing database. Advanced indexing (including B-tree indexes), query planning and optimization, nested transactions, Multi-Version Concurrency Control (MVCC), parallelization, and table partitioning all contribute to its performance.

- Security: PostgreSQL is a secure database that supports GSSAPI, SSPI, LDAP, SCRAM-SHA-256, certificate-based, and other authentication methods. It offers a robust access control system, row- and column-level security, and multi-factor authentication with certificates.

Why Integrate Outbrain to PostgreSQL?

Marketers run ad campaigns on Outbrain and analyze the data those campaigns generate. Transferring that data to a reliable database such as PostgreSQL makes it available for querying and visualization; without analysis and visualization, the data goes to waste. Integrating Outbrain with PostgreSQL gives marketers a platform to analyze ad campaign data and make decisions that increase sales and ROI (Return on Investment).

Methods to Integrate Outbrain to PostgreSQL

Method 1: Using Hevo to Set Up Outbrain to PostgreSQL

Hevo Data provides an automated, no-code solution for Outbrain to PostgreSQL integration. It migrates data effortlessly and enriches and transforms it into an analysis-ready form without any coding required.

Method 2: Using Custom Code to Move Data from Outbrain to PostgreSQL

Under this method, you’ll use the RESTful Amplify API and SQL to extract and load data. Manually loading data into PostgreSQL is straightforward, but it requires practical expertise and technical knowledge.

Method 1: Using Hevo to Set Up Outbrain to PostgreSQL

Hevo provides an automated no-code data pipeline that helps you move your Outbrain data to PostgreSQL. Hevo is fully managed and automates not only loading data from 150+ data sources (including 60+ free sources) but also enriching the data and transforming it into an analysis-ready form, without you having to write a single line of code. Its fault-tolerant architecture ensures that the data is handled securely and consistently with zero data loss.

Using Hevo, you can connect Outbrain to PostgreSQL in the following 2 steps:

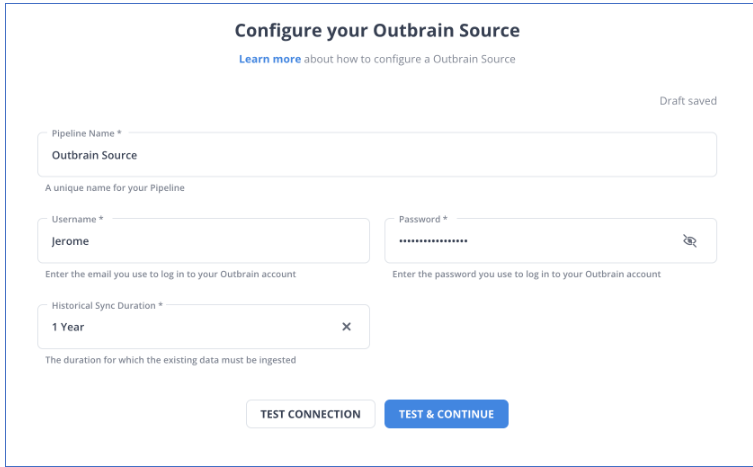

- Step 1: Perform the following steps to configure Outbrain as the Source in your Pipeline.

- Step 1.1: In the Asset Palette, click PIPELINES.

- Step 1.2: In the Pipelines List View, click the +CREATE button.

- Step 1.3: In the Select Source Type page, select Outbrain.

- Step 1.4: In the Configure your Outbrain Source page, specify the fields mentioned below:

- Pipeline Name: Give a unique name for the Pipeline, not exceeding 255 characters.

- Username: The username from your Outbrain access credentials.

- Password: The password for the above Outbrain username.

- Historical Sync Duration: The duration for which past data must be ingested.

- Step 1.5: Click on the TEST & CONTINUE button.

- Step 1.6: Proceed to configure the data ingestion and the Destination as PostgreSQL.

- Step 2: After configuring the Source, configure PostgreSQL as the Destination by following the steps below.

- Step 2.1: After configuring the Source during Pipeline creation, click ADD DESTINATION. Alternatively, click DESTINATIONS in the Asset Palette, and then click +CREATE in the Destinations List View.

- Step 2.2: Select PostgreSQL on the Add Destination page.

- Step 2.3: Configure PostgreSQL connection settings as mentioned below:

- Destination Name: Give a unique name for your Destination.

- Database Host: Use the PostgreSQL host’s IP address or DNS as the database host.

- Database Port: The port number on which your PostgreSQL server listens for connections. Default value: 5432.

- Database User: A user with a non-administrative role in the PostgreSQL database.

- Database Password: The password of the PostgreSQL database user.

- Database Name: The name of the Destination PostgreSQL database to which the data is loaded.

- Database Schema: The name of the Destination PostgreSQL database schema. Default value: public.

- Additional Settings: Choose advanced settings as needed.

- Step 2.4: After configuring the PostgreSQL settings, click on TEST CONNECTION to test connectivity to the Destination Postgres server.

- Step 2.5: Once the test connection is successful, save the connection by clicking on SAVE & CONTINUE.

Method 2: Using Custom Code to Move Data from Outbrain to PostgreSQL

Follow the steps below to manually integrate Outbrain to PostgreSQL:

Step 1: Extract Data from Outbrain

The first step in the Outbrain to PostgreSQL integration is to extract the data from Outbrain. Outbrain lets you extract marketer, campaign, or performance data through its RESTful Amplify API. For example, a GET /reports/marketers/[id]/content call requests performance data for the content of the marketer identified by [id].

In the API call, you can specify which performance metrics you need. There are many options to choose from.
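As a rough illustration of such a call, the sketch below builds the GET request with Python's standard library. The base URL, the `OB-TOKEN-V1` header name, and the `from`, `to`, `limit`, and `offset` query parameters are assumptions that should be checked against the current Amplify API documentation; the function names are hypothetical, and the token is assumed to have been obtained beforehand through the API's login call.

```python
import json
import urllib.parse
import urllib.request

# Assumed values, not taken from this article: verify against the Amplify API docs.
BASE_URL = "https://api.outbrain.com/amplify/v0.1"
TOKEN_HEADER = "OB-TOKEN-V1"


def build_content_report_request(token, marketer_id, date_from, date_to,
                                 limit=50, offset=0):
    """Build (but do not send) a GET /reports/marketers/[id]/content request."""
    query = urllib.parse.urlencode(
        {"from": date_from, "to": date_to, "limit": limit, "offset": offset}
    )
    marketer = urllib.parse.quote(marketer_id, safe="")
    url = f"{BASE_URL}/reports/marketers/{marketer}/content?{query}"
    return urllib.request.Request(url, headers={TOKEN_HEADER: token})


def fetch_json(request, timeout=30):
    """Send the request and decode the JSON body of the response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Keeping request construction separate from sending makes it easy to inspect the URL before any network traffic happens, and to page through large reports by increasing `offset`.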

Step 2: Sample Data Response

Below is an example of a JSON response from an API query for Outbrain marketer performance data:

```json
{
  "results": [
    {
      "metadata": {
        "id": "00f4b02153ee75f3c9dc4fc128ab041962",
        "text": "Yet another promoted link",
        "creationTime": "2017-11-26",
        "lastModified": "2017-11-26",
        "url": "http://money.outbrain.com/2017/11/26/news/economy/crash-disaster/",
        "status": "APPROVED",
        "enabled": true,
        "cachedImageUrl": "http://images.outbrain.com/imageserver/v2/s/gtE/n/plcyz/abc/iGYzT/plcyz-f8A-158x114.jpg",
        "campaignId": "abf4b02153ee75f3cadc4fc128ab0419ab",
        "campaignName": "Boost 'ABC' Brand",
        "archived": false,
        "documentLanguage": "EN",
        "sectionName": "Economics"
      },
      "metrics": {
        "impressions": 18479333,
        "clicks": 58659,
        "conversions": 12,
        "spend": 9187.16,
        "ecpc": 0.16,
        "ctr": 0.32,
        "conversionRate": 0.02,
        "cpa": 765.6
      }
    }
  ],
  "totalResults": 27830,
  "summary": {
    "impressions": 1177363701,
    "clicks": 2615150,
    "conversions": 2155,
    "spend": 455013.97,
    "ecpc": 0.17,
    "ctr": 0.22,
    "conversionRate": 0.08,
    "cpa": 211.14
  },
  "totalFilteredResults": 1,
  "summaryFiltered": {
    "impressions": 18479333,
    "clicks": 58659,
    "conversions": 12,
    "spend": 9187.16,
    "ecpc": 0.16,
    "ctr": 0.32,
    "conversionRate": 0.02,
    "cpa": 765.6
  }
}
```

Step 3: Data Preparation

Extracted data needs to be prepared before loading. Create a data schema if one does not already exist. You need a data structure that holds the extracted data in a form that can be uploaded to PostgreSQL easily.
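One possible way to prepare the data is to flatten each entry of the `results` array into a single flat record, combining fields from `metadata` and `metrics`. The sketch below assumes the response shape shown in the sample; the function name and the snake_case output keys are hypothetical choices, not something Outbrain or PostgreSQL prescribes.

```python
def flatten_results(payload):
    """Turn the nested API response into a list of flat dictionaries, one per content item."""
    rows = []
    for item in payload.get("results", []):
        metadata = item.get("metadata", {})
        metrics = item.get("metrics", {})
        rows.append({
            "content_id": metadata.get("id"),
            "campaign_id": metadata.get("campaignId"),
            "campaign_name": metadata.get("campaignName"),
            "status": metadata.get("status"),
            "impressions": metrics.get("impressions"),
            "clicks": metrics.get("clicks"),
            "conversions": metrics.get("conversions"),
            "spend": metrics.get("spend"),
        })
    return rows


# A trimmed version of the sample response, used only to demonstrate the call.
sample = {
    "results": [
        {
            "metadata": {
                "id": "00f4b02153ee75f3c9dc4fc128ab041962",
                "campaignId": "abf4b02153ee75f3cadc4fc128ab0419ab",
                "campaignName": "Boost 'ABC' Brand",
                "status": "APPROVED",
            },
            "metrics": {"impressions": 18479333, "clicks": 58659,
                        "conversions": 12, "spend": 9187.16},
        }
    ]
}
rows = flatten_results(sample)
```

Using `.get()` throughout means a missing field becomes `None` rather than raising an error, which maps naturally to a NULL column later on.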

Step 4: Load Data

Now it’s time to load the data into PostgreSQL. Use the CREATE TABLE command to create a table in the database. Sample code is shown below:

```sql
CREATE TABLE client(
    id serial,
    first_name VARCHAR(50),
    last_name VARCHAR(50),
    email VARCHAR(50),
    contact_num VARCHAR(50)
);
```

After creating the table, use INSERT statements to add data to your PostgreSQL table row by row.
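For Outbrain data specifically, the table and INSERT statement might look like the sketch below. The table name `outbrain_content_performance`, its columns, and the helper names are hypothetical. The `%s` placeholders assume a driver such as psycopg2, where `conn` would come from `psycopg2.connect(...)`; passing values as parameters instead of formatting them into the SQL string keeps values such as `Boost 'ABC' Brand` from breaking the statement.

```python
# Hypothetical schema for flattened Outbrain content rows.
CREATE_TABLE_SQL = """
CREATE TABLE IF NOT EXISTS outbrain_content_performance (
    content_id    VARCHAR(64) PRIMARY KEY,
    campaign_id   VARCHAR(64),
    campaign_name VARCHAR(255),
    impressions   BIGINT,
    clicks        BIGINT,
    conversions   INTEGER,
    spend         NUMERIC(14, 2)
);
"""

COLUMNS = ("content_id", "campaign_id", "campaign_name",
           "impressions", "clicks", "conversions", "spend")

# One %s placeholder per column (psycopg2-style parameter binding is assumed).
INSERT_SQL = (
    "INSERT INTO outbrain_content_performance ("
    + ", ".join(COLUMNS)
    + ") VALUES ("
    + ", ".join(["%s"] * len(COLUMNS))
    + ");"
)


def row_to_params(row):
    """Order a flat row dictionary to match COLUMNS; missing keys become None."""
    return tuple(row.get(column) for column in COLUMNS)


def load_rows(conn, rows):
    """Create the table if needed, insert every row, and commit once at the end."""
    cur = conn.cursor()
    try:
        cur.execute(CREATE_TABLE_SQL)
        cur.executemany(INSERT_SQL, [row_to_params(row) for row in rows])
        conn.commit()
    finally:
        cur.close()
```

Because `content_id` is declared as the primary key in this sketch, re-running the load with the same rows would fail with a uniqueness error; whether to skip, update, or reject duplicates is a design decision left open here.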

Step 5: Final Check

Run a SQL query to check whether the data was uploaded to PostgreSQL correctly.
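A simple form of this check is to compare the row count in the table with the number of records you extracted. The sketch below assumes `conn` is any Python DB-API connection (for PostgreSQL, typically one from psycopg2); the function names are hypothetical.

```python
import re


def count_rows(conn, table_name):
    """Return the number of rows in table_name."""
    # Table names cannot be passed as bound parameters, so allow only
    # plain identifiers before putting the name into the query text.
    if not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_]*", table_name):
        raise ValueError(f"unexpected table name: {table_name!r}")
    cur = conn.cursor()
    try:
        cur.execute(f"SELECT COUNT(*) FROM {table_name}")
        return cur.fetchone()[0]
    finally:
        cur.close()


def load_is_complete(conn, table_name, expected_rows):
    """True when the table holds exactly as many rows as were extracted."""
    return count_rows(conn, table_name) == expected_rows
```

A matching row count does not prove every value is correct, so it can be worth also comparing an aggregate, such as the sum of a numeric column, against the totals reported by the API.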

Conclusion

You have learned two methods to integrate Outbrain to PostgreSQL. Loading data into PostgreSQL manually is straightforward, but it requires hands-on experience and technical knowledge.

With Hevo Data, in contrast, you can upload data in a few clicks, and a non-technical person can do the same. It makes the Outbrain PostgreSQL integration process easy and user-friendly. Hevo’s reliable, flexible, and easy-to-use GUI makes it a strong choice for loading data from Outbrain into a PostgreSQL database.

Hevo offers a no-code data pipeline that automates your data transfer process, allowing you to focus on other aspects of your business such as analytics, marketing, and customer management.

This platform allows you to transfer data from 150+ sources (including 60+ Free Sources) such as Outbrain to a destination of your choice such as PostgreSQL, Cloud-based Data Warehouses, etc. It will provide you with a hassle-free experience and make your work life much easier.

Want to take Hevo for a spin? Explore Hevo’s 14-day free trial and experience the feature-rich Hevo suite firsthand. You can also have a look at the unbeatable pricing that will help you choose the right plan for your business needs.

FAQs

1. Can I move data from Outbrain to BigQuery?

Yes, you can move data from Outbrain to BigQuery using custom pipelines. This typically involves exporting Outbrain reports via their API, then transforming the data as needed and loading it into BigQuery using tools like Google Cloud Storage or Cloud Dataflow.

2. How can I extract data from Outbrain?

You can extract data from Outbrain using its API, which allows you to pull reports and campaign performance data in JSON or CSV format.

3. How do I schedule regular data transfers from Outbrain to PostgreSQL?

Use cron jobs, cloud services, or ETL tools with built-in scheduling features to automate and schedule data extraction and loading into PostgreSQL.

4. Can I load large amounts of data from Outbrain to PostgreSQL efficiently?

Yes, to handle large data volumes efficiently, you can batch the data, use bulk inserts, or leverage tools like PostgreSQL’s COPY command for faster data loading.
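As a rough sketch of the COPY approach, the code below writes rows to an in-memory CSV buffer and streams it to PostgreSQL. It assumes psycopg2, whose cursors provide `copy_expert(sql, file)`; the function names are hypothetical, and the table and column names are expected to be trusted constants from your own code, never user input.

```python
import csv
import io


def rows_to_csv_buffer(rows, columns):
    """Serialize row dictionaries to an in-memory CSV file, one line per row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for row in rows:
        # The csv module writes None as an empty field, which COPY in
        # CSV format reads back as NULL by default.
        writer.writerow([row.get(column) for column in columns])
    buffer.seek(0)
    return buffer


def copy_rows(conn, table, columns, rows):
    """Bulk-load rows with COPY ... FROM STDIN (psycopg2 connection assumed)."""
    copy_sql = (
        f"COPY {table} ({', '.join(columns)}) "
        "FROM STDIN WITH (FORMAT csv)"
    )
    cur = conn.cursor()
    try:
        cur.copy_expert(copy_sql, rows_to_csv_buffer(rows, columns))
        conn.commit()
    finally:
        cur.close()
```

For very large extracts, calling `copy_rows` once per batch of a few thousand rows keeps memory use bounded while still avoiding the per-statement overhead of individual INSERTs.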