There is a growing need among businesses to capture relevant information from the data they produce because businesses that fail to capture data and transform it into timely and valuable information will struggle to stay competitive and viable.

To be able to do this you will need to have Data Connectors that can gather all kinds of data from different sources, build effective Data Pipelines to access Data Warehouses and Data Lakes to gain knowledge from these produced data to attain your desired goals. These connectors will be needed to extract, load, and transform your data into formats on which analysis can be done on them.

There is a common link between ETL and ELT as they can both be used to get data from disparate sources but they both have slightly different steps in the process. In ELT, you do not need to transform data before loading it into a Data Warehouse as this can be carried out there making it easier to load data of any kind and format because it does not require a specific standard from the Data Warehouse before loading.

The purpose of this article is to draw your attention to the procedures of Snowflake ELT by defining what it means, stating its advantages, and ultimately mentioning some Snowflake ELT tools that are popular in the market.

Table of Contents

Introduction to Snowflake

Snowflake is a Data Warehouse that has been built for the Cloud. It is a Software-as-a-Service (SaaS) platform that provides a fast and robust engine for storing and querying data, built on Amazon Web Service, Microsoft Azure, and Google infrastructure.

Snowflake is ideal for organizations that do not want to have dedicated resources for setup, maintenance, and support for in-house servers as its architecture separates its storage unit from its compute unit, thereby scaling differently so customers can use and pay for both independently. Snowflake is highly scalable and flexible with Big Data.

Introduction to ELT

ELT stands for Extract, Load, and Transform, it is similar to the popular ETL (Extract, Transform, and Load) process but differs in the arrangement of the procedures. In ELT, data is extracted from disparate sources and loaded into a target datastore, mainly a data warehouse from where it is then transformed to meet certain criteria. ELT is a relatively new technology that is made possible by modern, cloud-based server warehouses that offer near-endless storage capabilities and have high scaling processing.

With ELT used in your business, you maximize the ingestion speed of your data as you do not need to validate or transform the data before loading it into the data warehouse unlike in the ETL process where data needs to be transformed or altered to meet the required standard of the Data Warehouse.

What this simply means is that with ELT tools, the order of events is changed slightly as the data is first loaded into the data warehouse from which it will be transformed to the desired format to come up with useful insights avoiding data staging, and this is made possible as ELT leverages on a target’s warehouse Massive Parallel Processing (MPP) architecture to induce the transformation of the data.

This is done for all kinds of data including Structured, Semi-Structured, Unstructured, and even raw data types. ELT processes also work in tandem with Data Lakes.

Read more about ETL/ELT:

How to Build an ELT Pipeline in Snowflake?

You can build an ELT pipeline using Snowflake by performing the following steps:

1. Extract data from the source

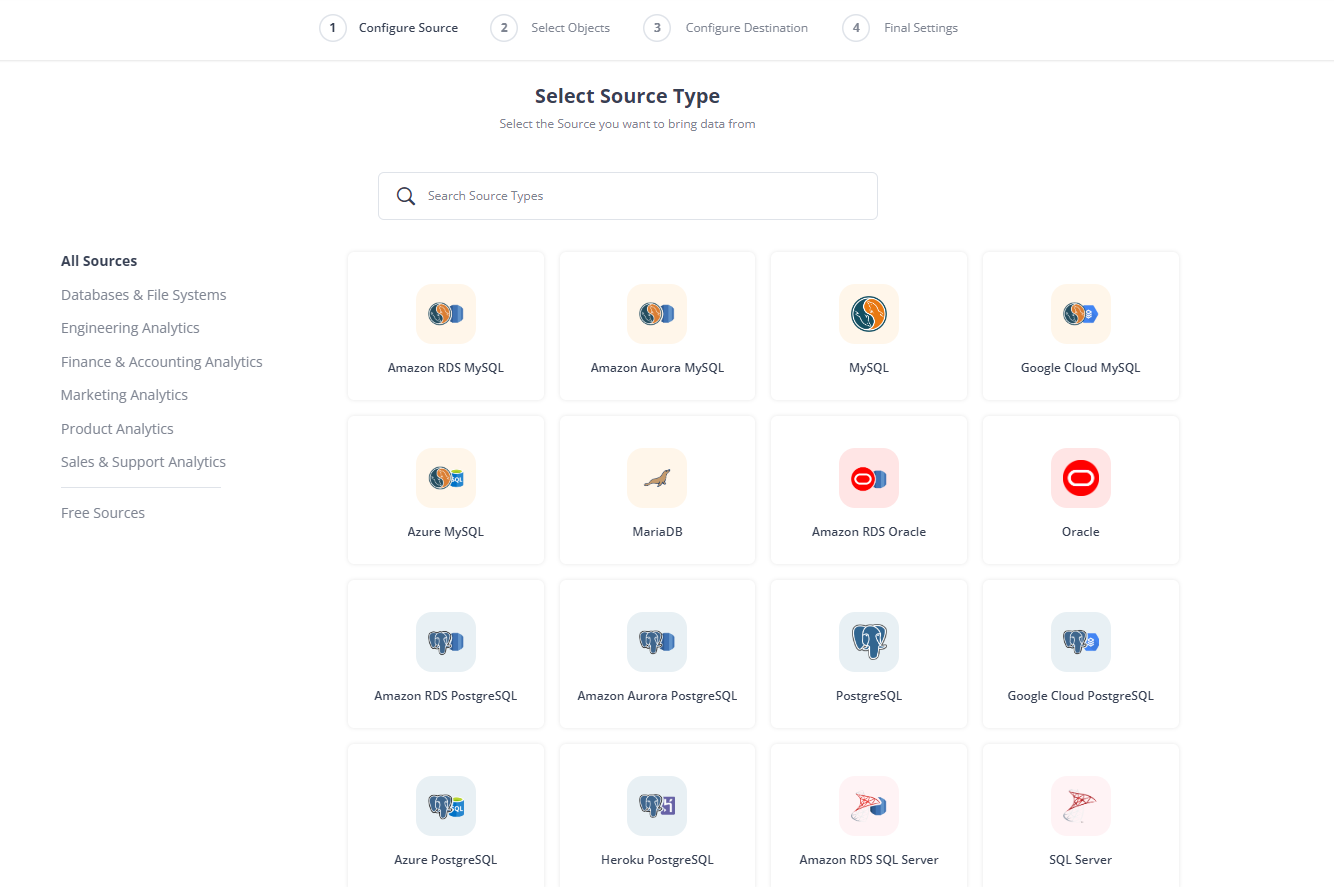

- After Logging into your Hevo Account, click on “Create Pipeline.”

- Select your Source from a list of 150+ sources.

2. Connect to Snowflake: Select Snowflake as your Destination and fill in your details as shown in the image below.

After this, you can monitor and optimize your pipeline to meet your needs.

Here’s a list of some of the best Snowflake ELT Tools available in the market that you can choose from to simplify Snowflake ETL. Selecting the right tool for your business needs has never been this easy:

Get Started with Hevo for FreeTop Snowflake ELT Tools in the Market

Snowflake supports both ETL and ELT tools and works with a large range of integration tools. Using Snowflake as a Data Warehouse or Data Lake makes it easier to load data into its server without having to come up with complex Data Pipelines and Schemas. Snowflake also has Snowpark which helps Developers to interact directly with Snowflake without having to move data.

This section of the article will focus on the best Snowflake ELT tools, below is a list of some Snowflake ELT tools:

| Tool | Key Features | Use Cases |

| Hevo Data | – No-code data pipeline – Supports 150+ data sources – Fault-tolerant architecture – Schema management | – Real-time data transfer – Data enrichment and transformation |

| Blendo | – 40+ integrations – Native connectors for replicating data – Centralized location for datasets | – CRM & ERP data replication – Data integration across apps |

| Matillion | – Cloud-based ETL – Visual workspace with drag-and-drop – Requires SQL knowledge | – ETL for Snowflake and other data warehouses – Complex pipelines |

| Luigi | – Python library for batch processing – Workflow management – Visualizations | – Batch data processing – Complex task dependency management |

| Apache Airflow | – Open-source workflow orchestration – Python-based DAGs – Supports event-driven tasks | – Data pipeline orchestration – Scheduling complex workflows |

| StreamSets | – DataOps platform – Adaptive pipelines – Hybrid and multi-cloud architecture | – Hybrid cloud data ingestion – Schema change adaptation |

| Etleap | – AWS-based Snowflake integration – Intuitive GUI for building pipelines- 50+ integrations | – Low-maintenance Snowflake ETL – No-coding data pipeline setup |

| Talend | – Big Data integration – Data integrity across cloud and on-premise – Connects to all major cloud systems | – Big Data Integration – Data integrity across cloud and on-premise – Connects to all major cloud systems |

1) Hevo Data

Hevo Data, a No-code Data Pipeline helps to load data from any data source such as Databases, SaaS applications, Cloud Storage, SDK’s, and Streaming Services to your Snowflake and simplifies the ETL and ELT process.

It supports 150+ data sources (Including 60+ Free Data Sources) and is a 2-step process by just selecting the data source, providing valid credentials, and choosing the destination. Hevo not only loads the data onto the desired Data Warehouse such as Snowflake but also enriches the data and transforms it into an analysis-ready form without having to write a single line of code. Its fault-tolerant architecture ensures that the data is handled in a secure, consistent manner with zero data loss.

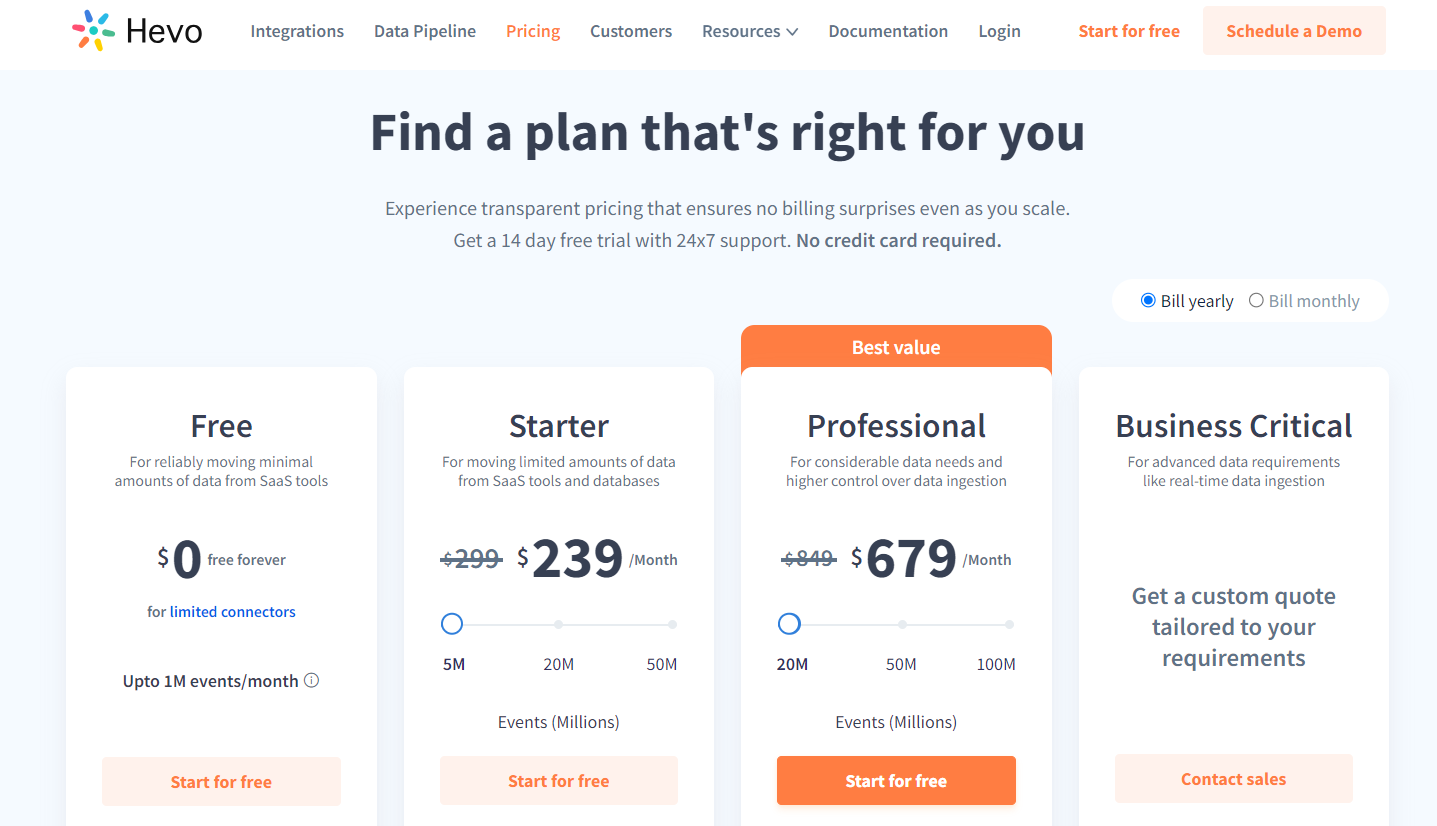

Hevo Data provides users with 4 different subscription offerings, namely, Free, Starter, Professional, and Business. The free plan houses support for unlimited free data sources, allowing users to load their data to a data warehouse/desired destination for absolutely no cost! The basic Starter plan is available at $149/month and can be scaled up as per your data requirements. You can also opt for the Business plan and get a tailor-made plan devised exclusively for your business.

Hevo Data also provides users with a 14-day free trial. You can learn more about Hevo Data’s pricing here.

2) Blendo

This is one of the best-known Snowflake ELT tools available in the market today. It enables customers to have a centralized location for all kinds of datasets and inputs from different sources by using native connectors providing fast methods to replicate databases, applications, files, and events into your data warehouse. Blendo has over 40+ integrations and is very useful when moving data from CRM and ERP systems such as Snowflake, Facebook Ads, Google Ads, Google Analytics, Mailchimp, Salesforce, and many more.

3) Matillion

Matillion is a Cloud-based ETL platform specifically built for Snowflake, Amazon Redshift, Google BigQuery, and Amazon Redshift. Users can use it by simply dragging components onto visual workspaces to create pipelines though, it requires you to have SQL knowledge to use it efficiently. You can create numerous pipelines in Matillion and its data sources are made up of a large range of databases, social networks, CRMs, and ERPS.

4) Luigi

Luigi is a Snowflake ETL tool built at Spotify for the automation and creation of batch processes. It is a Python library that provides a framework for building complex data pipelines of batch jobs comprising thousands of tasks to handle dependency resolution, workflow management, visualizations, etc. It is also used in building support for running Python MapReduce on Hadoop, Hive, and Pig.

5) Apache Airflow

This is a free Open-Source Snowflake ELT tool started at Airbnb and over time has been created by the community to orchestrate complex data processing pipelines. It is used to create and schedule jobs, build Data Workflows and Pipelines. Airflow’s workflow is a sequence of tasks defined using the Python programming language, the tasks can be initiated on a schedule or by an event.

6) StreamSets

StreamSets is an Open-Source data collector software with which you can build a data ingestion pipeline needed to power DataOps across hybrid and multi-cloud architecture. These Data Pipelines are adjustable as they automatically update to reflect changes in schema, infrastructure, and schematics. StreamSets is easy to use and highly extensible as new data sources can be added at any time. It also integrates with Java platforms making it a popular analysis tool for businesses.

7) Etleap

It is a Snowflake ELT tool built on the base of Amazon Web Services (AWS) to allow you to create robust Data Pipelines into Warehouses with low maintenance. Etleap is used with Snowflake to provide an intuitive GUI for the creation of Data Pipelines for the extraction, loading, and transformation of data. It has integrations with over 50+ data sources and connectors that can be built quickly without necessarily having coding skills.

8) Talend

Talend is a modern Big Data and integration software that can be used to connect to data sources to extract, load, and transform data. Talend helps you to maintain healthy data across your on-premise and cloud data systems. It connects to all major cloud offerings be it Data Warehouses or Cloud infrastructure providers to allow you to have clean, complete, and uncompromised data..

Key Advantages of Snowflake ELT Tools

Now that you have explored the best Snowflake ELT tools popularly used in the industry, in this section, you will unravel some of the benefits of the Snowflake ELT tools.

- ELT Flexibility: The main advantage of ELT is its flexibility to store unstructured data. You can save any type of information using ELT and transform or structure them at your convenience. What this does is provide immediate storage for all your data which can be accessed whenever you need to transform them in the future.

- High Speed: Snowflake ELT tools allow all your data to go immediately into the storage system making it a faster option than ETL. From there, you can determine the extracted data to transform and subsequently carry out an analysis.

- Ease of Loading: You do not have to develop complex Snowflake ELT processes before ingesting data into a Warehouse or Data Lakes thereby making it easy for Developers and Data Analysts when working with new information.

- Time Efficient: Having to carry out the transformation of your data after it has been loaded into the data warehouse cuts down on the time it takes to load the data into its destination. Cleansing or modifying the data to meet the standard of the Data Warehouse is not needed as you do not need to transform it before ingestion because all kinds of data are accepted thereby saving time.

- Effective Collaboration with Data Lake: Snowflake ELT paired with a Data Lake lets you load your ever-expanding and changing pool of raw data immediately without hesitation since there is no need to change the data into any special format before storing it in the lake.

- Low Maintenance: Since Snowflake ELT is mainly Cloud-based, it relies on automated processes meaning you do not need to perform any manual activity or updates ensuring low maintenance.

- Transformation of only the Data you Need: Snowflake ELT tools allow you to transform only the data you need for a particular analysis and the data can be transformed at the go, in different ways to produce alternative metrics, forecasts, or reports.

Best Practices for Snowflake ELT

- Use Efficient Data Loading Techniques: Decide which data loading technique suits your migration. Choose the ‘copy Into’ command for bulk loading and the snowpipe method for real-time data sync.

- Implement Data Validation Checks: Validate data before and after loading to ensure accuracy. This could include checks for data completeness, format consistency, and schema validation. You can do this using primary keys, foreign keys, and other unique constraints.

- Handle Duplicate Data: Check your data for duplicates or remove them post-load to ensure data quality and better business reporting.

- Audit Logs and Alerts: Implement logging and alerts for tracking data loading and transformation processes. This ensures that any issues are detected and addressed promptly.

- Ensure Consistent Data Formats: Make sure the format of the data you extracted from the source matches the supported data format of snowflake.

Conclusion

This article has given you a comprehensive overview of ELT and shown you some of the best Snowflake ELT tools to use with Snowflake. It covered a lot on ELT and highlighted some of the Snowflake ELT tools to work with using Snowflake. There are a lot of others not mentioned in this article as there is an increasing demand by companies to invest more time and resources in running Analytics and generating insights from their data or data management in general, there is also an increase in the availability of tools to handle such demands.

However, extracting complex data from a diverse set of data sources like Databases, CRMs, Project management Tools, Streaming Services, Marketing Platforms, and loading to Snowflake can be quite challenging. This is where a simpler alternative like Hevo can save your day! Hevo Data will help simplify the ELT and management process of both the data sources and the data destinations such as Snowflake.

Frequently Asked Questions

1. What is ETL in Snowflake?

ETL in Snowflake involves extracting data, transforming it, and loading it into the Snowflake data warehouse, with support for both ETL and ELT processes.

2. Is Snowflake Just SQL?

No, Snowflake is a cloud data platform that includes SQL but also offers data warehousing, data lakes, and data sharing capabilities.

3. What is the Difference Between Snowflake and Databricks for ETL?

Snowflake is SQL-centric and ideal for ELT, while Databricks is built on Apache Spark, excelling in big data processing and machine learning.