Easily move your data from Typeform to Redshift to enhance your analytics capabilities. With Hevo’s intuitive pipeline setup, data flows in real time.

Typeform allows organizations to connect with their customers by building engaging forms and conducting surveys. Organizations can use Typeform to collect data about customer support, onboarding, feedback, and more. The collected data can be kept in centralized storage and used for analysis, product development, customer mapping, and more. A data warehouse is a good fit for such data because it can handle large volumes while keeping query performance fast. Amazon Redshift is one such data warehousing service. You can move your Typeform data into it using standard APIs, the user interface, or third-party ETL (Extract, Transform, and Load) tools.

In this article, you will learn how to connect Typeform to Redshift using APIs and manual processes.

Prerequisites

- Basic understanding of integration.

What is Typeform?

Developed in 2012, Typeform is a top-rated platform that businesses use to create interactive forms and surveys for communicating with customers. With Typeform, users can easily create forms and surveys without writing a single line of code. Users can get started with templates and make changes by just dragging and dropping elements.

Typeform allows businesses to create visually appealing forms and surveys that attract and engage customers to answer their questions. Using Typeform, organizations can understand their customers by obtaining answers to a particular set of questions. Organizations can then store the data obtained from these forms and surveys in a centralized repository, which can be used for further analysis.

Key Features of Typeform

Here are a few key features of Typeform:

- Interactive Designs: Typeform offers several interactive designs that encourage customers to complete surveys and forms. It includes default templates with drag-and-drop functionality, which help users build colorful and user-friendly forms.

- Personalized Journeys: Unlike other tools, Typeform uses Logic Jumps, which respond to people’s answers by filtering the questions. As a result, respondents never see questions that are irrelevant to their responses.

Method 1: Connect Typeform to Redshift Using Hevo

Hevo Data, an Automated Data Pipeline, provides a hassle-free way to connect Typeform to Redshift within minutes through an easy-to-use no-code interface. Hevo is fully managed and automates not only loading data from Typeform but also enriching it and transforming it into an analysis-ready form, without you having to write a single line of code.

Method 2: Connect Typeform to Redshift Manually

This method is time-consuming and somewhat tedious to implement. Users have to write custom code for two processes: extracting data from Typeform and ingesting it into Redshift. This method is suitable for users with a technical background.

What is Amazon Redshift?

Developed in 2012, Amazon Redshift is a popular, scalable, fast, and reliable data warehousing service. It is built on a column-oriented database and connects SQL-based clients and BI tools so that users can work with data in batch or in real time. You start with a set of nodes called an Amazon Redshift cluster, upload your datasets, and run queries for analysis. Clusters can be managed through the AWS Command Line Interface, the Amazon Redshift Query API, or the AWS Software Development Kit. Redshift also automates administrative tasks such as memory management, configuration, resource allocation, and more.

Key Features of Amazon Redshift

Here are a few key features of Amazon Redshift:

- ANSI SQL: Amazon Redshift supports ANSI SQL and industry-standard ODBC and JDBC connections, enabling you to use your existing SQL clients and BI tools. You can also query open file formats such as CSV, JSON, ORC, Avro, Parquet, and more with ANSI SQL.

- AQUA (Advanced Query Accelerator): AQUA is a distributed, hardware-accelerated cache for Amazon Redshift. According to AWS, it can make Redshift up to 10x faster than other enterprise cloud data warehouses.

- Fast Performance: Amazon Redshift gives fast performance due to its features like massively parallel processing, columnar data storage, data compression, query optimizer, result caching, and compiled code.

Method 1: Connect Typeform to Redshift Using Hevo

Configuring Typeform as a Source

Configure Typeform as the Source in your Pipeline by following the instructions below:

- Step 1: In the Asset Palette, choose PIPELINES.

- Step 2: In the Pipelines List View, click + CREATE.

- Step 3: Choose Typeform from the drop-down menu on the Select Source Type page.

- Step 4: Click + ADD TYPEFORM ACCOUNT on the Configure your Typeform account page.

- Step 5: Use your Typeform credentials to log in.

- Step 6: To grant Hevo access to your Typeform account, click Accept.

- Step 7: Set the following in the Configure your Typeform Source page:

- Pipeline Name: A name for your Pipeline that is unique and does not exceed 255 characters.

- Historical Sync Duration: The duration for which the existing data in the Source must be ingested.

- Select Workspaces: The workspaces in your Typeform account from which you want to ingest data.

- Step 8: Click TEST & CONTINUE.

- Step 9: Proceed to setting up the Destination and configuring the data ingestion.

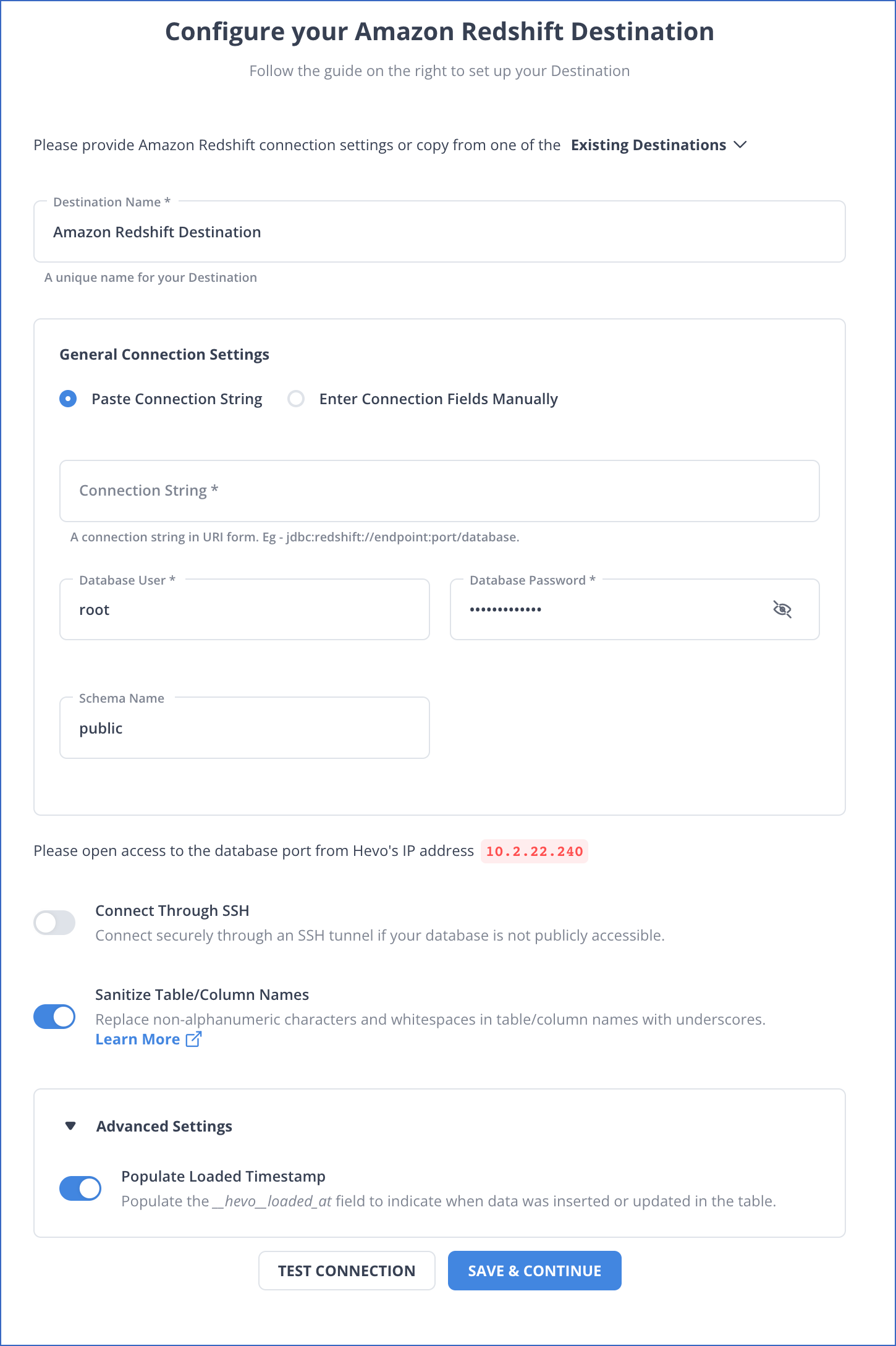

Configure Redshift as a Destination

To set up Amazon Redshift as a destination in Hevo, follow these steps:

- Step 1: In the Asset Palette, select DESTINATIONS.

- Step 2: In the Destinations List View, click + CREATE.

- Step 3: Select Amazon Redshift from the Add Destination page.

- Step 4: Set the following parameters on the Configure your Amazon Redshift Destination page:

- Destination Name: Give your destination a unique name.

- Database Cluster Identifier: The IP address or DNS of the Amazon Redshift host is used as the database cluster identifier.

- Database Port: The port on which your Amazon Redshift server listens for connections is known as the database port. 5439 is the default value.

- Database User: A user in the Redshift database with a non-administrative role.

- Database Password: The user’s password.

- Database Name: The name of the destination database into which the data will be loaded.

- Database Schema: The Destination database schema’s name. The default setting is public.

- Step 5: To test connectivity with the Amazon Redshift warehouse, click Test Connection.

- Step 6: When the test is complete, select SAVE DESTINATION.

Method 2: Connect Typeform to Redshift Manually

You can connect Typeform to Redshift either through the standard APIs or by manually exporting and importing data.

- Connecting Typeform to Redshift using APIs

- Connecting Typeform to Redshift by Exporting and Importing Data

Connecting Typeform to Redshift using APIs

Follow the below steps to connect Typeform to Redshift:

- Step 1: Getting data out of Typeform

Typeform provides several APIs for retrieving information. For example, to retrieve the responses to a form, send a GET request to the URL below.

https://api.typeform.com/forms/{form_id}/responses
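As a rough illustration of the request above, the sketch below builds and sends it with Python's standard library. It assumes you authenticate with a Typeform personal access token sent as a Bearer header, that the endpoint accepts `page_size` and `since` query parameters, and that the JSON body lists responses under an `items` key. The function names are hypothetical, so check the details against Typeform's API reference before relying on them.

```python
import json
import urllib.parse
import urllib.request

API_BASE = "https://api.typeform.com"


def build_responses_request(form_id, token, page_size=100, since=None):
    """Build a GET request for /forms/{form_id}/responses.

    Assumptions: `token` is a personal access token, and `page_size` and
    `since` are query parameters the endpoint accepts.
    """
    params = {"page_size": page_size}
    if since is not None:
        params["since"] = since
    url = (
        f"{API_BASE}/forms/{urllib.parse.quote(form_id)}/responses?"
        + urllib.parse.urlencode(params)
    )
    headers = {"Authorization": f"Bearer {token}"}
    return urllib.request.Request(url, headers=headers, method="GET")


def fetch_responses(form_id, token, **kwargs):
    """Send the request and return the list of responses.

    Assumption: the response body is JSON with the responses under "items".
    """
    request = build_responses_request(form_id, token, **kwargs)
    with urllib.request.urlopen(request, timeout=30) as resp:
        return json.load(resp).get("items", [])
```

Calling `fetch_responses("<form_id>", "<token>")` would then return one dictionary per submitted response. Keeping request construction separate from the network call makes the URL and headers easy to inspect without contacting the API.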

- Step 2: Preparing Typeform data

If you do not already have a data structure to hold the data, create a schema for your tables. Each value in a form response should map to a predefined data type such as String, Integer, or Datetime. Then, build a table that can receive the values in the response.
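One way to picture this preparation step is to flatten each response into a single row with typed columns. The sketch below is only an illustration. It assumes each response carries `response_id`, `submitted_at`, and an `answers` list, that each answer names its field in `field.ref`, and that the value sits under the key named by the answer's `type`. The `COLUMNS` mapping and its column names are hypothetical and would come from your own form.

```python
# Hypothetical mapping: form field "ref" -> (target column name, type caster).
COLUMNS = {
    "name": ("respondent_name", str),
    "score": ("nps_score", int),
}


def answer_value(answer):
    """Return the raw value of one answer.

    Assumption: the value is stored under the key named by answer["type"],
    and choice-style answers wrap it as {"label": ...} or {"labels": [...]}.
    """
    value = answer.get(answer.get("type"))
    if isinstance(value, dict):
        if "labels" in value:
            return "; ".join(value["labels"])
        return value.get("label")
    return value


def flatten_response(item):
    """Turn one response into a flat row keyed by column name."""
    row = {
        "response_id": item.get("response_id"),
        "submitted_at": item.get("submitted_at"),
    }
    # Start every mapped column as NULL so all rows share the same shape.
    for column, _ in COLUMNS.values():
        row[column] = None
    for answer in item.get("answers") or []:
        ref = answer.get("field", {}).get("ref")
        if ref in COLUMNS:
            column, cast = COLUMNS[ref]
            value = answer_value(answer)
            row[column] = cast(value) if value is not None else None
    return row
```

Answers whose field is not listed in the mapping are ignored, which keeps the table definition stable when questions are added to the form later.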

- Step 3: Loading data into Amazon Redshift

After identifying all the columns, use the CREATE TABLE statement in Redshift to create a table that can receive the retrieved data. You can insert data row by row with INSERT statements, but Amazon Redshift is not optimized for inserting one row at a time. If you want to load a large amount of data at once, first upload it to Amazon S3, and then use the COPY command to load it from S3 into Amazon Redshift.
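The bulk-load path described above could be prepared along the following lines. This is a sketch under stated assumptions: the rows are dictionaries, the table and bucket names are placeholders, and the COPY statement authorizes with an `IAM_ROLE` clause rather than access keys, which is a substitution made here for illustration. Uploading the file to S3 and executing the statement against Redshift are left out.

```python
import csv
import io


def rows_to_csv(rows, columns):
    """Serialize row dictionaries to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=columns,
        extrasaction="ignore",  # drop keys that are not target columns
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def build_copy_statement(table, columns, s3_url, iam_role_arn):
    """Build a Redshift COPY statement for a CSV file with a header row.

    Assumption: the cluster may assume `iam_role_arn` to read the S3 object.
    """
    column_list = ", ".join(columns)
    return (
        f"COPY {table} ({column_list})\n"
        f"FROM '{s3_url}'\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        "CSV\n"
        "IGNOREHEADER 1;"
    )
```

Because `rows_to_csv` writes a header line, the generated statement includes `IGNOREHEADER 1` so that the header is not loaded as data.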

- Step 4: Keeping Typeform data up-to-date

After retrieving your data and moving it from Typeform to Redshift, you’ll have to keep it up to date. Rather than replicating the full dataset each time, it is better to write a program that identifies incremental updates.

Typeform’s API results include fields like submitted_at, allowing you to identify records that are new since your last update. Once new data is loading into Amazon Redshift correctly, you can run your code on a schedule or in a polling loop to pull new responses as they appear.
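The incremental approach can be sketched as a small cursor that remembers the newest `submitted_at` already loaded. The code below assumes timestamps arrive in the form `2024-01-02T03:04:05Z` and that the stored cursor is a Unix timestamp you would pass back to the API on the next run (for example as a `since` parameter). The helper names are hypothetical.

```python
from datetime import datetime, timezone


def parse_submitted_at(value):
    """Parse a timestamp such as '2024-01-02T03:04:05Z' as an aware UTC datetime.

    Assumption: submitted_at always uses this second-precision UTC format.
    """
    parsed = datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ")
    return parsed.replace(tzinfo=timezone.utc)


def next_cursor(items, previous_cursor=None):
    """Return the newest submitted_at seen so far as a Unix timestamp.

    Falls back to `previous_cursor` when the batch has no timestamps.
    """
    stamps = [
        parse_submitted_at(item["submitted_at"])
        for item in items
        if item.get("submitted_at")
    ]
    if not stamps:
        return previous_cursor
    newest = int(max(stamps).timestamp())
    return newest if previous_cursor is None else max(previous_cursor, newest)


def new_items(items, seen_ids):
    """Drop responses that were already loaded in an earlier run."""
    return [item for item in items if item.get("response_id") not in seen_ids]
```

Filtering on `response_id` as well as on the cursor guards against loading the same response twice if a record on the boundary timestamp is returned again.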

Connecting Typeform to Redshift by Exporting and Importing Data

Connecting Typeform to Redshift manually consists of two processes: exporting Typeform data and importing it into Amazon Redshift.

Exporting Typeform Data

The first step in Typeform to Redshift integration is exporting Typeform data. It consists of two sub-processes: exporting your Typeform data and downloading your Typeform results.

- Step 1: Export Typeform data

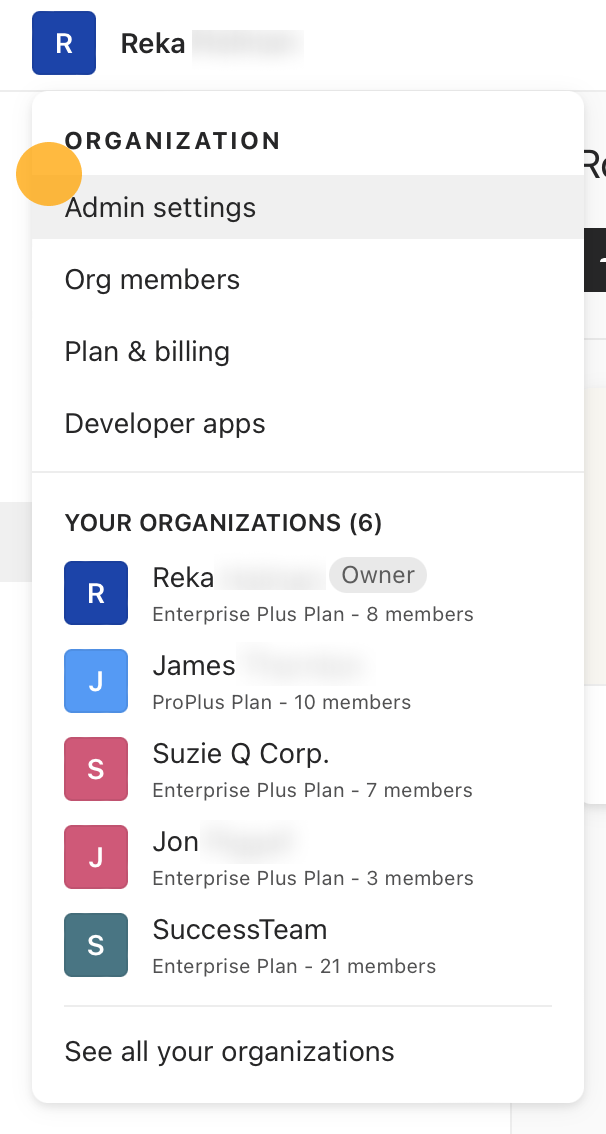

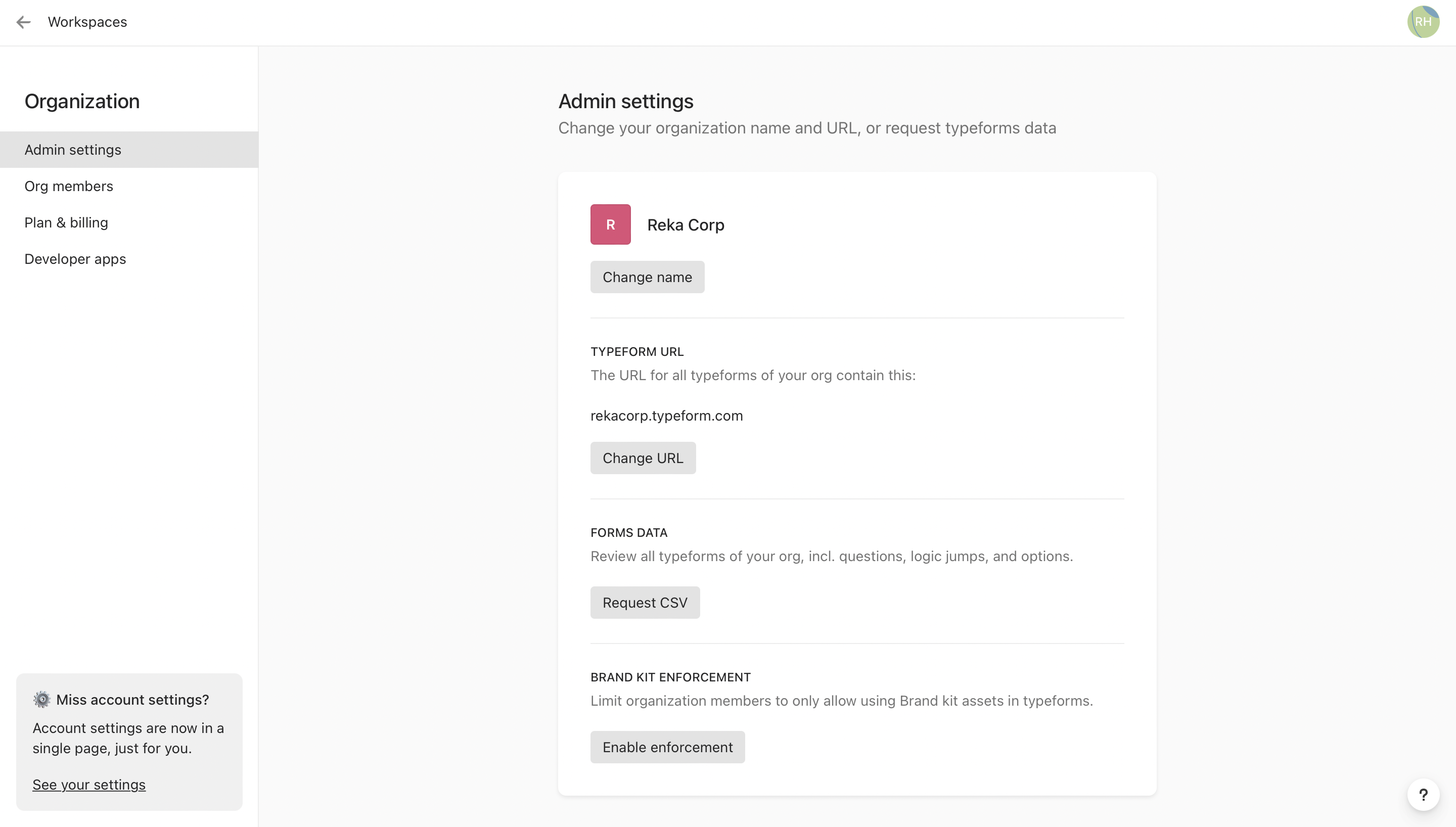

Follow the below steps for exporting Typeform data. (It is assumed that you have signed in to Typeform.)

- Click on your account in the right corner. Then, click on Settings.

- Click on the Request data tab. Typeform will send you an email with a zip file containing your Typeform data in JSON files.

- Open the JSON files.

- Step 2: Download Typeform Results

You can download the results, or responses, of your Typeform forms as an Excel sheet or a CSV file.

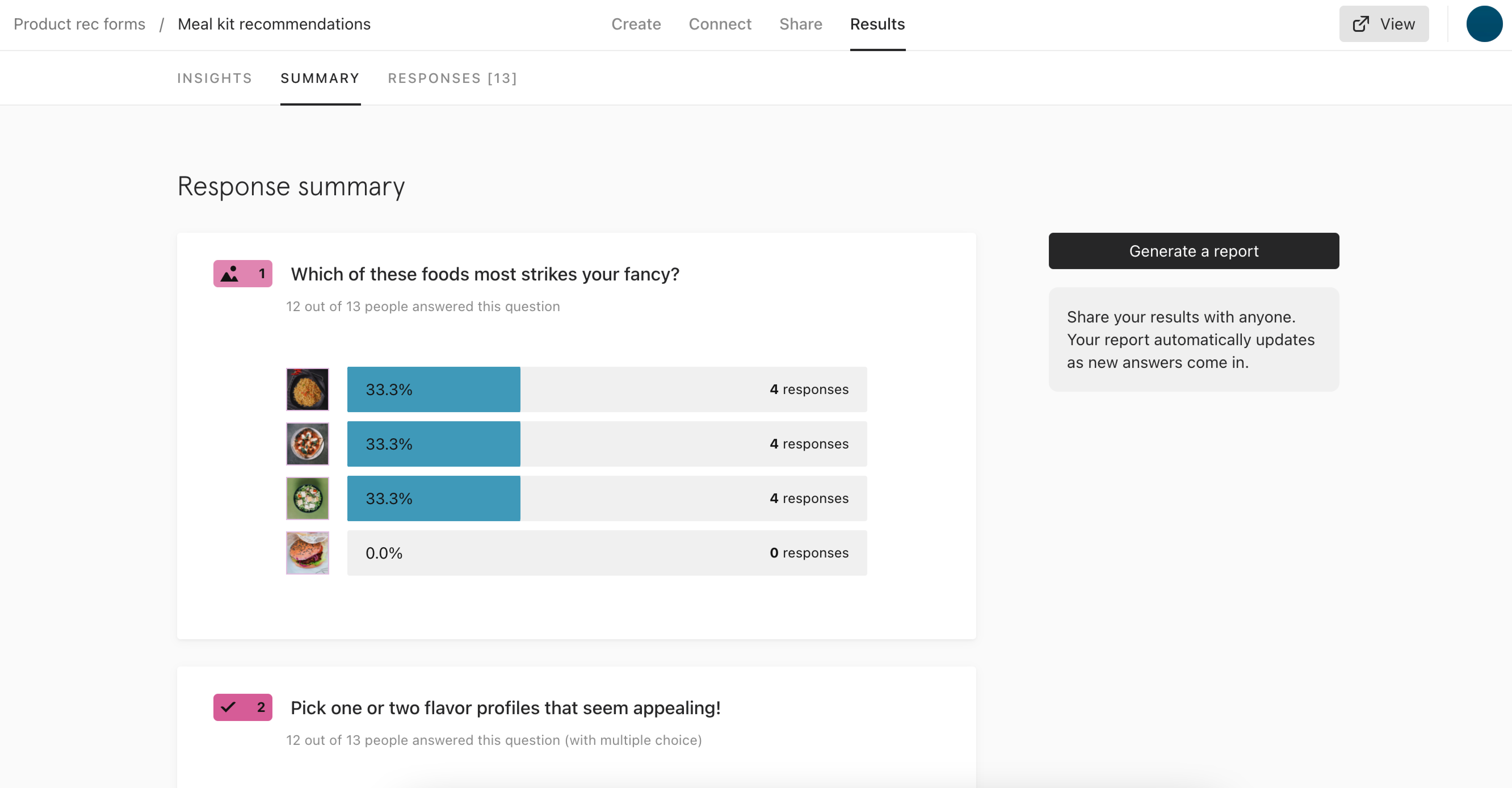

- Open your Typeform and click on Results.

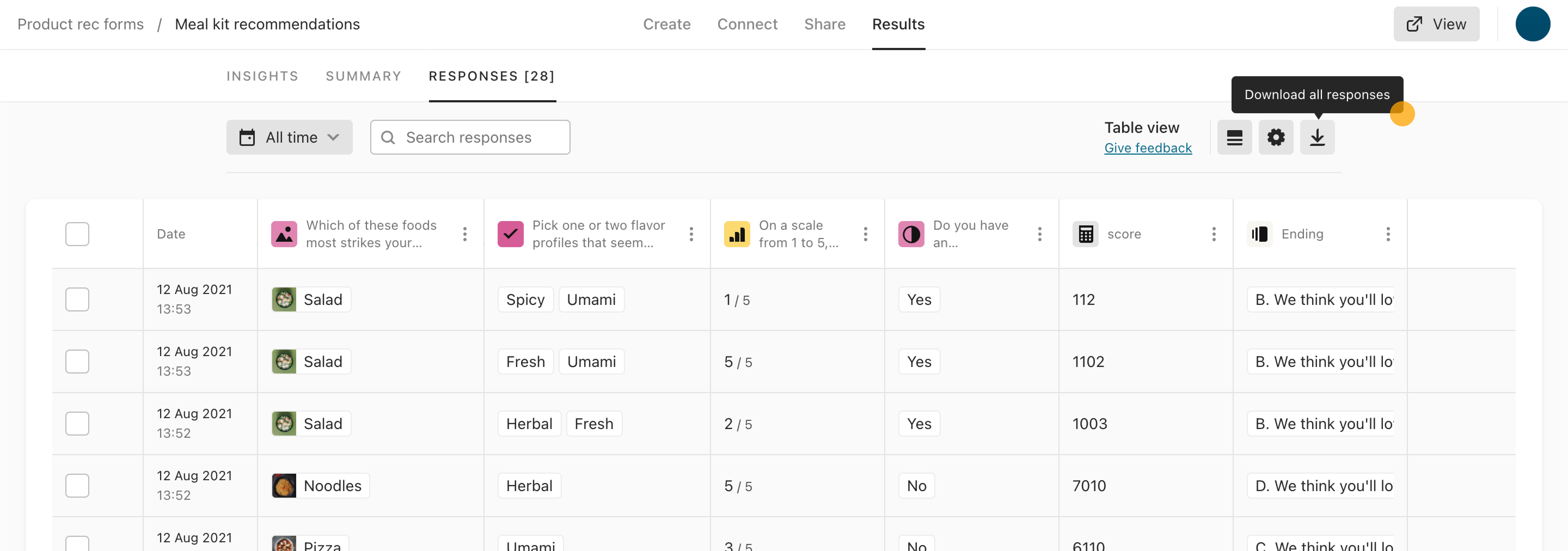

- Click on the Responses tab, which opens a new panel. Then click on the Download all responses option in the top right.

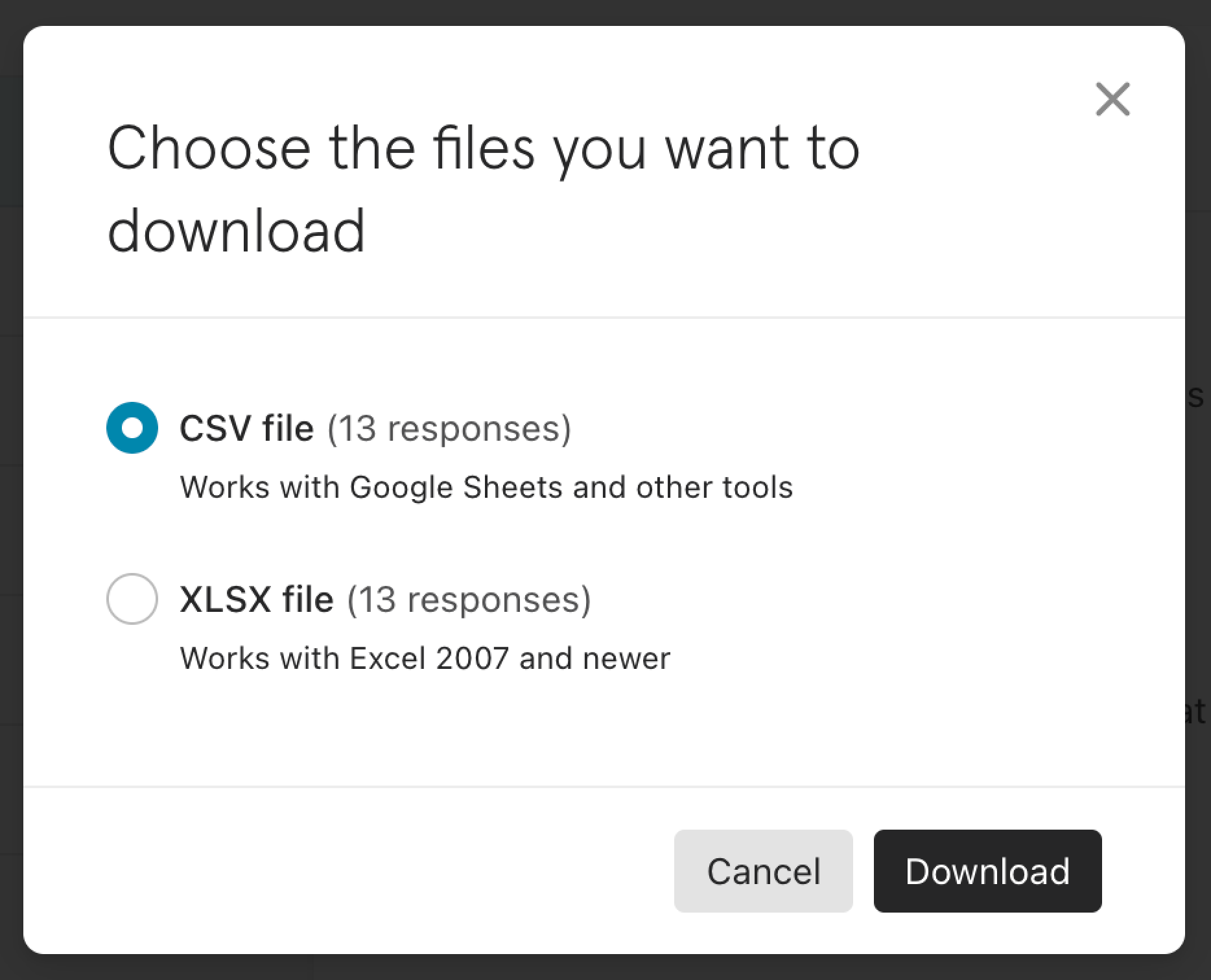

- Download your Typeform data in CSV or XLS format. Choose your option and then click on the Download button.

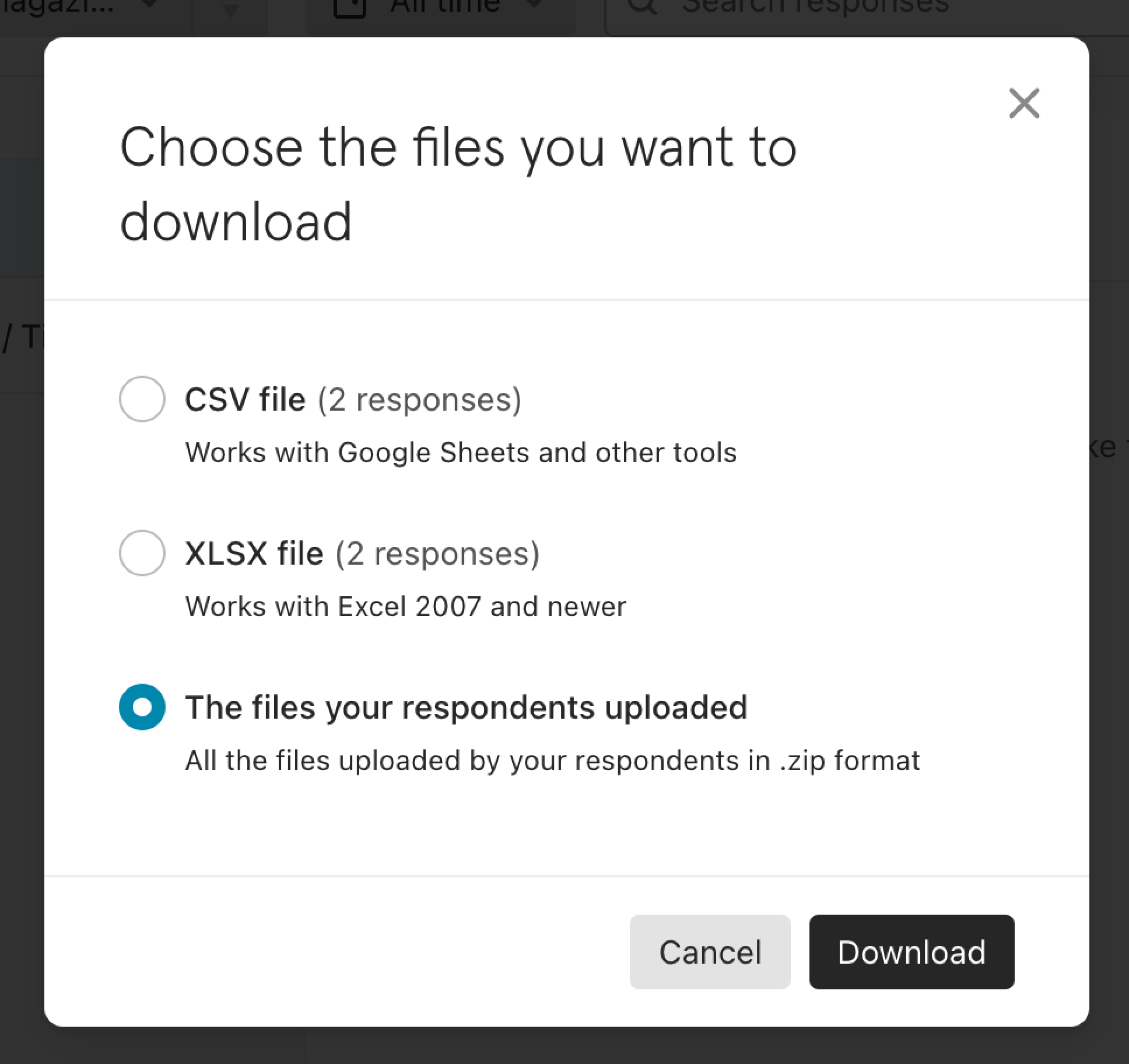

- Your Typeform data is saved to your web browser’s default download folder. If you added a File Upload question to your Typeform while designing the form or survey, you can also download all the files your customers have uploaded by clicking on the Download all responses option.

Importing Data into Amazon Redshift

The next step in connecting Typeform to Redshift is importing data into Amazon Redshift. You can import a CSV file using either of the two methods below.

- Importing a CSV file into Amazon Redshift using an Amazon S3 bucket.

- Importing a CSV file into Amazon Redshift using AWS Data Pipeline.

This article uses the first method.

- Importing a CSV file into Amazon Redshift using an Amazon S3 bucket.

Loading the CSV file into Amazon Redshift through an S3 bucket is an easy process. It involves two stages: uploading the CSV file to the S3 bucket, and loading the data from the S3 bucket into Amazon Redshift.

- Upload this CSV file to the S3 bucket.

- After loading the file into the S3 bucket, run the COPY command to pull the file from S3 and load it into the desired table. If you have compressed the file with gzip and are loading it through a manifest file, the command takes the following form:

COPY <schema-name>.<table-name> (<ordered-list-of-columns>) FROM '<manifest-file-s3-url>'

CREDENTIALS 'aws_access_key_id=<key>;aws_secret_access_key=<secret-key>' GZIP MANIFEST;

In the COPY command, you need to use the CSV keyword to help Amazon Redshift identify the file format, as shown below.

COPY table_name (col1, col2, col3, col4)

FROM 's3://<your-bucket-name>/load/file_name.csv'

credentials 'aws_access_key_id=<Your-Access-Key-ID>;aws_secret_access_key=<Your-Secret-Access-Key>'

CSV;

-- Ignore the first line

COPY table_name (col1, col2, col3, col4)

FROM 's3://<your-bucket-name>/load/file_name.csv'

credentials 'aws_access_key_id=<Your-Access-Key-ID>;aws_secret_access_key=<Your-Secret-Access-Key>'

CSV

IGNOREHEADER 1;

Limitations of Connecting Typeform to Redshift

Connecting Typeform to Redshift manually is a time-consuming and complex process. If you have the required skills or technical experts, you can write custom code for the integration as shown above, but the manual route remains challenging. It slows the process down and is not well suited to working with real-time data. To overcome such issues, you can use third-party ETL tools like Hevo, which allow seamless and automatic integration of Typeform to Redshift in a few simple steps.

Conclusion

In this article, you learned to connect Typeform to Redshift. Typeform allows businesses to communicate with customers by asking questions and conducting surveys. Typeform offers attractive features such as Logic Jump, themes, templates, data export options, and 128-bit SSL encryption that help users build interactive forms, surveys, and questionnaires.

However, as a developer, extracting complex data from a diverse set of data sources such as databases, CRMs, project management tools, streaming services, and marketing platforms like Typeform into your database or a data warehouse like Amazon Redshift can be quite challenging. If you come from a non-technical background or are new to data warehousing and analytics, Hevo Data can help!

Hevo Data will automate your data transfer process, allowing you to focus on other aspects of your business like analytics, customer management, etc. This platform allows you to transfer data from 150+ sources to cloud-based data warehouses like Snowflake, Google BigQuery, Amazon Redshift, etc. It will provide you with a hassle-free experience and make your work life much easier.

Want to take Hevo for a spin? Try Hevo’s 14-day free trial and experience the feature-rich Hevo suite firsthand.

You can also have a look at our unbeatable pricing that will help you choose the right plan for your business needs!

FAQs

1. Does AWS Redshift store data?

Yes, AWS Redshift stores data. It is a fully managed, petabyte-scale data warehouse service that stores structured data, allowing you to run complex queries and perform data analysis.

2. Which feature of AWS Redshift allows you to perform SQL queries against data stored directly on Amazon S3 buckets?

The feature is Amazon Redshift Spectrum. This enables you to run SQL queries directly on data stored in Amazon S3 without having to load the data into Redshift tables.

3. Is Redshift OLAP or OLTP?

AWS Redshift is primarily an OLAP (online analytical processing) system. It is designed for querying large datasets, performing complex data analysis, and running business intelligence tasks.