As new technologies are released, the field of Data Modeling continues to evolve.

- Data Warehousing came first, followed by MPP Data Warehouses, Hadoop, and Data Lakes. You are now living in the era of Cloud Data Warehouses.

- The rise in popularity of Cloud Data Warehouses like Snowflake has changed our perceptions about Data Transformation and Modeling.

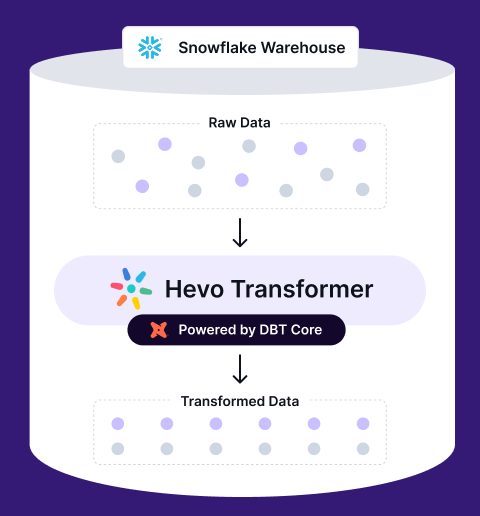

- The new ELT process extracts and loads raw source data into Snowflake, which is subsequently transformed into the final form for your analytics.

Table of Contents

What is Data Transformation?

- Data transformation is the backbone of any data-driven business.

- It’s the process of taking raw data, often from various sources and formats, and shaping it into a clean, consistent, and usable form.

- This can involve anything from converting file types to scrubbing inconsistencies and ensuring data integrity.

- Data transformation plays a crucial role in procedures like Data Integration, Migration, Warehousing, and Preparation – essentially, anywhere you need to manipulate data for further analysis.

- One of the most common workflows for data transformation is Extract-Transform-Load (ETL). In this process, data is first extracted from various sources, brought together in a central location, and then cleansed and transformed.

- This might involve tasks like:

- Data Type Conversion: Ensuring data is stored in the appropriate format (e.g., dates, numbers, text).

- Duplicate Removal: Eliminating redundant data entries for better accuracy.

- Data Enrichment: Improving the quality of the source data by adding missing information or context.

As the volume of data continues to explode, having efficient ways to manage and utilize it becomes paramount. Data transformation, when done right, guarantees that your data is:

- Accessible: Easy to find and retrieve for analysis.

- Consistent: Maintains a uniform structure throughout, regardless of source.

- Secure: Protected from unauthorized access or manipulation.

- Trustworthy: Reliable and dependable for making informed decisions.

Struggling with manual SQL and scattered workflows? Hevo Transformer, powered by dbt Core™, lets you connect to Snowflake warehouse in minutes, automate dbt workflows, and collaborate effortlessly with Git version control.

🚀 Seamless Warehouse Integration – Auto-fetch schemas, no setup hassle

⚡ Effortless dbt Workflow Automation – Build, test, and deploy in one place

📂 Built-in Version Control – Track every change with Git

When to perform Transformation in Snowflake?

Deciding when to perform transformation – before loading, while loading or after loading the data into Snowflake hinges on some critical factors such as performance requirements, data complexity and specific use cases.

- Pre-Loading Transformation: Transforming data before ingesting it into Snowflake can streamline the loading process, reduce data volume, and enhance overall performance. However, this approach carries the risk of potentially losing valuable data that might be needed for future analytics. Therefore, it is generally not recommended unless you are certain that the transformed data will suffice for all your analytical needs.

- Transformation while loading the Data: For such transformations, Snowflake offers options through commands like

<a href="https://docs.snowflake.com/en/sql-reference/sql/copy-into-table" data-type="link" data-id="https://docs.snowflake.com/en/sql-reference/sql/copy-into-table" target="_blank" rel="noreferrer noopener nofollow">COPY INTO</a>and Snowpipe, providing a versatile ecosystem for refining data. For instance, you can extract elements like JSON into distinct columns within Snowflake tables. - Post-Loading Transformation: Loading raw data into Snowflake and then transforming it offers several advantages:

- It allows you to adjust your data model and queries as business requirements evolve without the need to reload data.

- It enables quick data ingestion by focusing solely on loading operations, with transformations being executed asynchronously, ensuring data is available for analysis sooner.

- It facilitates an iterative data processing approach, where data scientists and analysts can experiment with various transformations and analyses without impacting the initial load.

- It optimizes resource utilization during the loading process by deferring complex transformations until after loading, preventing resource contention.

- Loading raw data preserves its historical state, which can be valuable for auditing, compliance, and retrospective analysis.

- See the latest conversation on Snowflake Data Transformation on Reddit

Snowflake Data Transformation Process

- The rise in popularity of Cloud Data Warehouses like Snowflake has changed our perceptions about data transformation and modeling.

- The new ELT process extracts and loads raw source data into Snowflake, which is subsequently transformed into the final form for your analytics.

- This allows businesses to take advantage of Snowflake’s low-cost, scalable compute and storage capabilities while also increasing agility by separating the Data Loading and Snowflake Data Transformation processes and workloads, with Data Engineers handling the former and Data Analysts handling the latter.

- Organizations can employ modern organizing approaches like Snowflake Virtual Data Warehouses to construct a variety of subject-specific analytical data models that are optimized for their unique needs.

Snowflake Data Transformation Process: Getting Data into CDW

The first step in Snowflake Data Transformation is getting the data into CDW (Cloud Data Warehouse). Data originates from a wide range of sources in today’s data world. SaaS apps and cloud services are the fastest-growing sources of data for analytics. The data structures and APIs for these sources are highly complicated.

As a result, the first Data Model your team will employ is a set of tables in the Cloud Data Warehouse that look like objects from your Data Sources, are categorized similarly, and have the same fields. However, because the data is still in a format similar to that of a SaaS application or cloud service object, it may be difficult to decipher for a Data Analyst.

There is one extremely crucial Snowflake Data Transformation step that must be completed. Any data that is personal or sensitive should be anonymized or concealed. This is necessary to protect data privacy and ensure regulatory compliance.

After the raw data has been loaded, the data engineering team can begin the Data Purification process. Data Engineers could use this first phase to (a) discover and fix missing or inaccurate values, (b) modify poorly structured fields, and (c) extract particular fields from complicated, multi-faceted columns using generic, standardized cleanses.

Snowflake Data Transformation Process: Canonical Data Modeling

The next step in Snowflake Data Transformation is Canonical Data modeling. The Data Engineering team can transform raw data into Canonical Data Models that represent certain subjects once the data is in the CDW and has gone through the first pass of Data Transformation. Data Models representing consumers, contacts, leads, prospects, activities, and more are examples of this.

The basic goal of Canonical Data Models is to establish shared, reusable components that may be used in a variety of scenarios. Additional advantages occur as a result of this:

- Creating a single version of the truth for each subject and field within that subject, as well as providing shared and consistent definitions and documentation for each subject’s data.

- To develop trust in the analytics community, transparency into Data Models and how they are built is required.

- To construct the Canonical Data Models, the Data Engineering team will collect needs from multiple business and analytics teams. Typically, these Data Models will be supersets of the criteria for maximum reuse and consumption. As new requirements or Data Sources become available, the Data Models will continue to evolve.

The Canonical Data Models will often blend (JOIN, UNION, etc.) data from several objects to build a rich and full set of fields to represent the subject because the raw data from the data sources is often normalized (in some cases lightly normalized and others strongly normalized). Furthermore, the Canonical Data Models may include some Data Enrichment in order to calculate new fields for standardized use in various use cases.

Snowflake Data Transformation Process: Use Case Data Modeling

The final phase in the Snowflake Data Transformation is to develop datasets tailored to the analytics use case. This is often done by the Data Analyst in Snowflake for current data modeling because it all comes down to duties and abilities:

- Data Engineers are more concerned with the data itself. Where it is stored, how it is structured and formatted, and how to obtain it rather than how the business uses the data. As a result, they’re well-suited to importing data into Snowflake and performing first-pass data modeling.

- Data Analysts are less familiar with raw data but are well-versed in how the business will use it and how it will be incorporated into analytics. As a result, use case Data Modeling and transformation is their optimal job.

Data Analysts may have a wide range of technical abilities, but typically prefer to focus on what they do best – analysis – rather than coding data transformation. This is where a low-code or no-code data transformation user interface comes in handy, as it eliminates the need for analysts to write sophisticated SQL code or Python-like programs.

Modeling and Snowflake Data Transformation of Use Case Data will often entail:

- Data cleansing that is particular to the use case, such as detecting and correcting outliers or deduping records

- Data shaping and reduction, such as sorting and structuring the data, removing unnecessary fields, or limiting the scope of the data to specified time periods or dimensions, are all examples of data shaping and reduction.

- Adding new computed fields related to the analysis or uploading local files specific to the use case, such as external or department-specific data, are examples of data enrichment.

In the Data Model, the final form will be a single flattened data structure – a very large, wide table. This, together with materialization, reduces the requirement for time-consuming JOINs every time a query is run for the analysis.

Snowflake Data Transformation Methods

Let us explore Data Transformation methods while loading the data and after loading the data in Snowflake.

Transforming while loading the Data using the COPY INTO Command

Snowflake provides a range of versatile data transformation options that can be applied during the data loading process using the COPY INTO command:

- Supported Functions: Snowflake supports functions like

CONCAT,FLOOR,REGEXP_REPLACE,SPLIT,REPLACE, among others. - Data Format Transformation: You can define file formats for data in external files, specifying delimiter characters, field enclosures, and escaping options to handle formats like CSV, JSON, or Avro.

- Column Mapping and Ordering: The

COLUMN_MAPPINGoption allows you to map columns in the external file to the target table, which is useful if the column names or orders differ. - Expression Transformation: Apply expressions to transform data during loading. This can include converting data types, performing calculations, or modifying values before loading them into the table.

- Truncate Columns: The

TRUNCATECOLUMNSoption enables truncation of data in columns that exceed the maximum length defined in the target table. - Row Filtering: Use the

WHEREclause to filter rows based on specific conditions during the loading process.

Post Load Transformations in Snowflake

1. Using SQL

To begin transforming your data using Snowflake’s SQL power, launch a fresh Snowflake worksheet and work your way through this simple example:

Let’s create a sample table with the name sample_table.

| order_id | order_date | product_info |

| 1 | 2024-04-17 11.30.00 | (“product”. “Book”, “price”: 25.99, “quantity”: 5) |

| 2 | 2024-04-21 09.30.00 | (“product”. “Pen”, “price”: 5.99, “quantity”: 10) |

The requirement is to turn the order_date into a more readable format and extract information from the JSON product_info column.

Use the following code in Snowflake SQL:

SELECT

order_id,

TO_VARCHAR(order_date, 'YYYY-MM-DD HH12:MI:SS AM') AS formatted_order_date,

PARSE_JSON(product_info):product AS product_name,

PARSE_JSON(product_info):price AS product_price,

PARSE_JSON(product_info):quantity AS product_quantity

FROM

sample_table;The output of the query should be:

| order_id | formatted_order_date | product_name | product_price | product_quantity |

| 1 | 2024-04-17 11.30.00 | Book | 25.99 | 5 |

| 2 | 2024-04-21 09.30.00 | Pen | 5.99 | 10 |

Here is what the query does:

- Uses the

TO_VARCHARfunction to convert theorder_datecolumn into a more user-friendly format. - It leverages the

PARSE_JSONfunction to extract specific details like product name, price, and quantity from the JSONproduct_infocolumn.

2. Using SQL with DBT

To streamline the data transformation and modeling process, it would be more efficient to leverage dbt (data build tool). This tool enables you to centralize your data transformation logic, integrate version control for collaborative efforts, and automate the creation of views or materialized tables.

With dbt, the code structure becomes more organized, enhancing maintainability, facilitating continuous integration and deployment (CI/CD), and simplifying testing procedures.

The dbt code follows a structured format, which could look like this:

-- transformed_sales.sql

WITH transformed_sales AS (

SELECT

order_id,

TO_VARCHAR(order_date, 'YYYY-MM-DD HH12:MI:SS AM') AS formatted_order_date,

PARSE_JSON(product_info):product AS product_name,

PARSE_JSON(product_info):price AS product_price,

PARSE_JSON(product_info):quantity AS product_quantity

FROM

{{ source('sample_table') }}

)

SELECT * FROM transformed_sales;3. Using Javascript

While dbt excels at data transformation workflows, there are situations where a JavaScript stored procedure might be a better fit.

For instance, if you need to perform a simple one-off task like splitting a column based on a delimiter, a JavaScript stored procedure can be a quicker solution. Here’s an example of how you might achieve this in JavaScript.

CREATE OR REPLACE PROCEDURE split_multi_value_column()

RETURNS STRING

LANGUAGE JAVASCRIPT

EXECUTE AS CALLER

AS

$$

try {

// Query to select rows with multi-value column

var selectQuery = `

SELECT col_name as input_string from table_name

`;

var stmt = snowflake.createStatement({sqlText: selectQuery});

var result = stmt.execute();

// Loop through the result set and split values

while (result.next()) {

var multiValueColumn = result.getColumnValue(1);

// Split values using a delimiter (e.g., comma)

var valuesArray = multiValueColumn.split('|');

// Insert new rows for each value

var insertQuery = `

INSERT INTO splitted_table_name (col1, col2, col3, col4)

VALUES ('`+valuesArray[0] +`','` +valuesArray[1] +`','` + valuesArray[2] + `','` + valuesArray[3] + `')

`;

var insertStmt = snowflake.createStatement({sqlText: insertQuery});

insertStmt.execute();

}

return "Multi-value column split completed successfully.";

} catch (err) {

return "Error: " + err;

}

$$;4. Using Python

Although Snowpark’s performance may not surpass Snowflake SQL in every scenario, it presents a compelling solution when complex transformations require the use of a more advanced programming language.

One compelling use case for leveraging Snowpark with Python is data transformation involving the conversion of latitude and longitude coordinates into area names in New York City.

import snowflake.snowpark as snowpark

from snowflake.snowpark.functions import col, udf, lit

from snowflake.snowpark.types import StringType

def lat_long_to_area(lat, long):

# Implement your geospatial logic here to determine the area in New York

# You might use geospatial libraries or APIs for accurate area mapping

# Return the area name as a string

# You can import a library that can help you doing this task!

return "Central Park" # Replace with actual area calculation

def main(session: snowpark.Session):

# Your code goes here, inside the "main" handler.

tableName = 'xxx'

latitudeColumnName = 'LATITUDE'

longitudeColumnName = 'LONGITUDE'

dataframe = session.table(tableName) \

.select(col(latitudeColumnName), col(longitudeColumnName))

# Or you can write dataframe = session.sql('<write your sql here>')

# Print a sample of the dataframe to standard output.

dataframe.show()

# Register the UDF

udf_lat_long_to_area = udf(lat_long_to_area, StringType())

# Apply the UDF to transform latitude and longitude to area name

dataframe = dataframe.withColumn('area_name', udf_lat_long_to_area(col(latitudeColumnName), col(longitudeColumnName)))

# Show the transformed dataframe

dataframe.show()

# Return value will appear in the Results tab.

return dataframeOptimizing Snowflake Data Transformation

Three factors come into play when it comes to optimizing Snowflake Data Transformation:

- Using virtual data warehouses to their full potential

- What method do you use to run your data transformation queries?

- Using a variety of data transformation techniques

Virtual Data Warehouses

In Snowflake Data Transformation, virtual data warehouses are a common way to scale out your data and analytics. Virtual Data Warehouse instances can also assist each analytics project’s workload to be optimized. Each use case should have its own Virtual Data Warehouse, according to Snowflake.

Users should also split “loading” and “execution” into different virtual Data Warehouses, with loading being an area where external data is imported and execution being areas for user query and reporting, according to Snowflake. Because of the differing demands for data loading and user queries, this separation is advised.

Snowflake Data Transformation queries have a different workload than other queries. Furthermore, the way you deploy your Snowflake Data Transformation queries has a significant impact on the workload. As a general guideline, segregate your Snowflake Data Transformation views/queries from your raw data in a different database. This gives you the freedom to employ several virtual data warehouses – one for your EL and another for your T – and allows you to tailor those virtual data warehouses to the computational requirements of each while lowering expenses.

Data Transformation Execution

Snowflake Data Transformation is greatly influenced by how your data transformation queries are run. In general, the choice is whether to materialize the data transformation views or run them “at query-time.”

Materialized views are another technique to boost query speed in workloads with a lot of the same queries. And Snowflake Data Transformation models are often created with this goal in mind – to build a dataset that can support a common, repeatable query pattern.

A typical recommended practice shared by Snowflake is to employ materialized views to increase query performance for frequent and repetitive queries. Storage is always less expensive than computing. Materialized views will increase your storage expenses slightly, but storage costs are so low that you’ll save far more in computing costs and query response time. As a result, materialize your models if you want them to enable repetitive query patterns.

The Snowflake Data Transformation views could be stored in the use case database, for example. When (a) the views are unique to the use case and (b) the views have unique workload or computation requirements, this solution works effectively. Another alternative is to keep all of your Snowflake Data Transformation views in a single database. When you have Snowflake Data Transformation views that are shared across multiple use cases and have similar workload characteristics, this solution works effectively.

Data Transformation Techniques

For improving your Snowflake Data Transformation views/queries, you can use a variety of strategies. These will have an effect on the performance. They can be summed up in four points:

- Wherever possible, keep things simple. Combine numerous requests into one if possible. It’s usually preferable to keep things simple.

- JOINs should be materialized. In Cloud Data Warehouses, JOINS are relatively expensive procedures. Materialize a Data Transformation view that JOINS numerous tables.

- Remove any data that you don’t require. Use projection in your Snowflake Data Transformation models to get rid of fields you don’t need, which saves you money on query time.

- Materialized pre-aggregation should be used. Create materialized aggregated views if there are frequently used aggregation values and dimensions.

Additional Resources on Snowflake Data Transformation

- Explore more on Data Transformation

- Read on Data Transformation Tools

- How to Transform Data in Power BI

- Data Transformation Best Practices

Which Method is Best for You?

- There are no right or wrong methods for transforming your data in Snowflake; it depends entirely on your data requirements and budget.

- While transformation during data loading reduces the volume of data, post-load transformations allow for complex querying. It is your decision to choose an approach that suits you the best.

- However, it’s important to note that transforming data can be a time-consuming and resource-intensive process, especially when dealing with large datasets or complex transformations. This is where a modern data integration platform like Hevo Data can be a game-changer.

Want to take Hevo for a spin? Sign Up for a 14-day free trial and experience the feature-rich Hevo suite first hand.

You can also have a look at our unbeatable pricing that will help you choose the right plan for your business needs!

Frequently Asked Questions

Q. Can you transform data in Snowflake?

Yes, Snowflake provides powerful data transformation capabilities through its SQL interface, allowing you to clean, enrich, and reshape your data as needed.

Q. What are some Snowflake features that help data transformation?

Some key Snowflake features that aid data transformation include SQL support for window functions, pivot/unpivot operations, regular expression matching, JSON handling, semi-structured data processing, and the ability to create user-defined functions and stored procedures.