Key takeaways

Syncing HubSpot data to BigQuery can be approached in several ways, each with its own trade-offs and benefits.

- Hevo offers a no-code, fully automated solution to move data from HubSpot to BigQuery. It handles both historical and real-time syncing, reducing manual work and errors.

- Using the HubSpot API provides precise control over data extraction and workflow customization, making it ideal for technical teams with complex data needs.

- The manual CSV export/import method is the simplest and works well for small or one-off transfers. Its benefit lies in minimal setup and low technical requirements.

- Custom integrations allow full flexibility using code, cloud functions, and APIs. While they offer maximum control, the trade-off is the need for engineering resources, ongoing monitoring, and maintenance.

Need a better way to handle all that customer and marketing data in HubSpot? Transfer it to BigQuery. Simple! Want to know how?

This article explains how to transfer your HubSpot data into Google BigQuery in several ways, from HubSpot’s API to an automated ETL tool like Hevo Data that handles the process for you.

Prerequisites

When moving your HubSpot data to BigQuery manually, make sure you have the following set up:

- An account with billing enabled on Google Cloud Platform.

- Admin access to a HubSpot account.

- The Google Cloud CLI installed.

- Your Google Cloud project connected to the Google Cloud CLI.

- The BigQuery API activated in that project.

- BigQuery Admin permissions, which you need before loading data into BigQuery.

These steps ensure you’re all set for a smooth data migration process!

Easily transfer your HubSpot data to BigQuery with Hevo’s intuitive platform. Our no-code solution makes data integration and migration seamless and efficient.

Why choose Hevo?

- Auto-Schema Mapping: Automatically handle schema mapping for a smooth data transfer.

- Flexible Transformations: Utilize drag-and-drop tools or custom Python scripts to prepare your data.

- Real-Time Sync: Ensure your BigQuery warehouse is always up-to-date with real-time data ingestion.

Join over 2000 satisfied customers, including companies like FairMoney and Pelago, who trust Hevo for their data integration needs. Check out why Hevo is rated 4.7 stars on Capterra.

Methods to move data from HubSpot to BigQuery

Method 1 – Using Hevo

Configuring HubSpot as a Source

- Navigate to Pipelines
  - Click PIPELINES in the navigation bar.
  - Click + CREATE PIPELINE in the Pipelines List View.

- Select HubSpot as Source
  - On the Select Source Type page, choose HubSpot.

- Connect Your HubSpot Account
  - On the Configure your HubSpot Account page, do one of the following:
    - Use an existing account: Select a previously configured account and click CONTINUE.
    - Add a new account:
      - Click + ADD HUBSPOT ACCOUNT.
      - Log in to your HubSpot account.
      - On the HubSpot Accounts page, select the account you want to sync.
      - Authorize Hevo Data Inc to access your HubSpot data.

- Configure Source Settings
  - On the Configure your HubSpot Source page, provide the following:
    - Pipeline Name: Enter a unique name (max 255 characters).
    - Authorized Account: Auto-filled with your HubSpot email.
    - HubSpot API Version: Choose the API version for ingestion (default: API v3).
    - Historical Sync Duration: Set how much past data to ingest (default: 3 months).
      - Tip: Select All Available Data to ingest everything since Jan 1, 1970.

- Continue Setup
  - Click CONTINUE.
  - Proceed to configure data ingestion and set up the Destination.

Configuring Google BigQuery as a Destination

- Go to Destinations
  - Click DESTINATIONS in the navigation bar.
  - In the list view, click + CREATE DESTINATION.

- Choose BigQuery
  - On the Add Destination page, select Google BigQuery as your Destination type.

- Configure BigQuery
  - On the Configure your Google BigQuery Destination page, fill in the following:
    - Destination Name: Provide a unique name (up to 255 characters).
    - Account: Select how you want to connect to BigQuery.
      - Once the Pipeline is created, you cannot switch account types.
      - Service Account: Upload the Service Account Key JSON from GCP.
      - User Account:
        - Click + ADD GOOGLE BIGQUERY ACCOUNT.
        - Sign in to your Google account.
        - Click Allow to authorize Hevo.
    - Project ID: Select the Project ID for your BigQuery instance.

- Dataset Options
  - Auto-Create Dataset Enabled: Hevo checks your permissions and automatically creates a dataset named hevo_dataset_<Project_ID>.
    - Example: Project ID westeros-153019 → hevo_dataset_westeros_153019.
    - Missing permissions? Update them in GCP and click Check Again.
  - Auto-Create Dataset Disabled: Pick an existing dataset from the drop-down or click + New Dataset to create one manually.

- GCS Bucket Options
  - Auto-Create GCS Bucket Enabled: Hevo creates a bucket named hevo_bucket_<Project_ID> (permissions required).
  - Auto-Create GCS Bucket Disabled: Select an existing bucket or click + New GCS Bucket to create one.

- Advanced Settings
  - Populate Loaded Timestamp: Adds a __hevo_loaded_at_ column showing when each event was loaded.
  - Sanitize Table/Column Names: Replaces spaces and special characters with underscores (_).
    - This setting cannot be changed later.

- Finalize Setup
  - Click TEST CONNECTION to confirm everything works.
  - Click SAVE & CONTINUE to finish the setup.

Manual CSV Export/Import

This is the most straightforward way to move your HubSpot data into BigQuery, but it can be time-consuming.

You start by exporting your HubSpot objects (contacts, deals, companies, or custom objects) as CSV files. Then, you manually upload these files into BigQuery using the web UI or command-line tools like bq load.

This method works well for small datasets or occasional, one-off transfers. However, it quickly becomes cumbersome if your data is updated frequently, because you have to repeat the export-import process each time, handle duplicates, and ensure consistency across multiple tables.
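To make the duplicate-handling problem concrete, here is a minimal Python sketch that merges several CSV exports and keeps only the most recent row per record before you upload the result. It assumes each export has a "Record ID" column identifying the object; the column name, the sample rows, and the helper names dedupe_rows and to_csv are illustrative assumptions, so adjust them to match your own export.

```python
import csv
import io


def dedupe_rows(csv_texts, key="Record ID"):
    """Merge several CSV exports, keeping the last row seen for each key.

    `key` is an assumed column name; change it to whatever uniquely
    identifies a record in your export.
    """
    latest = {}
    for text in csv_texts:
        for row in csv.DictReader(io.StringIO(text)):
            latest[row[key]] = row  # later exports overwrite earlier ones
    return list(latest.values())


def to_csv(rows):
    """Serialize the merged rows back to CSV text ready for upload."""
    if not rows:
        return ""
    out = io.StringIO()
    writer = csv.DictWriter(
        out, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


# Hypothetical sample data standing in for two exports taken on different days.
monday = "Record ID,Email\n1,a@example.com\n2,b@example.com\n"
tuesday = "Record ID,Email\n2,b.new@example.com\n3,c@example.com\n"

merged = dedupe_rows([monday, tuesday])
print(to_csv(merged))
```

Passing the exports in chronological order matters here, because the last occurrence of each record wins.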

Custom Integration

For teams with engineering resources, you can create a fully customized pipeline using code, cloud functions, and the HubSpot and BigQuery APIs.

This approach allows you to control the exact flow of data, transform fields on the fly, and implement automated schedules. You can also include error handling, logging, and incremental updates to optimize performance. While this method offers flexibility and scalability, it comes with trade-offs. You need to maintain the code, monitor the pipeline for failures, and periodically update it as APIs evolve. It’s ideal for organizations with complex data requirements or multiple sources to integrate, but overkill for smaller teams or simpler use cases.
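As a rough illustration of the incremental-update idea, the Python sketch below filters a batch of records down to those changed since the last successful run. It assumes each record carries an ISO 8601 updatedAt timestamp, as CRM object responses from HubSpot typically do; the function names and sample records are hypothetical, and a real pipeline would also need to persist the watermark between runs.

```python
from datetime import datetime, timezone


def parse_ts(value):
    """Parse an ISO 8601 timestamp such as '2024-05-01T12:00:00.000Z'."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def select_changed(records, watermark):
    """Return the records updated after the watermark and the new watermark."""
    changed = [r for r in records if parse_ts(r["updatedAt"]) > watermark]
    new_watermark = max(
        (parse_ts(r["updatedAt"]) for r in changed), default=watermark
    )
    return changed, new_watermark


# Hypothetical records; only the second changed after the last run.
records = [
    {"id": "1", "updatedAt": "2024-05-01T10:00:00.000Z"},
    {"id": "2", "updatedAt": "2024-05-02T09:30:00.000Z"},
]
last_run = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)

changed, new_watermark = select_changed(records, last_run)
print([r["id"] for r in changed], new_watermark.isoformat())
```

Loading only the changed records keeps each run small, which is one way to stay well within API limits as your data grows.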

Method 2: How to move data from HubSpot to BigQuery Using HubSpot Private App

Step 1: Creating a Private App

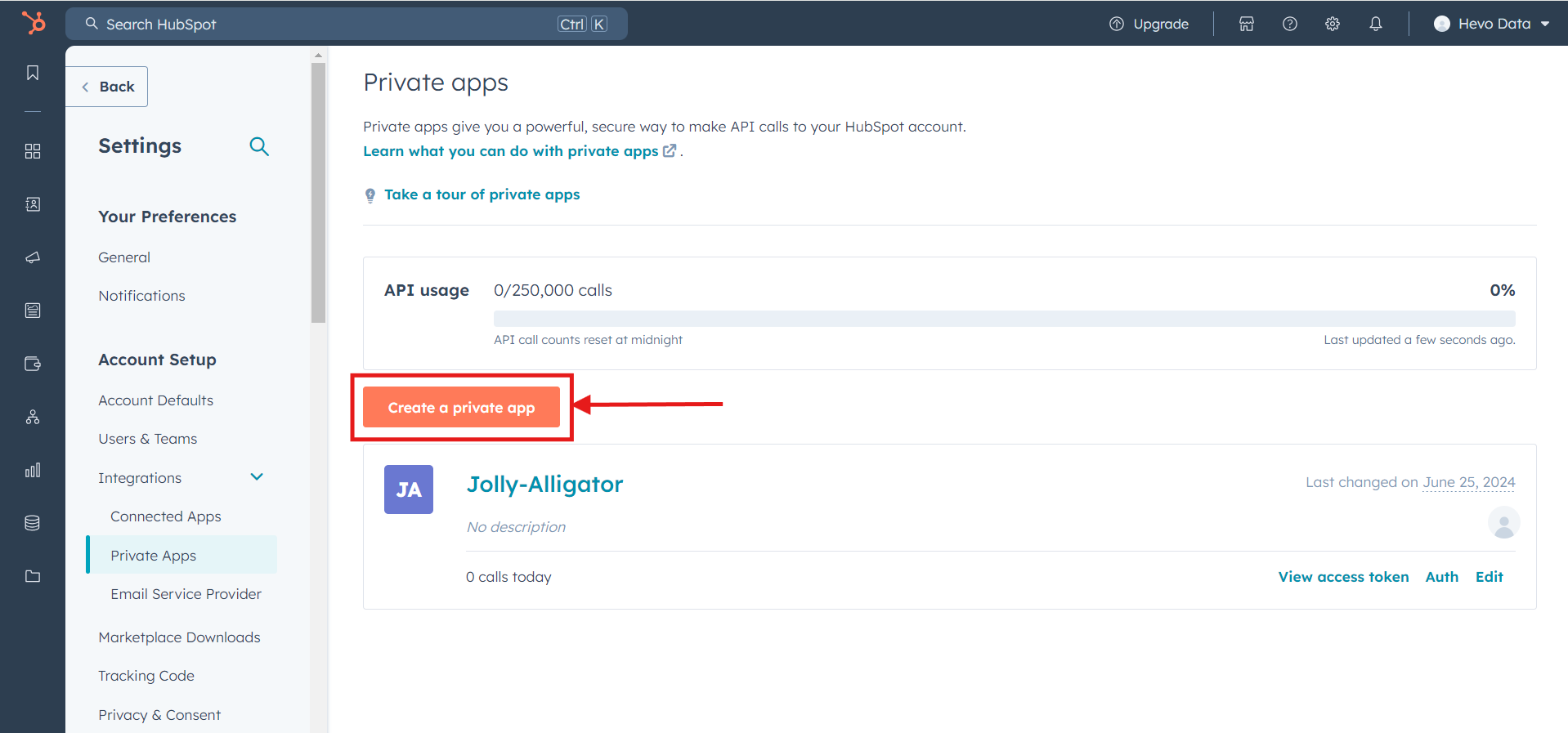

Go to the Settings of your HubSpot account and select Integrations → Private Apps. Click on Create a Private App.

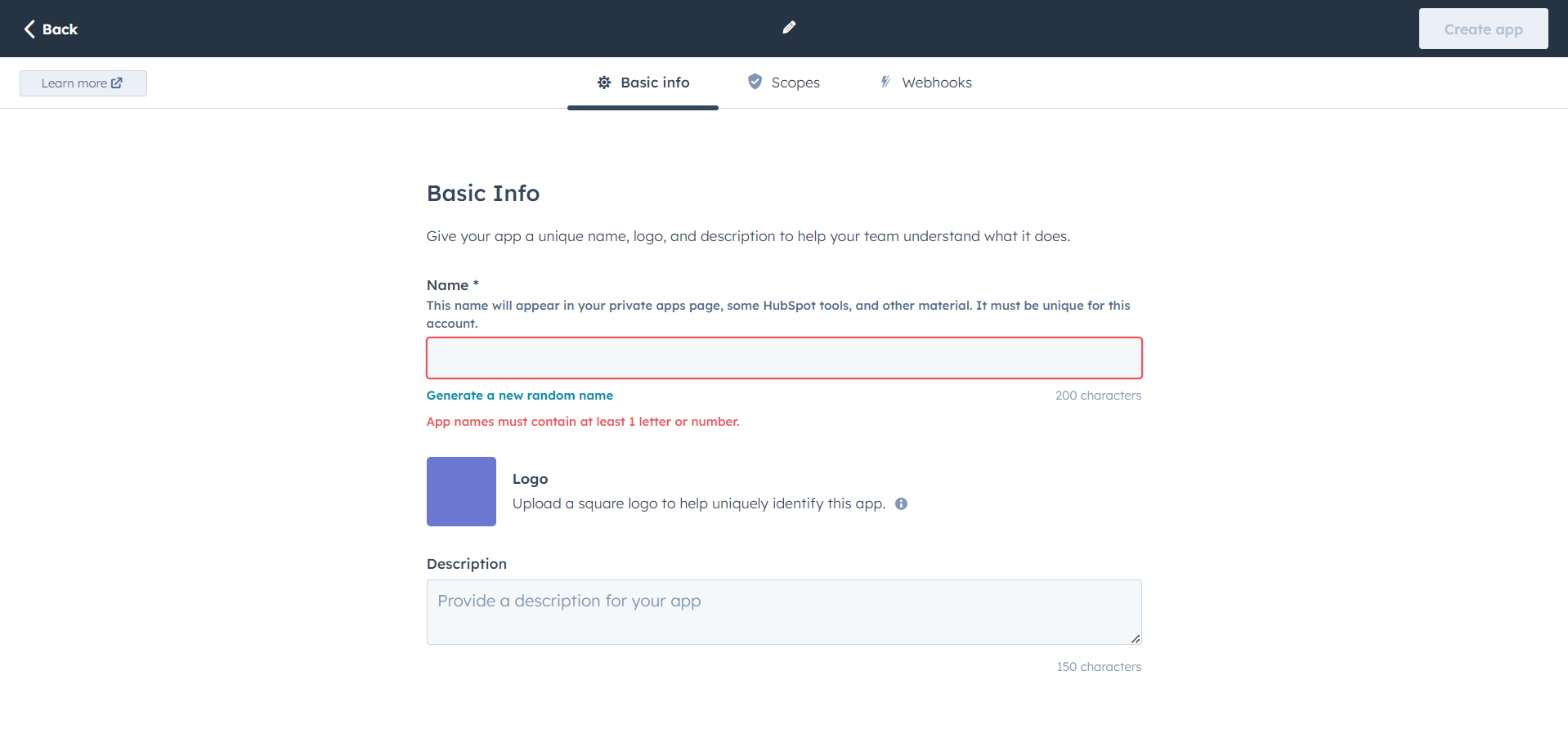

On the Basic Info page, enter your app name or generate a random one, and upload a logo by hovering over the placeholder (or use the initials of your app name as the default logo). A description is optional, but a relevant one is recommended.

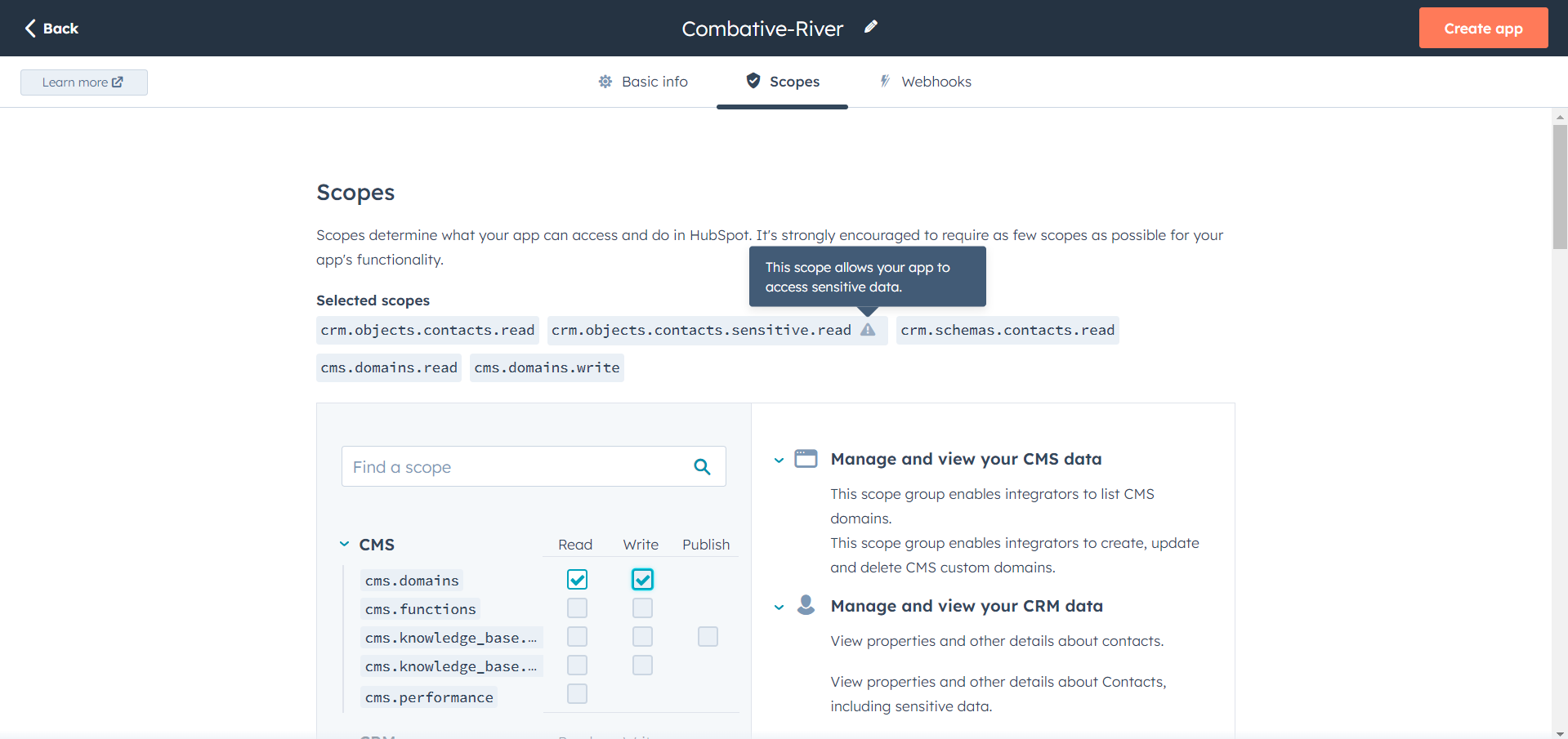

Click on the Scopes tab next to the Basic Info button to configure permissions for Read, Write, or both. For example, if you only want to transfer contact information from your HubSpot data into BigQuery, select only the Read configuration, as shown in the attached screenshot.

Note: If you select scopes that expose sensitive data, HubSpot also displays a warning message, as shown below.

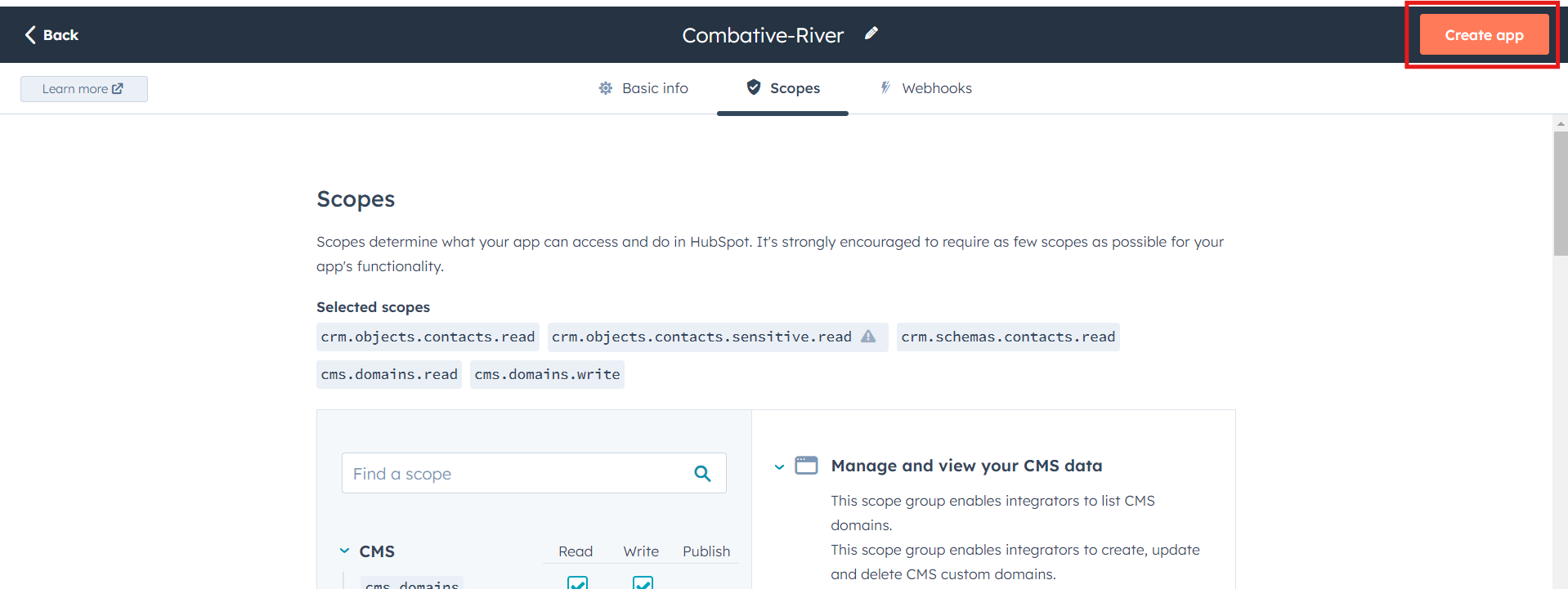

Once you have configured your permissions, click the Create App button at the top right.

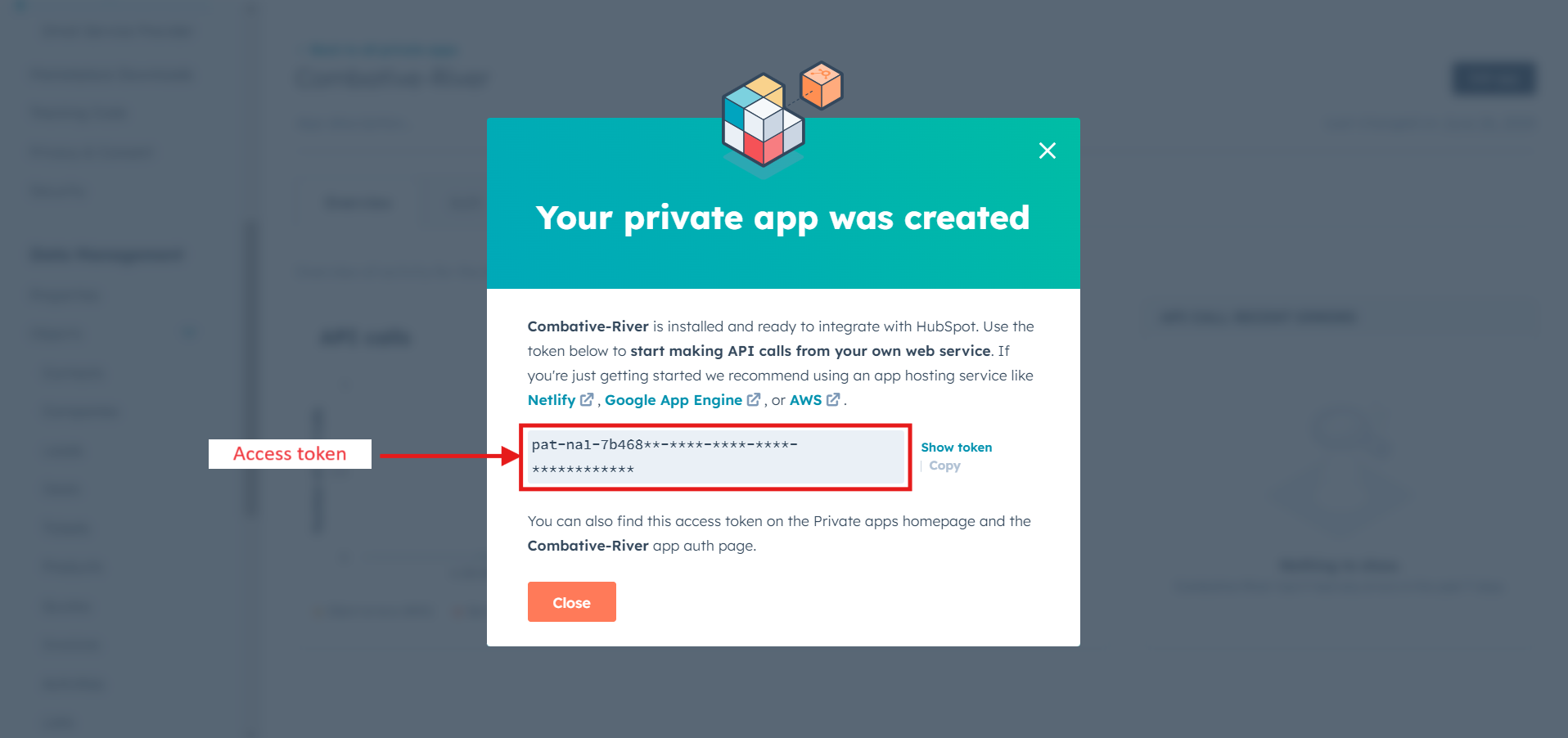

After selecting the Continue Creating button, a prompt screen with your Access token will appear.

Once you click on Show Token, you can copy your token.

Note: Keep your access token handy; you will need it for the next step. Your Client Secret is not needed.

Step 2: Making API Calls with your Access Token

Use curl or Axios (Node.js) to call:

https://api.hubapi.com/crm/v3/objects/contacts

Add headers:

Authorization: Bearer YOUR_TOKEN
Content-Type: application/json
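If you prefer Python over curl or Axios, the same call can be sketched with the standard library alone. This is an illustrative sketch, not HubSpot's official client: it assumes the contacts endpoint returns a results array plus a paging.next.after cursor for the next page, and the function names are made up for this example. The fetch step is passed in as a parameter so you can try the paging logic without touching the network.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "https://api.hubapi.com/crm/v3/objects/contacts"


def build_request(token, after=None, limit=100):
    """Build a GET request for one page of contacts."""
    params = {"limit": str(limit)}
    if after is not None:
        params["after"] = after  # cursor returned by the previous page
    url = BASE_URL + "?" + urllib.parse.urlencode(params)
    headers = {
        "Authorization": "Bearer " + token,
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, headers=headers)


def fetch_page(request):
    """Send the request and decode the JSON body (makes a network call)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def iter_contacts(token, fetch=fetch_page):
    """Yield every contact, following the paging cursor until it runs out."""
    after = None
    while True:
        page = fetch(build_request(token, after))
        yield from page.get("results", [])
        after = page.get("paging", {}).get("next", {}).get("after")
        if after is None:
            break
```

Calling list(iter_contacts("YOUR_TOKEN")) would then collect all contacts, using the access token you copied in Step 1.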

Step 3: Create a BigQuery Dataset

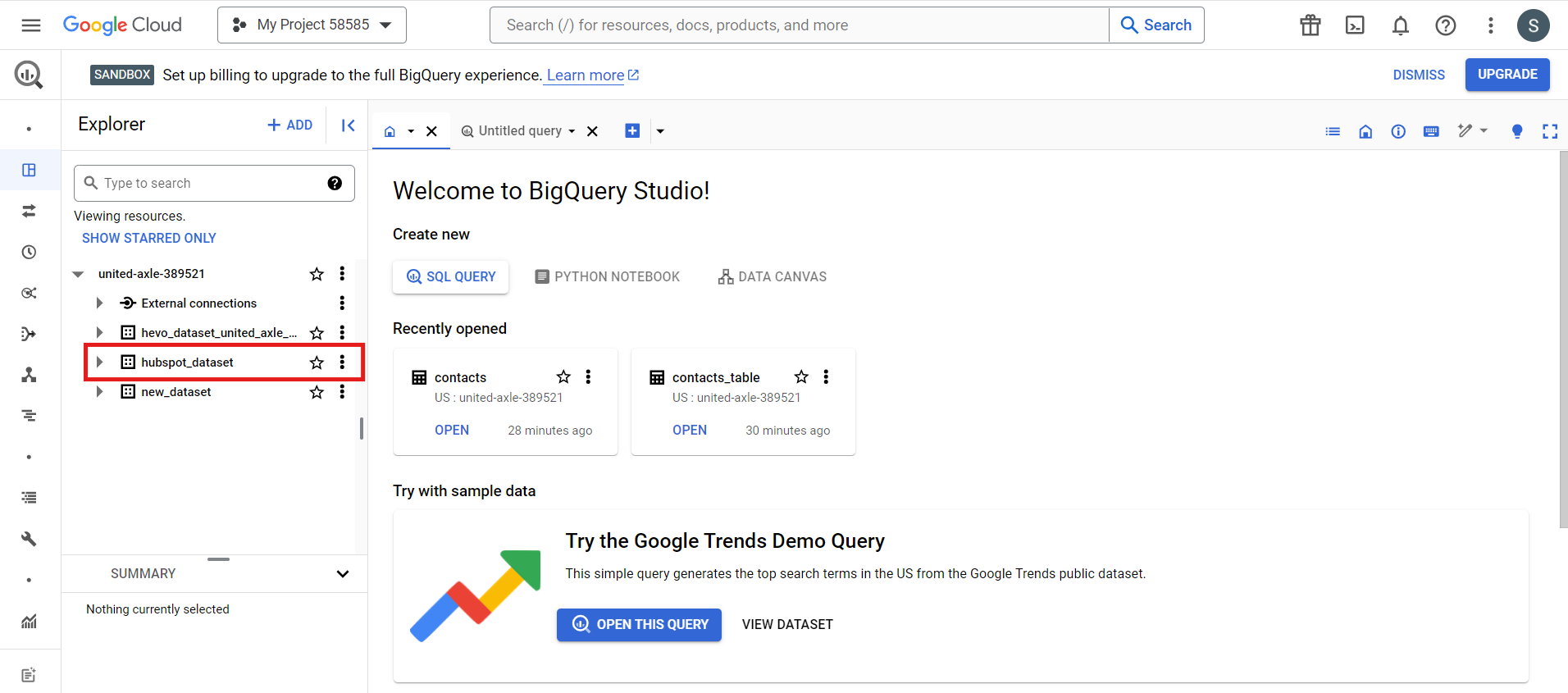

From your Google Cloud command line, run this command:

bq mk hubspot_dataset

hubspot_dataset is just a name that I have chosen. You can change it accordingly. The changes will automatically be reflected in your Google Cloud console. Also, a message “Dataset ‘united-axle-389521:hubspot_dataset’ successfully created.” will be displayed in your CLI.

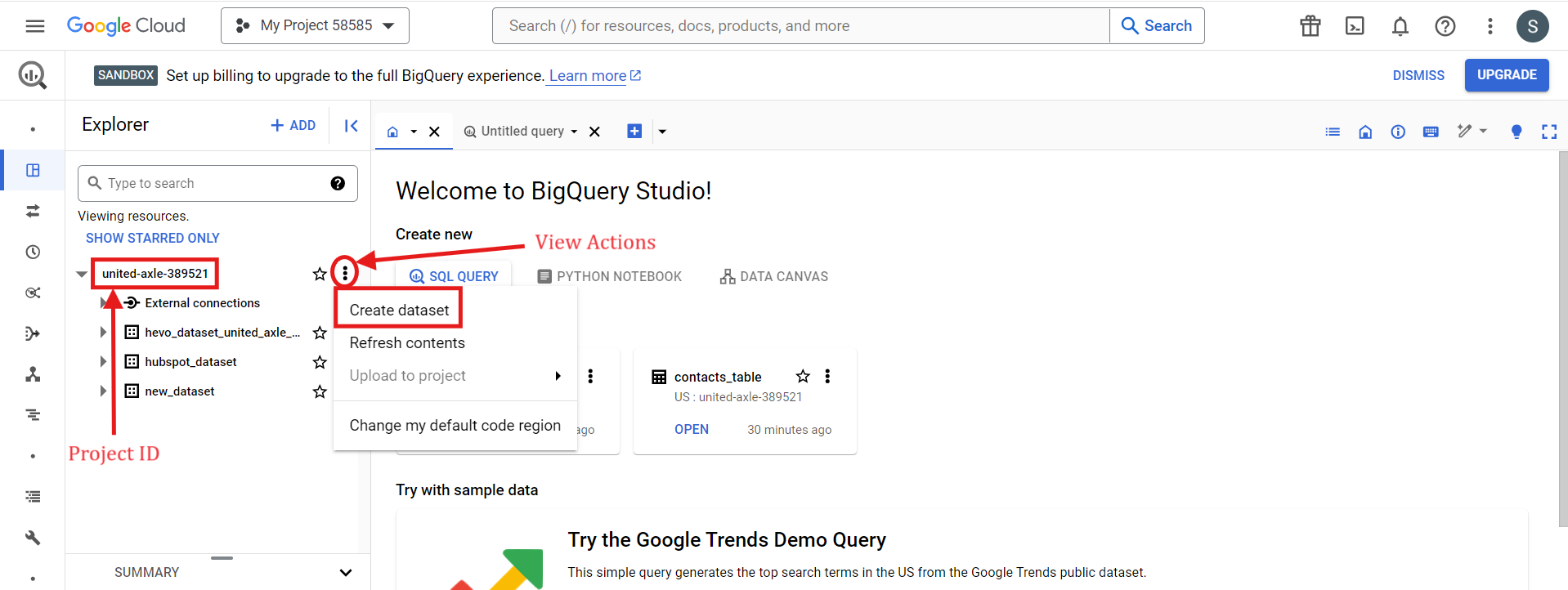

NOTE: Instead of using the Google command line, you can also create a dataset from the console. Just hover over View Actions on your project ID. Once you click it, you will see a Create Dataset option.

Step 4: Create an Empty Table

Run the following command in your Google CLI:

bq mk \
--table \
--expiration 86400 \
--description "Contacts table" \
--label organization:development \
hubspot_dataset.contacts_table

Note that --expiration is given in seconds, so 86400 makes the table expire after 24 hours; omit the flag if the table should persist.

After your table is successfully created, a message “Table ‘united-axle-389521:hubspot_dataset.contacts_table’ successfully created” will be displayed. The changes will also be reflected in the cloud console.

NOTE: Alternatively, you can create a table from your BigQuery Console. Once your dataset has been created, click on View Actions and select Create Table.

Step 5: Adding Data to your Empty Table

Convert the HubSpot response (e.g., JSON) into newline-delimited JSON and define the schema in contacts_schema.json. Then load the data using:

bq load \
--source_format=NEWLINE_DELIMITED_JSON \
hubspot_dataset.contacts_table \
./contacts_data.json \
./contacts_schema.json

See the documentation’s “DataTypes” and “Introduction to loading data” pages for more details.
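The conversion step can be sketched in a few lines of Python. This is a minimal example under assumptions: it expects a response with a results array whose items hold an id and a properties object, and it picks four illustrative columns (email, firstname, lastname, updatedAt). The field selection, the sample response, and the helper names are hypothetical, so extend them to match the properties you actually request.

```python
import json


def flatten_contact(record):
    """Turn one contact from the API response into a flat row."""
    props = record.get("properties", {})
    return {
        "id": record["id"],
        "email": props.get("email"),
        "firstname": props.get("firstname"),
        "lastname": props.get("lastname"),
        "updated_at": record.get("updatedAt"),
    }


def to_ndjson(response):
    """Serialize the 'results' array as one JSON object per line."""
    return "".join(
        json.dumps(flatten_contact(r)) + "\n" for r in response["results"]
    )


# Schema in the JSON format that bq load accepts; every column is a STRING
# here to keep the sketch simple.
SCHEMA = [
    {"name": "id", "type": "STRING", "mode": "REQUIRED"},
    {"name": "email", "type": "STRING", "mode": "NULLABLE"},
    {"name": "firstname", "type": "STRING", "mode": "NULLABLE"},
    {"name": "lastname", "type": "STRING", "mode": "NULLABLE"},
    {"name": "updated_at", "type": "STRING", "mode": "NULLABLE"},
]


def write_files(response, data_path="contacts_data.json",
                schema_path="contacts_schema.json"):
    """Write the data and schema files that the bq load command reads."""
    with open(data_path, "w", encoding="utf-8") as f:
        f.write(to_ndjson(response))
    with open(schema_path, "w", encoding="utf-8") as f:
        json.dump(SCHEMA, f, indent=2)


# Hypothetical response used only to show the output shape.
sample = {
    "results": [
        {"id": "1", "properties": {"email": "a@example.com"},
         "updatedAt": "2024-05-01T10:00:00.000Z"},
        {"id": "2", "properties": {"email": "b@example.com",
                                   "firstname": "Bea"}},
    ]
}
print(to_ndjson(sample), end="")
```

Calling write_files(sample) would produce the two files named in the load command, with missing properties written as null.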

Step 6: Scheduling Recurring Load Jobs

First, create a directory for your scripts and an empty backup script:

$ sudo mkdir -p /bin/scripts/ && sudo touch /bin/scripts/backup.sh

Next, add the following content to the backup.sh file and save it:

#!/bin/bash
bq load --autodetect --replace --source_format=NEWLINE_DELIMITED_JSON hubspot_dataset.contacts_table ./contacts_data.json

Because cron does not run the script from your working directory, replace ./contacts_data.json with the absolute path to your data file.

Let’s edit the crontab to schedule this script. From your CLI, run:

$ crontab -e

You’ll be prompted to edit a file where you can schedule tasks. Add this line to schedule the job to run at 6 PM daily:

0 18 * * * /bin/scripts/backup.sh

Finally, make the backup.sh file executable:

$ sudo chmod +x /bin/scripts/backup.sh

And there you go! These steps ensure that cron runs your backup.sh script daily at 6 PM, reloading contacts_data.json into BigQuery on each run.

Limitations of the Manual Method to Move Data from HubSpot to BigQuery

- HubSpot APIs have a rate limit of 250,000 daily calls that resets every midnight.

- bq load does not accept wildcards for local files, so you must load each file individually.

- Cron jobs won’t alert you if something goes wrong.

- You need to set up separate schemas for each API endpoint in BigQuery.

- Not ideal for real-time data needs.

- Extra code is needed for data cleaning and transformation.
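One way to soften the missing-alert limitation is to wrap the load command in a small script that reports failures. The Python sketch below is an assumption-laden illustration: run_and_report is a hypothetical helper, and the notify callback simply logs an error by default, so you would swap in email, chat, or paging as needed. The demo uses a deliberately failing Python command in place of bq load so it runs anywhere.

```python
import logging
import subprocess
import sys

logging.basicConfig(
    level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s"
)


def run_and_report(command, notify=logging.error):
    """Run a command and call notify with details if it exits non-zero."""
    result = subprocess.run(command, capture_output=True, text=True)
    if result.returncode != 0:
        notify(
            "Load job failed (exit %d): %s"
            % (result.returncode, result.stderr.strip())
        )
    return result.returncode


# Stand-in for the real load command: a child process that always fails.
failing = [
    sys.executable,
    "-c",
    "import sys; sys.stderr.write('boom'); sys.exit(3)",
]
alerts = []
exit_code = run_and_report(failing, notify=alerts.append)
print(exit_code, alerts)
```

In a scheduled job you would pass the bq load arguments as the command list and point cron at this wrapper instead of calling bq directly.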

Use Cases to transfer your data from HubSpot to BigQuery

BigQuery’s flexible pricing model can lead to major cost savings compared to having an on-premise data warehouse that you pay to maintain.

Moving HubSpot data to BigQuery creates a single source of truth that aggregates information to deliver accurate analysis. Therefore, you can promptly understand customers’ behavior and improve your decision-making concerning business operations.

BigQuery can manage huge amounts of data with ease. As your business expands and the volume of data grows, BigQuery scales with it.

BigQuery, built on Google Cloud, has robust security features like auditing, access controls, and data encryption. These help keep user data secure and compliant with regulations.

Conclusion

HubSpot is a key part of many businesses’ tech stack, enhancing customer relationships and communication strategies; your business growth potential skyrockets when you combine HubSpot data with other sources. Moving your data lets you enjoy a single source of truth, which can significantly boost your business growth.

We’ve discussed two methods to move data. The manual process requires a lot of configuration and effort. Hevo Data, by contrast, does all the heavy lifting for you with a simple, intuitive process. Hevo Data helps you integrate data from multiple sources like HubSpot and load it into BigQuery for real-time analysis. It’s user-friendly, reliable, and secure, and makes data transfer hassle-free.

Sign up for a 14-day free trial with Hevo and connect HubSpot to BigQuery in minutes. Also, check out Hevo’s unbeatable pricing or get a custom quote for your requirements.

FAQs

Q1. How often can I sync my HubSpot data with BigQuery?

You can sync your HubSpot data with BigQuery as often as needed. With tools such as Hevo Data, you can set up a real-time process to keep your data up-to-date.

Q2. What are the costs associated with this integration?

The costs for integrating HubSpot with BigQuery depend on the tool you use and the amount of data you’re transferring. Hevo Data offers a flexible pricing model, and its pricing details can help you estimate your costs. BigQuery costs are based on the amount of data stored and processed.

Q3. How secure is the data transfer process?

The data transfer process is highly secure. Hevo Data protects your data throughout the transfer with its fault-tolerant architecture, access controls, data encryption, and compliance with industry standards.

Q4. What support options are available if I encounter issues?

Hevo Data offers extensive support options, including detailed documentation, a dedicated support team through our Chat support available 24×5, and community forums. If you run into any issues, you can easily reach out for assistance to ensure a smooth data integration process.