Unlock the full potential of your Azure SQL data by integrating it seamlessly with BigQuery. With Hevo’s automated pipeline, get data flowing effortlessly—watch our 1-minute demo below to see it in action!

Integrating data from a database engine like Azure SQL to a data warehouse like Google BigQuery can benefit you substantially. It can enable you to perform advanced analytics on your data, allowing you to extract essential patterns that can help maximize profits and customer satisfaction.

BigQuery has built-in artificial intelligence features that can help you simplify analytics workflow. With it, you get the flexibility to define functions to manage and transform data.

This article discusses three major methods for integrating data from Azure SQL to BigQuery and mentions the use cases of this integration.

Why Integrate Azure SQL to BigQuery?

There are multiple reasons to bring Azure SQL data into BigQuery. Here are some of the key advantages of performing this integration:

- BigQuery uses Gemini to provide AI-powered code assistance, recommendations, and visual data preparation features, improving productivity while keeping costs under control.

- BigQuery’s Serverless Apache Spark feature enables you to run Spark code in BigQuery Studio, eliminating the need to export data and manage infrastructure.

- BigLake, a feature of BigQuery, lets you derive insights from images, audio files, and documents with the help of AI models, including Vertex AI’s vision and speech-to-text APIs.

- Method 1: Replicating Data from Azure SQL to BigQuery Using CSV File Transfer

Export your data as CSV files from Azure SQL and manually import them into BigQuery.

- Method 2: Move Data from Azure SQL to BigQuery Using SQL Server

SQL Server can be used as an intermediary to transfer data from Azure SQL to BigQuery.

- Method 3: Connect Azure SQL Data to BigQuery Using Hevo

Hevo’s no-code platform ensures a seamless, automated data flow from Azure SQL to BigQuery with real-time data ingestion and pre/post-load transformations.

An Overview of Azure SQL

Azure SQL is a family of managed products that use the SQL Server database engine in the Azure cloud. It consists of Azure SQL Database, Azure SQL Managed Instance, and SQL Server on Azure VMs. Azure SQL offers platform-as-a-service (PaaS) and infrastructure-as-a-service (IaaS) options with availability SLAs of 99.99% and 99.95%, respectively.

It has multi-layered security options with built-in security controls, including networking, authentication, T-SQL, and key management.

An Overview of Google BigQuery

Google BigQuery is a fully managed cloud data warehousing platform that helps you generate valuable insights from your data. Its AI-enabled features help users run complex queries with less technical expertise. BigQuery Studio lets you run Apache Spark code to perform advanced analytics on your data. It supports open table formats, allowing you to use open-source tools while enjoying the benefits of an integrated data platform.

BigQuery ML is a built-in machine learning feature that allows you to run ML models on your data and generate valuable insights cost-efficiently. As a new customer, you can get $300 in free credits to try BigQuery. Refer to pricing to learn more about the associated costs.

Methods of Integrating Data from Azure SQL to BigQuery

Method 1: Replicating Data from Azure SQL to BigQuery Using CSV File Transfer

Step 1: Exporting Data from Azure SQL Using BCP Utility

To export data from the Azure SQL database, run the following command in the Windows command prompt, replacing the <placeholders> with your own values:

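bcp <schema.object_name> out <./data/file.csv> -t "," -w -S <server-name.database.windows.net> -U <username> -d <database>

This command copies your data from Azure SQL to a CSV file on your local machine. The -t "," option sets the comma as the field terminator, and -w writes the output as Unicode (UTF-16) text.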
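If you would rather script this export than type it by hand, the command can be assembled and run from Python. The following is a minimal sketch, assuming the bcp utility is installed and available on your PATH. The function names and the sample table, server, database, and user values are hypothetical, and only the flags shown in the command above are used.

```python
import subprocess


def build_bcp_command(table, out_file, server, database, username):
    """Return the bcp export command as an argument list.

    Uses only the flags from the command above: -t (field terminator),
    -w (Unicode output), -S (server), -U (user), -d (database).
    """
    return [
        "bcp", table, "out", out_file,
        "-t", ",",
        "-w",
        "-S", server,
        "-U", username,
        "-d", database,
    ]


def export_table(table, out_file, server, database, username):
    """Run the export; bcp prompts for the password because none is passed."""
    cmd = build_bcp_command(table, out_file, server, database, username)
    subprocess.run(cmd, check=True)  # raises CalledProcessError on failure


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(" ".join(build_bcp_command(
        "dbo.orders", "./data/orders.csv",
        "myserver.database.windows.net", "salesdb", "etl_user")))
```

Passing the arguments as a list avoids shell quoting issues with the comma terminator.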

Step 2: Importing CSV File into Google BigQuery

This section highlights the steps to import CSV files into Google BigQuery using the Google Cloud console. Follow the steps below:

- Navigate to the Google BigQuery page in the Google Cloud console.

- From the Explorer panel, select your project and a dataset.

- Expand the Actions option and click Open.

- In the details panel, click Create table.

- On the Create table page, go to the Source section:

  - For Create table from, select Upload.

  - For Select file, click Browse.

  - Locate the CSV file and click Open.

  - For File format, select CSV.

- In the Destination section of the Create table page:

  - Specify the Project, Dataset, and Table fields.

  - Ensure the Table type is Native table.

- Under Schema, enter the schema definition.

- Select any applicable items under Advanced options.

- Finally, click Create table.

See Load data from local files to learn more about importing data directly into BigQuery.
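The console steps above can also be approximated in code. The sketch below assumes the google-cloud-bigquery Python client library is installed, that Application Default Credentials are configured, and that the CSV file is UTF-8 encoded. It uses schema auto-detection in place of a manually entered schema, which is a substitution rather than an exact match for the console flow. The function names and the project, dataset, and table values are hypothetical.

```python
def build_table_id(project, dataset, table):
    """Return the fully qualified table ID in project.dataset.table form."""
    return f"{project}.{dataset}.{table}"


def load_csv(csv_path, table_id):
    """Load a local CSV file into a native BigQuery table; return row count."""
    # Imported here so the helper above works without the client library.
    from google.cloud import bigquery

    client = bigquery.Client()  # uses Application Default Credentials
    job_config = bigquery.LoadJobConfig(
        source_format=bigquery.SourceFormat.CSV,
        autodetect=True,  # infer the schema instead of entering it by hand
    )
    with open(csv_path, "rb") as source_file:
        job = client.load_table_from_file(
            source_file, table_id, job_config=job_config
        )
    job.result()  # block until the load job finishes
    return client.get_table(table_id).num_rows


if __name__ == "__main__":
    # Hypothetical identifiers, for illustration only.
    print(build_table_id("my-project", "sales", "orders"))
```

Calling load_csv("./data/file.csv", build_table_id("my-project", "sales", "orders")) would start a load job against your own project, so try it on a test dataset first.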

Limitations of Using CSV File Transfer Method

- Lack of Automation: This method requires you to transfer data from Azure SQL to BigQuery manually. You must repeat these steps every time you want changes made to the source data to be reflected in the destination.

- File Size Limit: When loading data from a local machine, the file size cannot exceed 100 megabytes. To load larger files, you need to upload your data to Google Cloud Storage (GCS) first.
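A small check before each upload can tell you which route a file needs. This is a sketch only: the function name and route labels are hypothetical, and treating 100 megabytes as 100 × 1024 × 1024 bytes is an assumption about how the limit is counted.

```python
import os

# Assumption: the 100 MB limit is counted as 100 * 1024 * 1024 bytes.
LOCAL_UPLOAD_LIMIT_BYTES = 100 * 1024 * 1024


def choose_load_route(size_bytes, limit=LOCAL_UPLOAD_LIMIT_BYTES):
    """Return "local" if the file fits a local upload, otherwise "gcs"."""
    return "local" if size_bytes <= limit else "gcs"


def route_for_file(csv_path):
    """Look up the file size on disk and pick the load route."""
    return choose_load_route(os.path.getsize(csv_path))
```

For example, route_for_file("./data/file.csv") returns "gcs" for any export larger than the limit, which signals that the file should be staged in Google Cloud Storage first.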

Method 2: Move Data from Azure SQL to BigQuery Using SQL Server

This section discusses moving data from Azure SQL to BigQuery using SQL Server as an intermediary. First, move your data from Azure SQL to a SQL Server instance. Then, before transferring data from SQL Server to BigQuery, make sure you satisfy all the prerequisites.

Prerequisites:

- You must have a Google Cloud account.

- On the project selector page of the Google Cloud console, create a Google Cloud project and verify the billing status for the project.

- Enable the BigQuery, Cloud Data Fusion, and Cloud Storage APIs, and then create a public Cloud Data Fusion instance.

- Set up VPC network peering if you choose to create a private instance instead.

- You must have the required permissions to perform this integration. Follow IAM Access Control to grant them.

After satisfying the prerequisites, you can follow these steps:

- Enable change data capture (CDC) in your SQL Server database.

- Create and run a Cloud Data Fusion replication job:

  - Upload the JDBC driver through the Cloud Data Fusion interface.

  - Create the job, start it, and monitor it.

- Finally, view the results in BigQuery in the Google Cloud console.

To learn more about the steps involved in this method, visit the SQL Server to BigQuery page.
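For the first step, enabling CDC, SQL Server provides the sys.sp_cdc_enable_db and sys.sp_cdc_enable_table stored procedures. The sketch below only generates the T-SQL statements so you can review them before running them in your own SQL client. The function name and the sample schema and table are hypothetical, and passing NULL for @role_name (no gating role) is an assumption you may want to change.

```python
def cdc_enable_statements(schema, table):
    """Return T-SQL that enables CDC for the current database and one table.

    Names are interpolated directly into the text, so pass trusted values only.
    """
    return [
        # Run once per database, in the context of that database.
        "EXEC sys.sp_cdc_enable_db;",
        # Run once for each table you want to replicate.
        (
            "EXEC sys.sp_cdc_enable_table "
            f"@source_schema = N'{schema}', "
            f"@source_name = N'{table}', "
            "@role_name = NULL;"
        ),
    ]


if __name__ == "__main__":
    # Hypothetical schema and table, for illustration only.
    for statement in cdc_enable_statements("dbo", "orders"):
        print(statement)
```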

Limitations of Using SQL Server Method

Although moving data from Azure SQL to BigQuery using SQL Server as a mediator is efficient, this method has limitations.

- Time Consumption: This method can take a lot of time to follow, as it requires moving data through multiple steps. As SQL Server acts as a mediator, there is no direct data transfer from Azure SQL to BigQuery.

- Technical Complexity: This method is technically complex to follow, increasing the chances of encountering errors. Additional technical knowledge is required to perform the steps.

Method 3: Connect Azure SQL Data to BigQuery Using Hevo

Hevo is a no-code, real-time ELT data pipeline platform that automates your data integration process. It provides a cost-effective way to develop data pipelines that integrate data from multiple sources to the destination of your choice. Hevo Data offers over 150 data source connectors.

Here are some of the features provided by Hevo:

- Data Transformation: It streamlines analytical tasks with its data transformation features. Hevo provides Python-based and drag-and-drop transformations that can enable you to clean and prepare data.

- Incremental Data Load: Hevo transfers only modified data in real time, ensuring efficient bandwidth utilization on both the source and the destination.

- Automated Schema Mapping: It automates the schema management process by detecting the incoming data and replicating it according to the replication schema. Hevo allows you to choose from Full & Incremental Mappings according to your specific data replication needs.

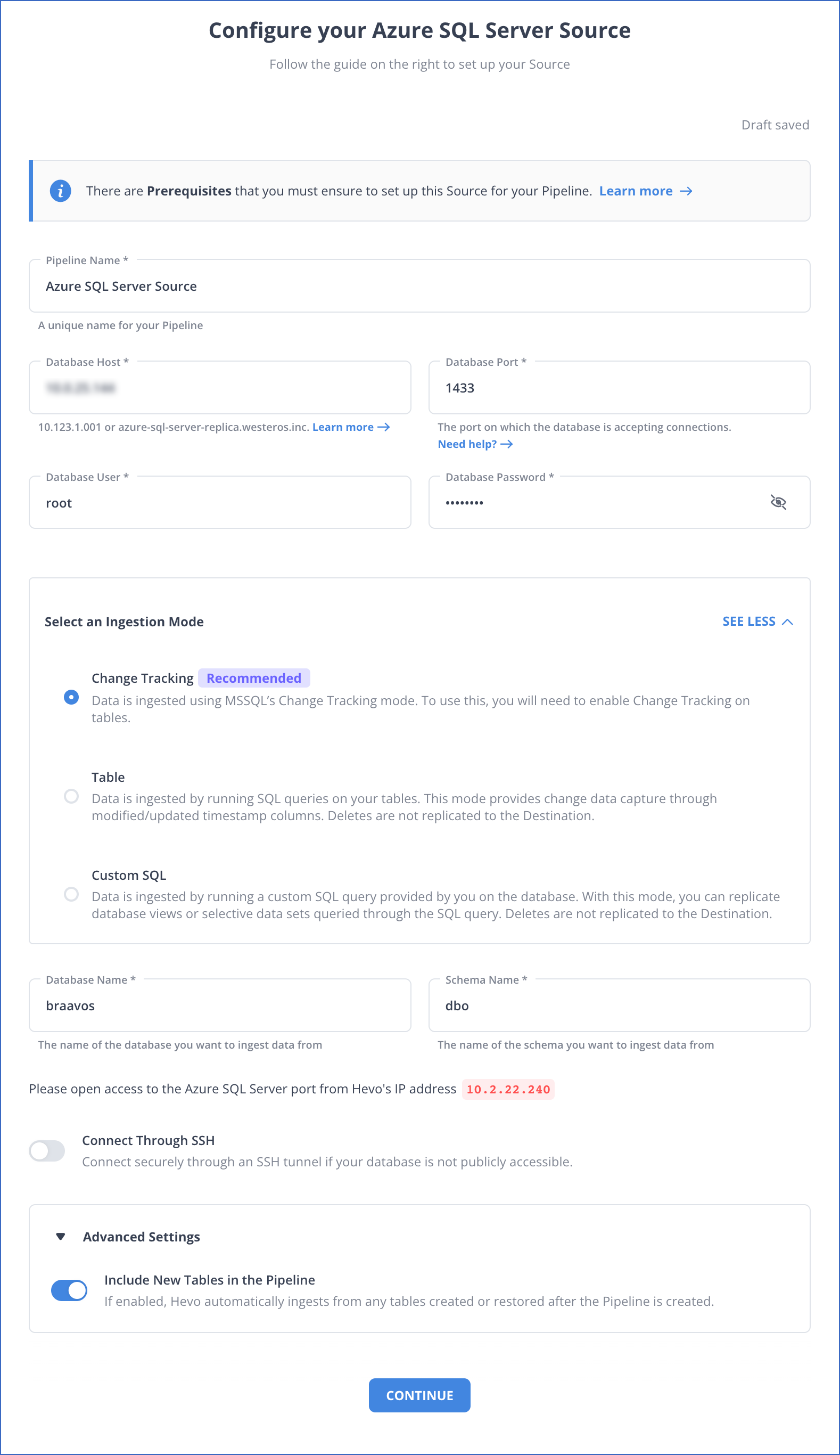

Step 1: Configure Azure SQL as Source

Prerequisites:

- You must be running MS SQL Server version 2008 or higher.

- Whitelist Hevo IP addresses for your region.

- If the Pipeline Mode is Change Tracking or Table and the Query mode is Change Tracking, you must grant the CHANGE TRACKING and ALTER DATABASE privileges to the database user.

- You must grant the database user SELECT and VIEW CHANGE TRACKING privileges.

- Retrieve the port number and hostname of the Source instance.

- You must have a Team Administrator, Pipeline Administrator, or Team Collaborator role in Hevo.
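The change tracking and privilege prerequisites above map to standard T-SQL statements. The sketch below generates them as text for review and does not execute anything. The function name, the sample database, schema, table, and user, and the three-day retention period are all assumptions for illustration, and Hevo's documentation may ask for a slightly different set of grants.

```python
def change_tracking_statements(database, schema, table, user):
    """Return T-SQL to enable change tracking and grant read access.

    Names are interpolated directly into the text, so pass trusted values only.
    """
    return [
        # Enable change tracking for the database (retention is an assumption).
        f"ALTER DATABASE [{database}] SET CHANGE_TRACKING = ON "
        "(CHANGE_RETENTION = 3 DAYS, AUTO_CLEANUP = ON);",
        # Enable change tracking for each table to be replicated.
        f"ALTER TABLE [{schema}].[{table}] ENABLE CHANGE_TRACKING;",
        # Privileges for the database user that the pipeline connects as.
        f"GRANT SELECT ON SCHEMA::[{schema}] TO [{user}];",
        f"GRANT VIEW CHANGE TRACKING ON SCHEMA::[{schema}] TO [{user}];",
    ]


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    for statement in change_tracking_statements(
            "salesdb", "dbo", "orders", "hevo_user"):
        print(statement)
```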

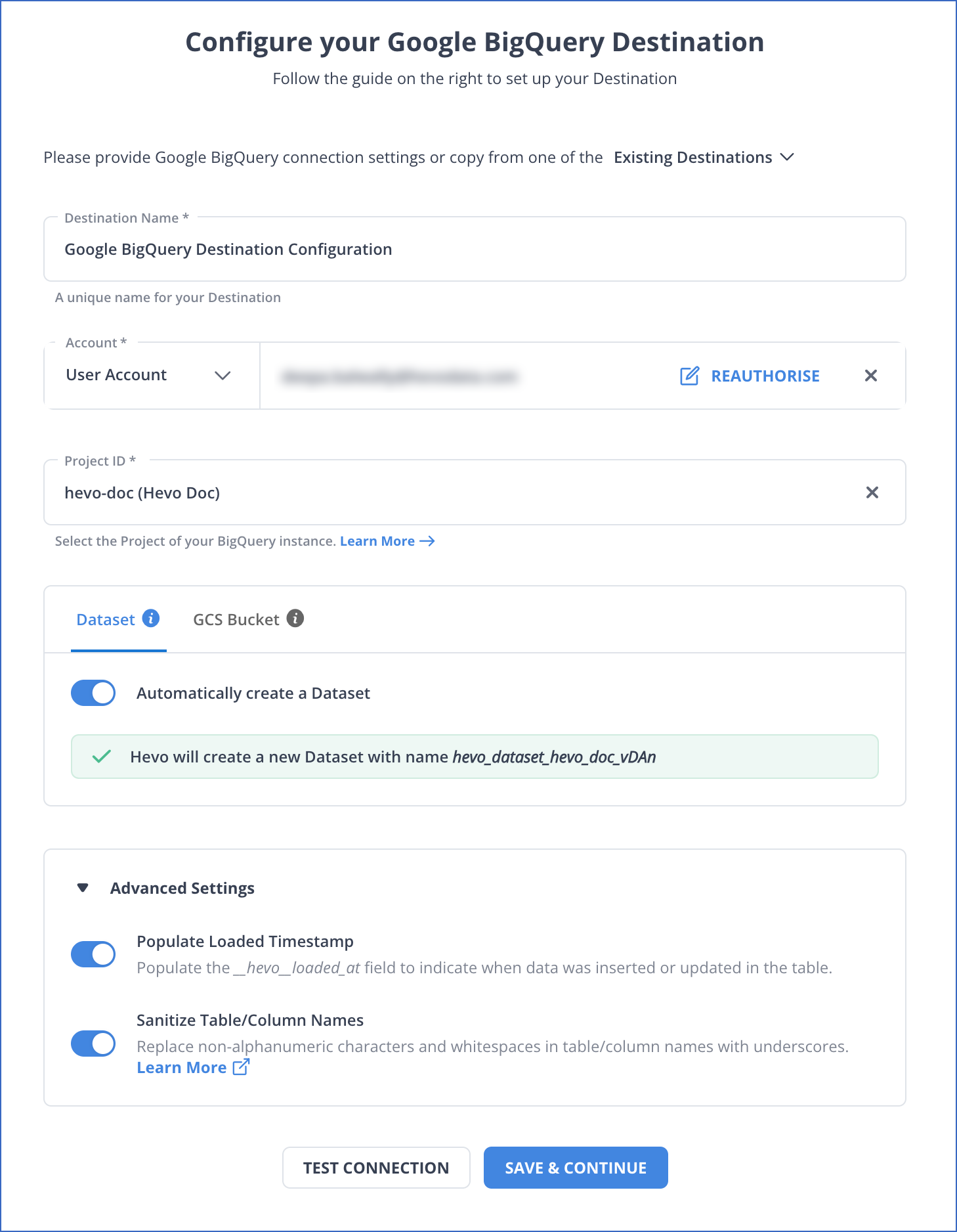

Step 2: Configure BigQuery as Destination

Prerequisites:

- You must create a Google Cloud Project if you don’t have one.

- Assign the necessary roles for the GCP project to the connecting Google account.

- You must have the GCP project linked to an active payment account.

- To set up a destination in Hevo, you must have the Team Collaborator role or any administrator role except Billing Administrator.

Use Cases of Integrating Azure SQL to BigQuery

- Getting Azure SQL data into BigQuery allows users to perform complex queries on the dataset and extract insights from it.

- BigQuery is a robust data warehouse that can serve as a unified platform and a single source of truth for your organization's data.

- This integration provides a cost-effective solution for data storage, enabling backups for huge volumes of data.

Conclusion

This article discussed three widely used methods for integrating data from Azure SQL to BigQuery. All of them can transfer your data, but the first and second methods have limitations, such as manual effort and technical complexity. To overcome these limitations, you can use Hevo Data for data integration.

Hevo provides over 150 data source connectors from which you can extract data and move it to the destination of your choice.

Try a 14-day free trial and experience the feature-rich Hevo suite firsthand. Also, check out our unbeatable pricing to choose the best plan for your organization.

Frequently Asked Questions (FAQs)

1. What are the considerations before selecting BigQuery as a data warehouse?

Here are the key factors that you must keep in mind before selecting BigQuery as your data warehouse:

– BigQuery charges for stored data per gigabyte per month, at around $0.005 per GB. The free tier includes 10 GB of storage, so the size of your data matters when using BigQuery.

– It can handle large volumes of data with a capacity of 140 GB tables.

– BigQuery offers flexible access controls, but they might become complex for you initially. Ensure you understand access management to secure your data.

2. How do I connect Azure to BigQuery?

To connect Azure to BigQuery:

1. Use a third-party ETL tool like Hevo Data to automate data transfer.

2. Alternatively, export data from Azure services (e.g., Azure SQL Database) to a file format like CSV, store it in Google Cloud Storage, and load it into BigQuery using BigQuery’s GCS integration.
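The second option can be sketched with Google's Python client libraries. This assumes the google-cloud-storage and google-cloud-bigquery packages are installed, that Application Default Credentials are configured, and that the bucket already exists. The function names and the bucket, object, and table values are hypothetical, and schema auto-detection is used for brevity.

```python
def gcs_uri(bucket, object_name):
    """Return the gs:// URI for an object in a bucket."""
    return f"gs://{bucket}/{object_name}"


def upload_to_gcs(csv_path, bucket, object_name):
    """Upload a local CSV file to Google Cloud Storage and return its URI."""
    # Imported here so gcs_uri works without the client library.
    from google.cloud import storage

    blob = storage.Client().bucket(bucket).blob(object_name)
    blob.upload_from_filename(csv_path)
    return gcs_uri(bucket, object_name)


def load_from_gcs(uri, table_id):
    """Load a CSV file from GCS into BigQuery and return the rows loaded."""
    from google.cloud import bigquery

    client = bigquery.Client()
    job_config = bigquery.LoadJobConfig(
        source_format=bigquery.SourceFormat.CSV,
        autodetect=True,  # infer the schema from the file
    )
    job = client.load_table_from_uri(uri, table_id, job_config=job_config)
    job.result()  # block until the load job finishes
    return job.output_rows


if __name__ == "__main__":
    # Hypothetical bucket and object, for illustration only.
    print(gcs_uri("my-bucket", "exports/orders.csv"))
```

Staging the file in GCS first also sidesteps the size limit on local uploads.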

3. How do I sync Cloud SQL with BigQuery?

To sync Cloud SQL with BigQuery:

1. Set up a Cloud SQL export to Google Cloud Storage (GCS).

2. Automate this export using Cloud SQL scheduled exports.

3. Use BigQuery’s external table feature or load data from GCS into BigQuery.

4. For real-time sync, use Datastream or other third-party connectors like Hevo to automate the data flow between Cloud SQL and BigQuery.