Quick Takeaway

Migrating data from MySQL to Snowflake involves extracting data from MySQL, transforming it, creating tables in Snowflake, and then loading the data into them.

Here’s a breakdown of three easy methods:

- Method 1: Using Hevo offers a no-code, automated ETL solution that simplifies moving data from MySQL to Snowflake with near real-time syncing.

- Method 2: Using custom code involves writing scripts to extract data from MySQL, transform it, and load it into Snowflake. It offers more flexibility but requires manual effort.

- Method 3: Using the Snowsight interface lets you work directly in Snowflake to load and transform data. It is user-friendly but requires manual setup.

Moving your data from MySQL to Snowflake is not as straightforward as it sounds. You may be juggling messy scripts, worrying about breaking things mid-transfer, or spending hours trying to match schemas. Whether you’re scaling up, need real-time analytics, or just want a more efficient system, this guide is for you.

I’ve been through trial and error, and now I’ll walk you through simple, proven ways to migrate your MySQL data to Snowflake, both manually and automatically, along with the factors to consider while choosing the right approach. Let’s simplify this process together.


Why move MySQL data to Snowflake?

- Performance and Scalability: As data volumes grow, MySQL can struggle to handle large datasets and many concurrent queries. Snowflake’s cloud-native architecture scales compute and storage independently, so you can handle large datasets and complex queries efficiently.

- Higher-Level Analytics: Snowflake supports advanced analytics, including data science and machine learning workflows (for example, through Snowpark). These features can give you deeper insights and support data-driven decision-making.

- Cost Efficiency: Because Snowflake separates compute and storage, you pay only for the resources you actually use. This pay-as-you-go model can be more economical than maintaining and expanding on-premises MySQL servers.

- Data Integration and Sharing: Snowflake’s data-sharing features make it easier to integrate data and share it securely across departments and with external partners. This capability is valuable for organizations building a unified data environment.

- Streamlined Maintenance: Snowflake is a fully managed service that handles most database administration tasks, such as software patching, hardware provisioning, and backups. This lets you spend less time on maintenance and more on data analysis.


Tired of writing complex ETL scripts to move data from MySQL to Snowflake? Hevo’s no-code platform makes the process fast, reliable, and fully automated.

With Hevo:

- Connect MySQL to Snowflake without writing a single line of code

- Stream data in near real-time with built-in change data capture (CDC)

- Automatically handle schema changes and data type mismatches

Trusted by 2000+ data professionals from companies like Postman and ThoughtSpot. See how Hevo can simplify your MySQL to Snowflake data pipelines today!

Step-by-Step Approach to Performing MySQL-Snowflake Migration

Method 1: Using Hevo

Step 1: Configure MySQL as your Source

Step 2: Configure Snowflake as your Destination

You have successfully connected your source and destination with these two simple steps.

Advantages of using Hevo

- Auto Schema Mapping: Hevo eliminates the tedious task of schema management. It automatically detects the schema of incoming data and maps it to the destination schema.

- Incremental Data Load: Transfers only new and modified data in near real-time, making efficient use of bandwidth on both ends.

- Data Transformation: Provides a simple interface for cleaning, modifying, and enriching the data you want to transfer.
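Incremental loading means moving only the rows that changed since the last run. Hevo handles this for you; if you were to build it yourself, a simpler technique than log-based CDC is a high-water-mark query on a timestamp column. The sketch below is not Hevo’s implementation. It assumes a hypothetical `updated_at` column that MySQL updates on every change, and it uses Python’s built-in `sqlite3` as a stand-in for a MySQL connection so it runs anywhere. The function name `fetch_incremental` is illustrative.

```python
import sqlite3  # stand-in for a MySQL connection in this sketch
from typing import Any, List, Tuple


def fetch_incremental(conn, table: str, watermark_col: str,
                      last_value: Any) -> Tuple[List[tuple], Any]:
    """Return rows whose watermark column is newer than last_value,
    plus the new high-water mark to store for the next run."""
    cur = conn.cursor()
    # Table/column names are interpolated, so pass only trusted identifiers.
    # sqlite3 uses "?" placeholders; MySQL drivers such as PyMySQL use "%s".
    cur.execute(
        f"SELECT * FROM {table} WHERE {watermark_col} > ? ORDER BY {watermark_col}",
        (last_value,),
    )
    rows = cur.fetchall()
    col_index = [d[0] for d in cur.description].index(watermark_col)
    # The last row has the largest watermark because of the ORDER BY.
    new_mark = rows[-1][col_index] if rows else last_value
    return rows, new_mark


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE cricketers (id INTEGER, name TEXT, updated_at TEXT)")
    conn.executemany(
        "INSERT INTO cricketers VALUES (?, ?, ?)",
        [(1, "Player A", "2024-01-01"), (2, "Player B", "2024-02-01")],
    )
    rows, mark = fetch_incremental(conn, "cricketers", "updated_at", "2024-01-15")
    print(rows, mark)  # only Player B is newer than the stored watermark
```

Unlike CDC, this approach cannot see hard deletes, and rows that share the exact watermark timestamp but commit after the previous run can be skipped. That is why log-based CDC, which reads the MySQL binary log, is generally preferred for production syncing.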

Method 2: Using Custom Code

Prerequisites

- A Snowflake account. If you don’t have one, you can register for a free trial on Snowflake’s website.

- A MySQL server with your database. You can download it from MySQL’s official website if you don’t have one.

Let’s walk through connecting MySQL to Snowflake step by step, using the MySQL application interface and the Snowflake web interface.

Step 1: Extract Data from MySQL

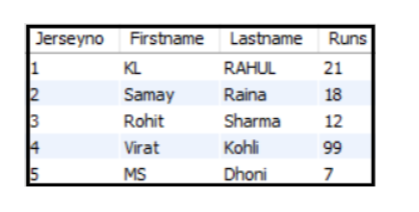

I created a dummy table called cricketers in MySQL for this demo. You can click on the rightmost table icon to view your table.

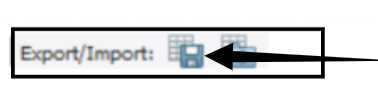

Click the icon next to Export/Import to save the selected table as a .csv file on your local machine, ready to load into Snowflake.
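If you’d rather script the export than click through the GUI, the following sketch writes a table to CSV using Python’s standard `csv` module. It accepts any DB-API 2.0 connection. With a real server you would pass one from a MySQL driver such as mysql-connector-python or PyMySQL, but the demo uses the built-in `sqlite3` module as a stand-in so it runs without a MySQL server. The function name `export_table_to_csv` and the `cricketers.csv` file name are illustrative.

```python
import csv
import os
import sqlite3  # stand-in for a MySQL DB-API connection in this sketch
import tempfile


def export_table_to_csv(conn, table: str, path: str, batch_size: int = 10_000) -> int:
    """Write all rows of `table` to `path` as CSV with a header row.
    Returns the number of data rows written."""
    cur = conn.cursor()
    cur.execute(f"SELECT * FROM {table}")  # pass only a trusted table name
    header = [d[0] for d in cur.description]
    written = 0
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)  # quotes fields that contain commas or quotes
        writer.writerow(header)
        while True:
            batch = cur.fetchmany(batch_size)  # stream instead of loading everything
            if not batch:
                break
            writer.writerows(batch)  # None (SQL NULL) is written as an empty field
            written += len(batch)
    return written


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE cricketers (id INTEGER, name TEXT)")
    conn.executemany("INSERT INTO cricketers VALUES (?, ?)", [(1, "Player A"), (2, "Player B")])
    out = os.path.join(tempfile.mkdtemp(), "cricketers.csv")
    print(export_table_to_csv(conn, "cricketers", out), "rows written to", out)
```

Fetching in batches with `fetchmany()` keeps memory use flat for large tables, and the header row pairs with the `SKIP_HEADER = 1` file-format option if you later load the file with COPY INTO.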

Step 2: Create a new Database in Snowflake

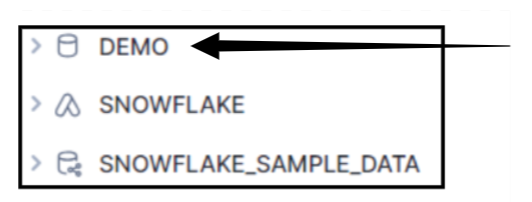

Log in to your Snowflake account, go to Data > Databases, and click the +Database icon to create a new database. For this guide, we’ll use an existing database called DEMO.

Step 3: Create a new Table in that database

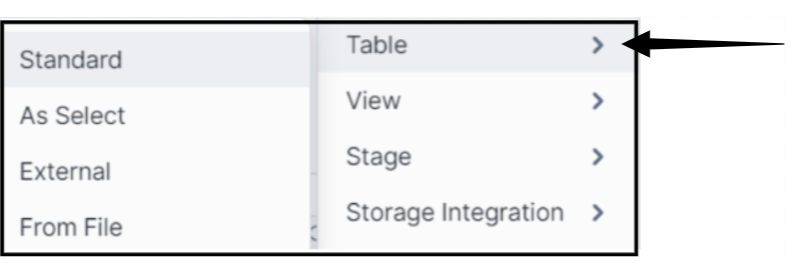

Now go to DEMO > PUBLIC > Tables, click the Create button, and select the From File option from the drop-down menu.

A drop zone will appear where you can drag and drop your .csv file. Then either enter a name for a new table or choose an existing one. If you select an existing table, the data will be appended to it.

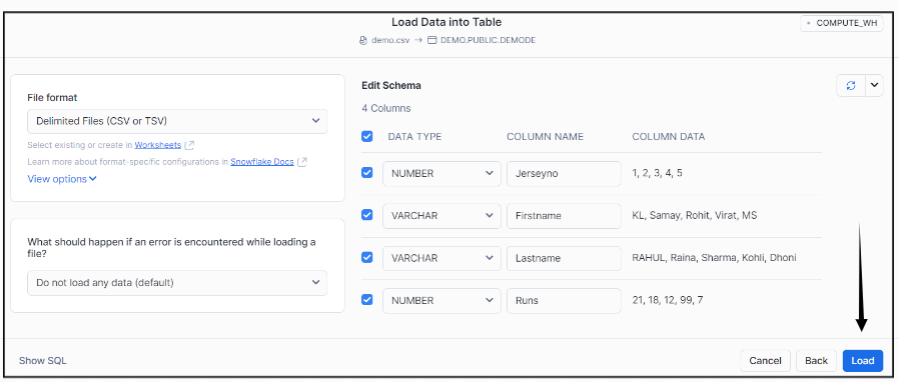

Step 4: Edit your table schema

Click Next to open the schema editor, where you can modify the schema as needed. Once done, click Load to begin importing your table data from the .csv file into Snowflake.

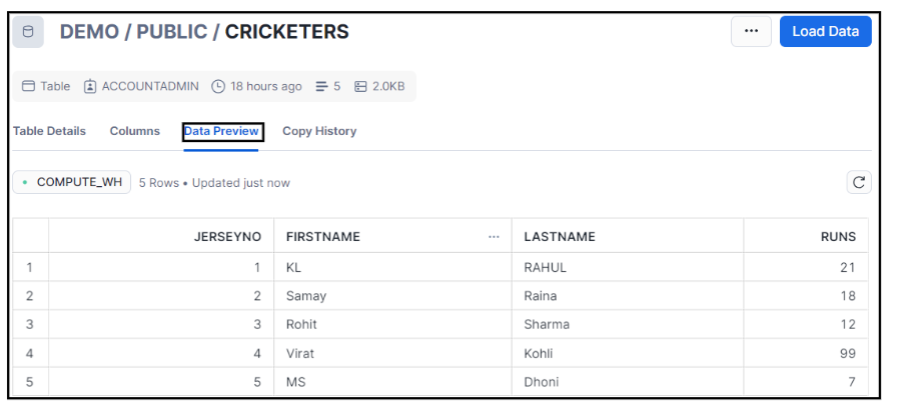
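The schema editor infers column types from the file, so it helps to know how your MySQL types should land in Snowflake. The sketch below encodes one simplified mapping and turns it into a CREATE TABLE statement you could run in a worksheet instead of using the wizard. The mapping choices (for example, `TINYINT(1)` to `BOOLEAN` and `DATETIME` to `TIMESTAMP_NTZ`) are assumptions, not an official Snowflake conversion table, and the helper names `to_snowflake_type` and `create_table_ddl` are hypothetical.

```python
import re
from typing import List, Tuple

_INTEGER_TYPES = {"tinyint", "smallint", "mediumint", "int", "integer", "bigint"}
_TEXT_TYPES = {"tinytext", "text", "mediumtext", "longtext", "enum", "set"}
_BINARY_TYPES = {"binary", "varbinary", "tinyblob", "blob", "mediumblob", "longblob"}


def to_snowflake_type(mysql_type: str) -> str:
    """Map a MySQL column type string (e.g. 'varchar(50)') to a Snowflake type.
    This is a simplified, assumed mapping, not an official conversion table."""
    t = mysql_type.strip().lower()
    m = re.match(r"[a-z]+", t)
    if not m:
        raise ValueError(f"cannot parse MySQL type: {mysql_type!r}")
    base = m.group(0)
    args = re.search(r"\(([^)]*)\)", t)
    size = args.group(1).replace(" ", "") if args else None
    if t.startswith("tinyint(1)"):
        return "BOOLEAN"  # MySQL's BOOLEAN is an alias for TINYINT(1)
    if base in _INTEGER_TYPES:
        return "NUMBER(38,0)"  # wide enough for BIGINT UNSIGNED
    if base in {"decimal", "numeric"}:
        return f"NUMBER({size})" if size else "NUMBER(10,0)"  # MySQL default is (10,0)
    if base in {"float", "double", "real"}:
        return "FLOAT"
    if base in {"char", "varchar"}:
        return f"VARCHAR({size})" if size else "VARCHAR"
    if base in _TEXT_TYPES:
        return "VARCHAR"
    if base == "date":
        return "DATE"
    if base in {"datetime", "timestamp"}:
        return "TIMESTAMP_NTZ"  # assumption: store values without a time zone
    if base == "time":
        return "TIME"
    if base == "json":
        return "VARIANT"
    if base in _BINARY_TYPES:
        return "BINARY"
    raise ValueError(f"no mapping for MySQL type: {mysql_type!r}")


def create_table_ddl(table: str, columns: List[Tuple[str, str]]) -> str:
    """Build a CREATE TABLE statement from (column_name, mysql_type) pairs."""
    body = ",\n".join(f"  {name} {to_snowflake_type(mtype)}" for name, mtype in columns)
    return f"CREATE TABLE IF NOT EXISTS {table} (\n{body}\n);"


if __name__ == "__main__":
    print(create_table_ddl("cricketers", [("id", "int"), ("name", "varchar(100)"),
                                          ("batting_avg", "decimal(5, 2)")]))
```

Raising an error for unmapped types (such as spatial types) forces a deliberate decision instead of silently loading data into the wrong type.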

Step 5: Preview your loaded table

Once the loading process has been completed, you can view your data by clicking the preview button.

Note: An alternative way to move the data is Snowflake’s COPY INTO command, which loads data from staged files into a table in your Snowflake data warehouse. You first upload the exported .csv file from local storage to a Snowflake stage (for example, with the PUT command in SnowSQL), and then run a COPY INTO statement to load the staged file into the target table.
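Here is a minimal sketch of the COPY INTO route. It only builds the SQL text: a PUT statement that uploads the exported file to the table’s internal stage (`@%cricketers`) and a COPY INTO statement that loads it. It assumes a POSIX absolute file path and a CSV with one header row and optionally double-quoted fields; `build_load_statements` is a hypothetical helper name.

```python
from typing import List

# CSV options for a file with one header row and optionally double-quoted fields.
CSV_FORMAT = "TYPE = CSV SKIP_HEADER = 1 FIELD_OPTIONALLY_ENCLOSED_BY = '\"'"


def build_load_statements(csv_path: str, table: str) -> List[str]:
    """Return the PUT and COPY INTO statements that load a local CSV
    file into `table` through the table's internal stage (@%table)."""
    # PUT uploads the local file (gzip-compressed by AUTO_COMPRESS) to the table stage.
    # It must run from SnowSQL or a driver; Snowsight worksheets do not support PUT.
    put = f"PUT 'file://{csv_path}' @%{table} AUTO_COMPRESS = TRUE"
    # COPY INTO reads the staged file; ABORT_STATEMENT aborts the load on any error.
    copy = (
        f"COPY INTO {table} FROM @%{table} "
        f"FILE_FORMAT = ({CSV_FORMAT}) ON_ERROR = ABORT_STATEMENT"
    )
    return [put, copy]


if __name__ == "__main__":
    for stmt in build_load_statements("/tmp/cricketers.csv", "cricketers"):
        print(stmt + ";")
```

Because PUT cannot run in a Snowsight worksheet, you would execute these statements from SnowSQL, or from Python by passing each one to `cursor.execute()` on a connection created with the snowflake-connector-python package.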

Limitations of Manually Migrating MySQL Data to Snowflake

- Error-prone: Hand-written scripts and SQL queries carry a higher risk of errors, which can lead to data loss or corruption.

- Time-Consuming: Exporting and loading large tables one by one takes a lot of time.

- Orchestration Challenges: Manual migrations lack built-in monitoring, alerting, and progress tracking, so you have to build or perform these tasks yourself.
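One way to reduce the risk of silent data loss is to reconcile row counts after each load. The sketch below counts rows per table over any DB-API connection and reports tables whose counts differ between source and target. Two in-memory `sqlite3` databases stand in for MySQL and Snowflake so it runs anywhere, and the helper names `count_rows` and `reconcile` are hypothetical. Table names are lowercased because Snowflake stores unquoted identifiers in uppercase.

```python
import sqlite3  # two in-memory databases stand in for MySQL and Snowflake here
from typing import Dict, Iterable, Optional, Tuple


def count_rows(conn, tables: Iterable[str]) -> Dict[str, int]:
    """Return {lowercased_table_name: row_count} using any DB-API connection."""
    cur = conn.cursor()
    counts = {}
    for table in tables:
        cur.execute(f"SELECT COUNT(*) FROM {table}")  # trusted names only
        counts[table.lower()] = cur.fetchone()[0]
    return counts


def reconcile(source: Dict[str, int],
              target: Dict[str, int]) -> Dict[str, Tuple[Optional[int], Optional[int]]]:
    """Return tables whose counts differ; a table missing on one side shows as None."""
    mismatches = {}
    for table in sorted(set(source) | set(target)):
        if source.get(table) != target.get(table):
            mismatches[table] = (source.get(table), target.get(table))
    return mismatches


if __name__ == "__main__":
    src, tgt = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
    for conn, n in ((src, 3), (tgt, 2)):
        conn.execute("CREATE TABLE cricketers (id INTEGER)")
        conn.executemany("INSERT INTO cricketers VALUES (?)", [(i,) for i in range(n)])
    print(reconcile(count_rows(src, ["cricketers"]), count_rows(tgt, ["cricketers"])))
```

Matching counts do not prove the data is identical, so for critical tables you may also want to compare aggregates such as column sums or checksums.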

Bonus Method 3: Using Snowsight Interface

You can use Snowsight to create and run SQL scripts that load MySQL data, exported to cloud storage, into Snowflake through external stages and Snowpipe. Snowsight also provides an interactive interface for monitoring and validating the migration.

Read the full blog on migrating data from MySQL to Snowflake using the Snowsight interface.
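To make the external stage and Snowpipe approach more concrete, here is a sketch that generates the two statements you would run in a Snowsight worksheet. It assumes your MySQL exports already land as CSV files in a cloud storage folder (an S3 URL here) and that a storage integration already exists. The bucket, integration, stage, and pipe names, and the helper `build_snowpipe_ddl`, are hypothetical.

```python
from typing import List

CSV_FORMAT = "TYPE = CSV SKIP_HEADER = 1 FIELD_OPTIONALLY_ENCLOSED_BY = '\"'"


def build_snowpipe_ddl(table: str, stage: str, pipe: str,
                       bucket_url: str, integration: str) -> List[str]:
    """Return SQL that creates an external stage over a cloud-storage folder
    of MySQL CSV exports and a pipe that auto-loads new files into `table`."""
    create_stage = (
        f"CREATE STAGE IF NOT EXISTS {stage} "
        f"URL = '{bucket_url}' STORAGE_INTEGRATION = {integration} "
        f"FILE_FORMAT = ({CSV_FORMAT})"
    )
    # AUTO_INGEST relies on cloud event notifications (e.g. S3 to SQS) being configured.
    create_pipe = (
        f"CREATE PIPE IF NOT EXISTS {pipe} AUTO_INGEST = TRUE AS "
        f"COPY INTO {table} FROM @{stage}"  # falls back to the stage's FILE_FORMAT
    )
    return [create_stage, create_pipe]


if __name__ == "__main__":
    # Hypothetical bucket, integration, and object names for illustration only.
    for stmt in build_snowpipe_ddl("cricketers", "mysql_export_stage", "cricketers_pipe",
                                   "s3://my-bucket/mysql-exports/", "my_s3_integration"):
        print(stmt + ";")
```

`AUTO_INGEST = TRUE` only triggers loads once event notifications from the bucket to Snowflake are configured. Without them, you can still load files already in the stage manually with `ALTER PIPE cricketers_pipe REFRESH`.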


Conclusion

You can now connect MySQL and Snowflake using either manual or automated methods. The manual method works well if you want granular control over your migration. However, if you are looking for an automated, no-code solution, book a demo with Hevo.


FAQ

1. How to transfer data from MySQL to Snowflake?

Step 1: Export Data from MySQL

Step 2: Upload Data to Snowflake

Step 3: Create Snowflake Table

Step 4: Load Data into Snowflake

2. How do I connect MySQL to Snowflake?

1. Snowflake Connector for MySQL

2. ETL/ELT Tools

3. Custom Scripts

3. Does Snowflake use MySQL?

No, Snowflake does not use MySQL.

4. How do I get data from a SQL database into Snowflake?

Step 1: Export Data

Step 2: Stage the Data

Step 3: Load Data

5. How to replicate data from SQL Server to Snowflake?

1. Using ETL/ELT Tools

2. Custom Scripts

3. Database Migration Services