Easily move your data from GCP MySQL to Snowflake to enhance your analytics capabilities. With Hevo’s intuitive pipeline setup, data flows in real time.

Google Cloud Platform (GCP) MySQL lets businesses run relational databases with stability and scalability, and it provides dependable data storage and efficient query processing. However, as data volumes and analytical workloads grow, enterprises can run into limitations with GCP MySQL, such as limited agility and scalability, performance bottlenecks, and the need to manage resources manually.

You can overcome these limitations and open up new data management and analytics options by switching from GCP MySQL to Snowflake. Snowflake provides high concurrency, scalability, and performance, allowing enterprises to manage diverse workloads easily. In this article, you will learn the advantages of this migration and two ways to load GCP MySQL data into Snowflake.


Why Migrate from GCP MySQL to Snowflake?

Integrating GCP MySQL with Snowflake offers several benefits:

- Unlike standard MySQL deployments, Snowflake’s architecture allows for simple scaling, handling variable workloads effortlessly without the need for manual intervention.

- Snowflake separates compute from storage, so each can scale independently. This enables faster data processing and query execution while keeping analytical performance high.

- Snowflake offers a fully managed pay-as-you-go SaaS cloud data warehouse.

Method 1: Using Hevo Data, a No-code Data Pipeline

Effortlessly integrate GCP MySQL with Snowflake using Hevo Data’s intuitive no-code platform. Hevo Data supports automated data pipelines, ensuring seamless data transfer and real-time analytics without the need for manual coding.

Method 2: Manually Connect GCP MySQL to Snowflake using CSV Files

Integrating GCP MySQL with Snowflake manually is tedious and time-consuming. You export your data to CSV files and then load those files into your Snowflake account.

Overview of Google Cloud Platform (GCP) MySQL

GCP MySQL, offered through Google Cloud SQL, is one of Google’s fully managed, highly available, and scalable database options. Cloud SQL offers many instance configuration choices and supports read replicas, which use replication to scale read capacity. Its backup feature lets you restore data in case of data loss, and the platform provides tools that make restoring from a backup straightforward. Cloud SQL’s flexibility, reliability, and ease of use make it an appealing option.

Overview of Snowflake

Snowflake, founded in 2012, is a fully managed SaaS platform. It offers a single platform for data warehousing, data lakes, data engineering, data science, and data application development. Its scalable architecture supports large workloads and large volumes of data. You can connect to Snowflake through its web interface or from standard SQL clients, and developers can also work with it programmatically through its REST APIs. Maximize your Snowflake investment: calculate your costs in seconds with our free Snowflake Pricing Calculator.

Method 1: Migrating GCP MySQL to Snowflake Using Hevo

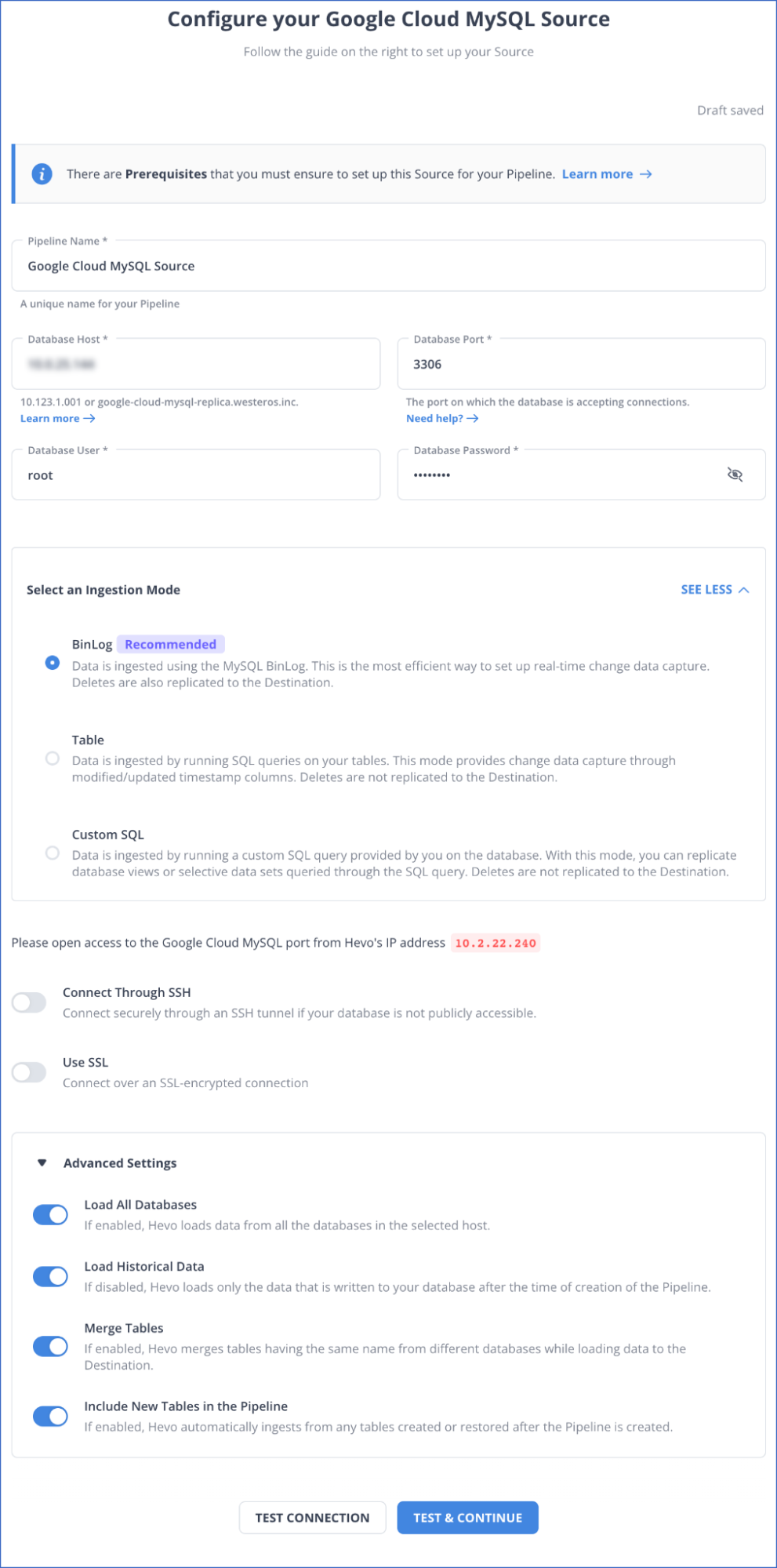

Step 1.1: Configure GCP MySQL as your Source

Read the GCP MySQL Hevo Documentation to learn more about the procedures.

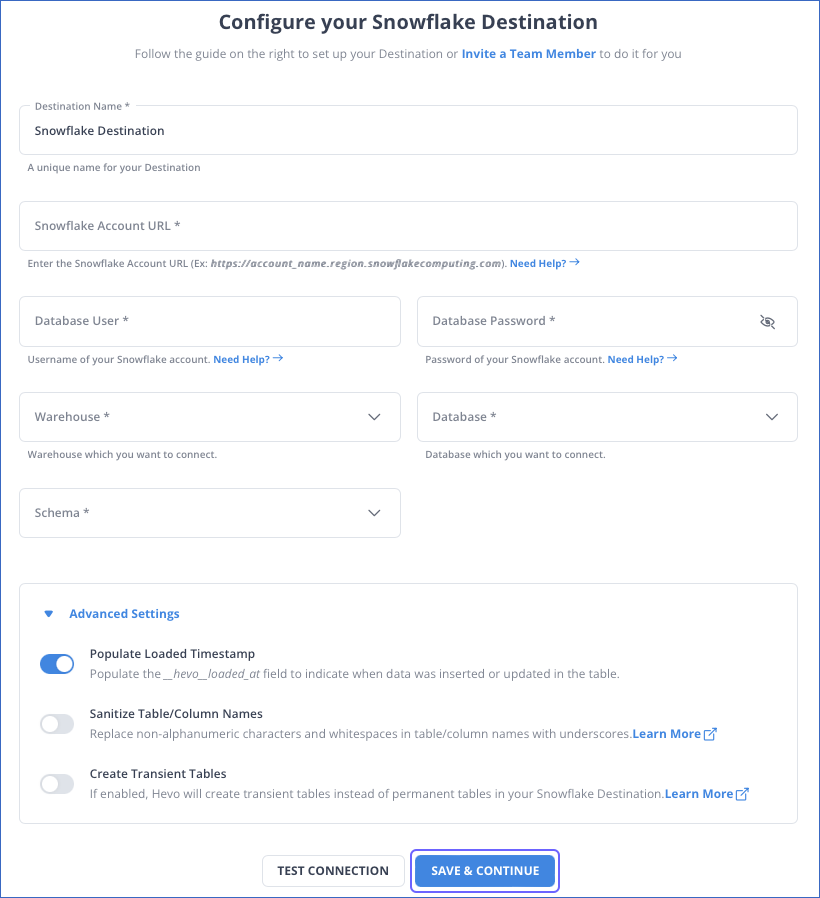

Step 1.2: Configure Snowflake as your Destination

You can consult the Hevo Documentation on Snowflake to learn more about the procedures involved.

With these two steps, you have connected GCP MySQL to Snowflake. There are many reasons to choose Hevo as your no-code ETL tool for integrating GCP MySQL with Snowflake.

Key Features of Hevo

- Data Transformation: Hevo Data makes data analysis more accessible by offering a user-friendly approach to data transformation. To enhance the quality of the data, you can create a Python-based Transformation script or utilize Drag-and-Drop Transformation blocks. These tools allow cleaning, preparing, and standardizing the data before importing it into the desired destination.

- Auto Schema Mapping: Hevo’s Auto Mapping feature saves you from the difficulty of managing schemas manually. It automatically recognizes the format of incoming data and replicates it in the destination schema. Depending on your data replication needs, you can select between full and incremental mappings.

- Incremental Data Load: Hevo Data transfers only recently modified data, optimizing bandwidth usage at both the source and destination ends of a data pipeline.
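Incremental loading is commonly implemented with a "high-watermark" column such as updated_at. The Python sketch below illustrates that general technique only; it is not Hevo’s implementation, and the updated_at column and sample rows are hypothetical. The same idea applies to the manual CSV method, where you could filter the export query on updated_at.

```python
from datetime import datetime


def incremental_batch(rows, last_watermark):
    """Return rows changed after last_watermark, plus the new watermark.

    High-watermark technique: remember the largest updated_at value that
    has already been copied, and only pick up newer rows on the next run.
    """
    changed = [r for r in rows if r["updated_at"] > last_watermark]
    # If nothing changed, keep the previous watermark.
    new_watermark = max((r["updated_at"] for r in changed), default=last_watermark)
    return changed, new_watermark


# Hypothetical rows, as they might come from a MySQL table.
rows = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 5)},
    {"id": 3, "updated_at": datetime(2024, 1, 9)},
]
batch, mark = incremental_batch(rows, datetime(2024, 1, 2))
print([r["id"] for r in batch], mark)  # [2, 3] 2024-01-09 00:00:00
```

A strict `>` comparison can miss rows that are written later with the same timestamp as the watermark; reading changes from the MySQL binary log avoids that problem.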

Method 2: Migrating GCP MySQL to Snowflake using CSV files

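You can use the CSV file transfer method to import GCP MySQL data into Snowflake. The process has two parts: export your data from Cloud SQL to CSV files in Cloud Storage, then load those files into Snowflake.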

Step 2.1: Export Data from GCP MySQL to CSV

Prerequisites:

To initiate the export from Cloud SQL into Cloud Storage, you must have one of the following roles:

- The Cloud SQL Editor role.

- A custom role that includes the following permissions:

  - cloudsql.instances.get

  - cloudsql.instances.export

In addition, the service account for the Cloud SQL instance must have one of the following roles:

- The storage.objectAdmin Identity and Access Management (IAM) role

- A custom role that includes the following permissions:

  - storage.objects.create

  - storage.objects.list

  - storage.objects.delete

For assistance with IAM roles, visit Identity and Access Management.
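If you go the custom-role route, you can double-check a role definition by comparing its permissions with the lists above. This Python sketch does that with a set difference; the role contents are hypothetical, and in practice you would copy in the permissions reported by `gcloud iam roles describe`.

```python
# Permissions required by the two custom-role options listed above.
EXPORT_PERMISSIONS = {"cloudsql.instances.get", "cloudsql.instances.export"}
SERVICE_ACCOUNT_PERMISSIONS = {
    "storage.objects.create",
    "storage.objects.list",
    "storage.objects.delete",
}


def missing(granted, required):
    """Return the required permissions that are not in granted, sorted."""
    return sorted(set(required) - set(granted))


# Hypothetical custom role that was created without the export permission.
user_role = {"cloudsql.instances.get"}
print(missing(user_role, EXPORT_PERMISSIONS))  # ['cloudsql.instances.export']
```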

Once the prerequisites are met, follow the steps below:

- Navigate to the Cloud SQL instances page in the Google Cloud console.

- Click the instance name to bring up the instance’s Overview page.

- Click Export.

- In the File format section, select CSV, and choose the Cloud Storage bucket (and, optionally, folder) where the export file will be written.

- Optionally, select Offload export to allow other operations to run while the export is in progress.

- Click Show Advanced Options.

- In the Database section, select the database from the drop-down menu.

- Enter a SQL query, such as a SELECT statement on the table you want, to specify the data to export.

- To start the export, click Export.

- When the Export database? box opens, it displays a message that the export process can take an hour or more for large databases. While the export is in progress, the only operation you can perform on the instance is viewing its information. You can also cancel the export after it has started. If it is a good time to start an export, click Export. Otherwise, click Cancel.
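If you prefer the command line, the same export can be run with the gcloud CLI’s `gcloud sql export csv` command. The Python sketch below only assembles the argument list so you can review it before running it (for example, with `subprocess.run(cmd, check=True)`); the instance, bucket, database, and query values are hypothetical placeholders.

```python
import shlex


def build_export_command(instance, gcs_uri, database, query, offload=True):
    """Build argv for `gcloud sql export csv`, mirroring the console steps."""
    argv = [
        "gcloud", "sql", "export", "csv",
        instance,                # Cloud SQL instance name
        gcs_uri,                 # destination file in Cloud Storage
        f"--database={database}",
        f"--query={query}",      # same SQL query you would enter in the console
    ]
    if offload:
        # Equivalent to selecting "Offload export" in the console.
        argv.append("--offload")
    return argv


cmd = build_export_command(
    "my-instance", "gs://my-bucket/contacts.csv", "mydb", "SELECT * FROM contacts"
)
print(shlex.join(cmd))  # shell-quoted, ready to copy into a terminal
```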

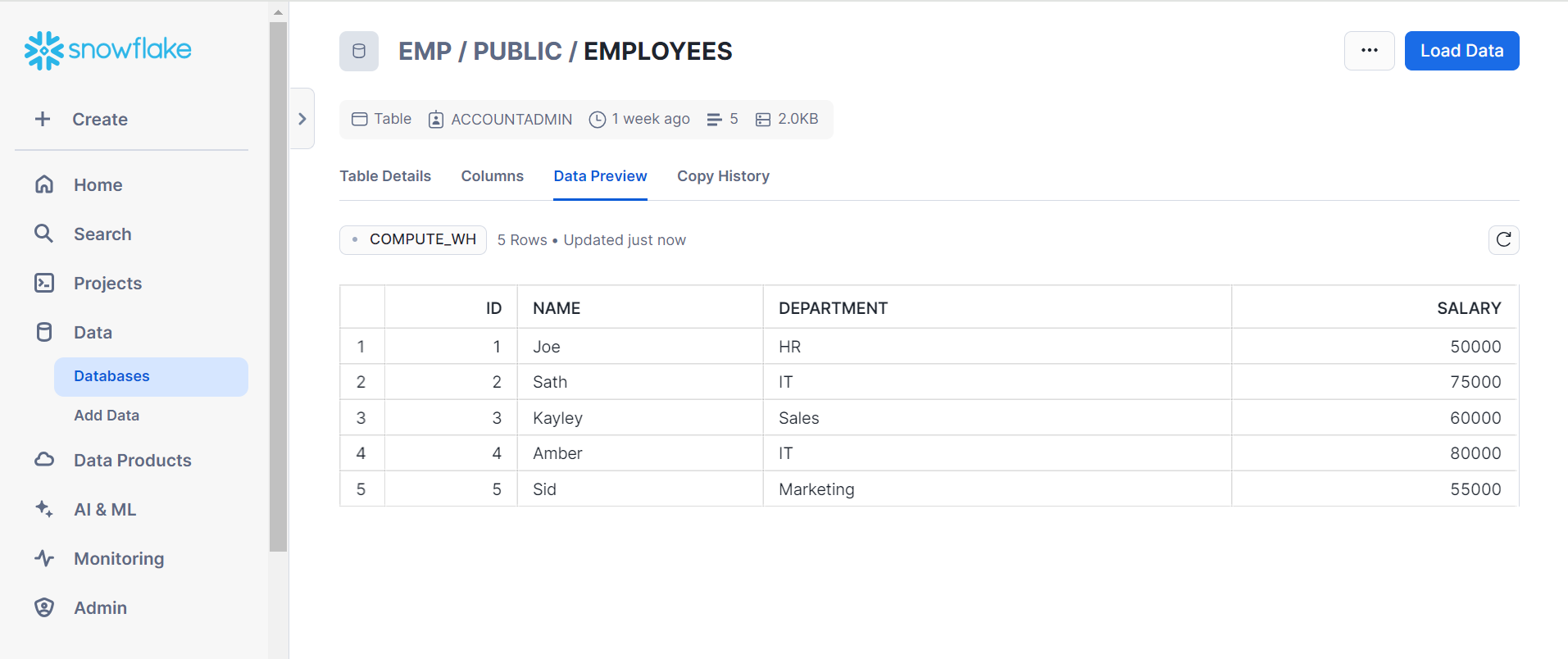

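Step 2.2: Load Data from CSV Files into Snowflake

The section below explains how to load the exported CSV files into Snowflake. Because Cloud SQL writes the export to Cloud Storage, first download the files to your local machine (for example, with `gcloud storage cp gs://BUCKET_NAME/FILE_NAME /tmp/load/`), or load them directly from the bucket through an external stage, as described in the FAQs.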

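Step 2.2.1: Create a File Format Object for CSV Data

Use the CREATE FILE FORMAT command to create the mycsvformat file format. This example expects pipe-delimited files with one header row; adjust FIELD_DELIMITER and SKIP_HEADER to match how your files were exported.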

```sql
CREATE OR REPLACE FILE FORMAT mycsvformat
  TYPE = 'CSV'
  FIELD_DELIMITER = '|'
  SKIP_HEADER = 1;
```
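The file format above expects pipe-delimited files with a header row. A Cloud SQL CSV export with default settings is typically comma-delimited, with double-quoted values and no header row, so one option is to rewrite the export before uploading it. This Python sketch does that with the standard csv module; the column names are hypothetical, and it does not handle MySQL NULL markers.

```python
import csv
import io


def to_pipe_delimited(export_text, header):
    """Rewrite comma-delimited, header-less CSV text as pipe-delimited
    text with a header row, matching mycsvformat
    (FIELD_DELIMITER = '|', SKIP_HEADER = 1)."""
    reader = csv.reader(io.StringIO(export_text))  # strips the "..." quoting
    out = io.StringIO()
    writer = csv.writer(out, delimiter="|", lineterminator="\n")
    writer.writerow(header)   # the row that SKIP_HEADER = 1 will skip
    writer.writerows(reader)  # data rows, re-quoted only where needed
    return out.getvalue()


sample = '1,"Ann Lee","ann@example.com"\n2,"Bob Roy","bob@example.com"\n'
print(to_pipe_delimited(sample, ["id", "name", "email"]), end="")
```

Values that themselves contain a `|` come out wrapped in double quotes, so you would also need FIELD_OPTIONALLY_ENCLOSED_BY = '"' in the file format. For very large exports, stream the file line by line instead of holding it in memory. Alternatively, skip the conversion and change the file format to FIELD_DELIMITER = ',' and SKIP_HEADER = 0.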

Step 2.2.2: Set up a Stage for CSV Files

Create the my_csv_stage internal stage with the CREATE STAGE command:

```sql
CREATE OR REPLACE STAGE my_csv_stage
  FILE_FORMAT = mycsvformat;
```

Step 2.2.3: Stage the CSV Data Files

Run the PUT command from a client such as SnowSQL to upload the CSV files from your local file system to the stage.

Linux or macOS:

```sql
PUT file:///tmp/load/contacts*.csv @my_csv_stage AUTO_COMPRESS=TRUE;
```

Windows:

```sql
PUT file://C:\temp\load\contacts*.csv @my_csv_stage AUTO_COMPRESS=TRUE;
```
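With AUTO_COMPRESS=TRUE, PUT gzips each matching file and adds a .gz suffix, which is why the COPY INTO step refers to contacts1.csv.gz. This Python sketch predicts the staged names for a set of local files; it approximates PUT’s wildcard matching with fnmatch, and the file names are hypothetical.

```python
from fnmatch import fnmatchcase


def staged_names(local_files, pattern, auto_compress=True):
    """Predict the file names PUT would create in the stage.

    Models AUTO_COMPRESS=TRUE as gzip compression that appends ".gz";
    files that are already gzipped keep their names.
    """
    names = []
    for name in sorted(local_files):
        if not fnmatchcase(name, pattern):
            continue  # the wildcard does not match, so PUT skips the file
        if auto_compress and not name.endswith(".gz"):
            name += ".gz"
        names.append(name)
    return names


files = ["contacts1.csv", "contacts2.csv", "notes.txt"]
print(staged_names(files, "contacts*.csv"))
# ['contacts1.csv.gz', 'contacts2.csv.gz']
```

You can confirm what actually landed in the stage by running LIST @my_csv_stage; in Snowflake.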

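Step 2.2.4: Copy Data into the Target Tables

To load your staged data into the target tables, run COPY INTO <table>. The target table (mycsvtable in this example) must already exist, with columns in the same order as the fields in the file.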
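To create mycsvtable from an existing MySQL table definition, you need to translate MySQL column types into Snowflake types. The Python sketch below generates a CREATE TABLE statement from a column list; the type mapping is a partial, hypothetical starting point, and the column names are examples only.

```python
import re

# Partial mapping from common MySQL types to Snowflake types (an assumption;
# extend it for the types your tables actually use).
TYPE_MAP = {
    "int": "INTEGER",
    "bigint": "BIGINT",
    "varchar": "VARCHAR",
    "text": "VARCHAR",
    "decimal": "NUMBER",
    "double": "FLOAT",
    "date": "DATE",
    "datetime": "TIMESTAMP_NTZ",
}
KEEP_ARGS = {"varchar", "decimal"}  # length/precision worth carrying over


def to_snowflake_type(mysql_type):
    match = re.match(r"\s*([a-z]+)\s*(\([^)]*\))?", mysql_type.lower())
    if not match or match.group(1) not in TYPE_MAP:
        raise ValueError(f"no mapping for MySQL type {mysql_type!r}")
    base, args = match.group(1), match.group(2) or ""
    # MySQL display widths such as INT(11) and modifiers such as UNSIGNED
    # are dropped; VARCHAR(n) and DECIMAL(p,s) keep their arguments.
    return TYPE_MAP[base] + (args if base in KEEP_ARGS else "")


def create_table_sql(table, columns):
    cols = ",\n  ".join(f"{name} {to_snowflake_type(t)}" for name, t in columns)
    return f"CREATE TABLE IF NOT EXISTS {table} (\n  {cols}\n);"


print(create_table_sql(
    "mycsvtable",
    [("id", "int(11)"), ("name", "varchar(100)"), ("created_at", "datetime")],
))
```

Raising an error for unmapped types is deliberate: it is safer to stop and decide on a mapping than to guess silently.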

```sql
COPY INTO mycsvtable
  FROM @my_csv_stage/contacts1.csv.gz
  FILE_FORMAT = (FORMAT_NAME = mycsvformat)
  ON_ERROR = 'skip_file';
```
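The ON_ERROR = 'skip_file' option above tells Snowflake to skip any file that contains an error. To make the difference between the common ON_ERROR values concrete, this Python sketch simulates which rows each option would load. It models the documented behavior only and does not talk to Snowflake; the row validator and file contents are hypothetical, and the SKIP_FILE_<num> variants are not modeled.

```python
def simulate_copy(files, is_valid, on_error):
    """Model which rows COPY INTO would load for a given ON_ERROR value.

    files maps a staged file name to its parsed rows; is_valid decides
    whether a single row would load without an error.
    """
    mode = on_error.upper()
    if mode not in {"ABORT_STATEMENT", "CONTINUE", "SKIP_FILE"}:
        raise ValueError(f"unsupported ON_ERROR value: {on_error}")
    loaded = []
    for name, rows in files.items():
        good = [r for r in rows if is_valid(r)]
        has_error = len(good) != len(rows)
        if mode == "ABORT_STATEMENT" and has_error:
            # The whole load fails, so nothing is loaded.
            raise RuntimeError(f"load aborted: error in {name}")
        if mode == "SKIP_FILE" and has_error:
            continue  # every row of this file is skipped
        loaded.extend(good)  # CONTINUE drops only the bad rows
    return loaded


def id_is_numeric(row):
    return row["id"].isdigit()


files = {
    "contacts1.csv.gz": [{"id": "1"}, {"id": "2"}],
    "contacts2.csv.gz": [{"id": "3"}, {"id": "x"}],  # one bad row
}
print(simulate_copy(files, id_is_numeric, "skip_file"))  # [{'id': '1'}, {'id': '2'}]
print(simulate_copy(files, id_is_numeric, "continue"))   # [{'id': '1'}, {'id': '2'}, {'id': '3'}]
```

ABORT_STATEMENT is the default for bulk loading with COPY INTO, so without an explicit ON_ERROR setting a single bad row stops the whole load.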

Once the data has been loaded into the target tables, use the REMOVE command to delete the loaded files from the intermediate stage, for example: `REMOVE @my_csv_stage PATTERN='.*.csv.gz';`

Limitations of Migrating GCP MySQL to Snowflake Using CSV files

- No Real-time Data Access: Neither automated data refreshes nor real-time data access is supported by the manual CSV export/import method.

- Technical Knowledge: To ensure correct transfer, a thorough grasp of cloud platforms and SQL scripts is required.

- Manual errors: The number of scripts and manual steps involved makes errors more likely, and debugging and correcting the data takes additional time.

Use Cases

- Data Replication: Snowflake supports advanced features such as data replication across regions and cloud providers, which helps with multi-vendor collaboration, downstream application integration, and traceable data access, as well as zero-copy cloning, which creates copies of data without physically duplicating or exporting it.

- SQL Support: Snowflake supports standard ANSI SQL, including analytical (window) functions, and optimizes queries automatically. It also supports Oracle-style outer joins, in which join conditions are written in the WHERE clause (combined with AND) and the “(+)” operator marks the outer side of the join.

- High Data Availability: Snowflake’s database storage layer provides high availability by synchronously replicating data across multiple disk devices and three availability zones within the same region.

- Storage: Snowflake’s cloud data platform separates compute from storage, so you can scale compute and storage capacity independently.

Also, check out how to migrate data from MySQL to Snowflake to get a better understanding of how you can load data and work with Snowflake.

You can also learn more about:

- MySQL to Snowflake

- Azure MySQL to Snowflake

- Simplifying GCP Setup

- PostgreSQL on Google Cloud SQL to Snowflake

Conclusion

Migrating from GCP MySQL to Snowflake can greatly improve your data management and analytics capabilities. Although manual CSV file techniques can work well for short-term or small-scale data transfers, they are less effective for larger or more complicated migrations. Consider exploring alternative methods like Hevo. It can manage schema conversion, streamline the process, and ensure data integrity.

Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

FAQs

Q1. Is there any way to migrate MySQL data to Snowflake without using a third-party connector?

Yes. Without a third-party connector, you can use a Snowflake internal stage. Export your MySQL data to CSV files, connect to Snowflake from the command line (for example, with SnowSQL) using your Snowflake credentials, and create an internal stage. Upload the files to the stage with the PUT command, then load them into a table with COPY INTO.

Q2. How to transfer data from MySQL to Snowflake?

You can easily transfer data from MySQL to Snowflake using Hevo, which automates the process and ensures real-time, error-free integration without coding. Alternatively, you can use ETL tools or Snowflake’s data-loading features.

Q3. How do you load data from GCP to Snowflake?

To load data from GCP to Snowflake, use Google Cloud Storage as an intermediary: create a storage integration and an external stage in Snowflake that point to your bucket, then run Snowflake’s COPY INTO command to load the data from the stage into your table.
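One detail that trips people up here is the URL prefix: Cloud SQL exports to gs:// URIs, while Snowflake stage URLs for Google Cloud Storage use gcs://. This Python sketch builds the Snowflake statements for a storage integration and an external stage from the export folder’s URI. The integration and stage names are hypothetical, creating an integration requires the ACCOUNTADMIN role or the CREATE INTEGRATION privilege, and you must grant the service account reported by DESC STORAGE INTEGRATION access to the bucket before loading.

```python
def gcs_stage_sql(gs_uri, integration="gcs_int", stage="my_gcs_stage",
                  file_format="mycsvformat"):
    """Return Snowflake SQL statements for loading from a GCS folder."""
    if not gs_uri.startswith("gs://"):
        raise ValueError("expected a gs:// URI such as gs://my-bucket/exports/")
    # Snowflake expects gcs://; keep a trailing slash so the URL is a folder.
    url = "gcs://" + gs_uri[len("gs://"):].rstrip("/") + "/"
    return [
        f"CREATE STORAGE INTEGRATION {integration}\n"
        "  TYPE = EXTERNAL_STAGE\n"
        "  STORAGE_PROVIDER = 'GCS'\n"
        "  ENABLED = TRUE\n"
        f"  STORAGE_ALLOWED_LOCATIONS = ('{url}');",
        # Shows the Google service account that needs bucket access.
        f"DESC STORAGE INTEGRATION {integration};",
        f"CREATE STAGE {stage}\n"
        f"  URL = '{url}'\n"
        f"  STORAGE_INTEGRATION = {integration}\n"
        f"  FILE_FORMAT = (FORMAT_NAME = {file_format});",
    ]


for statement in gcs_stage_sql("gs://my-bucket/exports"):
    print(statement, end="\n\n")
```

After the grant is in place, COPY INTO mycsvtable FROM @my_gcs_stage; loads the files without downloading them first.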