AWS S3 is a completely managed general-purpose storage mechanism offered by Amazon based on a software-as-a-service business model. S3 can be used to serve any storage requirement ranging from a simple backup service to archiving a full data warehouse. It can be used for any requirement of up to 5 TB of data. In enterprise data pipelines, it is typical to use S3 as a staging location or a temporary data dumping location before loading data into a Data Warehouse for offline Data Analysis. For customers staying within the AWS ecosystem, a Redshift is a great option as a completely managed Data Warehouse service.

This post will introduce you to Amazon S3 and Redshift. Moreover, it will explain 3 step-by-step methods which will help you to connect Amazon S3 to Redshift easily. Read along to understand more about the steps, benefits, and limitations of these methods.

Table of Contents

Prerequisites

- An S3 account.

- A Redshift account.

- Working knowledge of Redshift commands.

Method 1: Using the COPY Command to Connect Amazon S3 to Redshift

Redshift’s native COPY command allows you to use AWS S3 as your data source and perform a direct bulk data load process. The allowed data source formats are CSV, JSON, or AVRO.

Method 2: Using AWS Services to Connect Amazon S3 to Redshift

AWS offers a number of services that can be used to perform data load operations to Redshift Data Warehouse. AWS Glue and AWS Data pipeline are 2 such services that enable you to transfer data from Amazon S3 to Redshift.

Method 3: Using Hevo’s No Code Data Pipeline to Connect Amazon S3 to Redshift

Hevodata, an Automated No-code Data Pipeline provides a hassle-free solution and helps you directly transfer data from S3 to Redshift within minutes. Hevo is fully managed and completely automates the process of not only loading data from your desired source but also enriching the data and transforming it into an analysis-ready form without having to write a single line of code.

Get Started with Hevo for FreeWhat is Amazon S3?

As mentioned above, AWS S3 is a completely managed object storage service that is accessed entirely through web APIs and AWS-provided CLI utilities. Like any completely managed service offered by Amazon, all operational activities related to pre-provisioning, capacity scaling, etc, are abstracted away from users. The customers are required to pay for the amount of space that they use. Access controls are comprehensive enough to meet typical compliance requirements. It offers granular access controls to meet all kinds of organizational and business compliance requirements.

As a highly scalable data lake, Amazon S3 is a critical component for modern data architectures, facilitating the export of table data and serving as a staging area to load that data into powerful platforms like Redshift, Snowflake, and BigQuery for analytics, or into Elasticsearch for full-text search.

Key Features of Amazon S3

- AWS Athena and AWS Redshift spectrum allow users to run analytical queries on data stored in S3 buckets. S3 offers high availability. This comes from the fact that it stores data across a cluster of distributed servers. This approach means there is a related propagation delay and S3 can only guarantee eventual consistency. S3 writes are atomic though.

- Structurally, S3 is envisioned as buckets and objects. A bucket is a container for storing all kinds of objects.

- It also represents the highest level of the namespace. Buckets contain objects which represent the basic storage entity. An object is a fusion of the stored object as well as its metadata. A unique key and version identify an object uniquely.

- To bridge the gap with legacy systems, modern services provide a simple FTP S3 gateway or a more secure SFTP S3 integration for seamless file transfers directly to cloud storage.

- By defining an Amazon S3 table with a service like AWS Glue, business intelligence tools such as Tableau can directly query raw data files for visualization.

What is AWS Redshift?

AWS Redshift is capable of executing complex queries over millions of runs and returning instant results through a Postgres-compatible querying layer. A massively parallel architecture made using a cluster of processing nodes is responsible for this capability. One of these nodes acts as the leader and handles activities related to client communication, query execution plans, and work assignments to other nodes.

Key Features of Amazon Redshift

- Redshift provides customers with the flexibility to choose from different types of instances that suit their budget and the nature of use cases.

- Redshift can scale up to 2 PB of data and this is done by adding more nodes, upgrading nodes, or both.

- Redshift offers a unique feature called the concurrency scaling feature which makes scaling as seamless as it can without going over budget and resource limits set by customers.

Learn the difference between Amazon Redshift and PostgreSQL to get a better understanding of how your data is stored in your Redshift destination.

Method 1: Using COPY Command Connect Amazon S3 to Redshift

Redshift’s COPY command can use AWS S3 as a source and perform a bulk data load. The data source format can be CSV, JSON, or AVRO.

Assuming the target table is already created, the simplest COPY command to load a CSV file from S3 to Redshift will be as below. The S3 data location here is the product_details.csv. Please ensure Redshift tables are created already.

copy product_tgt1

from 's3://productdata/product_tgt/product_details.csv

iam_role 'arn:aws:iam::<aws-account-id>:role/<role-name>'

region 'us-east-2';The above approach uses a single CSV file to load the data. A better approach in the case of large files will be to split the file to multiple smaller ones so that the COPY operation can exploit the parallel processing capability that is inherent to Redshift. This can be done using a manifest file that has the list of locations from which COPY operation should take its input files.

By default, the COPY operation tries to convert the source data types to Redshift data types. This implicit conversion can lead to unanticipated results if done without proper planning. To mitigate this, Redshift provides configuration options for explicit data type conversions. A configuration file can also be used to set up the source and target column name mapping.

Learn about the COPY command in detail to get a better understanding of how it can be used.

Method 2: Using AWS Services to Connect Amazon S3 to Redshift

AWS provides a number of alternatives to perform data load operations to Redshift. AWS Glue and AWS Data pipeline are two such services that can fit this requirement:

- Using AWS Data Pipeline to Connect Amazon S3 to Redshift

- Using AWS Glue to Connect Amazon S3 to Redshift

Step 2.1: Using AWS Data Pipeline to Connect Amazon S3 to Redshift

AWS data pipeline hides away the complex details of setting up an ETL pipeline behind a simple web UI. It does this by offering template activities that users can customize based on their requirements. The template activity which we will use here is the RedshiftCopyActivity. This activity supports S3 as a source type.

Different insert modes are possible in RedshiftCopyActivity – KEEP EXISTING, OVERWRITE EXISTING, TRUNCATE, APPEND.

KEEP EXISTING and OVERWRITE EXISTING are here to enable the users to define if the rows with the same primary key are to be overwritten or kept as such

AWS Data pipeline and the features offered can be explored in detail by reading this simple guide.

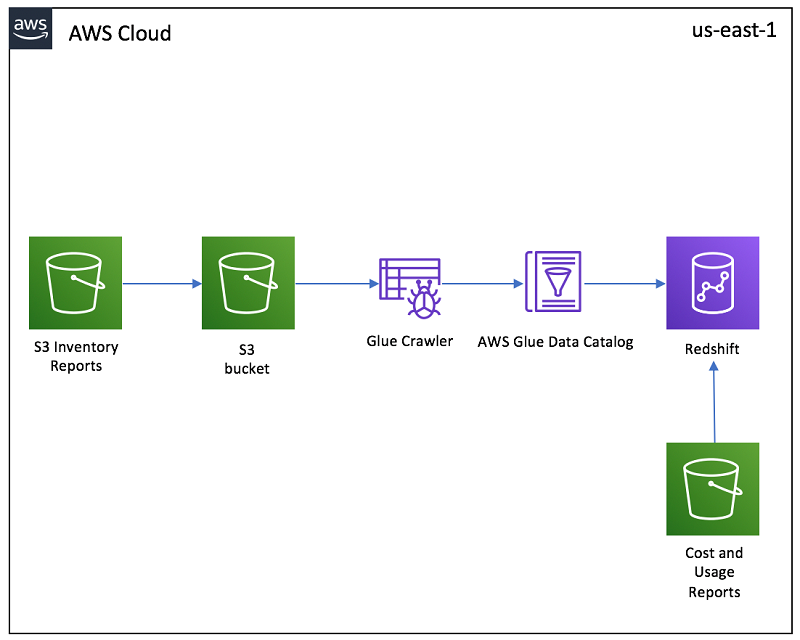

Step 2.2: Using AWS Glue to Connect Amazon S3 to Redshift

Glue is an Extract Transform and Load tool as a web service offered by Amazon. It works based on an elastic spark backend to execute the processing jobs. Glue supports S3 locations as a storage source in Glue scripts. Internally It uses the COPY and UNLOAD commands to accomplish copying data to Redshift, but spares users from learning the COPY command configuration by abstracting away the details. It uses a script in its own proprietary domain-specific language to represent data flows.

Glue uses a concept called dynamic frames to represent the source and targets. S3 location is a supported dynamic frame. The complete script will look as below.

s3_connection_options = {"paths": [ "s3://src_data/src_table_1", "s3://src_data/src_table_2"]}

df = glueContext.create_dynamic_frame_from_options("s3_table", s3_connection_options)The dynamic frame created using the above commands can then be used to execute a copy process as follows.

redshift_connection_options = {

"dbtable": "target_table",

"database": "target_database",

"aws_iam_role": "arn:aws:iam::account-id:role/role-name"

}

glueContext.write_dynamic_frame.from_jdbc_conf(

frame = s3_table,

catalog_connection = "redshift_con_name",

connection_options = redshift_con_options,

redshift_tmp_dir = args["TempDir"])Writing a custom script for a simple process like this can seem a bit convoluted. Glue offers a simpler method using a web UI to automatically create these scripts if the above configurations are known. You can learn more about how AWS Glue works and how you can work with it seamlessly.

Limitations of the Connecting S3 to Redshift Manually

The first method described here uses Redshift’s native abilities to load data from S3. This method has a number of limitations which are as follows:

- This will work only in case of a first-time bulk load and if your use case needs incremental load, then a separate process involving a staging table will need to be implemented.

- The implicit data type conversions that happen by default can become a serious issue leading to data corruption.

- In case you are looking to transform any data before loading to Redshift, these approaches do not accommodate that.

Method 3: Using Hevo’s No Code Data Pipeline to Connect Amazon S3 to Redshift

Step 3.1: Configure Amazon S3 as your Source

Step 3.2: Configure Redshift as your Destination

That’s it! You have successfully loaded data from your S3 source to your Redshift destination by following these 2 easy steps.

Significant Features of Hevo

- Transformation: Hevo provides a drag-and-drop transformation feature, a user-friendly method of performing simple data transformations. Alternatively, you can use the Python interface for specific data transformations.

- Fully Managed Service & Live Support: Hevo manages the entire ETL process, from data extraction to loading, ensuring flawless execution. Additionally, Hevo provides round-the-clock support for your data integration queries via email, call, or live chat.

- Pre-Built Connectors: Hevo offers 150+ pre-built connectors for various data sources, enabling you to establish an ETL pipeline quickly.

- Live Monitoring: Hevo provides live monitoring support, allowing you to check the data flow at any point in time. You can also receive instant notifications about your data transfer pipelines across devices.

Conclusion

This article discussed Amazon S3 and Redshift and explained the 3 methods using which you can connect S3 to Redshift with ease. AWS Services like Glue and Data pipeline abstracts away such details to an extent, but they can still become overwhelming for a first-time user. The first 2 methods involve manual efforts using significant engineering bandwidth and require knowledge of the AWS environment. For someone to quickly create a load job from S3 to Redshift without going in deep into AWS configurations and other details, an ETL tool like Hevo which can accomplish this in a matter of clicks is a better alternative.

Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

Frequently Asked Questions (FAQs)

Q1) Can we load data from S3 to Redshift?

Yes, you can load data from S3 to Redshift. Using tools like Hevo, you can automate this process with ease, transferring data directly from S3 to Redshift without manual steps.

Q2) Does Redshift use S3?

Yes, Amazon Redshift relies on S3 for efficient data storage and backups, allowing seamless data import and export between Redshift and S3.

Q3) How to connect Redshift database to S3 bucket?

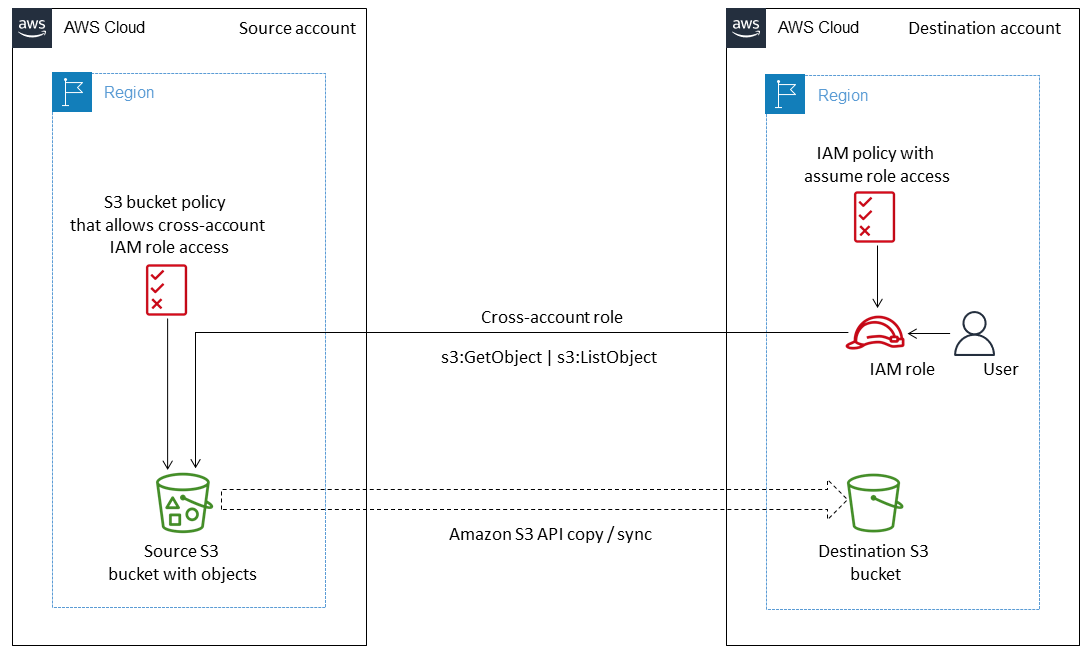

You can connect Redshift to an S3 bucket by setting up an IAM role with appropriate permissions, then use COPY or UNLOAD commands in Redshift to move data between the two.