Data scientists today spend 80% of their time cleaning and transforming data, and only 20% actually analyzing it. This statistic stings because it’s true. Every day, data teams struggle with messy, incomplete, and inconsistent data, and they waste hours fixing what should’ve been caught. Whether you’re a data engineer scaling pipelines, an analyst waiting on cleaner tables, or an analytics engineer bridging the two, the main culprit is often the same: poor data transformation practices.

The cost of poor transformation practices negatively impacts a business’s ability to achieve its goals, as companies seek faster insights and AI-ready pipelines. Let’s explore the 10 data transformation best practices that will enable you to simplify your data processes and offer premium data at the lowest possible cost. You will also learn how Hevo optimizes your transformation pipelines in the background. These techniques will help you make better and more cost-effective business decisions.

Table of Contents

What is Data Transformation?

Data transformation is the process of organising and standardising structured data from its unstructured form. It is the main stage in modern data workflows, where raw data is cleaned, normalised, validated, and enriched so it can be reliably used for reporting and analytics.

The process can either be ELT or ETL: ELT (Extract-Load-Transform) process transforms data after it is loaded into a data lake or warehouse while ETL (Extract-Transform-Load) processes transforms data before it is loaded. Both processes play pivotal roles in moving data from sources to destinations while applying necessary transformations. You can read here to learn more about ETL in detail.

There are many ways you can transform your data. You can change file formats, aggregate or split datasets, derive new features, and apply business logic. The messy data becomes organized and reliable, just like turning crude oil into fuel that powers data-driven insights. Data transformation is a crucial step in the data pipeline where raw data is converted into a meaningful format suitable for analysis and reporting. To understand more about the data transformation process in detail, check out this comprehensive guide on data transformation.

10 Data Transformation Best Practices to Follow

- First Ask Why: Align Transformations with Business Goals

- Know Your Data Before You Touch It

- Clean and Validate Early

- Only Use Modularized, Tested, and Versioned Transformations

- Automate Your Workflows Wherever Possible

- Build Pipelines That can Scale

- Monitor, Log, and Optimize Everything

- Pick the Right Tools for the Right Job

- Support Real-Time and Streaming

- Keep your Data Protected and Compliant

1. First Ask Why: Align Transformations with Business Goals

First, you need to ask yourself why you are using data transformation. If you do not know your goals, your efforts will be wasted. Teams may design dashboards that fall short of expectations or transform data that is not required. Ask stakeholders what decisions they are making and which KPIs are most important for starting every project. Then trace those goals back to the source data using a simple schema or checklist.

Set the rules for your business by deciding what a customer or a success means. These definitions can be written down in a consistent way with tools like dbt. For example, Starbucks connected raw data to clear goals like daily performance and product trends. These initiatives enabled all of their thousands of stores to have accurate and reliable insights.

2. Know Your Data Before You Touch It

You can’t fix what you don’t understand. Many data issues arise because no one reviewed the source closely. Mixed date formats or unexpected text values can cause transformations to fail. You should profile your data before writing any code. Do a regular check for nulls, duplicates, format issues, and outliers. Confirm findings with data owners and document them in a shared space.

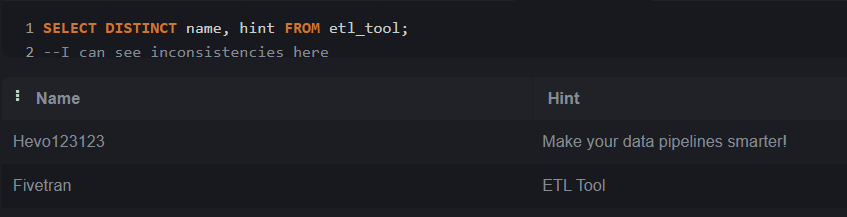

There are many tools that can help automate this step. Simple SQL queries like SELECT DISTINCT can reveal inconsistencies. Platforms like Snowflake and Hevo let you preview data and review the schema. Mayo Clinic released a course for healthcare professionals that focuses on maintaining healthcare data quality, early profiling, and generating consistent reports, such as catching non-numeric age entries like “twenty-five” or wrong date formats.

3. Clean and Validate Data Early

According to Gartner research, poor data quality costs organizations an average of $12.9 million per year. Bad data is a leading cause of broken pipelines. If loaded without checks, it can lead to incorrect reports and wasted time resolving avoidable issues. You should clean and validate early which includes standardizing, trimming, handling NULL values, and removing duplicates. You can set up basic checks for freshness and completeness as data enters your system, like in dbt.

You don’t need to build everything from the beginning. There are many tools that offer quality data cleanups. dbt offers simple tests for NULLs, joins, and outliers, while Hevo supports in-pipeline validations using SQL or Python. Netflix uses RAD to detect anomalies in payments, signups, and user devices and generate alerts if there is an unusual pattern within the data, helping catch issues fast.

4. Only Use Modularized, Tested, and Versioned Transformations

When transformation code gets too complex, it becomes challenging to maintain. It is risky to update long SQL scripts or pipelines without breaking something. It is better to divide the work into smaller and reusable parts. In dbt, you can create separate models for daily orders or monthly summaries, test them individually, and store them in Git with branching for safe changes.

You can keep track of versions and modular codes at the same time. Git-based processes can be used with tools like dbt and Hevo. Shopify leverages dbt for its business use cases, testing every change before deployment. This approach leads to safer, faster, and more reliable pipelines.

5. Automate Your Workflows Wherever Possible

When you change data manually, you might miss steps, write broken code, and cause production delays. Without automation, errors occur a lot, and environments fall out of sync. When you set up CI/CD tools for your data pipelines, every change is tested automatically. If the tests pass, the pipeline continues but they fail, it stops.

You can test changes to the code with tools such as GitHub or Jenkins while Hevo handles scheduling. Infrastructure-as-code tools such as Terraform help keep your environment settings consistent. You can use Slack that uses dbt and Airflow to run tests in a dev warehouse before deploying. The goal is faster and safer updates with fewer errors.

6. Build Pipelines That Can Scale

Data volumes are growing rapidly, often doubling or even tripling each year. Pipelines built today may slow or fail when the scale of data increases. You should design your pipelines to make them scalable from the start. You can use cloud warehouses such as Snowflake that separate storage and compute, and leverage parallel-processing tools such as Apache Spark when handling large datasets. You can learn more about data transformation in Snowflake and its unique features here.

You can use an ELT approach too: load the raw data first and then transform it inside the warehouse. Platforms like Hevo manage scaling automatically. Cisco improved performance by moving jobs from on-prem Hadoop to Snowflake, improving productivity by 30% and reducing hours of work to minutes.

7. Monitor, Log, and Optimize Everything

If you don’t monitor pipelines, bad data can go unnoticed and your reports are badly affected. Important pipeline metrics such as row counts, job time, and errors must be logged. You can set up alerts in Grafana or CloudWatch to help you find bugs quickly and resolve the problems that cause them.

Many tools help with monitoring: Snowflake alerts on long queries, Hevo provides real-time stats, and Slack can notify teams instantly. Fintech startup Plaid uses this setup to cut recovery from hours to minutes. Good monitoring makes sure that data is correct, updated, and reliable.

8. Pick the Right Tools for the Right Job

You should not use every available tool for data transformation. Poorly chosen tools and platforms make systems challenging to manage. You should assess your team’s needs, such as data volume, speed, and technical skills, before selecting tools. Keep your stack as simple as possible.

As a hypothetical use case, a retail company can use Hevo for data ingestion, Snowflake for data storage, and dbt for transformation. By linking dbt models to business terms, this uniform setup can speed up onboarding and reduce errors, all while making it easier for people to collaborate together. You can avoid niche or trendy tools without strong community support to ensure better adoption and performance.

9. Support Real-Time and Streaming

Batch-only pipelines can’t support real-time analytics well. Streaming data takes too long or may not work at all. You must identify use cases needing real-time insights, such as fraud and anomaly detection, and use streaming tools like Apache Kafka or Flink. You can apply idempotence to handle duplicate records and maintain accuracy.

Uber and other ride-hailing services use Kafka as a streaming system to ingest ride events, enabling fraud detection in seconds. Moving from batch jobs to real-time streaming saves processing time by hours. Streaming requires separate workflows or full migration to streaming tools. Here, we achieve low latency, fresh data, and stability for large events.

10. Keep your Data Protected and Compliant

Data pipelines often handle sensitive information, so security is critical at every phase in the data transformation process. You may have to deal with sensitive data, such as financial or healthcare information. There is always a chance that protected data could be leaked through data pipelines if security measures are not put in place. Such incidents could result in fines and breaches. To keep data safe, businesses should label critical fields and use masking or encryption while processing it. Role-based access rules ensure that only authorized individuals can access or modify pipelines. You must also secure data, keep audit logs, follow rules like GDPR and HIPAA, and clearly show the history of your data to be compliant.

Let’s look at the health care business as an example. When a healthcare company uses dbt on a HIPAA-compliant cloud, it automatically drops or hashes private data. The process protects privacy and lets the company do analytics. People make common mistakes such as copying full logs with sensitive info and lacking audit trails. You can succeed in data compliance by monitoring logs, enforcing access controls, passing compliance scans, and speeding up data governance proof.

What Are the Business Benefits of Data Transformation?

1. Fuel Data-driven Decisions

Clean data is the first step to reliable analytics. With high-quality pipelines, teams create high-quality datasets that stakeholders can trust. This means business reports and machine learning models are based on accurate information, enabling faster and more confident decisions. IDC Market Research has shared an important insight that transforming data can directly boost the bottom line by preventing the “20-30% of revenue” loss often caused by poor data. This statistic shows the huge potential loss saved by effective data transformation practices.

2. Speed up your Analytics

Well-designed transformations speed up analytics. When data arrives in a consistent format, data analysts and BI tools can derive insights immediately. For example, normalized and aggregated tables save time when cleaning data. This means metrics and dashboards refresh faster: sometimes in seconds instead of hours! Clean and normalized data helps people make decisions faster and reduce redundancy.

3. Scale without Fear

Pipelines should be built to handle vast amounts of data without major changes in infrastructure. A scalable pipeline reduces bottlenecks so that analytics workloads can expand with the business. Building for scale often reduces the total cost of ownership, since you pay only for additional capacity as needed.

4. Automate Smarter not Harder

Pipelines should save time and reduce errors. With pipelines scheduled and orchestrated in tools like Airflow or Hevo, redundant tasks and quality checks can be automated. Automation cuts costs and speeds up deployment. You can run data flows in real time all day without human intervention.

5. Stay Compliant and Regulated

Make pipelines that help you sleep well at night. You stay compliant with the data governance and compliance rules if you apply the best practices. For example, standardizing formats and enforcing validation make it easier to track data lineage. Encryption and masking rules applied during transformation protect sensitive information, helping businesses meet regulations such as GDPR or HIPAA. Make your business confident enough to use customer data without legal risk.

Check out our blog on Snowflake Data Transformation to explore how you can efficiently transform and manage your data in the Snowflake ecosystem.

How will Hevo make your Data Pipelines Smarter?

Hevo Data is a fully managed, no-code pipeline platform that simplifies data transformation end-to-end. It automates ingestion from 150+ sources and performs both ELT and streaming transformations with built-in connectors and schema mapping.

You don’t have to worry about managing servers or complex orchestration with Hevo. The platform grows with your data instantly, and transformations are running in parallel. It even works with popular tools like Snowflake and dbt, which makes it easy to deploy your models. Hevo saves development time by a huge amount and gets your data pipeline ready for AI by giving you real-time previews, automated error handling, and Git version control.

Ready to build your first AI data pipeline? Start your free Hevo trial today and see how easy it is. No infrastructure to manage, instant scaling, pay-as-you-go!

FAQs

1. What are the four types of data transformation?

Data transformation includes organizing, enriching, cleaning and combining data. Structuring helps in making data more consistent such as date formats. Data enrichment adds additional context or fields to existing data. Data cleaning helps in removing duplicates, errors and inconsistencies. Aggregation summarizes or joins datasets which makes them easier to analyze, model and report.

2. What are the principles of data transformation?

The main principles of effective data transformation are accuracy, integrity, automation and consistency. Accuracy and integrity of data should be maintained. Processes should be automated with clear documentation. Consistent standards should be applied and transformations should minimize data loss.

3. What are data transformation techniques?

The basic techniques include filtering, sorting, joining, and pivoting. But when you move to advanced, there are methods involving normalization, smoothing, aggregation, discretization, and feature engineering. All of these techniques clean and shape your data, which improves it for analysis and helps you find patterns or make data processes run more smoothly.

4. What is a data transformation strategy?

A data transformation strategy is a structured plan to convert raw data into an organized form that is ready for analysis. It includes clear goals, step-by-step processes (data profiling, aggregating, smoothing, cleaning, etc.), and right tool selection. The strategy ensures that the data quality is perfect to support reliable and scalable data pipeline