The current data-driven era produces massive event data collection through business digital communications. The efficient analysis of this massive data set proves vital for both decision-making excellence and operational optimization and company expansion purposes. However, when dealing with billions of records daily, processing entire datasets repeatedly can become computationally expensive and time-consuming.

dbt (Data Build Tool) represents a widely accepted analytics solution that data engineers and analysts strongly prefer. The rising popularity shows how dbt has become a highly demanded transformation tool among professionals working with data. The main strength of dbt lies in its ability to enable incremental materialization that enables organizations to work with only new information instead of full database reloads.

Using dbt incremental models boosts operational speed and enhances system performance along with reducing operational expenses. The data transformation system utilizes dbt incremental models to achieve scalability on different platforms, including Snowflake, BigQuery, and Redshift.

The article explores dbt incremental models, covering their core principles, installation process, best practices, and performance optimization strategies. It also explains how to manage updates effectively, prevent common issues, and leverage Snowflake’s advanced features for maximum efficiency.

Table of Contents

What is dbt Incremental Materialization?

Understanding dbt Incremental Materialization

Materialization in dbt refers to how models are stored and maintained in the data warehouse. The selected materialization strategy determines performance levels, storage efficiency, and query execution speed in dbt while providing four core materialization options.

- View: A virtual table that dynamically runs queries without storing data.

- Table: The table represents a physical data storage that maintains transformed data to accelerate query execution speed.

- Ephemeral: The transformation exists only during execution because it does not store anything after completion.

- Incremental: The incremental model updates fresh data with modifications without reconstructing the database completely.

Struggling with manual SQL and scattered workflows? Hevo Transformer, powered by dbt Core™, lets you connect to Snowflake warehouse in minutes, automate dbt workflows, and collaborate effortlessly with Git version control.

🚀 Seamless Warehouse Integration – Auto-fetch schemas, no setup hassle

⚡ Effortless dbt Workflow Automation – Build, test, and deploy in one place

📂 Built-in Version Control – Track every change with Git

Why Use Incremental Models?

Through dbt tools, organizations can optimize data transformation operations by processing updated records rather than redeploying the whole dataset. The method enables fast processing and minimizes resource needs together with current analytics updates.

Traditional ETL processes reload complete data from its source through Extract, Transform, and Load operations that waste efficiency for large datasets. Incremental models perform efficient transformations because they maintain a streamlined process that updates only recent changes.

Implementing incremental models leads to quicker queries along with reduced expenses while supporting real-time analytics capabilities.

Key Benefits of Incremental Models

- Faster Processing: The system processes data at high speed because it updates fresh or adjusted records instead of entire tables.

- Cost Optimization: Cloud computing costs decrease through the optimization measure, which avoids performing unnecessary full-table refreshes.

- Enhanced Performance: Ideal for handling large-scale, event-driven datasets efficiently.

When to Use Incremental Models?

Incremental models are especially beneficial when working with large datasets or frequent data updates. The processing system operates with higher efficiency since it handles necessary changes rather than reloading entire datasets.

The following situations show where incremental models give important performance benefits:

- Frequent Data Updates: The data pipeline benefits from improved performance through incremental models when it operates on daily or real-time updates.

- Handling Large Datasets: Large dataset handling through reprocessing creates expensive computational demand and time requirements. The updated data processing becomes much faster in incremental models since they only modify the recently modified data.

- Optimizing Complex Transformations: Time-efficient execution results from performing transformations only on modified data when the majority of your data stays unchanged.

- Cost-Effective Data Processing: The processing costs related to data remain affordable within cloud-based systems because they directly link to the amount of stored information. The storage requirements and processing costs decrease because of incremental models, which require the minimum possible resources.

Creating an Incremental Model Using dbt and Snowflake

Prerequisites

You need these components for building your dbt incremental model:

- dbt command line tool

- A dbt-Snowflake package

- A Snowflake account with account admin access

- Git

- Python 3.7+ or Higher

You can get the prerequisites by following these links if you use Windows.

A comprehensive row-by-row scan for updates proves inefficient when processing large datasets because it causes longer execution times and higher expenses. Through its method of detecting new and modified records performance optimization occurs while decreasing query load and boosting operational efficiency.

Data processing occurs faster and needs fewer resources when using this approach which makes it suitable for scalable data transformations.

We will explore all the steps necessary for building an incremental model through Python with dbt and Snowflake.

1)Set up a dbt and Snowflake Account

Use the following procedures to deploy dbt combined with Snowflake integration:

1️) Install dbt:

The installation of dbt requires users to execute this command:

2) Set Up a Snowflake Account:

- Open your Snowflake account to proceed.

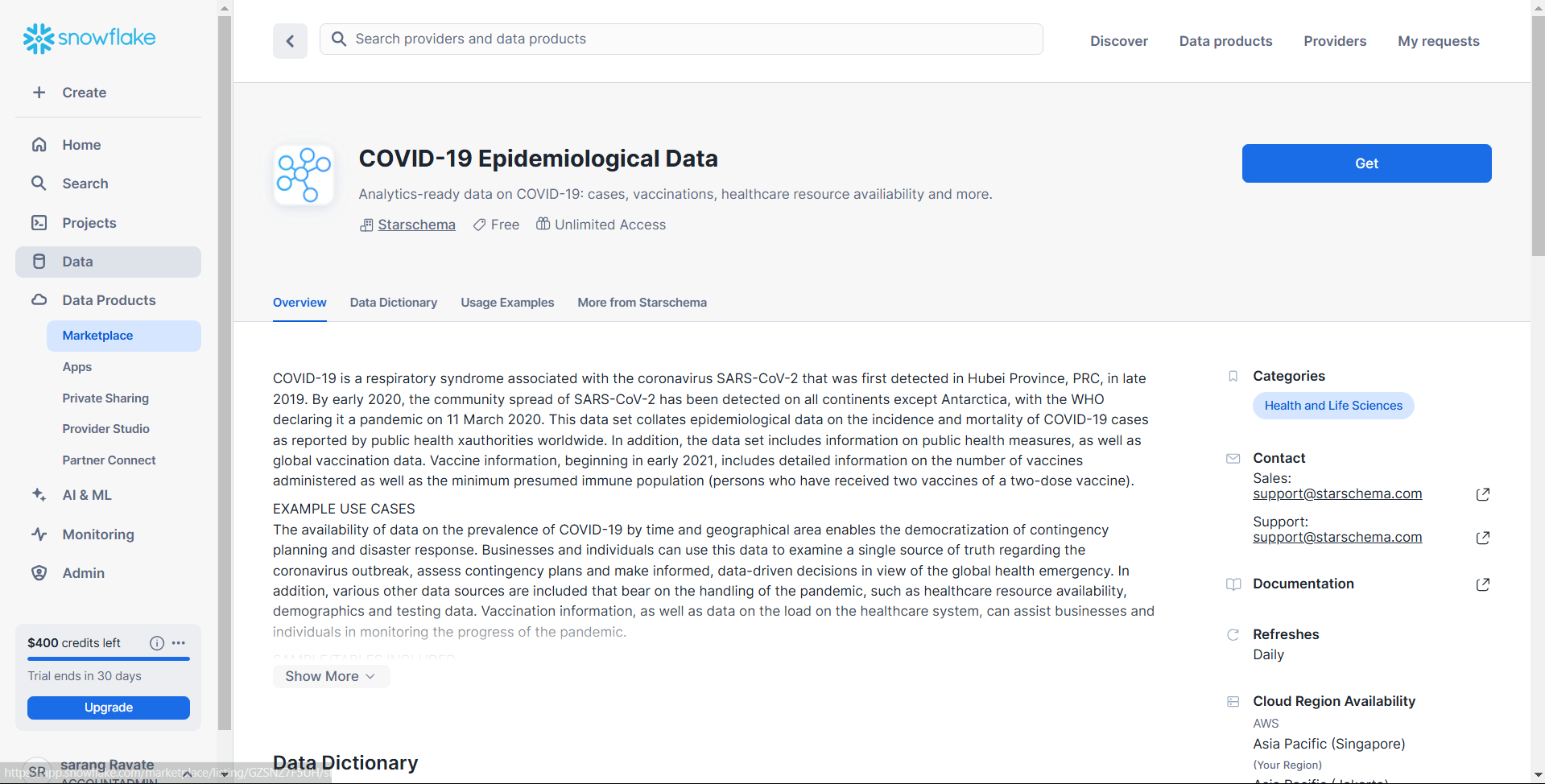

- Navigate to the Marketplace section.

- Access the search bar to identify the dataset called “COVID-19 Epidemiological Data.” and click Get to add it to your Snowflake account.

- Choose the provider-verified data source from the list and proceed with selecting the dataset.

- Your Snowflake account will receive the database after you select Get from the menu.

In addition, you will be able to analyze and test using dbt incremental models with this dataset.

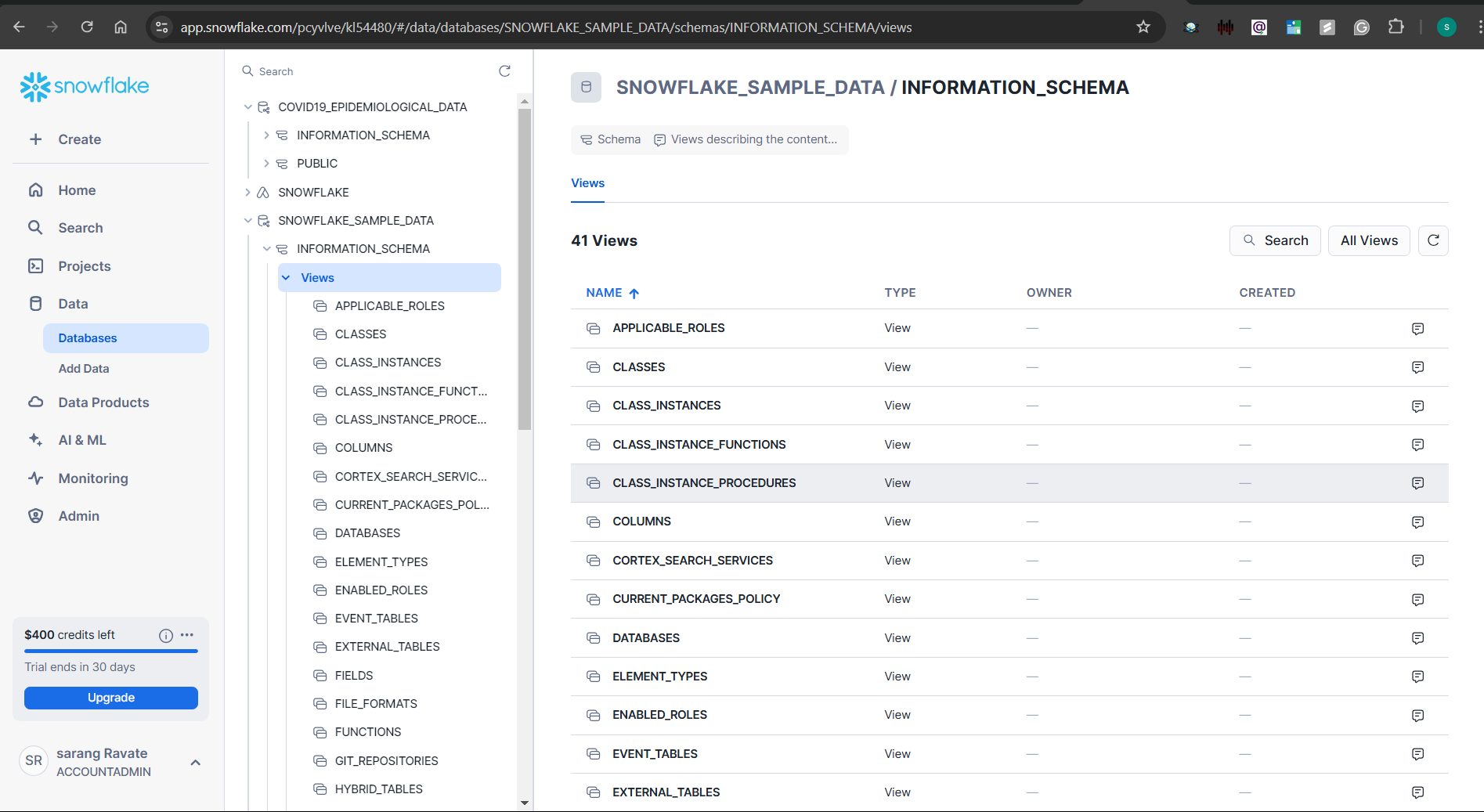

3) Verify that the COVID-19 Epidemiological Data information appears in the list:

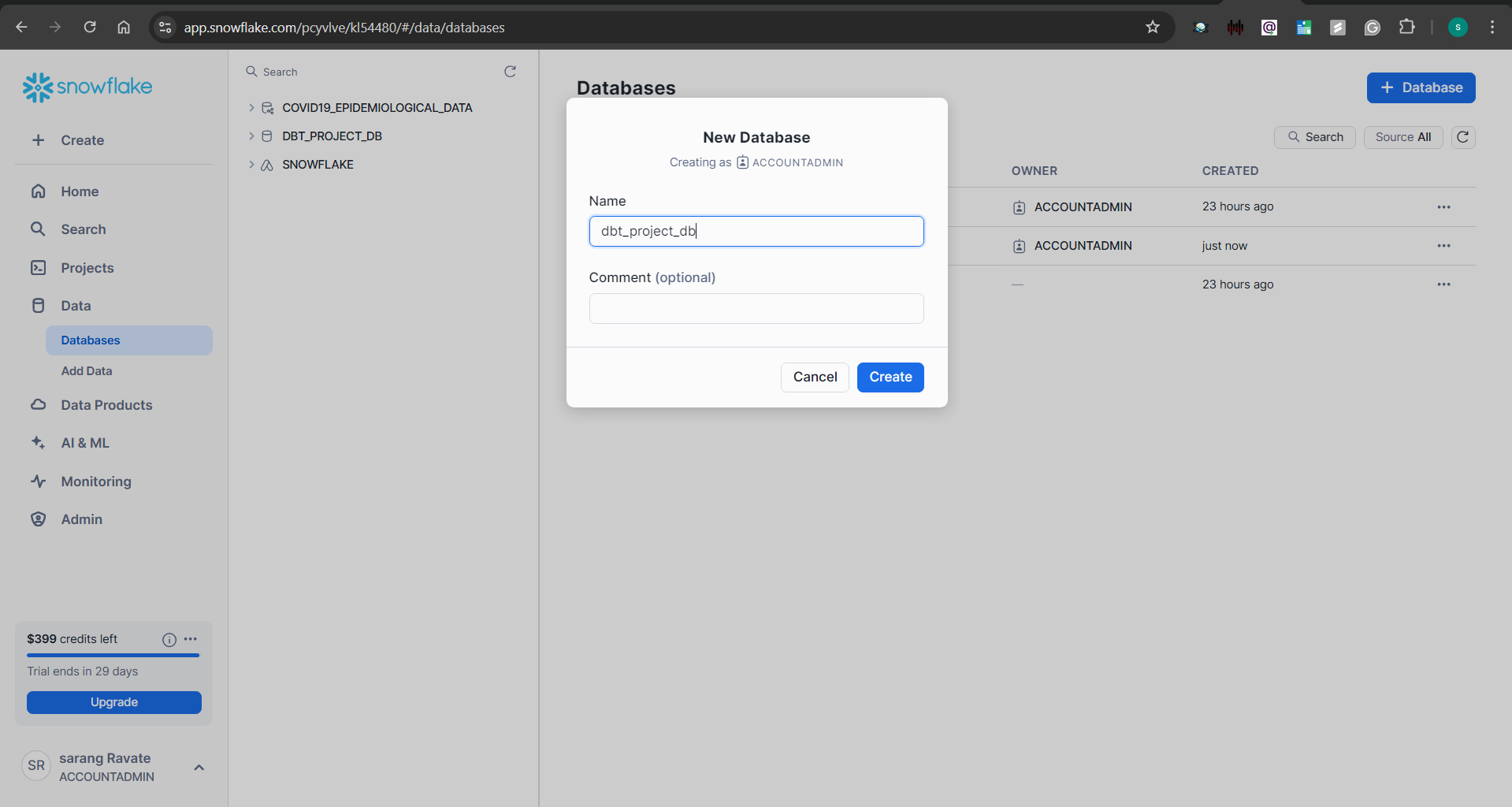

Users should navigate to Data > Databases inside their Snowflake account.

Check that COVID-19_EPIDEMIOLOGICAL_DATA exists within the list of available databases.

4) Now click the +Database button, as shown in the screenshot below, and create a new database. Enter a name for the new database and click on Create. This database will be used as a dbt access point to create and store your tables and views.

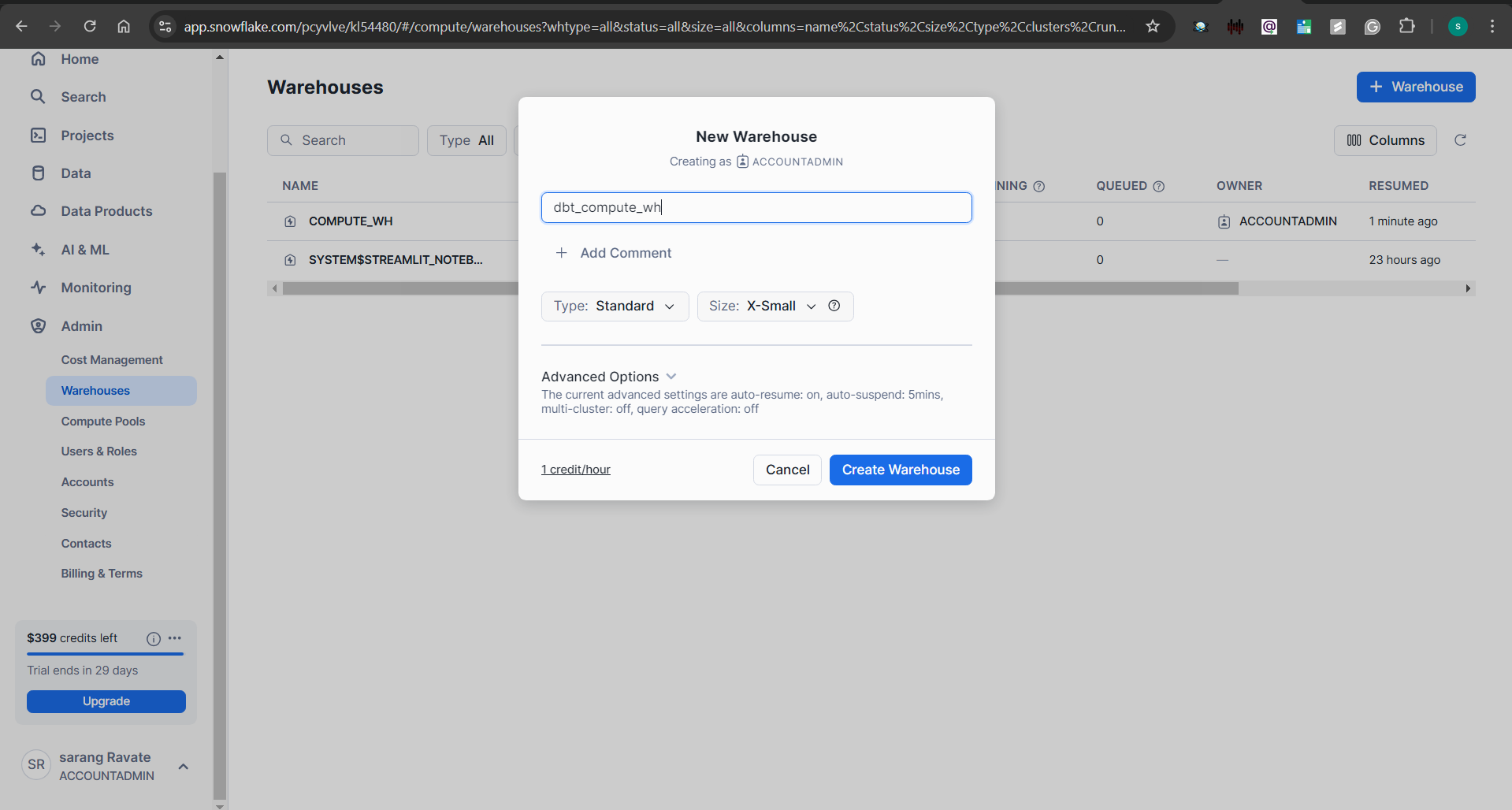

5) Next, create a warehouse on your Snowflake account. To create a warehouse, click on Admin Warehouses. Then, click on the + Warehouse button to create a warehouse. Name the warehouse and click on the Create Warehouse button.

2. Set Up Your dbt Project

- The next step to create a dbt incremental model is to install dbt-Snowflake. Create a Snowflake connection by downloading the dbt-snowflake adapter package.

- To install the dbt-snowflake package, type the following command in your command prompt.

pip install dbt-core dbt-snowflakeRun the Initialization Command:

dbt init

- Enter a Project Name: Use letters, digits, or underscores, then press Enter.

- Select the Database: Choose Snowflake when prompted.

- Provide Snowflake Credentials: Enter your username, password, role, warehouse name, and database name.

Check the Created Project Folder

- A new folder following your project name will become available after executing dbt init.

- Default configuration files and default files exist within this folder.

Navigate to the Project Directory

- Use the cd command to move into the newly created folder:

cd your_project_name

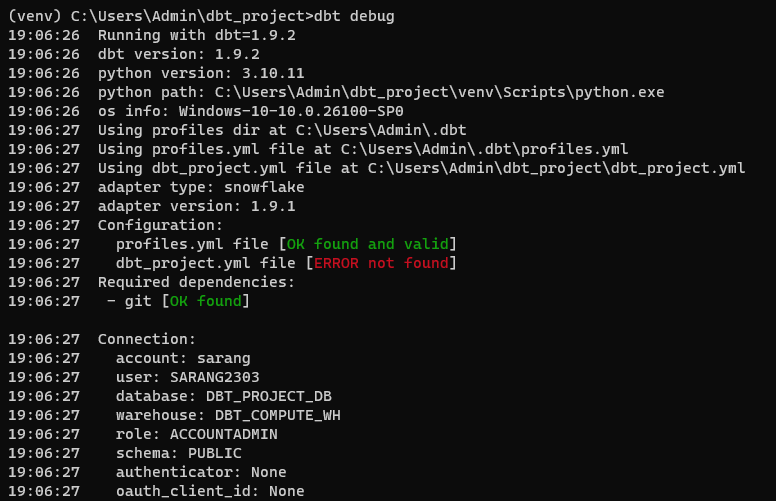

Next, run dbt debug to confirm the connection to Snowflake. On successful connection with Snowflake, you will see the “All checks passed!” in the command prompt.

3. Create a dbt Incremental Model with Snowflake

The completion of your Snowflake account setup with dbt marks your successful work. You can employ dbt to develop incremental models at this point.

Users can confirm that the Snowflake Sample Data contains various datasets within different schemas in their Snowflake account. The search page contains them in this section.

Databases > SNOWFLAKE_SAMPLE_DATA > Schema Name > Tables/Views.

For example, if you are working with epidemiological data, you can find relevant tables under:

Databases > SNOWFLAKE_SAMPLE_DATA > COVID19_EPIDEMIOLOGICAL_DATA > Tables/Views.

- To start building an incremental model with dbt, create a .sql file within the models’ folder of your dbt project. The models folder holds the transformation logic of your dbt project.

- Copy the below code in your .sql file.

{%

config(

materialized='incremental',

unique_key='ROLE_NAME'

)

%}

SELECT

GRANTEE,

IS_GRANTABLE,

ROLE_NAME,

ROLE_OWNER

FROM {{ source('SNOWFLAKE_SAMPLE_DATA','COVID19_EPIDEMIOLOGICAL_DATA') }}.ROLE_DETAILS

{% if is_incremental %}

WHERE ROLE_NAME > (SELECT MAX(ROLE_NAME) FROM {{ this }})

{% endif %}Replace {SNOWFLAKE_SAMPLE_DATA} with COVID19_EPIDEMIOLOGICAL_DATA, the name of your imported dataset, and save the file.

You can save the file, enabling the incremental model to run automatically through the dbt run command. The system links to the target database to execute SQL model files which materialize all data models.

Best Practices for Using dbt Incremental Models

Database performance and data reliability are enhanced when working with dbt and Snowflake by applying this set of guidelines.

- Define a Unique Key for Efficient Updates

Every dbt model requires a unique_key for duplicate prevention also incremental updates and accurate results. A unique key assignment in dbt models guarantees consistent data results between execution runs. - Use Partitions for Large Datasets

Date-based partitions serve as a method to optimize the handling of large database datasets. Data partitioning works as a query optimization method which decreases the amount of scanned data. - Monitor Incremental Runs

The effectiveness of incremental models should be checked through regular analysis of logs and metadata tracking. Early anomaly detection occurs through unique key specifications which stops data mismatches from occurring. - Leverage dbt Tests

Built-in dbt tests including not_null and accepted_values and unique tests ensure the preservation of data integrity while checking correctness through accepted_values. - Optimize Query Performance with Snowflake Features

Utilize Snowflake’s clustering keys and result caching to enhance query speed and reduce computational costs. These features improve overall efficiency in large-scale analytics.

Common Challenges and Solutions in dbt and Snowflake

1. Handling Late-Arriving Data

The arrival of delayed data introduces problems that affect consistency in incremental model behavior. A window strategy should be used which lets updates access records from a past period to correctly handle delayed data arrival. A merge logic system should be implemented because it optimizes data insertion and updating to avoid duplicates yet preserve information accuracy.

2. Schema Changes

Data pipeline execution becomes disrupted due to changes in source data schema which include new columns or data type modifications. The strategies should define automatic column evolutions which maintain compatibility while transformations proceed without disruption. Additionally, use dbt snapshots to track schema modifications over time, making it easier to handle changes without losing historical data.

3. Data Validation

Ensuring data quality is crucial for analytics and decision-making. Deploy the dbt tests not_null and unique and accepted_values to discover any absent or repeated records in the data. Moreover, perform source-to-incremental comparisons to verify that new data aligns with the expected structure and values. These strategies help maintain data consistency and reliability.

Conclusion

The dbt incremental models change whole data transformation practices through their ability to run big datasets with efficient cost management and enhanced processing speed. The procedure updates fresh records and modified records to optimize system speed decrease cloud spending and enable instant analytics. Through incremental materialization, organizations can enhance their ETL processes to achieve better query speed and platform scalability that works with Snowflake BigQuery and Redshift.

Implementing best practices—such as defining unique keys, using partitions, monitoring incremental runs, and optimizing queries with Snowflake features—ensures reliability and efficiency. Additionally, handling challenges like late-arriving data, schema changes, and data validation with dbt tests and merge logic safeguards data integrity.

The incremental models serve as an effective solution to handle complex transformations in developing data ecosystems. Improving data pipelines through the incorporation of dbt’s advantageous capabilities with Snowflake’s performance enhancements allows businesses to construct resilient and efficient pipelines that scale for contemporary analytic needs.

FAQs

1. How can I ensure data integrity in dbt incremental models?

The dbt tests not_null, unique, and accepted_values should be used to identify both absent and repeated entries in your data. Data consistency and accuracy validation should be performed through source-to-incremental comparisons.

2. What are some best practices for optimizing dbt incremental models?

Define a unique_key to prevent duplicates, use date-based partitions for large datasets, regularly monitor incremental runs, and leverage Snowflake’s clustering keys and result caching for enhanced performance.

3. How do dbt incremental models handle schema changes?

The management of schema changes is possible through column evolution strategies and dbt snapshots to keep track of modifications across different periods. Smooth transformations with new pipelines become possible through these strategies which prevent disruptions from existing workflows.

4. What is the key advantage of using dbt incremental models?

dbt incremental models improve efficiency by updating only new or modified records instead of reprocessing entire datasets. The system performs faster and more cost-efficiently because it updates minimal data and this leads to better performance levels.