Airflow is a powerful task automation tool that helps organizations schedule and execute tasks at the right time, freeing employees from repetitive work. By connecting to Airflow from other tools, you can enhance your workflow using the Airflow REST API. This API enables seamless interaction with Airflow, allowing you to automate various tasks based on their frequency and requirements.

Automating tasks can significantly reduce the burden on employees, enabling them to focus on more strategic activities. In this article, we’ll introduce Airflow and its key features, then give a detailed overview of the Airflow REST API and how it works.

Prerequisites

This is what you need for this article:

What is Airflow?

- Airflow is a platform that enables its users to automate scripts for performing tasks. It comes with a scheduler that executes tasks on an array of workers while following a set of defined dependencies. Airflow also comes with rich command-line utilities that make it easy for its users to work with directed acyclic graphs (DAGs). The DAGs simplify the process of ordering and managing tasks for companies.

- Airflow also has a rich user interface that makes it easy to monitor progress, visualize pipelines running in production, and troubleshoot issues when necessary.

Hevo Data, a no-code data pipeline, helps you load data from any data source, such as databases, SaaS applications, cloud storage, REST APIs, and streaming services, and simplifies the ETL process. It supports 150+ data sources, loads the data into the desired data warehouse, enriches it, and transforms it into an analysis-ready form without writing a single line of code.

Let’s see some unbeatable features of Hevo Data:

- Fully Managed: Hevo Data is a fully managed service and is straightforward to set up.

- Schema Management: Hevo Data automatically maps the source schema, so you can run analysis without worrying about schema changes.

- Real-Time: Hevo Data supports both batch and real-time data transfer, so your data is always analysis-ready.

- Live Support: With 24/5 support, Hevo provides customer-centric solutions to the business use case.

Key Features of Airflow

- Easy to Use: If you are already familiar with standard Python scripts, you know how to use Apache Airflow. It’s as simple as that.

- Open Source: Apache Airflow is open-source, which means it’s available for free and has an active community of contributors.

- Dynamic: Airflow pipelines are defined in Python, so you can write code that generates and instantiates pipelines dynamically.

- Extensible: You can easily define your own operators and extend libraries to fit the level of abstraction that works best for your environment.

- Elegant: Airflow pipelines are simple and to the point. Scripts are parameterized with the Jinja templating engine.

- Scalable: Airflow has a modular architecture and uses a message queue to orchestrate an arbitrary number of workers, so you can add workers as your workload grows.

- Robust Integrations: Airflow can readily integrate with your commonly used services like Google Cloud Platform, Amazon Web Services, Microsoft Azure, and many other third-party services.

Uses of Airflow

Some of the uses of Airflow include:

- Scheduling and running data pipelines and jobs.

- Ordering jobs correctly based on dependencies.

- Managing the allocation of scarce resources.

- Tracking the state of jobs and recovering from failures.
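To make "ordering jobs correctly based on dependencies" concrete, here is a minimal sketch using Python's standard-library `graphlib` module. It is not how Airflow's scheduler is implemented, and the task names are hypothetical. It only illustrates the idea of deriving a valid run order from a dependency graph.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_order(dependencies):
    """Return task names in an order that respects their dependencies.

    `dependencies` maps each task to the set of tasks that must finish first.
    """
    return list(TopologicalSorter(dependencies).static_order())


# Hypothetical pipeline: every task depends on the one before it.
pipeline = {
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}

print(run_order(pipeline))
```

If the graph contained a cycle, `static_order()` would raise `graphlib.CycleError`, which is why workflows are modelled as acyclic graphs.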

What are REST APIs?

- An Application Programming Interface (API) establishes a connection between computers or between computer programs (applications) by exposing a documented set of operations and data that other software can call.

- It’s a form of software interface that acts as a middleman between different pieces of software to help them communicate more efficiently. Different types of APIs (such as Program, Local, Web, or REST API) aid developers in constructing powerful digital solutions due to varying application designs.

- REST APIs are a lightweight and flexible way to integrate computer applications. They communicate over standard HTTP methods and typically exchange data in a common format such as JSON, so clients and servers don’t need a custom protocol to understand each other.

- REST APIs are also scalable, so you won’t have to worry about increasing complexity as your service grows. You may quickly make changes to your data and track them across Clients and Servers. They support caching, which helps to ensure excellent performance.

What is Airflow REST API?

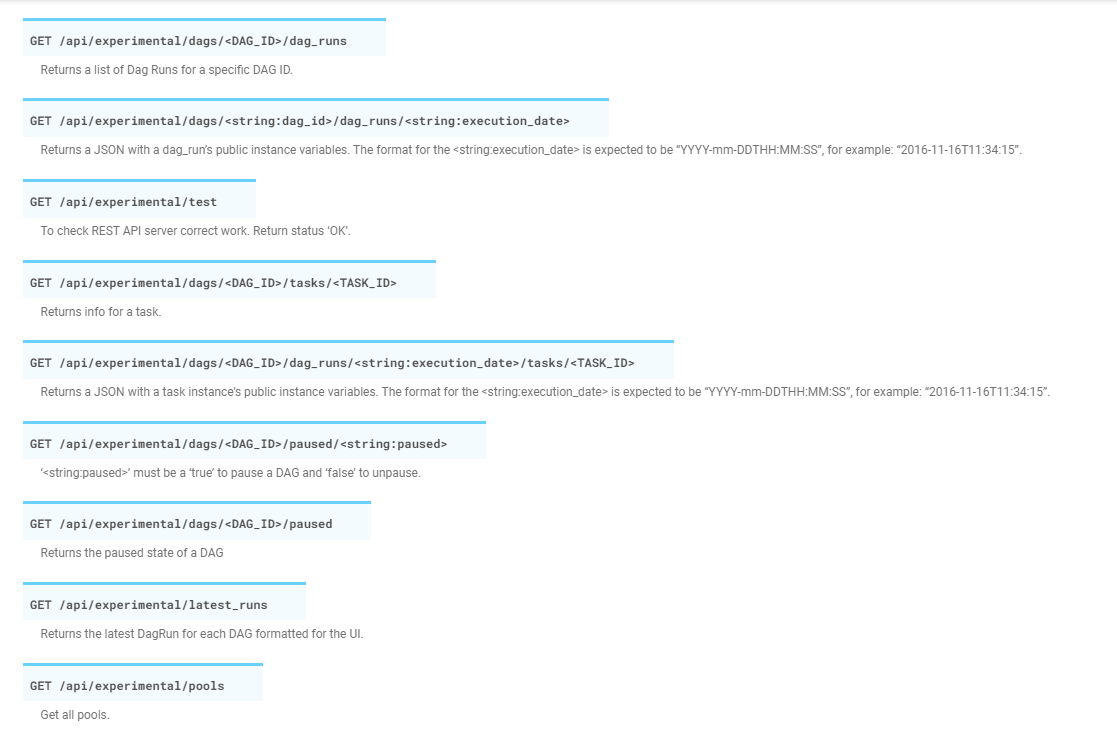

- Apache Airflow has an API interface that can help you perform tasks like getting information about tasks and DAGs, getting Airflow configuration, updating DAGs, listing users, and adding and deleting connections.

- The Airflow REST API facilitates management by providing a number of REST API endpoints across its objects. Most of these endpoints accept input in a JSON format and return the output in a JSON format.

- You interact with the API by sending an HTTP request to the endpoint that corresponds to the task you want to accomplish.

How to Work with Airflow REST API?

To start using the Airflow REST API, you need to know about a configuration setting named auth_backend, found in the [api] section of the Airflow configuration. This setting defines how API users are authenticated. By default, its value is “airflow.api.auth.backend.deny_all”, meaning that all requests are denied. Note that Airflow versions below 1.10.11 permitted all API requests without authentication by default, so installations on those versions may have security issues.

There are different backends that you can use to authenticate with the REST API:

- Kerberos authentication- When using Kerberos, your API backend should be set to “airflow.api.auth.backend.kerberos_auth”. Additionally, there are other Kerberos-related parameters that you should define too.

- Basic username/password authentication- This works with users created via LDAP or within the Airflow DB. The value of auth_backend parameter should be set to “airflow.api.auth.backend.basic_auth”. Both the username and the password must also be base64 encoded and sent via the HTTP header.

- Your API authentication- You can also create your own backend. Airflow allows you to customize it the way you want.
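For the basic username/password backend, the base64 encoding mentioned above can be sketched in a few lines of Python. This is a minimal illustration and not Airflow code: `basic_auth_header` is a hypothetical helper name, and the credentials are placeholders.

```python
import base64


def basic_auth_header(username, password):
    """Build the value of the HTTP Authorization header for basic auth."""
    credentials = f"{username}:{password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    return f"Basic {token}"


# Placeholder credentials; replace them with a real Airflow user.
headers = {"Authorization": basic_auth_header("airflow", "airflow")}
print(headers)
```

Base64 is an encoding, not encryption, so anyone who can read the header can recover the password. Basic authentication should therefore be used over HTTPS outside of local testing.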

Sending the First Request from the Airflow REST API

Before sending the request, ensure that the auth_backend setting has been set to “airflow.api.auth.backend.basic_auth”. Note that with the official docker-compose file of Airflow, a user named airflow with the password airflow is created by default. Once Airflow is started, issue the following request with curl:

    curl --verbose 'http://localhost:8080/api/v1/dags' -H 'content-type: application/json' --user "airflow:airflow"

The request should return all the DAGs, and the returned output should be in a JSON format:

    {
      "dags": [
        {
          "dag_id": "bash_operator",
          "description": null,
          "file_token": ".eFw8yjEOgCAMAMC_kEsHE79DClYgFttQEuX3boyXiIMijQBtv1he8CxJGbG0DmKPLs_iOqgTTHdmMlWpQ-rMoUTsy1EtBJEqeOQ7nW6H6ZgI_x.PjpiEYEF1Ph3O5aZCIvOz5qAOMC",
          "fileloc": "/home/airflow/.local/lib/python3.6/site-packages/airflow/example_dags/bash_operator.py",
          "is_paused": true,
          "is_subdag": false,
          "owners": [
            "airflow"
          ],
          "root_dag_id": null,
          "schedule_interval": {
            "__type": "CronExpression",
            "value": "0 0 * * *"
          },
          "tags": [
            {
              "name": "example1"
            },
            {
              "name": "example2"
            }
          ]
        },

Note that you can limit the number of items that are returned by passing the limit query parameter in the request, for example /api/v1/dags?limit=10. The default value is 100. The maximum_page_limit parameter of the Airflow configuration settings caps the limit a client can request, which protects you from requests that may overload the server and cause instability. The right value will depend on the type and number of requests that you make.
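The same listing request, with an explicit page size, can be prepared from Python using only the standard library. This is a sketch under a few assumptions: the webserver runs at `localhost:8080`, basic auth is enabled, and the helper names (`build_list_dags_request`, `dag_ids`, `fetch`) are made up for illustration.

```python
import base64
import json
import urllib.parse
import urllib.request

BASE_URL = "http://localhost:8080/api/v1"  # assumed local Airflow webserver


def build_list_dags_request(limit=100, offset=0, user="airflow", password="airflow"):
    """Prepare (but do not send) a GET request for one page of DAGs."""
    query = urllib.parse.urlencode({"limit": limit, "offset": offset})
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"{BASE_URL}/dags?{query}",
        headers={"Authorization": f"Basic {token}"},
        method="GET",
    )


def dag_ids(response_body):
    """Extract the DAG ids from a JSON response body."""
    return [dag["dag_id"] for dag in json.loads(response_body)["dags"]]


def fetch(request):
    """Send a prepared request; needs a running Airflow instance."""
    with urllib.request.urlopen(request) as response:
        return response.read()


request = build_list_dags_request(limit=10, offset=0)
print(request.get_method(), request.full_url)
```

Against a running instance, `dag_ids(fetch(request))` would return the ids on the first page. Increasing `offset` by `limit` on each call walks through the remaining pages.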

Triggering a DAG from the Airflow REST API

The API comes with a resource named dagRuns to help you interact with the runs of your DAGs.

To start a new DAG run, issue the following request:

    curl -X POST -d '{"execution_date": "2021-10-09T20:00:00Z", "conf": {}}' 'http://localhost:8080/api/v1/dags/bash_operator/dagRuns' -H 'content-type: application/json' --user "airflow:airflow"

Note that you can’t trigger a DAG more than once on the same execution date. To rerun it for that date, first delete the existing DAG run and then trigger the DAG again. A DAG run can be deleted using the following request:

    curl -X DELETE 'http://localhost:8080/api/v1/dags/bash_operator/dagRuns/manual__2021-10-09T22:00:00+00:00' -H 'content-type: application/json' --user "airflow:airflow"

The last segment of the URL is the ID of the DAG run to delete. If the request succeeds, the response has no body and you will only get status code 204. If you need to pause the DAG from the Airflow REST API, use the following request:

    curl -X PATCH -d '{"is_paused": true}' 'http://localhost:8080/api/v1/dags/bash_operator?update_mask=is_paused' -H 'content-type: application/json' --user "airflow:airflow"

Note that the new Airflow API doesn’t have dedicated pause and unpause endpoints like the experimental API did. You have to “patch” the DAG’s is_paused field to pause or unpause it.
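The trigger and delete requests from this section can also be prepared in Python. The sketch below mirrors the curl commands and makes some assumptions: a local webserver, basic auth with placeholder credentials, and hypothetical helper names. The `execution_date` field is taken from the request shown in this article, so check the API reference for your Airflow version before relying on it.

```python
import base64
import json
import urllib.parse
import urllib.request

BASE_URL = "http://localhost:8080/api/v1"  # assumed local Airflow webserver


def auth_headers(user="airflow", password="airflow"):
    """Headers shared by all requests: basic auth plus a JSON content type."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}", "Content-Type": "application/json"}


def quote_segment(value):
    """Percent-encode one URL path segment (run ids contain ':' and '+')."""
    return urllib.parse.quote(value, safe="")


def build_trigger_request(dag_id, execution_date, conf=None):
    """Prepare a POST that creates a new DAG run."""
    body = json.dumps({"execution_date": execution_date, "conf": conf or {}})
    url = f"{BASE_URL}/dags/{quote_segment(dag_id)}/dagRuns"
    return urllib.request.Request(
        url, data=body.encode("utf-8"), headers=auth_headers(), method="POST"
    )


def build_delete_run_request(dag_id, dag_run_id):
    """Prepare a DELETE for one DAG run."""
    url = f"{BASE_URL}/dags/{quote_segment(dag_id)}/dagRuns/{quote_segment(dag_run_id)}"
    return urllib.request.Request(url, headers=auth_headers(), method="DELETE")


def send(request):
    """Send a prepared request and return the HTTP status code.

    urllib raises urllib.error.HTTPError for 4xx and 5xx responses.
    """
    with urllib.request.urlopen(request) as response:
        return response.status


trigger = build_trigger_request("bash_operator", "2021-10-09T20:00:00Z")
print(trigger.get_method(), trigger.full_url)
```

Preparing the request separately from sending it keeps the URL and body easy to inspect or log before anything reaches the server.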

How to Delete a DAG from the API?

With the API version used in this article, you can’t. The API’s purpose is to represent what a typical user can do, and deleting a DAG is a critical operation that should not be performed simply by submitting a request. (Newer Airflow releases do add a DELETE endpoint for DAGs, so check the API reference for your version.) In either case, you can pause your DAG to prevent it from running in the future. You can do so by making the following request:

    curl -X PATCH -d '{"is_paused": true}' 'http://localhost:8080/api/v1/dags/example_bash_operator?update_mask=is_paused' -H 'content-type: application/json' --user "airflow:airflow"

Unlike the experimental API, the new Airflow REST API does not have a dedicated endpoint for pausing or unpausing a DAG. Instead, you “patch” the DAG’s is_paused field, as in the request above.
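As a sketch, the pause and unpause operation maps to a single helper in Python. The function name, base URL, and credentials are assumptions for illustration. The `update_mask=is_paused` query parameter and the JSON body match the curl request above.

```python
import base64
import json
import urllib.parse
import urllib.request

BASE_URL = "http://localhost:8080/api/v1"  # assumed local Airflow webserver


def build_pause_request(dag_id, paused=True, user="airflow", password="airflow"):
    """Prepare a PATCH that sets only the is_paused field of a DAG."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    query = urllib.parse.urlencode({"update_mask": "is_paused"})
    url = f"{BASE_URL}/dags/{urllib.parse.quote(dag_id, safe='')}?{query}"
    return urllib.request.Request(
        url,
        data=json.dumps({"is_paused": paused}).encode("utf-8"),
        headers={"Authorization": f"Basic {token}", "Content-Type": "application/json"},
        method="PATCH",
    )


pause = build_pause_request("example_bash_operator", paused=True)
unpause = build_pause_request("example_bash_operator", paused=False)
print(pause.get_method(), pause.full_url, pause.data)
```

Passing either request to `urllib.request.urlopen` would send it to a running Airflow instance.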

How to Monitor Your Airflow Instance?

Keeping an eye on your Airflow instance is critical. Before triggering a DAG, you might want to double-check that your Airflow scheduler is in good working order. You can do so by submitting the following request:

    curl --verbose 'http://localhost:8080/api/v1/health' -H 'content-type: application/json' --user "airflow:airflow"

The response reports the status of the metadatabase and the scheduler, so you can confirm that both are up and healthy. Keep in mind that this request won’t tell you if they’re under a lot of stress or if they’re using too much CPU or memory. You can use this request as a preliminary check, but you should always rely on a real monitoring system.
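A script that gates DAG triggering on this check could interpret the health response roughly as follows. The sample body below is illustrative rather than captured from a real instance, and `unhealthy_components` is a hypothetical helper name. It assumes each component reports a `status` of "healthy" when it is up.

```python
import json

# The two components this article's health check is concerned with.
COMPONENTS = ("metadatabase", "scheduler")


def unhealthy_components(health_body):
    """Return the components whose reported status is not "healthy"."""
    payload = json.loads(health_body)
    return [
        name
        for name in COMPONENTS
        if payload.get(name, {}).get("status") != "healthy"
    ]


# Illustrative response body, not real output.
sample = json.dumps(
    {
        "metadatabase": {"status": "healthy"},
        "scheduler": {
            "status": "healthy",
            "latest_scheduler_heartbeat": "2021-10-09T20:00:00+00:00",
        },
    }
)

problems = unhealthy_components(sample)
print("OK to trigger" if not problems else f"Unhealthy: {problems}")
```

A component that is missing from the response is treated as unhealthy, which is the conservative choice for a pre-flight check.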

You can also use the following request to see if your DAGs have any import issues:

    curl --verbose 'http://localhost:8080/api/v1/importErrors' -H 'content-type: application/json' --user "airflow:airflow"

Key Takeaways

- This article introduced Airflow and its key features.

- You learned what the Airflow REST API is and how its authentication backends are configured.

- You saw how to list, trigger, pause, and monitor DAGs through the Airflow REST API.

Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

Frequently Asked Questions

1. Does Airflow have a REST API?

Yes, Airflow has a REST API that allows users to interact with it programmatically. You can use the API to manage DAGs, trigger tasks, and access various features of Airflow from other applications.

2. How to trigger Airflow DAG using REST API?

To trigger an Airflow DAG using the REST API, you need to make a POST request to the endpoint /api/v1/dags/<dag_id>/dagRuns. The dag_id goes in the URL, and optional details such as the run configuration (conf) go in the JSON request body. This will start the specified DAG.

3. Is Airflow an ETL?

Apache Airflow is not an ETL tool itself but a workflow automation platform. It helps schedule and manage ETL processes by orchestrating tasks. You can integrate it with ETL tools like Hevo to streamline data workflows and ensure efficient data processing.