More than ever, organizations face growing challenges in maintaining data quality as the size and complexity of their data increase. They must now rely on efficient tools and services to keep data accurate, consistent, and free of anomalies.

Quality data is essential for deriving accurate insights and making informed decisions. Poor data can lead to inaccurate insights, which, in turn, can result in costly business decisions. To address these concerns, AWS Glue, a fully managed ETL service, has emerged as a powerful tool for managing data pipelines. This blog will explore the importance of data quality in AWS Glue, the key concepts and features involved, and best practices for implementing effective data quality measures.

Overview of AWS Glue

AWS Glue is a serverless data integration service that makes it easy for users to discover, prepare, move, and integrate data from multiple sources for machine learning, application development, and analytical purposes. It also includes additional productivity and data ops tooling for authoring, running jobs, and implementing business workflows. AWS Glue Data Quality allows you to measure and monitor the quality of your data so that you can make sound business decisions.

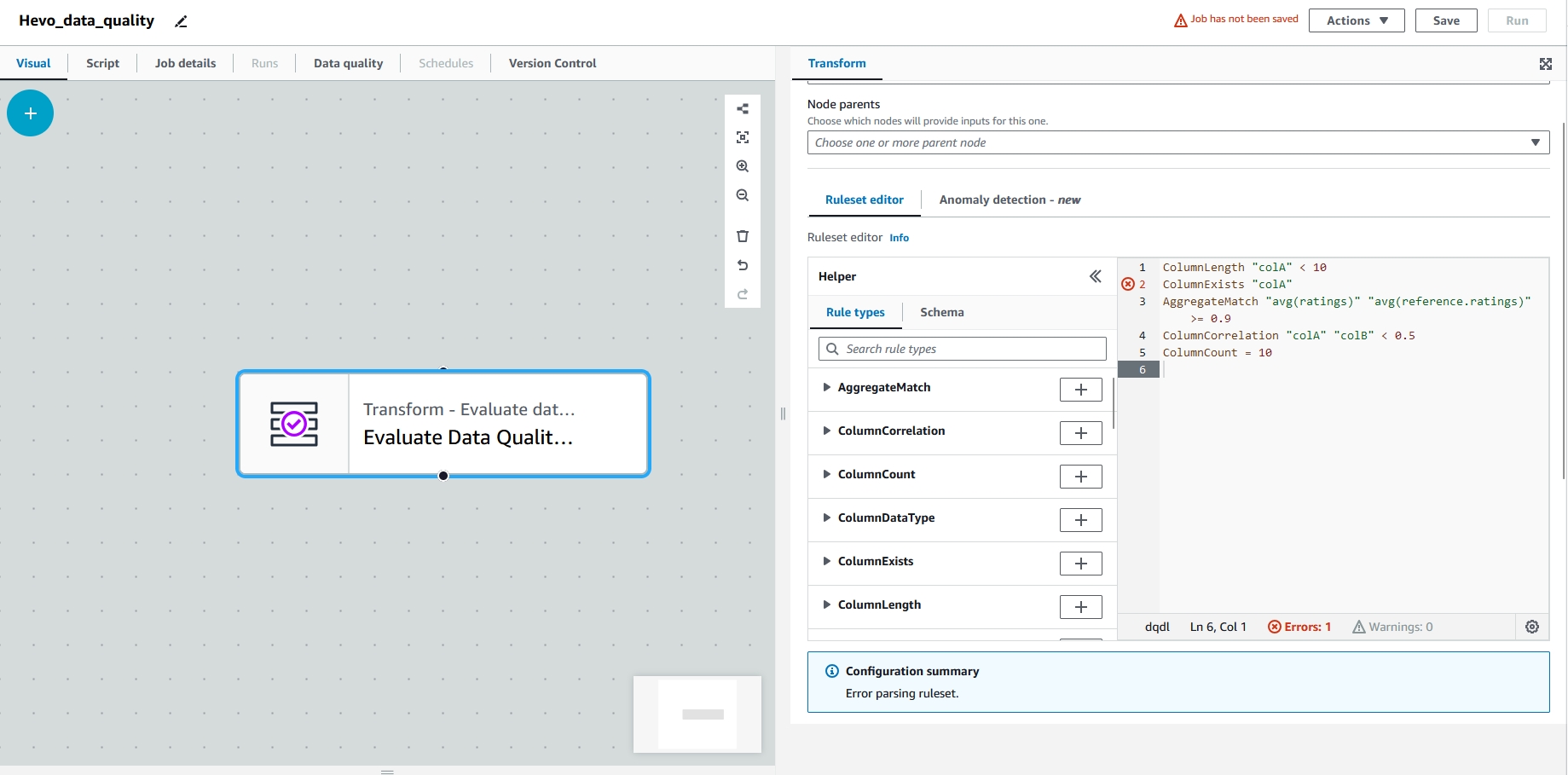

Looking for a comprehensive data quality solution that complements AWS Glue? Look no further! Hevo’s no-code platform helps streamline your data quality processes. Try Hevo and empower your team to:

- Integrate data from 150+ sources (60+ free sources), including AWS services.

- Utilize drag-and-drop and custom Python script features for advanced data cleansing and transformation.

- Ensure data consistency with built-in deduplication and flexible replication options.

Try Hevo and discover why 2000+ customers, including Postman and ThoughtSpot, have chosen Hevo for building a robust, modern data stack.

Get Started with Hevo for Free

Importance of Data Quality in ETL Processes

Data quality must be maintained throughout the ETL process for several reasons.

- Accurate Insights: Poor data quality can lead to inaccurate analytics and misleading results.

- Data Integrity: Ensuring data integrity helps maintain the reliability and trustworthiness of your data.

- Regulatory Compliance: Many industries have strict data quality requirements to comply with regulations.

- Decision Making: High-quality data is essential for making informed business decisions.

Understanding Data Quality in AWS Glue

Architecture of an AWS Glue Environment

- Data Catalog: This is a central metadata repository that stores information about data sources and their structure.

- Data Stores: These are the sources and destinations of your data. They can be databases (like MySQL, PostgreSQL, or Oracle), file systems (like S3), or other data sources.

- Crawler: A service that automatically discovers and catalogues data sources.

- Jobs: Workflows that define the steps involved in extracting, transforming, and loading data.

- Script: A Python or Scala script that contains the logic for transforming data.

- Trigger: An event that can initiate an ETL job, such as a schedule or a message from a messaging service.

- Developer Endpoints: Environments for developing and testing ETL scripts.

- Interactive Sessions: A way to interactively explore and analyse data within AWS Glue.

How AWS Glue Ensures Data Quality

To ensure data quality, AWS Glue uses several mechanisms, some of which are listed below:

- Serverless, cost-effective, petabyte-scale data quality without lock-in

Because AWS Glue is serverless, you can scale without managing infrastructure. It can process datasets of any size, and its pay-as-you-go billing keeps the service flexible and cost-effective. AWS Glue Data Quality is built on Deequ, an open-source framework that can manage petabyte-scale datasets. Because it builds on open source, AWS Glue Data Quality offers flexibility and portability.

- Get started quickly with automatic rule recommendations.

AWS Glue Data Quality automatically analyses datasets and computes their statistics. Based on these statistics, it recommends data quality rules that check for accuracy, freshness, and hidden issues. You can adjust or discard the recommended rules, or add new ones as needed.

- Uncover hidden data quality issues and anomalies using ML

AWS Glue uses ML algorithms to learn patterns in the data statistics gathered over time. It detects anomalies and unusual data patterns and alerts users. It can also automatically create rules that monitor specific patterns, so you can build up your data quality rules progressively.

- Achieve data quality at rest and in pipelines.

AWS Glue Data Quality lets you define rules for your datasets and apply them both to data at rest in your database and to data in motion across the entire data pipeline. Rules can also span multiple datasets.

- Understand and correct data quality issues.

AWS Glue Data Quality has over 25 out-of-the-box rules that can validate data quality and identify specific data that causes issues. The rules can also be used to implement data quality checks, which compare different data sets in disparate data sources in minutes.
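To make the idea of statistics-driven rule recommendations concrete, here is a minimal sketch in plain Python. It is not how AWS Glue Data Quality computes its recommendations; it only illustrates the principle of deriving candidate rules from observed column statistics. The function `recommend_rules` and the sample columns are hypothetical, and the emitted strings follow the general shape of DQDL rules (`IsComplete`, `IsUnique`, `ColumnValues`), which you should check against the DQDL reference before use.

```python
from typing import Any


def recommend_rules(rows: list[dict[str, Any]]) -> list[str]:
    """Suggest DQDL-style rules from simple column statistics (illustrative only)."""
    rules: list[str] = []
    if not rows:
        return rules
    for column in rows[0]:
        values = [row.get(column) for row in rows]
        non_null = [v for v in values if v is not None]
        # No missing values observed: suggest a completeness rule.
        if len(non_null) == len(values):
            rules.append(f'IsComplete "{column}"')
        # Every row has a distinct value: suggest a uniqueness rule.
        if non_null and len(set(non_null)) == len(values):
            rules.append(f'IsUnique "{column}"')
        # Numeric column: suggest bounds from the observed minimum and maximum.
        if non_null and all(
            isinstance(v, (int, float)) and not isinstance(v, bool) for v in non_null
        ):
            rules.append(f'ColumnValues "{column}" >= {min(non_null)}')
            rules.append(f'ColumnValues "{column}" <= {max(non_null)}')
    return rules


# Hypothetical sample data standing in for a profiled dataset.
sample = [
    {"order_id": 1, "amount": 20.0, "coupon": None},
    {"order_id": 2, "amount": 35.5, "coupon": "SAVE10"},
    {"order_id": 3, "amount": 20.0, "coupon": None},
]
recommended = recommend_rules(sample)
print("\n".join(recommended))
```

As with the recommendations Glue produces, suggestions like these are a starting point: a bound derived from three rows is rarely the right business rule, so each one should be reviewed, adjusted, or discarded.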

AWS Glue Data Quality Features

Data Quality Rules and Constraints

With AWS Glue, you can define and enforce data quality rules and constraints as part of your ETL process. These rules can be changed and custom-defined at various stages to ensure the data meets specific standards before loading it into the desired destination.

Some of the rules are stated below.

- Data type validation: This rule ensures data conforms to defined data types. (For example: string, integer, date)

- Value range validation: This checks if data values fall within predefined ranges.

- Uniqueness constraints: This ensures that values in a column are unique within a dataset.

- Referential integrity: This verifies the relationships between different datasets.

With these rules, you can spot errors early in the ETL process.
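In AWS Glue Data Quality, rules are written in the Data Quality Definition Language (DQDL). The sketch below assembles a ruleset string that covers the four kinds of rules listed above. The column names and the `customers` reference alias are hypothetical, the helper `build_ruleset` is ours rather than part of any AWS library, and the rule syntax reflects our reading of DQDL, so verify it against the DQDL reference before relying on it.

```python
def build_ruleset(rules: list[str]) -> str:
    """Join individual DQDL rules into a 'Rules = [ ... ]' ruleset string."""
    body = ",\n".join(f"    {rule}" for rule in rules)
    return f"Rules = [\n{body}\n]"


# One rule per category; all column and dataset names are placeholders.
rules = [
    'ColumnDataType "order_date" = "Date"',                        # data type validation
    'ColumnValues "quantity" between 0 and 1000',                  # value range validation
    'IsUnique "order_id"',                                         # uniqueness constraint
    'ReferentialIntegrity "customer_id" "customers.{id}" = 1.0',   # referential integrity
]
ruleset = build_ruleset(rules)
print(ruleset)
```

Keeping each rule as a separate string makes it easy to add, remove, or review rules individually before the ruleset is attached to a job.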

Data Profiling

Data profiling is vital for organising, understanding, and interpreting an organisation’s data, and well-organised data is valuable in achieving organisational goals.

AWS Glue provides tools to analyse your data’s content, structure, and quality so that you can understand its characteristics, such as distribution, patterns, and outliers.

Key Benefits of Data Profiling

- Detecting Anomalies: Identifying unexpected patterns and values in your data.

- Data distribution: Understanding the frequency of different values within the dataset.

- Data statistics: Calculating summary statistics such as mean, median, and mode.

- Data completeness: Identifying missing or null values.

- Data consistency: Checking for inconsistencies and anomalies.
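The profiling measures listed above can be illustrated with a small, self-contained sketch using only the Python standard library. It does not use any AWS Glue API; `profile_column` and the sample `ages` column are hypothetical, and the two-standard-deviation outlier rule is a simple stand-in for whatever anomaly detection you actually use.

```python
from collections import Counter
from statistics import mean, median, mode, pstdev


def profile_column(values: list) -> dict:
    """Compute a small profile for one column of values (None means missing)."""
    present = [v for v in values if v is not None]
    profile = {
        # Completeness: share of values that are not missing.
        "completeness": len(present) / len(values) if values else 0.0,
        # Distribution: how often each distinct value occurs.
        "distribution": Counter(present),
    }
    numeric = [v for v in present if isinstance(v, (int, float))]
    if numeric and len(numeric) == len(present):
        center, spread = mean(numeric), pstdev(numeric)
        profile.update(
            mean=center,
            median=median(numeric),
            mode=mode(numeric),
            # Anomalies: values more than two standard deviations from the mean.
            outliers=[v for v in numeric if spread and abs(v - center) > 2 * spread],
        )
    return profile


# Hypothetical column with one missing value and one implausible age.
ages = [31, 29, 35, None, 31, 30, 33, 32, 31, 240]
age_profile = profile_column(ages)
print(age_profile)
```

Here the profile reports 90% completeness and flags 240 as an outlier, which is the kind of signal that would prompt a value range rule on the column.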

Data Validation and Cleansing

AWS Glue also provides tools for data validation against defined rules and cleansing data to address quality issues.

Data Validation Checks:

- As part of the ETL job, you can perform data validation checks. These checks involve applying data quality rules and constraints to identify violations. They can be implemented using custom scripts or built-in functions to ensure the data meets the required standards before loading it into a database.

Data Cleansing functions:

- This involves transforming data to correct errors, remove duplicates, and handle missing values. This process can be automated using AWS Glue jobs, which apply the necessary transformations to the data as it moves through the ETL pipeline.

By validating and cleansing data during the ETL process, AWS Glue helps ensure that only high-quality data is loaded into your target systems, improving your data’s overall reliability and accuracy.
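As a rough illustration of validation and cleansing working together, the sketch below rejects records that cannot be repaired, removes duplicates, fills a missing value with a default, and corrects a date format. It is plain Python rather than Glue transform code; the field names, the `UNKNOWN` default, and the `DD/MM/YYYY` input format are assumptions made for the example.

```python
from datetime import datetime


def cleanse(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Return (clean, rejected) records after validation and cleansing."""
    clean, rejected, seen_ids = [], [], set()
    for record in records:
        row = dict(record)  # work on a copy so the input stays untouched
        # Validation: a record without an id cannot be repaired.
        if row.get("id") is None:
            rejected.append(row)
            continue
        # Deduplication: keep only the first record seen for each id.
        if row["id"] in seen_ids:
            continue
        seen_ids.add(row["id"])
        # Missing values: fall back to a default, then normalise the text.
        row["country"] = (row.get("country") or "UNKNOWN").strip().upper()
        # Format correction: convert DD/MM/YYYY dates to ISO 8601.
        try:
            row["signup"] = datetime.strptime(row["signup"], "%d/%m/%Y").date().isoformat()
        except (KeyError, TypeError, ValueError):
            rejected.append(row)
            continue
        clean.append(row)
    return clean, rejected


raw = [
    {"id": 1, "country": " us ", "signup": "05/01/2024"},
    {"id": 1, "country": "US", "signup": "05/01/2024"},    # duplicate id
    {"id": 2, "country": None, "signup": "17/02/2024"},    # missing country
    {"id": None, "country": "DE", "signup": "01/03/2024"}, # missing id
    {"id": 3, "country": "fr", "signup": "2024-03-09"},    # unexpected date format
]
clean_rows, rejected_rows = cleanse(raw)
print(clean_rows)
print(rejected_rows)
```

Returning the rejected records instead of silently dropping them keeps the failures available for reporting and later correction.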

Implementing Data Quality with AWS Glue

Setting Up Data Quality Rules

AWS Glue allows users to define and set up data quality rules. To implement these rules, you can:

- Use built-in data quality functions: AWS Glue provides built-in functions for data validation and cleansing.

- Create custom data quality checks: With AWS Glue, you can write custom Python or Scala code to implement specific data quality rules.

- Leverage data quality frameworks: Users can integrate with third-party data quality frameworks for more advanced features.

By setting up data quality rules, you can automate the process of ensuring your data meets the necessary standards before it’s loaded into a desired database.
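For the custom-check option, one possible shape is a small set of named predicates, each with the share of rows that must pass. The sketch below is plain Python and independent of any Glue API; the `Check` class, `run_checks` function, sample rows, and thresholds are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Check:
    name: str
    predicate: Callable[[dict], bool]  # returns True when a row passes
    min_pass_ratio: float = 1.0        # share of rows that must pass


def run_checks(rows: list[dict], checks: list[Check]) -> dict[str, bool]:
    """Evaluate each check against all rows and report pass or fail per check."""
    results = {}
    for check in checks:
        passed = sum(1 for row in rows if check.predicate(row))
        ratio = passed / len(rows) if rows else 1.0
        results[check.name] = ratio >= check.min_pass_ratio
    return results


# A deliberately simple email pattern, used only for illustration.
EMAIL = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

checks = [
    # At least 75% of rows must carry a plausible email address.
    Check("email_format", lambda r: bool(EMAIL.match(r.get("email") or "")), 0.75),
    # Every row must have a positive numeric amount.
    Check("positive_amount",
          lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] > 0),
]
rows = [
    {"email": "a@example.com", "amount": 10},
    {"email": "b@example.com", "amount": 5},
    {"email": "not-an-email", "amount": 7},
    {"email": "c@example.com", "amount": -1},
]
results = run_checks(rows, checks)
print(results)
```

Separating the threshold from the predicate lets the same check be strict in one pipeline and tolerant in another without rewriting the logic.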

Creating Data Quality Jobs

AWS Glue allows users to create data quality jobs that automate the process of validating, cleansing, and transforming data. These jobs can be scheduled to run at regular intervals or triggered by specific events, ensuring continuous data quality monitoring.

To create a data quality job:

- Job Creation: Use the AWS Glue console or AWS Glue Studio to create a new ETL job.

- Define Data Sources: Specify the data sources you want to process.

- Apply Transformations: Use AWS Glue’s transformation tools to apply data quality rules, such as filtering out invalid records, correcting formats, and removing duplicates.

- Schedule and Execute: Schedule the job to run at regular intervals or set up triggers to execute the job based on specific conditions.

Data quality jobs in AWS Glue offer a scalable and automated way to maintain high data quality across ETL pipelines.
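The scheduling step can also be scripted. The sketch below only builds the arguments for a scheduled trigger and does not call AWS; the job name and schedule are hypothetical, and the parameter names follow our understanding of the boto3 Glue `create_trigger` call, so confirm them against the boto3 documentation before use.

```python
def scheduled_trigger_args(job_name: str, cron: str) -> dict:
    """Build keyword arguments for a scheduled trigger that starts one job."""
    return {
        "Name": f"{job_name}-schedule",
        "Type": "SCHEDULED",
        "Schedule": f"cron({cron})",        # AWS-style six-field cron expression
        "Actions": [{"JobName": job_name}],
        "StartOnCreation": True,
    }


# Hypothetical job, scheduled daily at 02:00 UTC.
trigger_args = scheduled_trigger_args("orders-data-quality", "0 2 * * ? *")
print(trigger_args)

# With boto3 installed and AWS credentials configured, the dictionary could be
# passed to the Glue API (not executed here):
#   import boto3
#   boto3.client("glue").create_trigger(**trigger_args)
```

Building the arguments separately from the API call makes the schedule easy to review and test without touching an AWS account.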

Monitoring and Reporting Data Quality

Monitoring and reporting are critical aspects of maintaining data quality. AWS Glue provides tools to monitor the performance of your ETL jobs and report on the quality of the data they process.

Monitoring Data Quality:

- AWS Glue integrates with Amazon CloudWatch, allowing you to monitor the performance of your ETL jobs in real time. You can set up alerts for specific metrics, such as job failures or performance issues, to ensure that any problems are addressed promptly.

Reporting Data Quality:

- AWS Glue allows you to generate reports on the quality of the data processed by your ETL jobs. These reports can include metrics such as the number of records processed, the number of records that failed validation, and the types of errors encountered.

By monitoring and reporting on data quality, you can ensure that your ETL processes function as expected and that your data remains accurate and reliable.
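The report metrics mentioned above (records processed, records that failed validation, and error types) are straightforward to compute once each record's validation outcome is known. The following is a minimal plain-Python sketch, not a Glue reporting feature; `quality_report`, the outcome format, and the error labels are hypothetical.

```python
from collections import Counter
from typing import Optional


def quality_report(outcomes: list[tuple[str, Optional[str]]]) -> dict:
    """Summarise validation outcomes given as (record_id, error_or_None) pairs."""
    errors = Counter(error for _, error in outcomes if error is not None)
    failed = sum(errors.values())
    processed = len(outcomes)
    return {
        "records_processed": processed,
        "records_failed": failed,
        "failure_rate": failed / processed if processed else 0.0,
        "errors_by_type": dict(errors),
    }


# Hypothetical outcomes from one job run.
outcomes = [
    ("r1", None),
    ("r2", "missing_value"),
    ("r3", None),
    ("r4", "invalid_date"),
    ("r5", "missing_value"),
]
report = quality_report(outcomes)
print(report)
```

A summary like this can be logged at the end of each run, and the failure rate is a natural value to compare against an alert threshold.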

Best Practices for Ensuring Data Quality

Ensuring data quality is an ongoing process that requires careful planning and execution. Here are some best practices for maintaining high data quality in AWS Glue:

- Define Clear Data Quality Metrics: Establish clear metrics for data quality, such as accuracy, completeness, and consistency, and ensure that these metrics are aligned with your business goals.

- Automate Data Quality Checks: Use AWS Glue’s automation capabilities to implement data quality checks as part of your ETL jobs. This helps to ensure that data quality is maintained consistently across all data pipelines.

- Regularly Monitor Data Quality: Continuously monitor the quality of your data using AWS Glue’s monitoring and reporting tools. Set up alerts to notify you of any issues so that they can be addressed promptly.

- Implement Data Governance: Establish a framework that includes policies and procedures for maintaining data quality. This framework should cover data ownership, stewardship, and quality standards.

- Document Data Quality Rules: Document the data quality rules and standards you implemented in AWS Glue. This documentation should be easily accessible to all stakeholders and regularly updated.

By following these best practices, you can maintain high data quality in your AWS Glue ETL processes, ensuring your data is reliable, accurate, and ready for analysis.

Drawbacks of Using AWS Glue Data Quality

AWS Glue Data Quality offers robust features to ensure data quality within ETL processes. However, certain limitations and complexities may make it challenging for some users.

Service Limitations:

- Ruleset Size and Quantity Limits: The size and quantity of rulesets you can create are restricted by AWS Glue Data Quality. If you exceed these limits, you may be required to split your rulesets into smaller components.

- Statistics Storage Limit: While there’s no cost associated with storing statistics, there’s a limit of 100,000 statistics per account, and these statistics are retained for a maximum of two years.

Additional Considerations:

- Complexity: Implementing data quality rules and workflows in AWS Glue can be complex for users unfamiliar with ETL processes and scripting.

- Limited Customization: While AWS Glue provides built-in data quality functions, it may not offer the same level of customisation as dedicated data quality tools.

- Performance Overhead: Implementing data quality checks can introduce additional processing overhead, potentially impacting the performance of your ETL pipelines.

- Steep Learning Curve: Effectively using AWS Glue and its data quality features can require a significant investment of time and effort.

How Hevo Can Help in Monitoring Data Quality

Hevo is a cloud-based zero-maintenance data pipeline platform that simplifies data integration, transformation, and monitoring. It offers several advantages over AWS Glue Data Quality, making it a compelling alternative for many users:

- Simplified User Interface: Hevo provides a user-friendly interface that makes setting up and managing data pipelines easier, even for users without extensive technical expertise.

- Pre-built Integrations: Hevo offers pre-built integrations with a wide range of data sources and destinations, simplifying data ingestion.

- Automated Data Quality Checks: Hevo includes built-in data quality checks that can be easily configured and applied to your data pipelines.

- Real-time Monitoring: Hevo provides real-time monitoring of data quality metrics, allowing you to identify and address issues promptly.

- Comprehensive Reporting: Hevo generates detailed reports on data quality, providing valuable insights into the health of your data pipelines.

- Scalability: Hevo is designed to handle large volumes of data and can scale to meet your growing needs.

- No Service Limits: Hevo does not have the same service limits as AWS Glue Data Quality, allowing you to create and manage complex data quality rules without restrictions.

By choosing Hevo, you can significantly benefit from a more streamlined and efficient data quality management solution. This will reduce the complexity and overhead of using AWS Glue Data Quality.

Conclusion

As data volumes continue to grow and data environments become more complex, the importance of data quality will only increase. While AWS Glue Data Quality offers robust features, Hevo provides a more user-friendly, scalable, and efficient solution for data quality management. By choosing Hevo, you can simplify your data pipelines, improve data quality, and gain valuable insights into your data’s health.

Try a 14-day free trial and experience the feature-rich Hevo suite firsthand. Also, check out our unbeatable pricing to choose the best plan for your organization.

Frequently Asked Questions

1. What is AWS Glue?

AWS Glue is a fully managed ETL service that automates discovering, preparing, and integrating data from multiple sources for analytics, machine learning, and application development.

2. Why is data quality important in ETL processes?

Data quality is critical in ETL processes because poor data quality can lead to inaccurate analysis and costly business decisions. Ensuring data quality helps maintain the data’s accuracy, completeness, and reliability.

3. How does AWS Glue ensure data quality?

AWS Glue ensures data quality through features such as data quality rules and constraints, profiling, validation, and cleansing. These features help to maintain high data quality throughout the ETL process.

4. What are some best practices for ensuring data quality in AWS Glue?

Best practices for ensuring data quality in AWS Glue include defining clear metrics, automating data quality checks, regularly monitoring data quality, implementing data governance, and documenting data quality rules.