In today’s fast-paced world, where data volumes are rapidly increasing and frequent transactions are essential, processing information in real-time is crucial. This has led to the growing popularity of event-driven design patterns. However, developing and deploying event-driven architectures can be challenging and often requires extensive custom development. Amazon’s AWS Lambda simplifies this process by providing a fully managed service for building event-driven systems. Positioned between the data and compute layers, Lambda allows developers to upload Node.js code and trigger it using AWS services like S3, Kinesis, and DynamoDB. Additionally, Lambda automatically scales with the size of the event, making it both cost-efficient and reliable.

Easily integrate your Amazon S3 data with your destination using Hevo’s no-code platform. Automate your data pipeline for real-time data flow and seamless analysis.

- Quick Integration: Connect Amazon S3 to the destination with just a few clicks.

- Real-Time Sync: Ensure up-to-date data with continuous real-time updates.

- No-Code Transformations: Apply data transformations without writing any code.

- Reliable Data Transfer: Enjoy fault-tolerant data transfer with zero data loss.

Simplify your S3 to Destination data workflows and focus on deriving insights faster with Hevo.

Get Started with Hevo for FreeTable of Contents

What is Amazon S3?

Amazon S3 (Simple Storage Service) is a low-latency, high-throughput object storage service that allows developers to store massive volumes of data. It was launched by Amazon in 2006.

Key Features of Amazon S3

- Storage Management: Storage management capabilities in Amazon S3 are used to reduce latency, manage expenses, and save multiple copies of your data.

- S3 Replication: This feature replicates items and their metadata to one or more destination buckets in the same or different AWS region for a variety of purposes, including latency reduction, security, and compliance.

- S3 Batch Operations: With a single Amazon S3 API request, it manages billions of objects at scale. On billions of objects, batch operations are also utilised to accomplish the Invoke AWS Lamda function, Restore, and Copy actions.

- Access Control: You can control who has access to your objects and buckets with Amazon S3. S3 buckets and objects are kept private by default. Users can access the S3 resources that they have produced. You can grant permission to some resources for specific use cases by using the access features shown below.

- Bucket Policies: Bucket Policies is an IAM-based policy language for configuring resource-based permissions for S3 buckets and objects.

- Amazon S3 Access Points: It’s used to build up network endpoints with dedicated access policies so that shared datasets in Amazon S3 can be accessed at scale.

- S3 Access Analyzer: This tool is used to examine and monitor S3 bucket access controls in order to verify that your S3 resources are accessible.

What is AWS Lambda?

AWS Lambda is an event-driven serverless compute solution that allows you to run code for almost any application or backend service without having to provision or manage servers. More than 200 AWS services and SaaS apps can contact Lambda, and you only pay for what you use.

Key Features of AWS Lambda

Here are a few key features:

- Concurrency and Scaling Controls: Concurrency and scaling controls, such as concurrency limits and provisioned concurrency, let you fine-tune your production applications’ scalability and reactivity.

- Functions Defined as Container Images: You may utilise your chosen container image tooling, processes, and dependencies to design, test, and deploy your Lambda functions.

- Code Signing: Code signing for Lambda provides trust and integrity controls, allowing you to ensure that your Lambda services only use unmodified code provided by authorised developers.

- Lambda Extensions: You can use Lambda extensions to improve your Lambda functions. For example, you can utilise extensions to make it easier to integrate Lambda with your preferred monitoring, observability, security, and governance tools.

How to Use AWS Lambda S3?

You can process Amazon Simple Storage Service event notifications using Lambda. Whenever an object is created or deleted, Amazon S3 can send an event to notify a Lambda function. Amazon S3 permissions are granted on the function’s resource-based permissions policy, so you can configure notification settings on a bucket.

In Amazon S3, your function is invoked asynchronously with an event that includes information about the object. The following example illustrates an Amazon S3 event that was triggered when a deployment package was uploaded to Amazon S3.

Event notification example for Amazon S3:

{

"Records": [

{

"eventVersion": "2.1",

"eventSource": "aws:s3",

"awsRegion": "us-east-2",

"eventTime": "2019-09-03T19:37:27.192Z",

"eventName": "ObjectCreated:Put",

"userIdentity": {

"principalId": "AWS:AIDAINPONIXQXHT3IKHL2"

},

"requestParameters": {

"sourceIPAddress": "205.255.255.255"

},

"responseElements": {

"x-amz-request-id": "D82B88E5F771F645",

"x-amz-id-2": "vlR7PnpV2Ce81l0PRw6jlUpck7Jo5ZsQjryTjKlc5aLWGVHPZLj5NeC6qMa0emYBDXOo6QBU0Wo="

},

"s3": {

"s3SchemaVersion": "1.0",

"configurationId": "828aa6fc-f7b5-4305-8584-487c791949c1",

"bucket": {

"name": "DOC-EXAMPLE-BUCKET",

"ownerIdentity": {

"principalId": "A3I5XTEXAMAI3E"

},

"arn": "arn:aws:s3:::lambda-artifacts-deafc19498e3f2df"

},

"object": {

"key": "b21b84d653bb07b05b1e6b33684dc11b",

"size": 1305107,

"eTag": "b21b84d653bb07b05b1e6b33684dc11b",

"sequencer": "0C0F6F405D6ED209E1"

}

}

}

]

}Amazon S3 needs the resource-based policy of your function before it can invoke your function. You can configure an Amazon S3 trigger based on the bucket name and account ID in the Lambda console. The console then modifies the resource-based policy to allow Amazon S3 to invoke the function. The policy is updated using the Lambda API when the notification is configured in Amazon S3. Likewise, you can grant permissions to another account or restrict them to a particular alias using the Lambda API.

- AWS Lambda S3 Steps: Invoking a Lambda Function from an Amazon S3 Trigger

- AWS Lambda S3 Steps: Creating a Bucket and Uploading a Sample Object

- AWS Lambda S3 Steps: Creating the Lambda Function

- AWS Lambda S3 Steps: Re-examine the Function Code

- AWS Lambda S3 Steps: Test in the Console

- AWS Lambda S3 Steps: Use the S3 Trigger to Test

- AWS Lambda S3 Steps: Reorganize your Resources

1. AWS Lambda S3 Steps: Invoking a Lambda Function from an Amazon S3 Trigger

The first step in using AWS Lambda S3 is Invoking Lambda Function. We will discuss how to create a Lambda function that triggers Amazon Simple Storage Service (Amazon S3) using the console. Then, when an Amazon S3 bucket is added, your trigger calls the function you specified.

Please Note: You need an AWS account to use Lambda and other services on AWS. Visit aws.amazon.com to create an account if you don’t already have one.

It’s assumed that you are familiar with the Lambda console and basic Lambda operations.

2. AWS Lambda S3 Steps: Creating a Bucket and Uploading a Sample Object

The next step in using AWS Lambda S3 is to create a bucket. You will need to create an Amazon S3 bucket and upload a test file there. When you view this file from the console, your Lambda function retrieves information about it.

You can use the console to create an Amazon S3 bucket by following the processes below:

- Step 1: Log in to the Amazon S3 console.

- Step 2: Select the Create bucket option.

- Step 3: Do the following in General configuration:

- Name your bucket with a unique name.

- Please select a region for AWS. A Lambda function must be created within the same region.

- Step 4: Select the Create bucket option.

Once the bucket has been created, Amazon S3 opens the Buckets page, which displays a list of all buckets for the current region in your account.

The Amazon S3 console can be used to upload a test object:

- Step 1: Using the buckets page of the Amazon S3 console, select the bucket that you created from the list of bucket names.

- Step 2: Select the Upload option under the Objects tab.

- Step 3: Drag and drop a sample file from your local computer to the Upload page.

- Step 4: Select the Upload option.

3. AWS Lambda S3 Steps: Creating the Lambda Function

The next step in using AWS Lambda S3 is Lambda functions are created using function blueprints. A function demonstrates how Lambda can be used with other AWS services in a blueprint. In addition, blueprints include samples of code and presets for function configuration for a certain runtime. For this practice, either Node.js or Python can be chosen.

Creating Lambdas from blueprints on the console

To use AWS Lambda S3, create lambdas from blueprints:

- Step 1: Go to the Lambda console’s Functions page.

- Step 2: Select the Create option.

- Step 3: Select Use a blueprint under Create function.

- Step 4: Search for S3 under Blueprints.

- Step 5: Select one of these options from the search results:

- Choose s3-get-object for a Node.js function.

- Choose s3-get-object-python if you want a Python function.

- Step 6: Select Configure.

- Step 7: Do the following under Basic information:

- Enter the name of the function my-s3-function.

- You can create an Execution role from an AWS policy template by choosing to Create a new role.

- Enter my-s3-function-role in the Role Name field.

- Step 7: You need to select the S3 bucket you created previously under the S3 trigger.

- Step 8: By configuring an S3 trigger in the Lambda console, you allow Amazon S3 to invoke your function with a resource-based policy.

- Step 9: Select the Create option.

4. AWS Lambda S3 Steps: Re-examine the Function Code

The next step in using AWS Lambda S3 is n response to the event parameter, the Lambda function retrieves the uploaded object’s S3 bucket name and key name from the source. The function retrieves the content type of an object using the Amazon S3 getObject API.

5. AWS Lambda S3 Steps: Test in the Console

The next step in using AWS Lambda S3 is to run the Lambda function manually with sample data from Amazon S3.

The console can be used to test the Lambda function by following the steps below:

- Step 1: You can configure the test events on the Code tab by clicking the arrow next to Test and selecting Configure test events from the dropdown list.

- Step 2: You can configure a test event by following these steps:

- Select Create a new test event.

- Select Amazon S3 Put (s3-put) as the template for your event.

- Give the test event a name. For instance, mys3testevent.

- Change the bucket name and object key from the example-bucket JSON to your bucket name and test file name in the test event JSON. You should get something like this:

{

"Records": [

{

"eventVersion": "2.0",

"eventSource": "aws:s3",

"awsRegion": "us-west-2",

"eventTime": "1970-01-01T00:00:00.000Z",

"eventName": "ObjectCreated:Put",

"userIdentity": {

"principalId": "EXAMPLE"

},

"requestParameters": {

"sourceIPAddress": "127.0.0.1"

},

"responseElements": {

"x-amz-request-id": "EXAMPLE123456789",

"x-amz-id-2": "EXAMPLE123/5678abcdefghijklambdaisawesome/mnopqrstuvwxyzABCDEFGH"

},

"s3": {

"s3SchemaVersion": "1.0",

"configurationId": "testConfigRule",

"bucket": {

"name": "my-s3-bucket",

"ownerIdentity": {

"principalId": "EXAMPLE"

},

"arn": "arn:aws:s3:::example-bucket"

},

"object": {

"key": "HappyFace.jpg",

"size": 1024,

"eTag": "0123456789abcdef0123456789abcdef",

"sequencer": "0A1B2C3D4E5F678901"

}

}

}

]

}- Step 3: Select the Create option.

- Step 4: To call this function from your test event, choose Test under Code source.

6. AWS Lambda S3 Steps: Use the S3 Trigger to Test

The next step in using AWS Lambda S3 is to use s# trigger. Your function is called as soon as you upload a file to the Amazon S3 destination bucket.

For testing Lambda functions using S3 triggers, follow the steps below:

- Step 1: You will need to select the bucket name that you have already created in the buckets section of the Amazon S3 console.

- Step 2: You can upload a few .jpg or .png image files to the bucket from the Upload page.

- Step 3: On the Lambda console, open the Functions page.

- Step 4: Select the name of your function (my-s3-function).

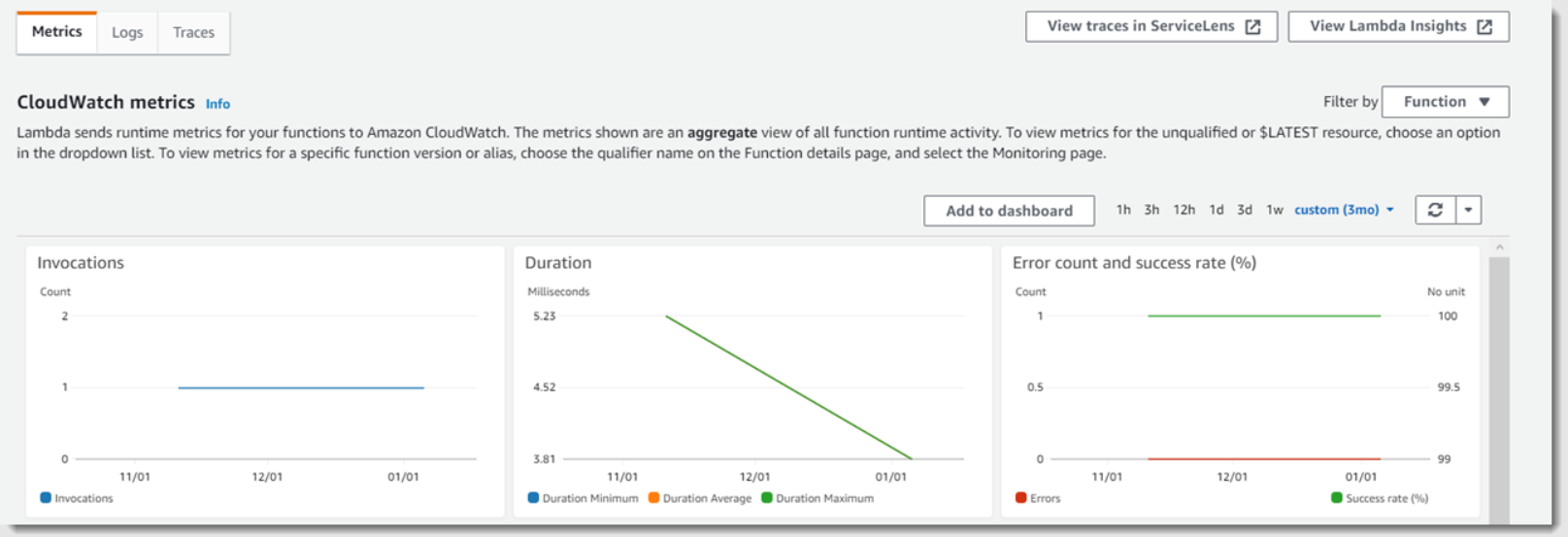

- Step 5: Check the Monitor tab to see if each file you uploaded was processed once. Lambda sends metrics to CloudWatch, which are presented on this page. According to the Invocations graph, the number of files in the Amazon S3 bucket should match the number in the Invocations graph.

- Step 6: The logs can be viewed in the CloudWatch console by selecting View logs in CloudWatch on the menu. Choose a log stream to display the logs for one of the function invocations.

7. AWS Lambda S3 Steps: Reorganize your Resources

The next step in using AWS Lambda S3 is to recognize your resources. If you don’t want to keep the resources that you created for this tutorial, you can delete them now. Your AWS account will avoid unnecessary charges when you delete no longer-used AWS resources.

In order to delete a Lambda function, follow the steps below:

- Step 1: Go to the Lambda console’s Functions page.

- Step 2: Choose the function you created.

- Step 3: Select Delete from the Actions menu.

- Step 4: Click Delete.

Deleting the IAM policy

To use AWS Lambda S3, delete the IAM Policy:

- Step 1: On the AWS IAM) console, open the Policies page.

- Step 2: Make sure you select the Lambda-created policy. The name of the policy starts with AWSLambdaS3ExecutionRole-.

- Step 3: Select Delete from the Policy actions menu.

- Step 4: Click Delete.

Deleting an execution role

To use AWS Lambda S3, delete an execution role:

- Step 1: Navigate to the Roles page of the IAM console.

- Step 2: You need to select the execution role you created.

- Step 3: Select the Delete role.

- Step 4: Select Yes, delete.

Deleting the S3 bucket

To use AWS Lambda S3, delete the S3 bucket:

- Step 1: Launch the Amazon S3 console.

- Step 2: Choose the bucket you created.

- Step 3: Select Delete.

- Step 4: Provide a name for the bucket.

- Step 5: Select Confirm.

Conclusion

- You have successfully learned how to use AWS Lambda S3. An S3 trigger is used in this tutorial to create thumbnail images for each image that is uploaded to an S3 bucket.

- Before attempting this tutorial, you should have some familiarity with the AWS and Lambda domains.

- AWS Command Line Interface (AWS CLI) is used to create resources, and .zip files are created as deployment packages for the function and its dependencies.

Want to take Hevo for a spin? Sign Up for a 14-day free trial and experience the feature-rich Hevo suite first hand.

You can also have a look at our unbeatable pricing that will help you choose the right plan for your business needs!

FAQs

1. What is AWS Lambda S3?

AWS Lambda can be triggered by Amazon S3 events to execute code in response to actions like file uploads or deletions.

2. How do I add S3 to Lambda?

Configure an S3 event notification to invoke your Lambda function by specifying the S3 bucket and event type in the Lambda trigger settings.

3. How to read S3 from Lambda?

Use the AWS SDK in your Lambda function to access S3, e.g., s3Client.getObject() to read files from a bucket.