Optimization plays a central role in data engineering, because large and complex datasets demand careful management and efficient querying. As the volume and velocity of incoming data grow, the ability to process it quickly becomes essential. This is where Databricks query optimization makes the difference: it delivers faster queries, better efficiency, and lower resource usage, all of which benefit any data team.

In an environment like Databricks, where speed and performance are crucial, understanding query optimization helps organisations get the most out of the data they process and improves the overall speed of data processing.

Why is Databricks Query Optimization Important?

When working with Databricks, query optimization is crucial for several reasons:

- Speed: Optimized queries run faster, reducing wait times and delivering quicker insights.

- Cost-efficiency: Fewer resources are required for optimized queries, which can lead to cost savings on cloud services.

- Scalability: As data grows, efficient queries help maintain performance standards without over-provisioning resources.

- User Experience: For end-users, faster query performance translates into better experiences and more timely decision-making.

Factors That Affect Query Performance in Databricks

Query Execution Process

To optimize queries, it is important to understand how they are executed in Databricks. The lifecycle of a query typically unfolds in several stages:

- Parsing: The SQL statement is parsed and checked for correct syntax.

- Planning: The engine builds a logical plan and then chooses an efficient strategy for carrying it out. This includes decisions such as which data to read and in what order to perform operations.

- Execution: The physical plan is run: data is retrieved, transformed, and the result is returned to the user.

Other Key Factors

- Data Size: Larger datasets take longer to process and can strain system performance.

- Data Distribution: The way your data is partitioned determines how efficiently a given query can run.

- Cluster Resources: The size and configuration of your Databricks cluster have a major impact on query speed.

Hevo is a powerful, no-code data integration platform designed to simplify and automate the process of moving data from various sources to your desired data warehouse, including Databricks. With Hevo, you can easily connect, transfer, and transform your data without writing a single line of code, enabling faster and more efficient data migration.

What Hevo Offers:

- Seamless Integration: Hevo supports 150+ data sources, making it easy to integrate data from databases, cloud applications, and flat files into Databricks.

- Real-Time Data Transfer: Hevo ensures that your data is always up-to-date with real-time data syncing and automatic schema detection.

- User-Friendly Interface: With its intuitive, no-code platform, Hevo enables anyone to set up data pipelines quickly and efficiently, regardless of technical expertise.

10 Key Techniques for Databricks Query Optimization

- Leverage Data Caching: Caching improves performance by keeping data in memory instead of repeatedly reading it from disk. This speeds up query processing, especially when the same computation is repeated.

Here are some key points about leveraging data caching:

1. Cache levels:

- Memory-only caching: The fastest option, but also the most volatile, since cached data is lost if it is evicted or the executor holding it fails.

- Disk caching: Slower than memory, but persistent. It is useful when results should be kept on disk rather than recomputed.

- Memory and disk caching: A hybrid of the two that keeps data in memory and spills to disk when memory runs out.

2. Cache persistence:

- Temporary caching: Valid only for the current Spark session.

- Persistent caching: Remains available across Spark sessions until it is explicitly cleared.

3. Caching methods:

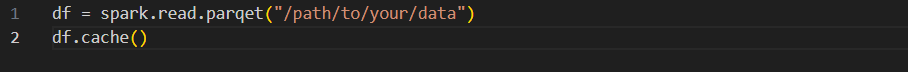

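- cache(): Stores the DataFrame or RDD on the cluster's executors using the default storage level.

df = spark.read.parquet(path)  # path is a placeholder for the location of your Parquet data

df.cache()

- persist(): Allows specifying a storage level (e.g., memory only, disk only, or both).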

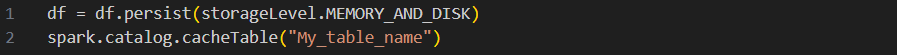

This code persists the DataFrame in memory and on disk, then caches the table with the given name.

from pyspark import StorageLevel

df.persist(StorageLevel.MEMORY_AND_DISK)

spark.catalog.cacheTable("My_Table_name")

- Efficient Joins: Use techniques like broadcast joins to reduce shuffle operations when one side of the join is small. A well-planned join can drastically cut down on processing time.

Below are some key strategies for using efficient joins for query optimization:

1. Choose the right join type:

- Use INNER JOIN to match records from both tables.

- Use LEFT JOIN when you want all records from the left table and matching records from the right.

- Use RIGHT JOIN when you want all records from the right table and matching records from the left.

- Avoid FULL OUTER JOIN when possible, as it’s typically less efficient.

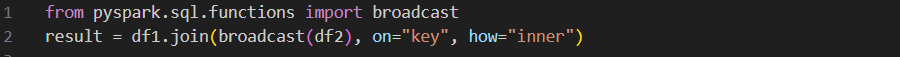

2. Use broadcast joins:

Use a broadcast join when you join a large table with one small enough to fit in memory on every worker. Spark chooses a broadcast join automatically when the smaller side is below the spark.sql.autoBroadcastJoinThreshold setting, but you can also give an explicit hint.

from pyspark.sql.functions import broadcast

df1.join(broadcast(df2), on="key", how="inner")

In this example, df1 is the larger DataFrame and df2 is the smaller one. The key is the column on which you want to join the DataFrames. Wrapping df2 in broadcast() hints to Spark that df2 should be sent to all worker nodes, so the large side can be joined in place without being shuffled.
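To make the idea concrete, here is a toy model of a broadcast hash join in plain Python. It is only a sketch of the concept, not the Spark API: the function `broadcast_hash_join` and the `orders` and `customers` data are hypothetical names invented for illustration.

```python
# Toy model of a broadcast hash join in plain Python (not the Spark API).
# Each inner list in `orders` stands for one partition of the large table.

def broadcast_hash_join(large_partitions, small_rows, key):
    """Inner-join every partition of the large side against a copied lookup table."""
    # Build the lookup once from the small side; in Spark this is the
    # table that would be shipped to every worker.
    lookup = {}
    for row in small_rows:
        lookup.setdefault(row[key], []).append(row)

    joined = []
    for partition in large_partitions:
        # Each partition is joined where it already lives, so rows of the
        # large side never move between partitions.
        for row in partition:
            for match in lookup.get(row[key], []):
                joined.append({**row, **match})
    return joined


orders = [  # hypothetical large table, split into two partitions
    [{"key": 1, "amount": 50}, {"key": 2, "amount": 20}],
    [{"key": 1, "amount": 75}, {"key": 3, "amount": 10}],
]
customers = [  # hypothetical small table
    {"key": 1, "name": "Ada"},
    {"key": 2, "name": "Lin"},
]

result = broadcast_hash_join(orders, customers, "key")
```

Only the small table is copied around. The order with key 3 has no matching customer, so an inner join drops it.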

- Use Predicate Pushdown: Predicate pushdown boosts query performance by applying filter conditions as close to the data source as possible. Data is filtered before it is fully loaded into the processing engine, which reduces the amount of data that has to be processed later in the query and speeds up execution.

For example, if a query specifies WHERE age > 30, predicate pushdown applies this condition during data retrieval instead of after loading all the data. This approach minimizes I/O operations and processing overhead. In Databricks, which utilizes Spark’s distributed computing framework, predicate pushdown significantly enhances data access speed and processing efficiency, resulting in quicker query responses and lower resource usage.
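The effect of the WHERE age > 30 example can be sketched with a toy data source in plain Python. This is an illustration of the idea rather than Spark code: `CountingSource`, `older_than_30`, and the `people` rows are hypothetical.

```python
# Toy model of predicate pushdown in plain Python (not the Spark API).

class CountingSource:
    """A hypothetical data source that counts how many rows it hands over."""

    def __init__(self, rows):
        self._rows = rows
        self.rows_returned = 0

    def scan(self, predicate=None):
        # With a predicate, filtering happens inside the source, so only
        # matching rows are returned to the caller.
        out = [row for row in self._rows if predicate is None or predicate(row)]
        self.rows_returned += len(out)
        return out


people = [
    {"name": "a", "age": 25},
    {"name": "b", "age": 31},
    {"name": "c", "age": 47},
    {"name": "d", "age": 30},
]


def older_than_30(row):
    return row["age"] > 30


# Without pushdown: load everything, then filter in the engine.
no_pushdown = CountingSource(people)
late_filtered = [r for r in no_pushdown.scan() if older_than_30(r)]

# With pushdown: the source applies the age > 30 condition itself.
with_pushdown = CountingSource(people)
early_filtered = with_pushdown.scan(older_than_30)
```

Both approaches produce the same rows, but the source hands over four rows in the first case and only two in the second.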

- Partition Your Data: Organizing your data into partitions can help the query engine only read the necessary data, speeding up processing time.

Let me give you an example: You are working on vast sales data spanning multiple years. You can split this data by year, storing each year’s data in its respective partition.

Example:

Partition 1: Sales data for 2020

Partition 2: Sales data for 2021

Partition 3: Sales data for 2022

Partitioning ensures that when you analyze the sales data for 2021, the query reads only the 2021 partition, making the process more efficient.
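The sales-by-year example can be modeled in a few lines of plain Python. This is a minimal sketch of partition pruning, not the Spark API: the helpers `write_partitioned` and `read_partition` and the `sales` records are hypothetical.

```python
# Toy model of partition pruning in plain Python (not the Spark API).
from collections import defaultdict


def write_partitioned(rows, column):
    """Group rows by the value of `column`, one list per partition."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row[column]].append(row)
    return dict(partitions)


def read_partition(partitions, value):
    """Return the rows for one partition value and how many partitions were opened."""
    rows = partitions.get(value, [])
    opened = 1 if value in partitions else 0
    return rows, opened


sales = [  # hypothetical sales records
    {"year": 2020, "amount": 100},
    {"year": 2021, "amount": 250},
    {"year": 2021, "amount": 75},
    {"year": 2022, "amount": 300},
]

by_year = write_partitioned(sales, "year")
rows_2021, partitions_opened = read_partition(by_year, 2021)
total_2021 = sum(r["amount"] for r in rows_2021)
```

Of the three partitions, only the one for 2021 is opened to compute the 2021 total.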

- Partitioning and Bucketing:

Partitioning involves dividing a large dataset into smaller, more manageable chunks called partitions. Each partition is a subset of the data and can be processed independently. Bucketing, on the other hand, divides the data into a fixed number of buckets, typically by hashing a column. In a large data processing system, bucketing can distribute data evenly across the buckets to optimize join operations, which also improves query performance.
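A toy Python model shows why bucketing helps joins. It assumes buckets are assigned by hashing the join column modulo the bucket count, and it is not the Spark API: `bucket_of`, `bucketize`, `bucketed_join`, and the sample tables are hypothetical.

```python
# Toy model of bucketing in plain Python (not the Spark API).
import zlib


def bucket_of(key, num_buckets):
    # crc32 is stable across runs, unlike the built-in hash() for strings.
    return zlib.crc32(str(key).encode("utf-8")) % num_buckets


def bucketize(rows, column, num_buckets):
    """Place each row in the bucket chosen by hashing `column`."""
    buckets = [[] for _ in range(num_buckets)]
    for row in rows:
        buckets[bucket_of(row[column], num_buckets)].append(row)
    return buckets


def bucketed_join(left_buckets, right_buckets, column):
    """Inner-join two tables that were bucketed the same way."""
    joined = []
    # Matching keys always share a bucket number, so bucket i of one
    # table only needs to be compared with bucket i of the other.
    for left, right in zip(left_buckets, right_buckets):
        index = {}
        for right_row in right:
            index.setdefault(right_row[column], []).append(right_row)
        for left_row in left:
            for right_row in index.get(left_row[column], []):
                joined.append({**left_row, **right_row})
    return joined


NUM_BUCKETS = 4
orders = [{"customer_id": c, "amount": a} for c, a in [(1, 10), (2, 20), (7, 70), (1, 15)]]
customers = [{"customer_id": c, "name": n} for c, n in [(1, "Ada"), (2, "Lin"), (7, "Sam")]]

order_buckets = bucketize(orders, "customer_id", NUM_BUCKETS)
customer_buckets = bucketize(customers, "customer_id", NUM_BUCKETS)
joined = bucketed_join(order_buckets, customer_buckets, "customer_id")
```

Because both tables use the same column and the same number of buckets, the join never has to compare rows from different buckets.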

- Optimising Shuffle Operations: Improper shuffles can lead to performance bottlenecks. Keep shuffles to a minimum and ensure they run efficiently when necessary. Shuffling is a useful mechanism when data needs to be redistributed across partitions and nodes in operations like joins and aggregation. However, shuffles are expensive, and they affect job performance. For efficient data processing, optimizing those operations becomes extremely important.
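One common way to make a necessary shuffle cheaper is to aggregate inside each partition before redistributing the data, so fewer records cross the network. The plain-Python sketch below models that idea under simple assumptions. It is not the Spark API, and `shuffle_raw`, `shuffle_preaggregated`, and the sample `partitions` are hypothetical.

```python
# Toy model of reducing shuffle volume in plain Python (not the Spark API).
from collections import Counter


def shuffle_raw(partitions):
    """Send every (key, value) record across the shuffle, then sum by key."""
    shuffled = [record for part in partitions for record in part]
    totals = Counter()
    for key, value in shuffled:
        totals[key] += value
    return dict(totals), len(shuffled)


def shuffle_preaggregated(partitions):
    """Sum by key inside each partition first, then shuffle the partial sums."""
    shuffled = []
    for part in partitions:
        partial = Counter()
        for key, value in part:
            partial[key] += value
        shuffled.extend(partial.items())
    totals = Counter()
    for key, value in shuffled:
        totals[key] += value
    return dict(totals), len(shuffled)


partitions = [
    [("a", 1), ("a", 2), ("b", 5)],
    [("a", 4), ("b", 1), ("b", 1)],
]
raw_totals, raw_records = shuffle_raw(partitions)
pre_totals, pre_records = shuffle_preaggregated(partitions)
```

Both functions return the same totals, but the pre-aggregated version moves four records through the shuffle instead of six. The saving grows with the number of repeated keys per partition.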

- Use Delta Lake for Optimised Storage: Delta Lake provides a way to manage large datasets more effectively, with features that handle ACID transactions and scalable reads.

Delta Lake makes both storage and querying more efficient through a few key features. On the storage side, it provides ACID transactions, keeps table metadata in a transaction log, and supports file compaction, which merges many small files into fewer larger ones for better storage layout. Partitioning in Delta Lake splits data into manageable chunks to accelerate data retrieval. Delta Lake also uses file-level statistics to skip files that cannot contain relevant data.

On the query side, frequently accessed data can be cached for quicker access. Z-Ordering, a technique that colocates related data in the same set of files, further improves query performance. Table statistics help the system plan queries better based on how the data is distributed. Other features include time travel, which provides easy access to historical data and the ability to roll back to earlier versions, and schema evolution, which allows schema changes without extensive rework.
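Skipping data with file statistics can be illustrated with a small plain-Python sketch. It assumes each file records the minimum and maximum value of a column, and it does not reflect Delta Lake's actual on-disk format: `files_to_read`, the file names, and the statistics are hypothetical.

```python
# Toy model of data skipping with per-file min/max statistics
# (plain Python, not Delta Lake's real metadata format).

def files_to_read(file_stats, column, low, high):
    """Return the files whose [min, max] range for `column` overlaps [low, high]."""
    selected = []
    for name, stats in file_stats.items():
        col_min, col_max = stats[column]
        # A file can be skipped when its value range lies entirely
        # outside the range the query asks for.
        if col_max >= low and col_min <= high:
            selected.append(name)
    return selected


file_stats = {  # hypothetical per-file statistics; ISO dates compare correctly as strings
    "part-0": {"order_date": ("2021-01-01", "2021-03-31")},
    "part-1": {"order_date": ("2021-04-01", "2021-06-30")},
    "part-2": {"order_date": ("2021-07-01", "2021-09-30")},
}

needed = files_to_read(file_stats, "order_date", "2021-05-01", "2021-05-31")
```

A query for May 2021 only has to open one of the three files. Skipping works best when related values are stored close together, so that each file covers a narrow range.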

- Tune Cluster Configuration: Adjusting your cluster configuration by choosing the right instance types and sizes can significantly speed up query execution.

Here’s a concise guide on how to adjust cluster settings to enhance query efficiency:

Choose the Right Cluster Type:

Standard Clusters: Ideal for general-purpose workloads. Suitable for most tasks.

High Concurrency Clusters: Designed for workloads that involve multiple simultaneous queries, such as interactive SQL queries and dashboards.

Configure Spark Settings:

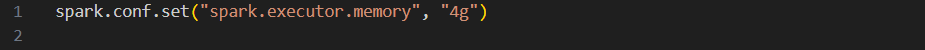

Executor and driver settings like the ones below are applied when the cluster starts. Set them in the cluster's Spark config rather than calling spark.conf.set from a running notebook, where they cannot be modified.

Adjust the amount of memory allocated to each executor.

# Example for setting 4 GB of memory per executor

spark.executor.memory 4g

Increase the number of cores per executor to allow more parallel processing.

# Example for setting 4 cores per executor

spark.executor.cores 4

Allocate sufficient memory to the driver for handling Spark operations and metadata.

# Example for setting 8 GB of memory for the driver

spark.driver.memory 8g

- Avoid Excessive DataFrame Operations: Limit the transformations you perform on DataFrames before actions. Each transformation extends the logical plan, and very long chains can slow down planning and execution. Avoid applying more operations and functions to a DataFrame than the query needs, and minimize the shuffles triggered by groupBy and join.

Apply filters as early as possible in the query to reduce the data flowing through later operations. To avoid repeating the same computation, cache intermediate results with the cache method so that subsequent stages can reuse them. Prefer efficient columnar formats such as Parquet for better read performance. With these practices in place, your Databricks queries will be much more efficient.

- Monitor and Optimize with Databricks Utilities: Use Databricks’ built-in monitoring tools to monitor performance metrics and improve as needed over time.

Best Practices for Databricks Performance Tuning

- Cluster Configuration: As noted above, configure your cluster to match the characteristics of your workloads. This means choosing instance types based on the nature of your data and the queries you expect to run.

- Auto Scaling: Enable auto-scaling so the cluster can respond to demand without over-provisioning and wasting money.

- Monitoring and Logging: Alerts and logs on performance help you catch problems early and limit their impact. Review the query history in Databricks, which records past executions and how long they took.

- SQL Analytics: Use SQL analytics to examine query execution performance. It shows how long queries took over a period such as a day, which helps pinpoint the ones that need attention.

Conclusion

In the world of data engineering, where speed and efficiency are paramount, optimizing queries in Databricks is essential for maximizing performance. By understanding the query execution process and implementing techniques like data caching, efficient joins, and partitioning, you can significantly enhance query speeds, reduce costs, and improve resource management. Regularly tuning your cluster configuration and leveraging monitoring tools will ensure sustained performance improvements over time.

Moreover, with the integration of Hevo, your data migration journey to Databricks becomes effortless and streamlined. Hevo’s no-code platform automates and optimizes the data transfer process, allowing you to focus on extracting insights rather than managing complex migrations. Together, Databricks and Hevo provide a powerful combination that enables you to fully leverage your data with speed, efficiency, and ease.

FAQ on Databricks Query Optimization

Why should I optimize queries in Databricks?

Optimizing queries improves their performance, reduces the resources they use, and lowers their cost. Faster queries in turn deliver quicker insights and a better user experience.

How does caching data help in Databricks?

Caching keeps frequently used data in memory, so it does not have to be read from disk each time, which results in faster queries.

What’s the difference between partitioning and bucketing?

Partitioning splits the data into subsets so that queries can read only the relevant ones. Bucketing goes a step further: it organizes the data within each partition into a fixed number of buckets, which usually speeds up joins and queries on the bucketed column.

Why is monitoring important for query optimization?

By monitoring, you can not only see which queries are the most time-consuming but also find issues and make necessary changes to keep things running smoothly.

How can I avoid too many DataFrame operations?

Keep the number of intermediate transformations before each action to a minimum. This avoids unnecessary work that makes queries sluggish and keeps them efficient and easy to follow.