Roughly 2.5 quintillion bytes of data are generated worldwide every day [1]. As data volumes grow exponentially, businesses everywhere are prioritizing data management. With so much to process, how can organizations extract, transform, and load (ETL) data that is meaningful and usable?

A robust ETL architecture is crucial to modern data management. This blog explains how a well-designed ETL architecture can help your business streamline data operations for better decision-making and business intelligence. Whether you’re new to ETL or optimizing an existing system, this guide will help you understand ETL processes and develop an efficient ETL architecture for your business.


What is an ETL Process?

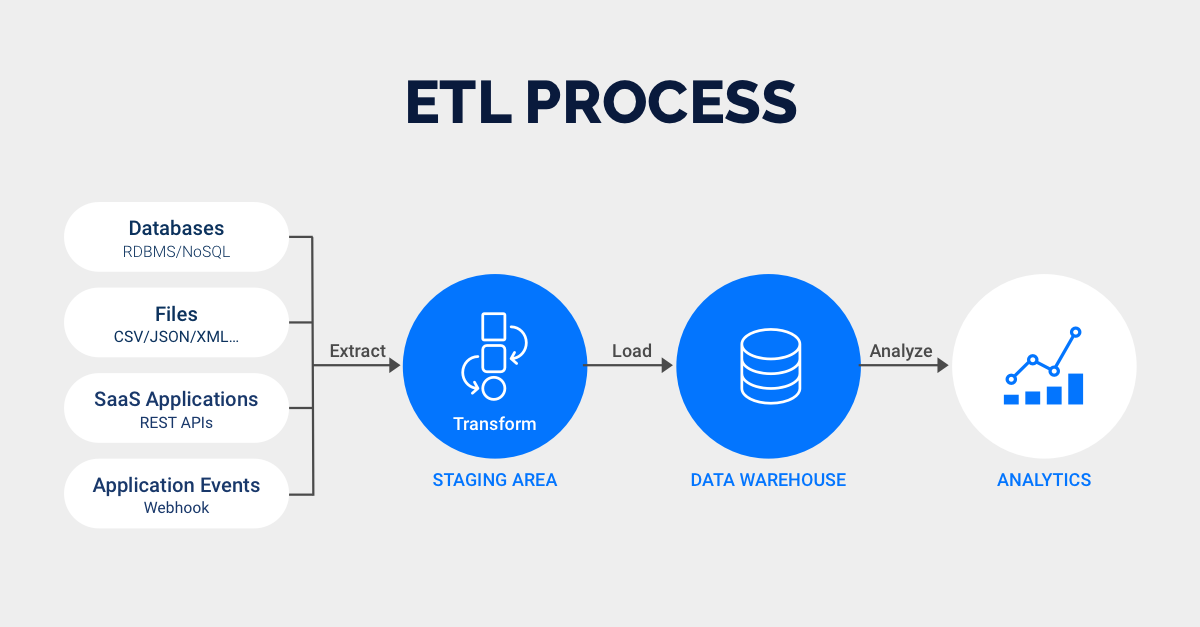

The ETL process is essential to modern data management. It consists of three elements: Extract, Transform, and Load. An ETL pipeline gathers data from diverse sources, processes it, and loads it into a data warehouse or other storage system for analysis.

Three primary phases make up the ETL process:

E for Extract

In the extraction phase, data is retrieved from source systems such as databases, APIs, and files. The objective is to collect all the data that is relevant for analysis.
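To make the extraction step concrete, here is a minimal Python sketch that assumes two hypothetical sources: a CSV export and a JSON payload of the kind an API might return. Both are inlined as strings so the example runs on its own; in practice they would come from files, database queries, or HTTP requests. The function names are illustrative and not taken from any specific ETL tool.

```python
import csv
import io
import json

# Hypothetical raw inputs standing in for a file export and an API response.
CSV_EXPORT = "id,name,amount\n1,Alice,120.50\n2,Bob,80\n"
API_RESPONSE = '{"orders": [{"id": 3, "name": "Carol", "amount": "42.00"}]}'


def extract_csv(text: str) -> list[dict]:
    """Read every CSV row into a dict; csv.DictReader keeps all values as strings."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_api(payload: str) -> list[dict]:
    """Parse a JSON payload and return its list of records."""
    return json.loads(payload)["orders"]


def extract_all() -> list[dict]:
    """Collect raw records from every source, tagging each with where it came from."""
    sources = {
        "csv": extract_csv(CSV_EXPORT),
        "api": extract_api(API_RESPONSE),
    }
    records = []
    for source_name, rows in sources.items():
        for row in rows:
            records.append({**row, "_source": source_name})
    return records


if __name__ == "__main__":
    for record in extract_all():
        print(record)
```

Extraction leaves values as they arrive (every CSV field is a string, while the API’s `id` is an integer); reconciling those types belongs to the transformation step.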

T for Transform

In the transformation phase, the extracted data is cleaned, validated, and formatted according to business and ETL requirements. This includes removing errors, adding relevant information, and applying business rules. Filtering, aggregating, and enriching the data in this step ensure its quality and usefulness.
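The transformation activities listed above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a prescribed design: the input records, the `REGIONS` lookup used for enrichment, and the `MIN_AMOUNT` business rule are all hypothetical. The sketch standardizes fields, rejects records that fail validation, filters by a business rule, enriches each row with a region, and aggregates totals per region.

```python
from collections import defaultdict
from decimal import Decimal, InvalidOperation
from typing import Optional

# Hypothetical raw records, as an extraction step might return them.
RAW = [
    {"id": "1", "name": " alice ", "amount": "120.50", "country": "us"},
    {"id": "2", "name": "Bob", "amount": "not-a-number", "country": "US"},
    {"id": "3", "name": "carol", "amount": "42.00", "country": "de"},
    {"id": "4", "name": "Dan", "amount": "7.25", "country": "US"},
]

# Hypothetical enrichment lookup: country code -> sales region.
REGIONS = {"US": "North America", "DE": "Europe"}

# Hypothetical business rule: orders below this amount are excluded.
MIN_AMOUNT = Decimal("10")


def clean(record: dict) -> Optional[dict]:
    """Validate and standardize one record; return None if it fails validation."""
    try:
        amount = Decimal(record["amount"])
    except InvalidOperation:
        return None  # unparseable amount: reject the record
    country = record["country"].strip().upper()
    return {
        "id": int(record["id"]),
        "name": record["name"].strip().title(),  # " alice " -> "Alice"
        "amount": amount,
        "country": country,
        "region": REGIONS.get(country, "Unknown"),  # enrichment from the lookup
    }


def transform(raw: list[dict]) -> tuple[list[dict], dict[str, Decimal]]:
    """Clean and validate, apply the business-rule filter, then aggregate per region."""
    rows = [r for r in map(clean, raw) if r is not None]
    rows = [r for r in rows if r["amount"] >= MIN_AMOUNT]
    totals: dict[str, Decimal] = defaultdict(Decimal)
    for r in rows:
        totals[r["region"]] += r["amount"]
    return rows, dict(totals)


if __name__ == "__main__":
    rows, totals = transform(RAW)
    print(rows)
    print(totals)
```

With this sample data, record 2 is rejected for its invalid amount and record 4 is removed by the minimum-amount rule, leaving totals of 120.50 for North America and 42.00 for Europe. `Decimal` is used instead of `float` so that currency amounts do not pick up binary rounding errors.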

L for Load

Once transformed, the data is loaded into the target database or data warehouse, where it can be retrieved for reporting and analysis. Loading can run in batches or in real time. Analysts, business intelligence tools, and machine learning algorithms can then use the data to uncover new insights.
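A minimal sketch of batch loading, assuming an in-memory SQLite database stands in for the data warehouse and a hypothetical `orders` table is the target. Rows are written in fixed-size batches with one transaction per batch, and the insert is an upsert (`ON CONFLICT ... DO UPDATE`, available in SQLite 3.24 and later), so rerunning a load updates existing rows instead of duplicating them. Production warehouses usually provide their own bulk-load commands, which tend to be faster than `INSERT` statements at large volumes.

```python
import sqlite3
from itertools import islice
from typing import Iterable, Iterator


def batched(rows: Iterable[dict], size: int) -> Iterator[list[dict]]:
    """Yield lists of up to `size` rows (itertools.batched exists only in Python 3.12+)."""
    it = iter(rows)
    while chunk := list(islice(it, size)):
        yield chunk


def load(conn: sqlite3.Connection, rows: Iterable[dict], batch_size: int = 500) -> int:
    """Upsert rows into the hypothetical `orders` table in batches; return rows written."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        " id INTEGER PRIMARY KEY, name TEXT NOT NULL, amount REAL NOT NULL)"
    )
    written = 0
    for chunk in batched(rows, batch_size):
        with conn:  # commits the batch, or rolls it back if any row fails
            conn.executemany(
                "INSERT INTO orders (id, name, amount) VALUES (:id, :name, :amount) "
                "ON CONFLICT(id) DO UPDATE SET name = excluded.name, amount = excluded.amount",
                chunk,
            )
        written += len(chunk)
    return written


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(conn, [{"id": 1, "name": "Alice", "amount": 120.5}, {"id": 2, "name": "Bob", "amount": 80.0}])
    print(conn.execute("SELECT * FROM orders").fetchall())
```

Calling `load` with small batches as records arrive approximates near-real-time loading, while larger batches suit scheduled jobs.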

For example, American Express uses ETL to combine customer data from multiple platforms. The company gathers large volumes of data from its customers’ transactions and interactions, which lets it examine customer behavior, build highly personalized campaigns, and improve the effectiveness of its targeted marketing.

Searching for top ETL tools to connect your data seamlessly? Look no further! Hevo’s no-code platform simplifies your ETL process like never before. Try Hevo today and empower your team to:

- Integrate data from 150+ sources (60+ free sources).

- Simplify data mapping with an intuitive, user-friendly interface.

- Instantly load and sync your transformed data into your desired destination.

Don’t just take our word for it. Try Hevo and experience why industry leaders like Whatfix say, “We’re extremely happy to have Hevo on our side.”

What is ETL Architecture?

An ETL architecture defines how data moves from source systems to target storage systems. It covers the design of the protocols, tools, and systems that handle data extraction, transformation, and loading.

Here is an ETL architecture diagram to show its key components:

1. Data Source Systems

These are the original data sources, such as databases, applications, sensors, and third-party services.

2. ETL Engine

This core component extracts, transforms, and loads data. This is the ETL “workhorse”.

3. Staging Area

It is a temporary storage area that holds extracted data before transformation, so the transformation work does not affect the source or target systems.

4. Data Warehouse/Target Storage

It is the final destination for the transformed data, typically a database or data warehouse where data is stored and made available for analysis.

The data flow in an ETL pipeline typically follows this sequence: the pipeline extracts data from the source system, moves it to a staging area, transforms it, and loads it into a data warehouse or other target storage system. The data warehouse provides a single source of truth for reporting and analytics, giving enterprises a clear view of their data.
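The sequence above can be sketched with SQLite, assuming for brevity that the staging area and the target table live in one in-memory database; in practice the staging area is often separate storage. The table names `staging_orders` and `fact_sales` are hypothetical. Raw rows land in staging untouched, a SQL transformation cleans and aggregates them into the target, and staging is cleared once the load has succeeded.

```python
import sqlite3


def run_pipeline(source_rows: list[tuple[str, str]]) -> sqlite3.Connection:
    """Extract -> staging -> transform -> load, using one in-memory SQLite database."""
    conn = sqlite3.connect(":memory:")
    # Staging area: a raw, untyped landing table for extracted data.
    conn.execute("CREATE TABLE staging_orders (customer TEXT, amount TEXT)")
    # Target: a typed, analysis-ready table (stand-in for the data warehouse).
    conn.execute("CREATE TABLE fact_sales (customer TEXT PRIMARY KEY, total REAL)")
    with conn:  # the steps below commit together or not at all
        # 1. Extract: land source rows in staging exactly as received.
        conn.executemany("INSERT INTO staging_orders VALUES (?, ?)", source_rows)
        # 2-3. Transform and load: normalize names, drop blank amounts, aggregate.
        conn.execute(
            """
            INSERT INTO fact_sales (customer, total)
            SELECT UPPER(TRIM(customer)), SUM(CAST(amount AS REAL))
            FROM staging_orders
            WHERE TRIM(amount) <> ''
            GROUP BY UPPER(TRIM(customer))
            """
        )
        # 4. Clear staging now that the data is in the target table.
        conn.execute("DELETE FROM staging_orders")
    return conn


if __name__ == "__main__":
    conn = run_pipeline([("acme ", "100.0"), ("Acme", "50.5"), ("globex", "20"), ("globex", "")])
    print(conn.execute("SELECT * FROM fact_sales").fetchall())
```

Because the transformation reads only from the staging table, the source system is touched once, during extraction, which is the isolation the staging area is meant to provide.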

Importance of ETL Architecture

A well-designed architecture is essential for streamlining data workflows and ensuring businesses can make informed decisions. Here’s how:

1. Streamlining Data Workflows

It simplifies how data is moved and managed across systems and automates many manual processes and operations along the way.

2. Business Intelligence

It ensures that data is up to date and accurate so that the business can make reliable decisions. High-quality data fuels better business intelligence, improving strategic planning, forecasting, and performance monitoring.

3. Scalability

Data grows with your business. A robust architecture lets your system handle large-scale data processing without degrading performance, which keeps the pipeline efficient as volumes increase.

4. Reduced Complexity

A clear and structured architecture reduces the complexity of managing diverse data sources. Businesses can streamline maintenance and troubleshooting by organizing data flows and processing steps.

ETL architecture is the backbone of the data-driven decision-making process. It ensures that data is appropriately processed and stored, enabling businesses to leverage their data effectively.

What to Consider When Designing ETL Architecture?

Designing an efficient ETL architecture requires careful consideration of several factors. These factors can influence your data pipelines’ performance, scalability, and reliability.

- Data Volume: The way your architecture is designed will depend on how much data your company uses. Distributed systems and high-performance computing may be needed for large amounts of data.

- Data Variety: Your data may be structured, semi-structured, or unstructured. Your architecture must be able to integrate and convert all of these formats.

- Performance Requirements: How fast must the ETL process run? Decide between real-time and batch processing based on your performance goals.

- Data Quality: High data quality is essential. Data validation, cleaning, and enrichment should be part of your architecture to improve insights.

- Scalability: Data volume rises with your business. Your ETL solution should be scalable enough to accommodate growing data sets without reengineering.

- Cost efficiency: Always consider cost. Choose ETL tools and solutions that fit your budget without sacrificing performance, dependability, or scalability.

- Security and Compliance: Security should be a top priority, especially when dealing with sensitive data. Your architecture should encrypt data, restrict access, and comply with regulations such as GDPR and HIPAA.
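The Data Variety consideration is easiest to see with semi-structured input. The following sketch assumes JSON-like records whose nested objects vary from record to record. It flattens each record into dotted column names (a common convention, not the only one), stores lists as JSON text, and aligns every record to a shared column set so the result fits a tabular target. Fields a record lacks become `None`.

```python
import json
from typing import Any


def flatten(record: dict[str, Any], prefix: str = "") -> dict[str, Any]:
    """Flatten nested dicts into dotted keys, e.g. {"a": {"b": 1}} -> {"a.b": 1}."""
    flat: dict[str, Any] = {}
    for key, value in record.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        elif isinstance(value, list):
            flat[name] = json.dumps(value)  # keep arrays as JSON text in one column
        else:
            flat[name] = value
    return flat


def to_rows(records: list[dict[str, Any]]) -> tuple[list[str], list[list[Any]]]:
    """Align flattened records to one sorted column list; absent fields become None."""
    flat = [flatten(r) for r in records]
    columns = sorted({column for r in flat for column in r})
    return columns, [[r.get(column) for column in columns] for r in flat]


if __name__ == "__main__":
    events = [
        {"id": 1, "customer": {"name": "Alice", "address": {"city": "Paris"}}, "tags": ["vip"]},
        {"id": 2, "customer": {"name": "Bob"}},
    ]
    columns, rows = to_rows(events)
    print(columns)
    for row in rows:
        print(row)
```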

How to Build an ETL Architecture for Your Business?

Here are the main steps involved in designing an effective architecture for ETL:

- Define Data Sources: Identify every source your data will come from. Knowing your data sources is essential for planning extraction.

- Choose ETL Tools: Choose the right ETL tools, such as Apache NiFi, Stitch, Informatica, or Talend. Check that the tools support your data formats and processing requirements.

- Plan the ETL Workflow: Map out the workflow in detail, with clear data mappings from extraction to loading. Check that transformation steps match business and reporting standards, and plan the staging area and transformation logic.

- Set Up Data Storage: Select a storage solution for your transformed data, such as a data warehouse (e.g., Amazon Redshift, Google BigQuery) for structured, analysis-ready data, or a data lake, which can store massive amounts of raw and unstructured data.

- Automate ETL: Automation is key to maintaining efficient data pipelines. Schedule or trigger operations such as extraction and transformation to reduce manual intervention and keep your data up to date.

- Monitor and Optimize: After deploying the architecture, monitor and optimize its performance. Track data processing times, error rates, and system health. Regular optimization keeps the system efficient as data volumes grow.

- Security and Compliance: Apply encryption, access controls, and auditing to your data pipelines, and make sure your architecture meets industry-specific regulatory requirements.

- Validation: Test and validate your ETL system before going live. Verify data quality, performance, and reliability, and confirm that the transformed data meets business needs.

- Scale as needed: Your architecture should grow with your data. This could require increasing processing power, optimizing data storage, or using advanced data integration methods.
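For the Monitor and Optimize step, even a simple wrapper can capture the metrics mentioned above: processing time, row counts, and error rate. This is a minimal sketch; `run_monitored`, `RunMetrics`, and the 5% alert threshold are hypothetical names and values chosen for illustration, and a production setup would usually send these metrics to a monitoring system rather than only logging them.

```python
import logging
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Iterable

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("etl.monitor")

# Hypothetical alert threshold: warn when more than 5% of rows fail.
ALERT_ERROR_RATE = 0.05


@dataclass
class RunMetrics:
    rows_in: int = 0
    rows_ok: int = 0
    errors: list[str] = field(default_factory=list)
    seconds: float = 0.0

    @property
    def error_rate(self) -> float:
        return len(self.errors) / self.rows_in if self.rows_in else 0.0


def run_monitored(rows: Iterable[Any], process: Callable[[Any], Any]) -> RunMetrics:
    """Apply `process` to each row, recording timing, throughput, and failures."""
    metrics = RunMetrics()
    start = time.perf_counter()
    for row in rows:
        metrics.rows_in += 1
        try:
            process(row)
            metrics.rows_ok += 1
        except Exception as exc:  # record the failure and keep going
            metrics.errors.append(f"row {metrics.rows_in}: {exc!r}")
    metrics.seconds = time.perf_counter() - start
    log.info("processed %d rows (%d ok) in %.3fs", metrics.rows_in, metrics.rows_ok, metrics.seconds)
    if metrics.error_rate > ALERT_ERROR_RATE:
        log.warning("error rate %.1f%% exceeds threshold; first error: %s",
                    100 * metrics.error_rate, metrics.errors[0])
    return metrics


if __name__ == "__main__":
    run_monitored(["1", "2", "oops", "4"], int)
```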

Challenges in Designing ETL Architecture

Designing an ETL architecture is a challenging task. Data must be extracted from numerous sources, formatted, and loaded into a storage system, and each step can present obstacles.

- Handling huge data sets is challenging. Big data has made organizing, processing, and integrating large datasets more difficult. Furthermore, ensuring data quality can be a daunting task.

- Data integration can be complex. Organizations often rely on many different databases and cloud systems, and integrating them requires careful planning and sophisticated techniques.

- Scalability and flexibility are essential. As data grows and business needs change, ETL design should adapt to new data sources and transformations. Architecture can become a bottleneck without scalability.

- Finally, ETL monitoring and troubleshooting are crucial. Strong monitoring tools are needed to find bottlenecks, errors, and inefficiencies. Without tracking, performance can degrade and critical issues can go unnoticed, affecting decision-making.

To know more about the challenges and best practices related to ETL, check out our blog.

How to Build an Effective ETL Architecture Using Hevo?

While designing an architecture for your ETL pipelines may seem complex, using the right tools can simplify the process. Hevo is a cloud-based ETL tool that is easy to use and does not require any coding. You can integrate data from many sources into your data warehouse seamlessly. Users with little to no coding experience can set up ETL procedures with its simple interface. Organizations can benefit from Hevo’s efficiency because of its many important features:

1. Seamless Data Integration

Hevo facilitates data integration by connecting to 150+ data sources, such as databases, SaaS applications, and cloud storage systems (e.g., BigQuery, Postgres, and AWS).

2. Automated Platform

All ETL actions are automated within Hevo, saving time and reducing manual work and errors.

3. Real-time data transfer

Hevo provides real-time data transfer capabilities, ensuring organizations have access to the most up-to-date information for analysis and decision-making. Your data is always current.

4. Data Quality Assurance

Hevo has built-in validation tools that help ensure data consistency and accuracy. The platform checks your data for accuracy before it’s loaded, so you can trust the insights you get.

5. Scalability

Hevo makes your data infrastructure scalable as your business grows.

Best Practices for ETL Architecture Design

To develop an effective architecture, apply ETL best practices that reduce errors and improve performance and scalability.

1. Design for Scalability

Your ETL design should scale to accommodate growing data. Treat scalability as a design principle so that the architecture can handle larger data sets and more complicated transformations as your business grows.

2. Consider Data Quality

Maintaining data quality is critical to successful ETL implementation. Data inconsistencies, duplicates, or missing values can affect your analysis. Implement validation checks and data-cleaning techniques.
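A minimal sketch of such validation checks, assuming each record must have a non-empty `id` and `email` (hypothetical required fields). Instead of silently dropping bad rows, it routes them to a rejected list with the reason, so they can be reviewed or reprocessed later. The email check is deliberately crude and only marks where a real format rule would go.

```python
from typing import Any

# Hypothetical schema rule: every record needs a non-empty id and email.
REQUIRED_FIELDS = ("id", "email")


def validate(rows: list[dict[str, Any]]) -> tuple[list[dict], list[tuple[dict, str]]]:
    """Split rows into valid ones and (row, reason) rejects for later review."""
    seen_ids = set()
    valid, rejected = [], []
    for row in rows:
        missing = [f for f in REQUIRED_FIELDS if row.get(f) in (None, "")]
        if missing:
            rejected.append((row, "missing: " + ", ".join(missing)))
        elif row["id"] in seen_ids:  # the first valid occurrence of an id wins
            rejected.append((row, f"duplicate id: {row['id']}"))
        elif "@" not in str(row["email"]):  # crude placeholder for a real format check
            rejected.append((row, "invalid email"))
        else:
            seen_ids.add(row["id"])
            valid.append(row)
    return valid, rejected
```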

3. Selecting ETL Tools

The appropriate tools can make or break your ETL infrastructure. You can use open-source tools, cloud-based solutions like Hevo, or proprietary systems. Make sure your tool supports your data sources and transformations.

4. Using Parallel Processing

Processing huge data sets sequentially is slow. Parallel processing speeds up ETL by splitting jobs into smaller parts that can be processed simultaneously.
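The split-and-parallelize idea can be sketched with Python’s standard `concurrent.futures` module. This sketch assumes the per-chunk work is I/O-bound (for example, calling an API or writing to a database), which is where threads help; for CPU-heavy transformations, `ProcessPoolExecutor` is the usual swap, since Python threads do not execute Python code in parallel. `process_chunk` is a hypothetical placeholder for real work.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import islice
from typing import Iterable, Iterator


def chunked(items: Iterable[str], size: int) -> Iterator[list[str]]:
    """Split an iterable into lists of at most `size` items."""
    it = iter(items)
    while chunk := list(islice(it, size)):
        yield chunk


def process_chunk(chunk: list[str]) -> list[str]:
    """Hypothetical per-chunk work; a real job might call an API or write to a database."""
    return [value.strip().lower() for value in chunk]


def process_in_parallel(items: Iterable[str], chunk_size: int = 1000, workers: int = 4) -> list[str]:
    """Process chunks concurrently; pool.map yields results in input order."""
    results: list[str] = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for part in pool.map(process_chunk, chunked(items, chunk_size)):
            results.extend(part)
    return results
```

Because `pool.map` returns results in submission order, the output order matches the input even though chunks may finish at different times.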

5. Prioritize Error Handling

ETL errors can disrupt the data pipeline. Robust error-handling methods that capture, log, and resolve errors are necessary. Consider adding retry logic to reprocess failed tasks automatically.
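Retry logic might look like the following sketch, which reruns a task with exponential backoff and logs each failure. It assumes that only transient errors are worth retrying (here `ConnectionError` and `TimeoutError`, both Python built-ins); any other exception, such as a data error, propagates immediately so it is not masked. The function name and default delays are illustrative.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
log = logging.getLogger("etl.retry")


def run_with_retry(
    task: Callable[[], T],
    *,
    attempts: int = 3,
    base_delay: float = 0.5,
    retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
) -> T:
    """Run `task`, retrying transient failures with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except retry_on as exc:  # other exception types propagate immediately
            if attempt == attempts:
                log.error("giving up after %d attempts: %r", attempt, exc)
                raise
            delay = base_delay * 2 ** (attempt - 1)  # 0.5s, 1s, 2s, ...
            log.warning("attempt %d failed (%r); retrying in %.1fs", attempt, exc, delay)
            time.sleep(delay)
    raise AssertionError("unreachable: the loop always returns or raises")
```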

6. Improve Performance

Minimize needless transformations and data transfer to improve ETL speed. Effective transformations, indexing, and reducing redundant operations can speed up your ETL pipeline.
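One common way to cut needless data transfer is incremental extraction: keep a watermark (here, the latest `updated_at` value seen) and pull only rows that changed since the previous run. This sketch assumes a hypothetical SQLite `orders` table whose `updated_at` column holds ISO-8601 timestamp strings, so text comparison matches chronological order. The index on `updated_at` lets the filter avoid a full table scan.

```python
import sqlite3


def ensure_index(conn: sqlite3.Connection) -> None:
    """Index the watermark column so incremental queries avoid full table scans."""
    conn.execute("CREATE INDEX IF NOT EXISTS idx_orders_updated_at ON orders (updated_at)")


def extract_incremental(conn: sqlite3.Connection, watermark: str) -> tuple[list[tuple], str]:
    """Return rows changed after `watermark`, plus the new watermark to persist."""
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else watermark  # unchanged if nothing is new
    return rows, new_watermark
```

Using a strict `>` assumes no two changes share a timestamp; production pipelines often add a tie-breaker column or rely on change data capture instead.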

References:

1. General Data Generation Statistics

Conclusion

ETL architecture is more than just a technical process: it is a fundamental component of how businesses manage and leverage their data. Designing an effective architecture requires careful planning and attention to detail. By understanding the challenges and implementing best practices, you can build an architecture that meets your business needs. Tools like Hevo can simplify data extraction, transformation, and loading. Sign up for a free trial today and experience Hevo’s rich feature suite for seamless pipeline creation.

FAQs

1. What’s ETL architecture?

ETL architecture is the framework through which data is extracted from its sources, transformed, and loaded into a storage system for analysis or reporting.

2. What are the three layers of ETL architecture?

ETL architecture has three layers: extraction, transformation, and loading. Each layer handles a data pipeline stage.

3. What are the five ETL steps?

The five ETL steps are extraction, transformation, loading, automation, and monitoring.

4. What ETL architecture should you use?

Your data size, sources, transformation needs, and scalability requirements determine your ETL architecture. Cloud-based ETL solutions like Hevo simplify integration and automation.