Move your data from Google Drive to Snowflake to enhance your analytics capabilities. With Hevo’s intuitive pipeline setup, data flows in real time.

As a data engineer, you hold all the cards when it comes to making data accessible to your business teams easily and on time. Your marketing, sales, and finance teams have just requested a Google Drive to Snowflake connection, on priority, to quickly replicate their spreadsheet data. We know you don’t want to keep your data scientists and business analysts waiting for critical business insights.

The most direct approach, if this is a one-time task, is to download the data from Google Drive and upload it to Snowflake. Alternatively, you can use a no-code tool that fully automates and manages the data integration for you while you focus on your core objectives.

Well, look no further. With this article, get a step-by-step guide to connect Google Drive to Snowflake effectively and quickly deliver data to your marketing team.

Overview of Snowflake

Snowflake is a cloud-native data warehousing platform that offers unparalleled scalability and analytical capabilities. It enables seamless data storage, processing, and analysis, making it perfect for handling complex data workloads.

Together, Google Drive and Snowflake form a robust ecosystem that addresses the technical challenges associated with data integration. Simplify Snowflake budgeting with our easy-to-use Snowflake pricing calculator and tailor costs to your needs.

Method 1: Exporting & Importing spreadsheets as CSV Files

Integrating Google Drive with Snowflake by hand can be tedious and time-consuming. In this method, you manually export your data as CSV files and load those files into your Snowflake account.

Method 2: Automate the Data Migration process using Hevo

Skip the complexity of coding and multiple setup steps. With Hevo, you can seamlessly migrate data from Google Drive to Snowflake in minutes. Simplify your data integration process effortlessly!

Overview of Google Drive

Google Drive is a Cloud Storage Service that allows you to save files online and access them from any smartphone, tablet, or computer that has an Internet connection.

A Cloud Storage Service such as Google Drive offers several advantages, including easier file sharing and a remote location to back up your files. Compared with competitors such as Dropbox and Apple’s iCloud service, Google Drive’s popularity has been built on helpful collaboration tools and built-in integrations with Google’s suite of products and services.

You can also use free Web-Based tools to create Documents, Spreadsheets, Presentations, and more by integrating Google Drive with other Google products.

How to connect Google Drive to Snowflake?

Method 1: Exporting & Importing spreadsheets as CSV Files

To replicate data from Google Drive to Snowflake, you can follow the steps given below:

- Step 1.1: Data can be present in Google Drive as spreadsheets in the form of Google Sheets, Excel, and CSV files. Select the required files and download your data in CSV format to your local system. For Google Forms, first export the responses to a Google Sheet and then follow the same procedure.

- Step 1.2: Log in to your Snowflake account and select the database where you want to upload the files. Use the CREATE OR REPLACE FILE FORMAT command to create a named file format for CSV.

use database test_db;

create or replace file format new_csv_format

type = csv

field_delimiter = ','

skip_header = 1

null_if = ('NULL', 'null')

empty_field_as_null = true

compression = gzip;

- Step 1.3: Assuming no destination table exists, use the create or replace table command to create the new table.

CREATE OR REPLACE TABLE test_students (

student_ID number,

First_Name varchar(25),

Last_Name varchar(25),

Admission_Date DATE

);

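- Step 1.4: Now, upload the CSV file to the table’s internal stage in Snowflake using the PUT command. Run PUT from a client such as SnowSQL, since it reads the file from your local system.

put file://D:\test_stud.csv @test_db.PUBLIC.%test_students;

- Step 1.5: Load the data into your target table using the COPY INTO command. By default, PUT compresses the file with gzip, which is why the pattern below matches test_stud.csv.gz.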

copy into test_students

from @%test_students

file_format = (format_name = 'new_csv_format' , error_on_column_count_mismatch=false)

pattern = '.*test_stud.csv.gz'

on_error = 'skip_file';
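Because on_error = 'skip_file' skips the entire file as soon as one row fails, it can help to check the exported CSV locally before Step 1.4. The following is a minimal Python sketch, not part of Snowflake’s tooling. The function name find_bad_rows is hypothetical, and the sketch assumes the export has the same four columns as test_students, in the same order, with dates written as YYYY-MM-DD. It is deliberately stricter than Snowflake, which accepts more number and date formats, so treat its output as rows worth reviewing rather than guaranteed failures.

```python
import csv
import io
from datetime import date

# Assumption: the export mirrors the test_students table from Step 1.3.
EXPECTED_COLUMNS = 4
# Values the file format from Step 1.2 loads as NULL.
NULL_MARKERS = {"", "NULL", "null"}


def find_bad_rows(csv_text):
    """Return (row_number, reason) pairs for rows worth reviewing before upload."""
    problems = []
    reader = csv.reader(io.StringIO(csv_text))
    next(reader, None)  # skip the header row, mirroring skip_header = 1
    for row_number, row in enumerate(reader, start=2):
        if len(row) != EXPECTED_COLUMNS:
            problems.append((row_number, "unexpected column count"))
            continue
        student_id, _first_name, _last_name, admitted = (v.strip() for v in row)
        # Strict check: digits only (Snowflake itself accepts more forms).
        if student_id not in NULL_MARKERS and not student_id.isdigit():
            problems.append((row_number, "student_ID is not a number"))
        if admitted not in NULL_MARKERS:
            try:
                date.fromisoformat(admitted)
            except ValueError:
                problems.append((row_number, "Admission_Date is not YYYY-MM-DD"))
    return problems


sample = (
    "student_ID,First_Name,Last_Name,Admission_Date\n"
    "1,Ada,Lovelace,2023-09-01\n"
    "x2,Alan,Turing,2023-09-01\n"
    "3,Grace,Hopper,01/09/2023\n"
)
for row_number, reason in find_bad_rows(sample):
    print(f"row {row_number}: {reason}")
```

In this sample, rows 3 and 4 are flagged. Fixing them in the spreadsheet and exporting again is usually quicker than finding out after the load that the whole file was skipped.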

This five-step, hands-on approach is an effective way to replicate data from Google Drive to Snowflake using CSV files and SQL queries. It works best in the following scenarios:

- One-Time Data Replication: When your business teams need this Google Drive data only quarterly, yearly, or just this one time, then the manual effort & time is justified.

- No Data Transformation Required: This approach has limited options in terms of data transformation. Hence, it is ideal if the data in your spreadsheets is clean, standardized, and present in an analysis-ready form.

- Few Files: Downloading several CSV files and writing SQL queries to upload them is a time-intensive job. It can be especially tedious if you need to generate a 360-degree view of the business and merge spreadsheets containing data from multiple departments across the firm.
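If you do end up with a handful of files, you can generate the repetitive PUT and COPY INTO statements instead of typing them. The Python sketch below only builds the SQL text, which you then run in a Snowflake client. The function build_load_statements and the example paths are hypothetical, and it assumes each target table already exists and is named after its file (for example, test_students.csv loads into test_students). It reuses the new_csv_format file format from Step 1.2 and selects the staged file with the FILES option of COPY INTO rather than a pattern.

```python
from pathlib import PurePosixPath


def build_load_statements(csv_path, file_format="new_csv_format"):
    """Return the PUT and COPY INTO statements for one exported CSV file.

    Assumption: the target table exists and shares its name with the file,
    e.g. /tmp/exports/test_students.csv loads into test_students.
    """
    path = PurePosixPath(csv_path)
    table = path.stem
    # Upload to the table's internal stage; PUT gzips the file by default.
    put = f"put file://{path} @%{table};"
    copy = (
        f"copy into {table} from @%{table} "
        f"file_format = (format_name = '{file_format}') "
        f"files = ('{path.name}.gz') "
        "on_error = 'skip_file';"
    )
    return [put, copy]


# Hypothetical export locations on a Linux or macOS machine.
for exported in ["/tmp/exports/test_students.csv", "/tmp/exports/test_courses.csv"]:
    for statement in build_load_statements(exported):
        print(statement)
```

The file name is inserted into the SQL text as-is, so use this only with file names you control.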

However, if you want to leverage complete data from multiple sources besides Google Drive, manually downloading and transforming the files doesn’t make sense. You would need to develop custom connectors and continuously manage the data pipeline to ensure that no data is lost in transfer. You would also have to keep monitoring the connectors for updates and be on call to fix pipeline issues at any time. With most raw data being unclean and in multiple formats, setting up transformations for all these sources is another challenge.

A great alternative is to try out a No-code tool that completely automates the Google Drive to Snowflake ETL/ELT process and manages it for you.

Method 2: Automate the Data Replication process using a No-Code Tool

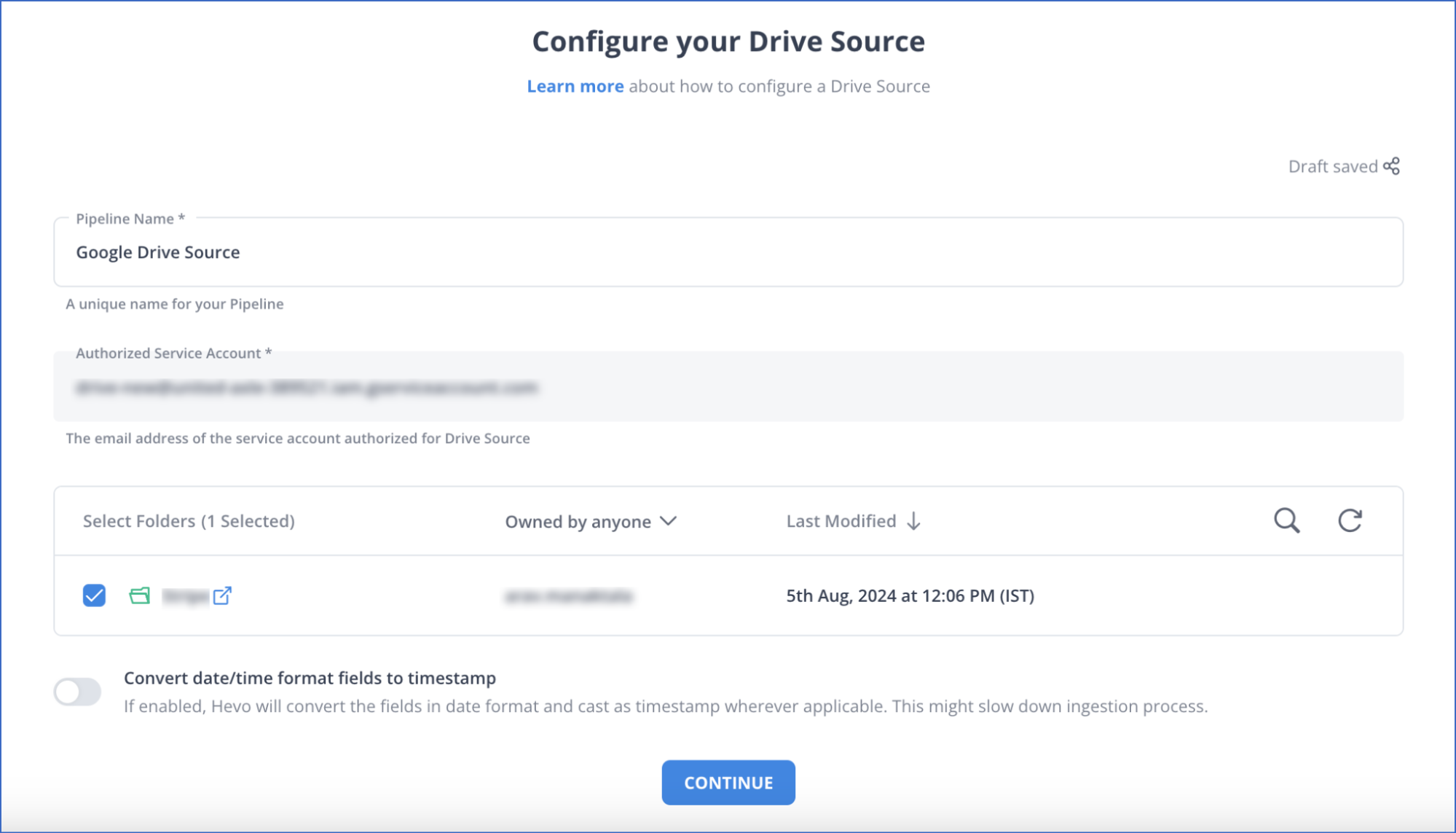

Step 2.1: Select Google Drive as your Source

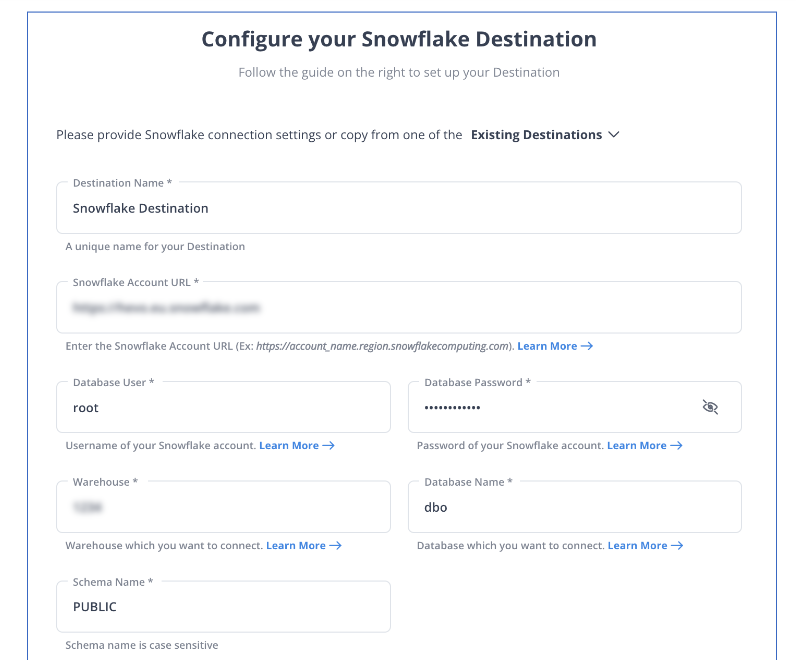

Step 2.2: Select Snowflake as your Destination

In a matter of minutes, you can complete this No-Code & automated approach of connecting Google Drive to Snowflake using Hevo and start analyzing your data.

Salient Features of Hevo

- Fully Managed: Hevo Data is a fully managed service and is straightforward to set up.

- Schema Management: Hevo Data automatically maps the source schema, so you can perform analysis without worrying about schema changes.

- Real-Time: Hevo Data supports both batch and real-time data transfer, so your data is always analysis-ready.

- Live Support: With 24/5 support, Hevo provides customer-centric solutions to the business use case.

What can you achieve by migrating your data from Google Drive to Snowflake?

Here’s a little something for the data analyst on your team. We’ve listed a few core insights you could get by replicating data from Google Drive to Snowflake. Does your use case make the list?

- Know your customer: Get a unified view of your customer journey by combining data from all your channels and user touchpoints. Easily visualize each stage of your sales funnel and quickly derive actionable insights.

- Supercharge your conversion rates: Leverage analysis-ready impressions, website visits, & clicks data from multiple sources in a single place. Understand what content works best for you and double down on it to increase conversions.

- Boost Marketing ROI: With detailed campaign reports at your grasp in near-real time, reallocate your budget to the most effective Ad strategy.

Also, read more about loading data into Snowflake to work seamlessly with your Google Drive data.

Summing It All Together

Exporting and importing CSV files is the right path for you when your marketing and sales teams need data from Google Drive only once in a while. However, a custom ETL solution becomes necessary for real-time data demands, such as monitoring campaign performance or viewing recent user interactions with your product or marketing channel. You can free your engineering bandwidth from these repetitive and resource-intensive tasks by selecting Hevo’s 150+ plug-and-play integrations.

Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

FAQ on Google Drive to Snowflake

1) Can Snowflake connect to Google Drive?

Snowflake does not natively support direct connections to Google Drive. However, you can work around this by using intermediate steps to transfer data from Google Drive to Snowflake.

2) How do I get my data into Snowflake?

a) Using Cloud Storage (e.g., AWS S3, Google Cloud Storage)

b) Using Snowflake Web Interface

c) Using ETL Tools

d) Using Snowpipe

3) How do you load data from GCP to Snowflake?

Load data from GCP to Snowflake by exporting your data to Google Cloud Storage and then using Snowflake’s COPY INTO command to load the data from Google Cloud Storage into Snowflake.