ChatGPT has become a go-to search tool for many. We often use it instead of Google to get a quick answer to a query. Given its popularity, why not add a customer support or troubleshooting chatbot to your business?

Imagine having a brand-specific chatbot with expertise in answering your business-related queries. That’s what Snowflake Cortex offers. It connects large language models (LLMs) to your business data to create custom RAG applications, and the process of making end-to-end RAG applications with Cortex is straightforward.

What are RAG applications?

Suppose you own a brand that sells home appliances like televisions and refrigerators. You want a chatbot that answers customers’ queries about product features, available offers, and user guides.

Leveraging LLM-powered chatbots in these scenarios enhances customer experience. However, general-purpose large language models like GPT-4 know nothing about your private data, so they are limited to providing generic answers.

For example, if a customer asks about the features of a specific refrigerator, the LLM can describe refrigerators in general but not the specifics of your product. These generic responses are a significant drawback to implementing LLMs in your business use cases.

That’s where RAG comes into play. Applications that combine the generative power of large language models and retrieval mechanisms on custom data are called RAG applications.

RAG allows LLMs to access specific information from your databases and resolve customer queries more accurately. This approach tailors LLM responses to your organization’s needs without retraining the model.
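To make the idea concrete, here is a minimal sketch of the retrieve-then-generate pattern in plain Python. It is an illustration only: the product snippets and names are hypothetical, and the word-overlap ranking stands in for the semantic search a real service such as Cortex would use. The final prompt is what would be passed to an LLM.

```python
import re
from collections import Counter

# Hypothetical product snippets standing in for your business documents.
DOCUMENTS = [
    "The FrostLine 300 refrigerator has a 300 litre capacity and an inverter compressor.",
    "The ViewMax 55 television supports 4K resolution and has three HDMI ports.",
    "All appliances come with a two year warranty and free installation.",
]


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by the number of tokens shared with the question."""
    q_tokens = tokenize(question)
    scored = [(sum((q_tokens & tokenize(doc)).values()), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no tokens with the question.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(question: str, context: list[str]) -> str:
    """Combine the retrieved context and the question into one LLM prompt."""
    context_block = "\n".join(f"- {doc}" for doc in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}"
    )


question = "What is the capacity of the FrostLine refrigerator?"
print(build_prompt(question, retrieve(question, DOCUMENTS)))
```

The retrieval step narrows the documents down to the relevant ones, and the generation step only sees that narrowed context. This is why the answer can mention your specific product rather than refrigerators in general.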

As you learn how to build RAG applications using Snowflake Cortex, consider how Hevo can streamline your data integration process. Our ETL solution provides real-time data syncing to keep your RAG models up-to-date.

Check out what makes Hevo amazing:

- It has a highly interactive UI, which is easy to use.

- It streamlines your data integration task and allows you to scale horizontally.

- The Hevo team is available round the clock to extend exceptional support to you.

Hevo has been rated 4.7/5 on Capterra. Know more about our 2000+ customers and give us a try.

Why Snowflake Cortex for LLMs and RAG?

Snowflake Cortex is a fully managed service that allows anyone, even without much technical background, to create and launch AI applications.

Serverless AI

Maintaining large language models requires complex infrastructure and enormous resources. Not every company can afford this, yet many still want to leverage LLMs in their chat services. That’s where serverless AI like Cortex shines.

Snowflake Cortex is a serverless AI service that allows you to build and deploy LLM applications without worrying about infrastructure. The service provisions the required resources in the cloud for you.

Not only does it remove the need for infrastructure management, but it also allocates resources optimally to ensure high performance. Its automatic scaling feature lets your application use resources effectively and charges you only for the resources you use.

Security

Providing enterprise data to LLMs is risky, especially if the app leaks your sales strategies, finance numbers, and customer details.

Snowflake is certified against ISO/IEC 27001, an international standard for information security management. The standard sets out best practices for managing data security, which helps ensure that your data on Snowflake servers is well managed and that its privacy is maintained.

Moreover, the platform’s features, like role-based access controls, can be used to manage data access for different roles in your organization.
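As a rough illustration of role-based access, the sketch below generates the kind of `GRANT` statements that give one role read-only access to a single schema. The role, database, and schema names are hypothetical, and the exact privileges your chatbot role needs will depend on your setup, so treat this as a starting point rather than a complete policy.

```python
import re

# Accept only simple unquoted identifiers so names cannot inject extra SQL.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def check_ident(name: str) -> str:
    """Return the name unchanged if it is a simple identifier, else raise."""
    if not _IDENT.match(name):
        raise ValueError(f"unsafe identifier: {name!r}")
    return name


def read_only_grants(role: str, database: str, schema: str) -> list[str]:
    """Build GRANT statements giving a role read-only access to one schema."""
    role, database, schema = map(check_ident, (role, database, schema))
    qualified = f"{database}.{schema}"
    return [
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role};",
        f"GRANT USAGE ON SCHEMA {qualified} TO ROLE {role};",
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {qualified} TO ROLE {role};",
    ]


# Hypothetical names, used for illustration only.
for statement in read_only_grants("SUPPORT_BOT", "APPLIANCES", "PUBLIC"):
    print(statement)
```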

Snowflake encrypts data both at rest and in transit so that no intruder can read it. Industry-standard algorithms such as AES-256 are used for data at rest, while Transport Layer Security (TLS) is used to encrypt data in transit.

Access to industry-leading AI models

Snowflake Cortex gives instant access to leading language models of different sizes.

Large models

- reka-core: Known for code generation and multilingual fluency.

- mistral-large: Mistral AI’s large language model specializes in synthetic text generation.

- llama: Open-source LLM from Meta.

Medium models

- snowflake-arctic: Snowflake’s LLM excels at SQL generation and coding

- mixtral-8x7b: Ideal for text generation, classification, and resolving queries

- llama3-70b: Great for chat applications, content creation, and enterprise applications

Small models

- gemma-7b: Suitable for simple code and text generation capabilities

- llama3-8b: Ideal for use cases requiring moderate reasoning and better accuracy
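One way to use these models is through the `SNOWFLAKE.CORTEX.COMPLETE` SQL function, which takes a model name and a prompt. The sketch below keeps the model names from the lists above in a lookup table and builds the SQL text for a call. The table layout and the helper name are our own assumptions, the large `llama` entry is omitted because the list gives no exact model identifier, and model availability can vary by region, so check the statement against your account before relying on it.

```python
# Model names as listed above, grouped by size.
MODELS_BY_SIZE = {
    "large": ["reka-core", "mistral-large"],
    "medium": ["snowflake-arctic", "mixtral-8x7b", "llama3-70b"],
    "small": ["gemma-7b", "llama3-8b"],
}


def sql_literal(value: str) -> str:
    """Quote a Python string as a SQL string literal (escape \\ and ')."""
    escaped = value.replace("\\", "\\\\").replace("'", "''")
    return f"'{escaped}'"


def complete_sql(model: str, prompt: str) -> str:
    """Build a SELECT statement that calls SNOWFLAKE.CORTEX.COMPLETE."""
    known = {name for names in MODELS_BY_SIZE.values() for name in names}
    if model not in known:
        raise ValueError(f"unknown model: {model}")
    return (
        f"SELECT SNOWFLAKE.CORTEX.COMPLETE({sql_literal(model)}, "
        f"{sql_literal(prompt)}) AS response;"
    )


# Pick a small model for a quick, low-cost test prompt.
print(complete_sql(MODELS_BY_SIZE["small"][1], "What's a RAG application?"))
```

Starting with a small model and moving up only when answers fall short is a reasonable way to keep costs down while you experiment.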

Document AI

Document AI is a Snowflake tool designed to extract information from a variety of documents. It can handle documents that contain handwritten text, logos, and signatures, as well as business documents such as invoices.

You can train this Cortex tool on documents specific to your use case. Snowflake never shares this trained model with external services—only you can access and use it for your business.

Moreover, the tool automates the processing of a specific document type and gives your unstructured documents a structured format.

Integrations

Snowflake Cortex supports integration with multiple types of data platforms. For streaming use cases, Cortex integrates with data ingestion tools like Apache Kafka, AWS Glue, and Google Cloud Dataflow.

If you need to use data on cloud platforms, Snowflake Cortex connects with popular cloud storage systems like Amazon S3, Google Cloud Storage, and Azure Blob Storage.

Moreover, third-party services like Airbyte can automatically sync data between various sources and Snowflake Cortex.

Steps for Building RAG Applications with Cortex

Let’s build a search chatbot with Snowflake Cortex. In this example, we’ll use JSON files containing data on phone numbers. However, you can use any document format.

The app will generate results related to the data in the given documents. The best part is it doesn’t just search and retrieve information from documents. Instead, the app uses LLM capabilities and generates human-like responses to queries using the document data.

To create a search app for your documents, you first need a warehouse to provide compute and a database to hold the uploaded documents. Then, your RAG applications will utilize this data.

Create and Upload Documents to Snowflake database

Step 1: Log in to your Snowflake account.

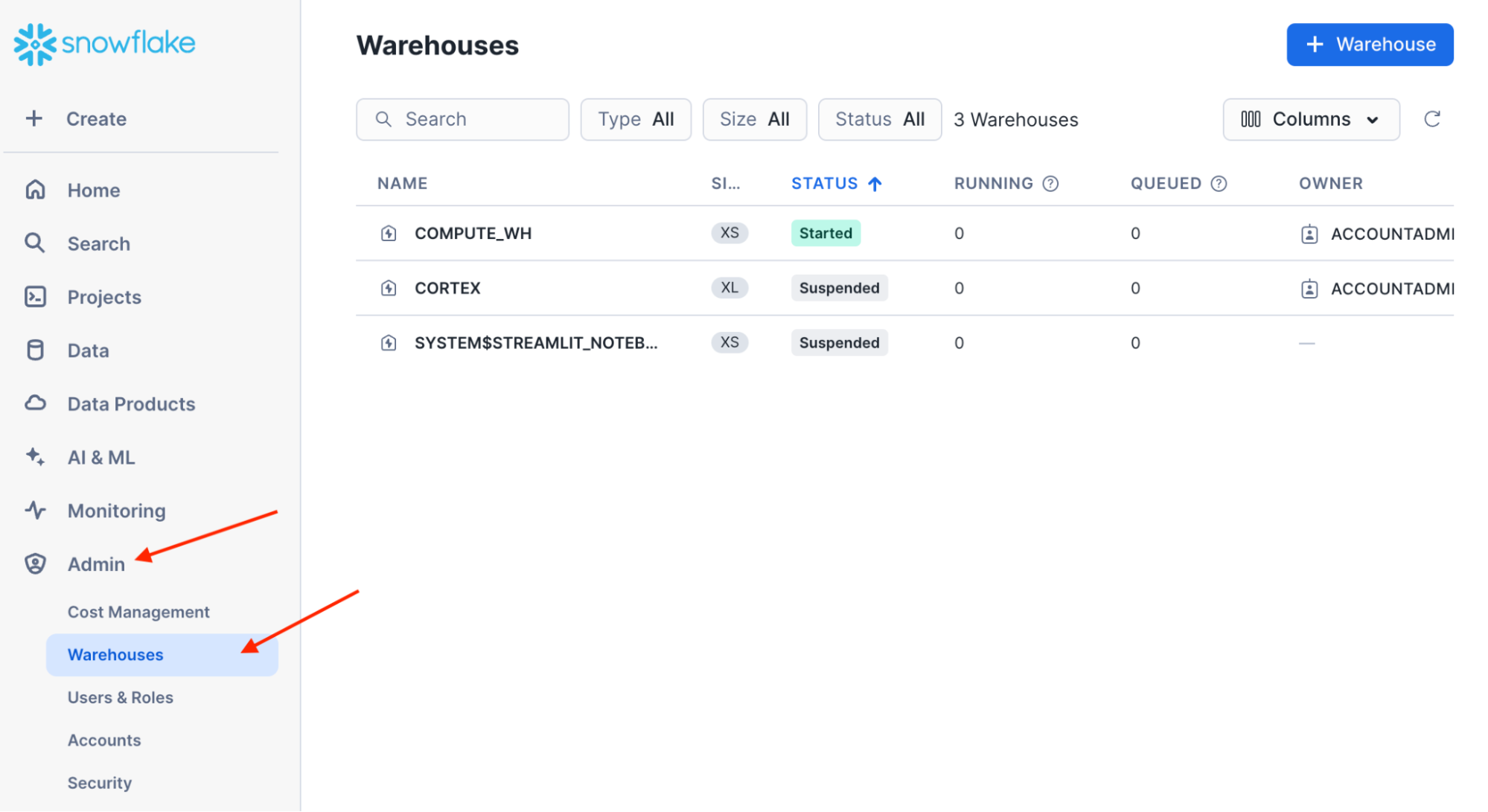

Step 2: Click “Admin” and then select “Warehouses,” as shown in the image.

Step 3: Click the “+Warehouse” button at the top right corner of the screen.

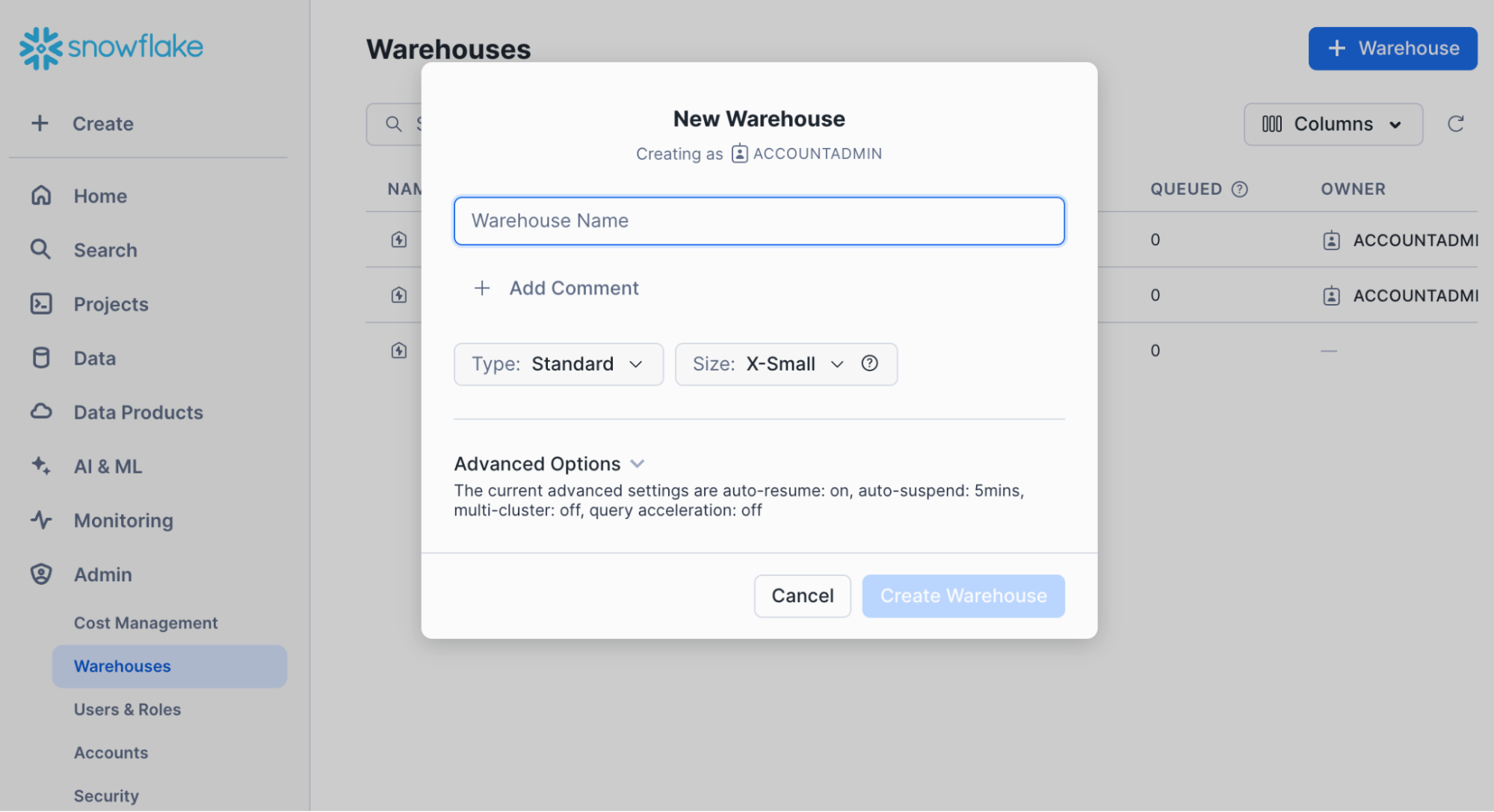

Step 4: Give a name to your Warehouse and click the “Create Warehouse” button.

Step 5: Click “Data” on the left menu bar and select “Databases” from the submenu.

Step 6: Click the “+ Database” button at the top right corner.

Step 7: Give a name to your database and click “Create.”
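If you prefer SQL to the UI, Steps 2 to 7 can be approximated with two statements run in a worksheet. The sketch below only generates the SQL text. The warehouse size and auto-suspend values are assumptions chosen to keep costs low, and `DOCS_DB` is a hypothetical database name.

```python
import re

# Accept only simple unquoted identifiers so names cannot inject extra SQL.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def setup_statements(warehouse: str, database: str) -> list[str]:
    """Build SQL that creates a small warehouse and an empty database."""
    for name in (warehouse, database):
        if not _IDENT.match(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    return [
        # XSMALL and a 60 second auto-suspend are assumed, cost-saving defaults.
        f"CREATE WAREHOUSE IF NOT EXISTS {warehouse} "
        "WITH WAREHOUSE_SIZE = 'XSMALL' AUTO_SUSPEND = 60;",
        f"CREATE DATABASE IF NOT EXISTS {database};",
    ]


for statement in setup_statements("CORTEX_AI", "DOCS_DB"):
    print(statement)
```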

Now that you have created the warehouse and database, follow the steps below to upload your files to the database.

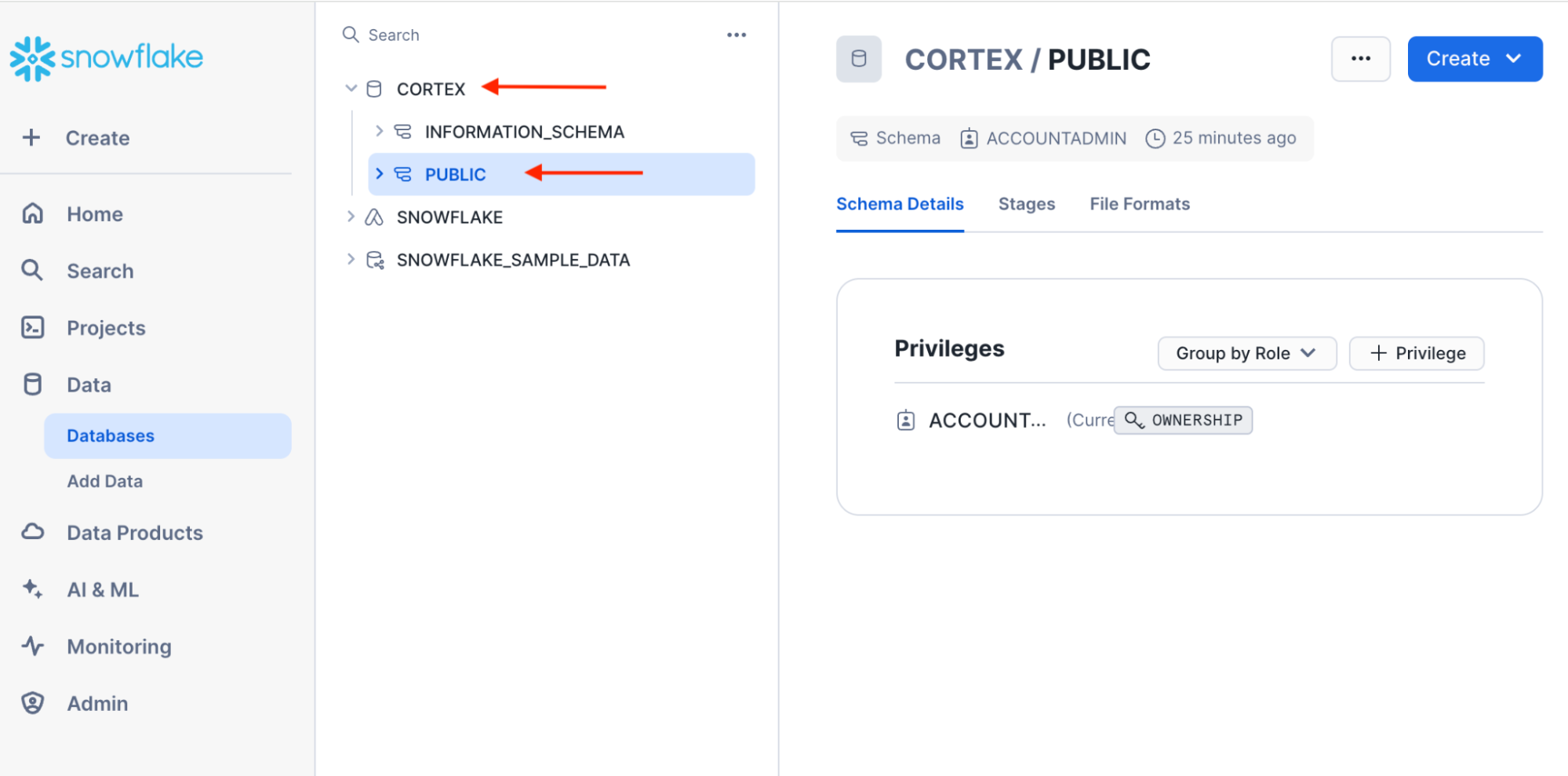

Step 8: Click on the database you just created and select “Public.”

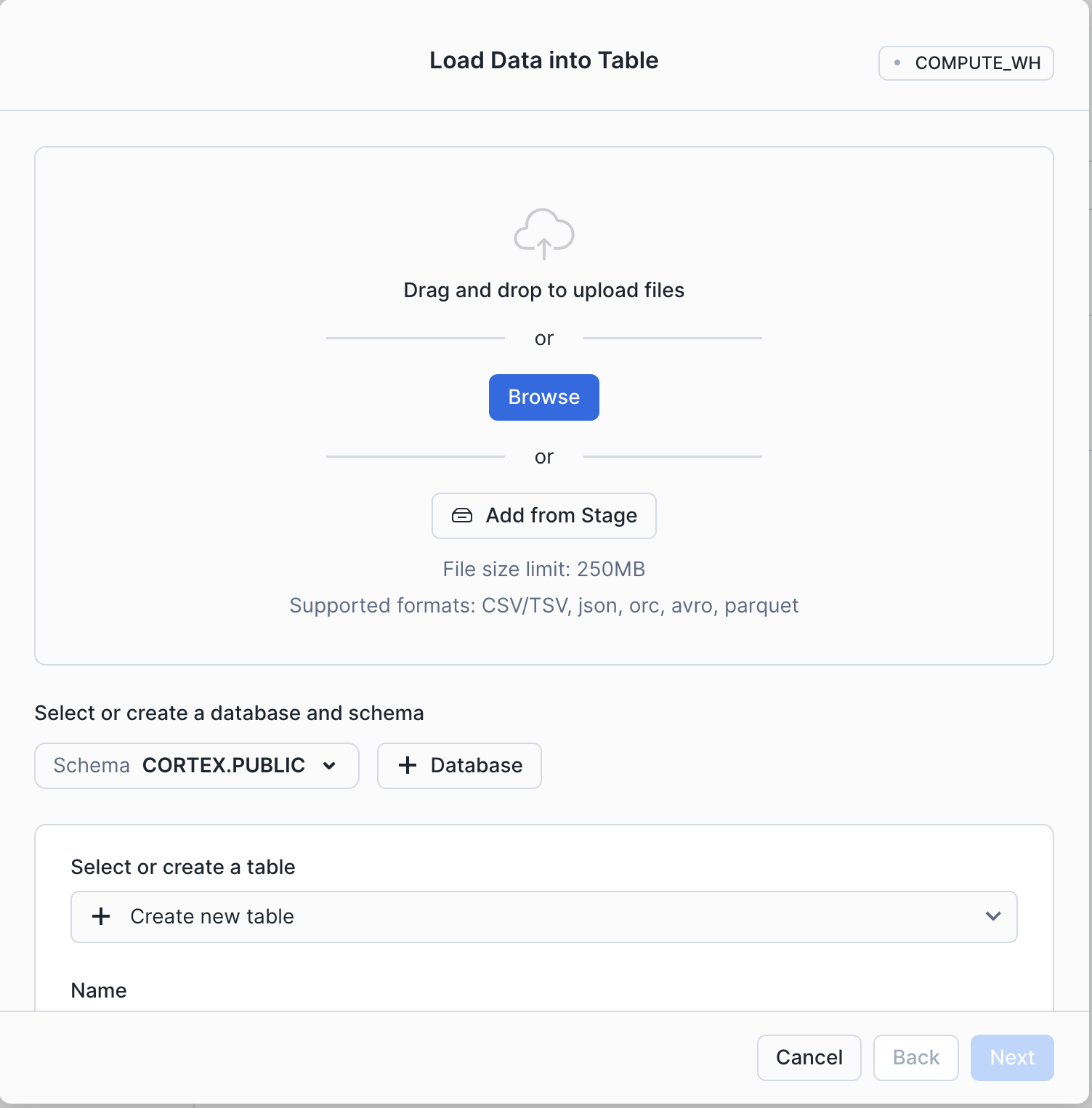

Step 9: Click “Create” at the top right corner, expand “Table”, and select “From File.”

Step 10: Click the “Browse” button and upload your files.
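Before uploading, it can help to confirm that each JSON file parses and to add one text column per record, since a search service indexes a single text column. The sketch below does both with the standard library. The field names and the `SEARCH_TEXT` column are hypothetical, and your files may be shaped differently.

```python
import json


def records_to_rows(raw_json: str) -> list[dict]:
    """Parse a JSON array of objects and add one searchable text column."""
    records = json.loads(raw_json)  # raises ValueError on malformed JSON
    if not isinstance(records, list):
        raise ValueError("expected a JSON array of objects")
    rows = []
    for record in records:
        # Join every field into a single string, e.g. "name: Asha; phone: 555-0100".
        text = "; ".join(f"{key}: {value}" for key, value in record.items())
        rows.append({**record, "SEARCH_TEXT": text})
    return rows


# Hypothetical sample resembling a contact list.
sample = '[{"name": "Asha", "phone": "555-0100"}, {"name": "Ben", "phone": "555-0101"}]'
for row in records_to_rows(sample):
    print(row["SEARCH_TEXT"])
```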

Create a Chatbot using Cortex

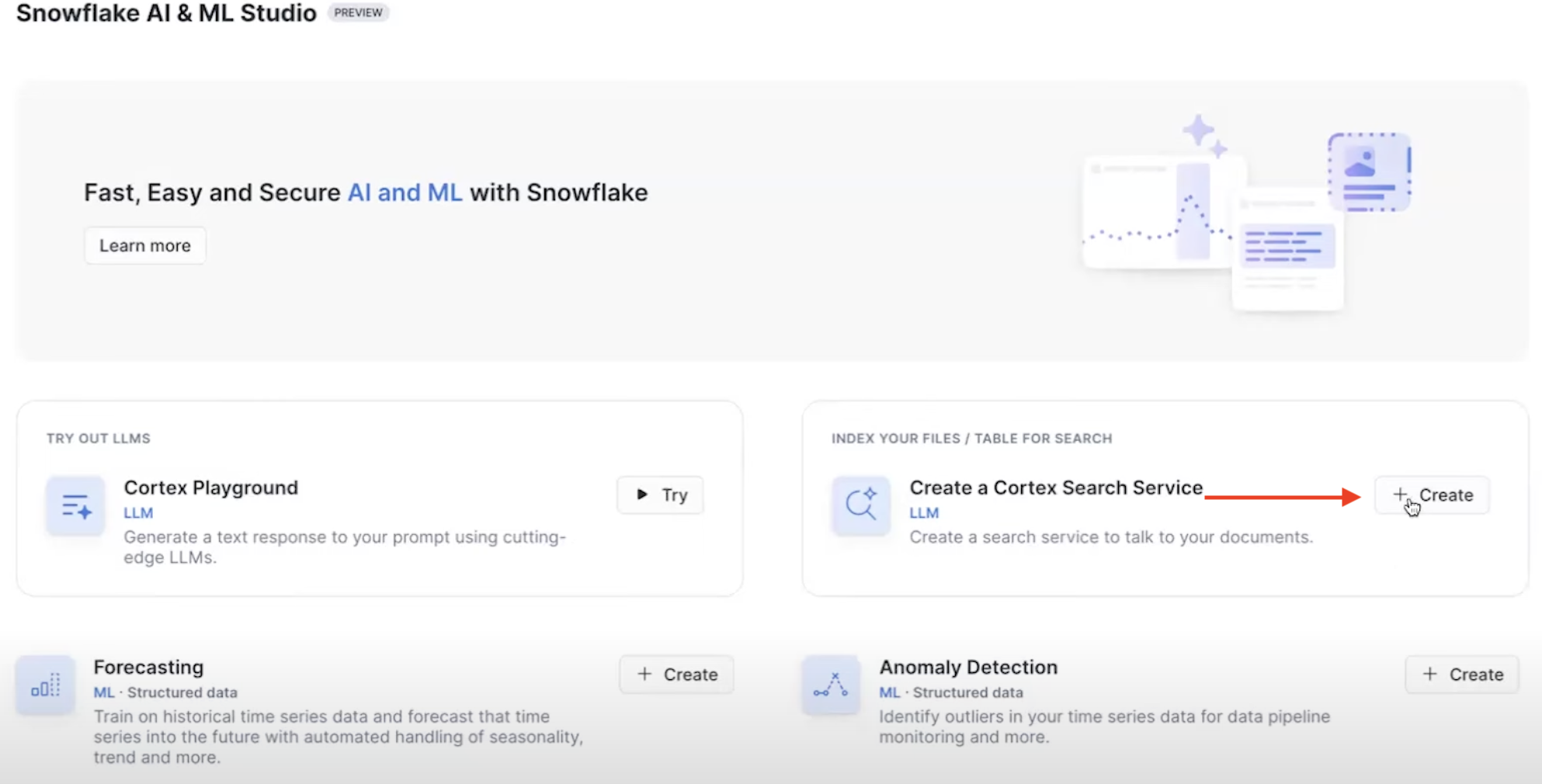

Step 11: Click the “AI & ML” button on the left menu bar and select “Studio” from the submenu.

Step 12: Click the “+” button on the “Create a Cortex Search Service” card, as shown in the image.

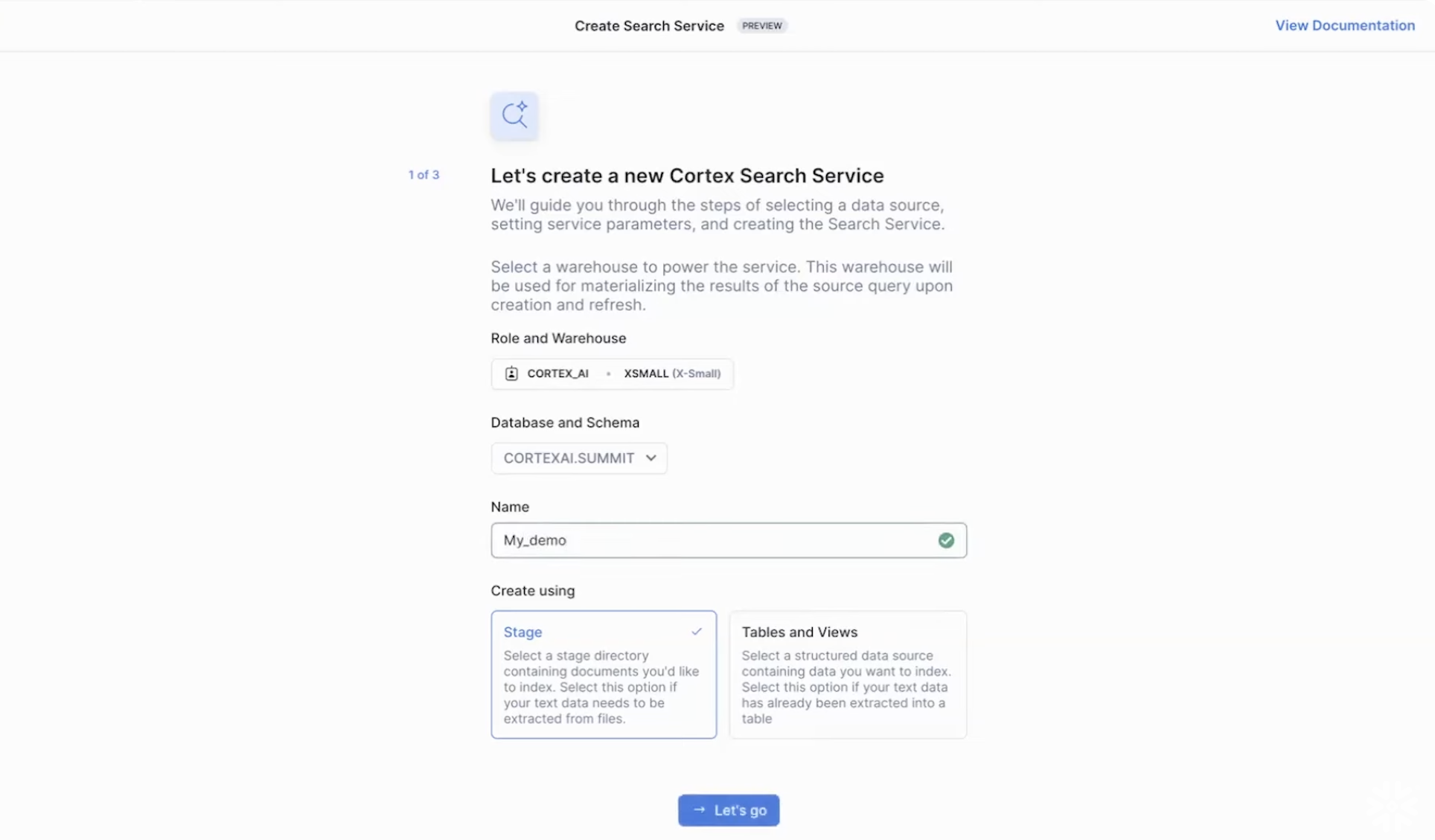

Step 13: In this step, select a role with admin privileges and the warehouse the service should use for compute. For example, if you created a warehouse named Cortex_AI, choose that from the dropdown. Name your Cortex app and click “Let’s go.”

Step 14: Keep the target lag default at 1 hour and click “Create Search Service.”
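The same service can also be defined in SQL with `CREATE CORTEX SEARCH SERVICE`, where the target lag controls how far the search index may fall behind the source table. The sketch below builds such a statement with the 1 hour default from Step 14. The service, column, and table names are hypothetical, and the statement is a minimal form, so compare it with the Snowflake reference before running it.

```python
def search_service_sql(
    service: str,
    search_column: str,
    warehouse: str,
    source_table: str,
    target_lag: str = "1 hour",
) -> str:
    """Build a minimal CREATE CORTEX SEARCH SERVICE statement.

    Names are interpolated as-is, so pass only trusted identifiers.
    """
    return (
        f"CREATE OR REPLACE CORTEX SEARCH SERVICE {service}\n"
        f"  ON {search_column}\n"
        f"  WAREHOUSE = {warehouse}\n"
        f"  TARGET_LAG = '{target_lag}'\n"
        f"  AS (SELECT {search_column} FROM {source_table});"
    )


# Hypothetical names; Cortex_AI is the example warehouse from Step 13.
print(search_service_sql("SUPPORT_SEARCH", "SEARCH_TEXT", "CORTEX_AI", "DOCS_DB.PUBLIC.CONTACTS"))
```

A shorter target lag keeps answers fresher at the cost of more frequent refresh work on the warehouse, so 1 hour is a sensible default for documents that change rarely.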

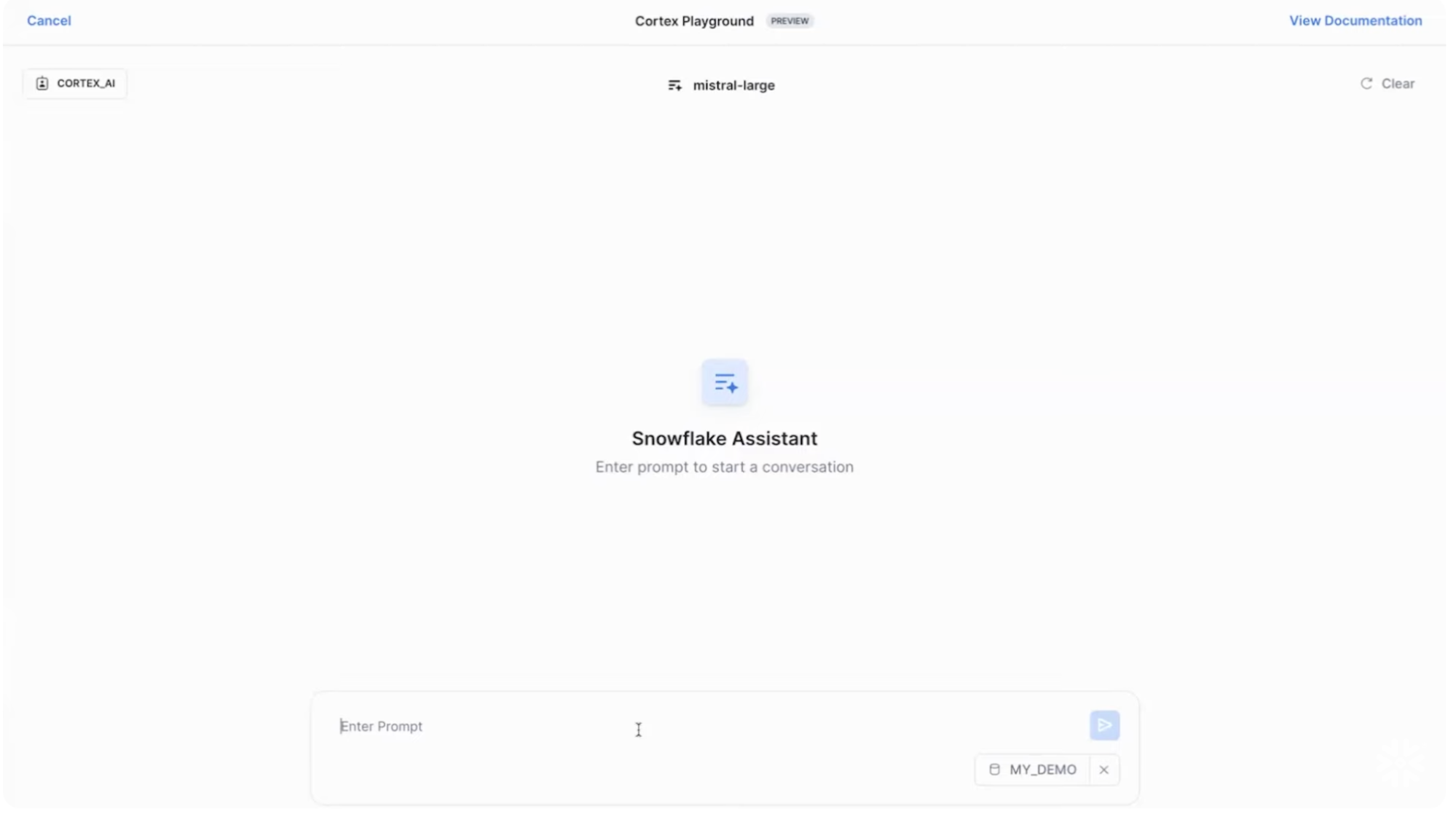

Step 15: You will see the “Cortex search service successfully created” message on the screen. Click “Open LLM Playground.”

Step 16: You’ll now see a chatbot interface to interact with. Ask anything related to the documents in your database, and the chatbot provides accurate and human-like responses.
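Outside the Playground, a search service can also be spot-checked from SQL with the `SNOWFLAKE.CORTEX.SEARCH_PREVIEW` function, which takes the service name and a JSON string describing the query. The sketch below builds that call. The service name, column name, and question are hypothetical, and in practice the service name usually needs to be fully qualified with its database and schema.

```python
import json


def search_preview_sql(service: str, question: str, columns: list[str], limit: int = 3) -> str:
    """Build a SELECT that calls SNOWFLAKE.CORTEX.SEARCH_PREVIEW with a JSON query."""
    payload = json.dumps({"query": question, "columns": columns, "limit": limit})
    # Escape backslashes and single quotes so the JSON survives as a SQL literal.
    escaped = payload.replace("\\", "\\\\").replace("'", "''")
    return f"SELECT SNOWFLAKE.CORTEX.SEARCH_PREVIEW('{service}', '{escaped}') AS results;"


# Hypothetical service and column names, for illustration only.
print(search_preview_sql("support_search", "office number", ["SEARCH_TEXT"]))
```

The rows returned by such a query are the retrieved context. Passing them to an LLM together with the user's question completes the RAG loop that the Playground runs for you.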

Conclusion

Cortex simplifies creating RAG applications tailored to your business use cases. As part of Snowflake, which complies with industry-standard security protocols, Cortex ensures your data is safe.

In this blog, we’ve created a RAG application grounded in our custom data. We’ve also shown how simple it is to build one with Snowflake Cortex, with very little technical work. Additionally, Hevo Data can enhance your experience by seamlessly integrating and transforming your data, ensuring you have the clean, consistent information needed to power your RAG applications effectively. Sign up for a 14-day free trial and experience the feature-rich Hevo suite firsthand.

Frequently Asked Questions

1. Can you build applications on Snowflake?

Yes, you can build applications on Snowflake. Snowflake provides a cloud-based data platform that allows developers to build data-driven applications using SQL, C#, Go and Python.

2. How to create a RAG LLM?

To create a RAG (Retrieval-Augmented Generation) application, you combine a retrieval component that fetches relevant documents from a knowledge base with a generation model that uses these documents to produce responses. This typically involves pairing a pre-trained language model with an efficient retrieval mechanism; fine-tuning the model is optional rather than required.

3. What is the use case of Snowflake Cortex?

Snowflake Cortex is used to build AI applications, such as chatbots, document search, and document extraction, on data that already lives in Snowflake, without managing any model infrastructure.