As a Kafka Developer, you may have faced challenges deploying Kafka, particularly across a hybrid cloud. For this reason, many streaming data customers choose a managed Kafka service, which offloads infrastructure and system administration to a service provider. This model, known as “Kafka as a Service”, is a Cloud-based, provider-managed version of Apache Kafka.

In this article, you will learn about Kafka as a service in detail, along with its benefits.

What is Apache Kafka?

Apache Kafka is a distributed Event Streaming platform that allows applications to manage large amounts of data quickly. Its fault-tolerant, highly scalable design can handle billions of events with ease. The Apache Kafka framework is a Java and Scala-based distributed Publish-Subscribe Messaging system that receives Data Streams from several sources.

Kafka’s capacity to handle Big Data input volumes is a distinct and powerful benefit. It can scale up and down quickly with minimal downtime. Its data replication and fault tolerance have helped make Kafka more popular than many other Data Streaming systems.

Key Features of Apache Kafka

Apache Kafka has become popular because of features such as high uptime, simple scaling, and the ability to handle large volumes of data. Let’s have a look at some of its most useful features:

- High Scalability: Kafka’s partitioned log model distributes data over several servers, allowing it to scale beyond a single server’s capability.

- Low Latency: Because Kafka decouples data streams, so that producers and consumers work independently of each other, it offers very low latency and high throughput.

- Fault-Tolerant & Durable: Partitions are distributed and replicated across several servers, and data is written to disk. This makes data fault-tolerant and durable by protecting it against server failure. The Kafka cluster can withstand the failure of individual brokers and recover once they restart.

- High Extensibility: Many other applications provide connectors for Kafka, which makes it quick to add new integrations. Check out how you can integrate Kafka with Redshift and Salesforce.

- Metrics and Monitoring: Kafka is a popular solution for tracking operational data. This involves collecting data from a variety of apps and combining it into consolidated feeds for analytics. To read more about how you can analyze your data in Kafka, refer to Real-time Reporting with Kafka Analytics.
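To make the partitioned log and replication ideas above more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not Kafka's implementation: Kafka's default partitioner hashes keys with murmur2 and the cluster itself decides replica placement, whereas this sketch uses `zlib.crc32` and a simple round-robin placement. The broker names, key, and all function names are hypothetical.

```python
import zlib


def partition_for(key, num_partitions):
    """Map a record key to a partition with a stable hash.

    Assumption: CRC32 stands in for Kafka's murmur2-based partitioner.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def assign_replicas(num_partitions, brokers, replication_factor):
    """Place each partition's replicas on consecutive brokers, round-robin."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed broker count")
    return {
        p: [brokers[(p + i) % len(brokers)] for i in range(replication_factor)]
        for p in range(num_partitions)
    }


def available_partitions(assignment, failed_brokers):
    """Return partitions that still have at least one replica on a live broker."""
    return [
        p
        for p, replicas in assignment.items()
        if any(b not in failed_brokers for b in replicas)
    ]


brokers = ["broker-1", "broker-2", "broker-3"]  # hypothetical broker names
assignment = assign_replicas(num_partitions=6, brokers=brokers, replication_factor=2)

# The same key always lands on the same partition, which preserves per-key ordering.
print(partition_for("order-42", 6) == partition_for("order-42", 6))  # True

# With two replicas per partition, losing one broker leaves every partition readable.
print(available_partitions(assignment, failed_brokers={"broker-2"}))  # [0, 1, 2, 3, 4, 5]
```

Spreading partitions over brokers is what lets the log grow beyond one server, and keeping more than one replica of each partition is what lets the data survive a broker failure.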

A fully managed No-code Data Pipeline platform like Hevo Data helps you integrate and load data from 150+ different Data sources (including 60+ free sources) such as Kafka to a Data Warehouse or Destination of your choice in real-time effortlessly.

Its features include:

- Transformations: A simple Python-based drag-and-drop data transformation technique that allows you to transform your data for analysis.

- Schema Management: Hevo eliminates the tedious task of schema management. It automatically detects the schema of incoming data and maps it to the destination schema.

- Real-Time Data Transfer: Hevo provides real-time data migration, so you can always have analysis-ready data.

- 24/5 Live Support: The Hevo team is available 24/5 to provide exceptional support through chat, email, and support calls.

Try Hevo today to experience seamless data transformation and migration.

Why is Kafka as a Service Important?

Despite its many powerful features and benefits, Kafka is difficult to deploy at scale. In production, on-premises Kafka clusters are difficult to set up, expand, and maintain. For example, to run Kafka on-premises you must provision machines and configure Kafka on them. You must also design the cluster of distributed servers for availability, maintain data storage and security, set up monitoring, and scale capacity to handle load variations. Then, you have to keep that infrastructure running by replacing systems when they fail and by patching and upgrading it regularly.

Because of these challenges, many Apache Kafka customers shift to a Managed Cloud Service, in which infrastructure and system maintenance are delegated to a third party. Enterprises can benefit from Kafka as a service immediately, with deployments that span On-Premises, Cloud, and Hybrid architectures. You can still leverage Apache Kafka’s large ecosystem of tools and connectors. It also offers exceptional scale, robustness, and performance when combined with Kafka Connect, ksqlDB, and the Kafka Streams API.

Differences between Managed Services & Hosted Solutions in Apache Kafka

The quickly changing IT landscape, along with the complicated and ever-increasing expectations of clients, has resulted in a wide range of hosting alternatives. There are several options available, ranging from simple Hosting Solutions and Managed Services to Cloud Services and Hybrid Solutions. In this section, you will understand a few differences between Hosted Solutions and Managed Services to help you select the best solution for your Kafka deployment.

| Feature | Hosted Solutions | Managed Services |
| --- | --- | --- |
| User Responsibility | User manages Apache Kafka like an on-premises setup. | Service provider manages Apache Kafka, reducing user responsibility. |
| Storage Provisioning | Users must estimate and allocate storage per broker, often leading to over-provisioning. | No need for the user to provision storage; the provider handles infrastructure scaling. |
| Cost Efficiency | Users may over-provision, resulting in paying for unused resources. | Optimized resource management by the provider, avoiding over-provisioning. |
| Software Monitoring & Management | User is responsible for software updates, monitoring, and troubleshooting. | Managed by the service provider, including system updates, backups, and troubleshooting. |
| Infrastructure Control | The user controls infrastructure choices (e.g., hardware, network configurations). | The provider controls the underlying infrastructure, so the user makes fewer infrastructure decisions. |
| Technical Expertise Required | Requires an in-house team with Kafka expertise in management and maintenance. | Minimal expertise is required as the provider handles Kafka and infrastructure management. |
| Complexity | High complexity due to manual management of Kafka and event streaming applications. | Reduced complexity with the provider offering end-to-end Kafka management and monitoring. |
| Data Backups & System Admin | Managed by the user. | Handled by the provider, offering more robust backup options and system administration services. |
| Flexibility | Allows more control over Kafka deployment but at the cost of added complexity and responsibility. | Offers convenience and scalability, with less control over specific infrastructure decisions. |
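The storage provisioning row is easier to appreciate with numbers. The following is a rough back-of-the-envelope sketch of the per-broker estimate a hosted (self-managed) user would have to make. The formula is a simplification that ignores compression, index files, and uneven partition distribution; the function name, the 30% headroom default, and the sample workload figures are assumptions for illustration only.

```python
def storage_per_broker_gb(ingest_mb_per_sec, retention_hours,
                          replication_factor, broker_count, headroom=0.3):
    """Estimate the disk needed on each broker, in GB.

    Simplified model: retained data = ingest rate x retention window,
    multiplied by the replication factor, spread evenly over brokers,
    plus a safety margin (headroom) for growth and traffic spikes.
    """
    retained_mb = ingest_mb_per_sec * 3600 * retention_hours
    total_mb = retained_mb * replication_factor
    per_broker_mb = total_mb / broker_count
    return per_broker_mb * (1 + headroom) / 1024


# Hypothetical workload: 5 MB/s ingest, 7 days of retention,
# replication factor 3, spread over 3 brokers.
estimate = storage_per_broker_gb(
    ingest_mb_per_sec=5, retention_hours=168,
    replication_factor=3, broker_count=3,
)
print(round(estimate))  # 3839
```

Because the headroom is a guess about future load, it is usually padded generously, which is where the over-provisioning mentioned in the table tends to come from. With a managed service, this estimate becomes the provider's problem.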

Top Features & Benefits of Kafka as a Service on Confluent Cloud

This section covers the main features offered by Kafka as a Service with Confluent Cloud:

- All-in-One Event Streaming Applications

- Interoperability

- Support by Kafka Professionals

- No Vendor Lock-In

1) All-in-One Event Streaming Applications

The Kafka Managed Service provides a one-stop shop for Event Streaming applications.

- It includes all of the tools required to create Event Streaming applications in one place. Client APIs, Kafka Connect, Schema Registry, Kafka Streams, and many more features are included in Kafka as a Service.

- Confluent Cloud provides ready-to-use connectors, eliminating the need for Developers to create and manage their own.

- The integrations offered include Postgres, MySQL, Oracle, cloud storage (GCP Cloud Storage, Azure Blob Storage, and AWS S3), cloud functions (AWS Lambda, Google Cloud Functions, and Azure Functions), Snowflake, Elasticsearch, and others.

- More significantly, Confluent Cloud offers Kafka Connect as a Service, eliminating the requirement for users to manage their own Kafka Connect clusters.

2) Interoperability

Interoperability here means full support for Apache Kafka’s features and for the popular tools and frameworks that Developers use with it.

- Confluent Cloud offers full-fledged Kafka clusters, allowing client applications to take advantage of all of Kafka’s and Confluent’s capabilities.

- Furthermore, the code written by Developers is the same code used for Kafka clusters running On-Premises; thus, no new tools or frameworks are required.
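As a rough illustration of the point above, the sketch below builds client settings for a self-managed cluster and for a managed cluster and shows that the application logic does not change between them. The keys (`bootstrap.servers`, `security.protocol`, `sasl.mechanisms`, `sasl.username`, `sasl.password`) are standard librdkafka-style client settings, and SASL_SSL with the PLAIN mechanism is the usual way to authenticate with an API key and secret. The hostnames, credentials, topic name, and function names are placeholders invented for this example.

```python
import json


def on_prem_config(bootstrap_servers):
    """Client settings for a self-managed cluster on a trusted network."""
    return {"bootstrap.servers": bootstrap_servers}


def managed_config(bootstrap_servers, api_key, api_secret):
    """Client settings for a managed cluster reached over TLS with an API key."""
    return {
        "bootstrap.servers": bootstrap_servers,
        "security.protocol": "SASL_SSL",
        "sasl.mechanisms": "PLAIN",
        "sasl.username": api_key,
        "sasl.password": api_secret,
    }


def build_order_event(order_id, amount):
    """Application logic: the same wherever the cluster runs."""
    return {
        "topic": "orders",  # hypothetical topic name
        "key": order_id,
        "value": json.dumps({"order_id": order_id, "amount": amount}),
    }


# Placeholder endpoints and credentials, for illustration only.
local = on_prem_config("kafka-1.internal.example:9092")
cloud = managed_config("broker.cloud.example:9092", "<API_KEY>", "<API_SECRET>")

# Only connection and security settings differ between the two deployments.
print(sorted(set(cloud) - set(local)))
print(build_order_event("order-42", 19.99))
```

Either dictionary could then be handed to a Kafka client, for example `confluent_kafka.Producer(config)` if you use the confluent-kafka Python package, without touching the code that builds and sends events.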

3) Support by Kafka Professionals

Using a Managed Service backed by Kafka specialists also means getting better support.

- You can submit support requests for difficulties and ask sophisticated questions regarding the fundamental technology and how it might be tweaked to improve application performance.

- Experts in Apache Kafka are better equipped to deliver precise and effective responses in a timely manner.

- Confluent is a leading provider of Apache Kafka support. It supports programming languages such as Java, C/C++, Go, .NET, Python, and Scala with native clients.

- Confluent, which has an engineering staff devoted to this, develops and supports all of these clients.

4) No Vendor Lock-In

Users nowadays pay much closer attention to whether a Managed Service lets them switch from one Cloud provider to another. As a result, Managed Apache Kafka services must accommodate a variety of Cloud providers.

- Using Managed Services that handle Apache Kafka in the same way across multiple Cloud providers helps you avoid being locked in to a single vendor.

- To do this, these Managed Services must support numerous Cloud providers while also ensuring that the Developer experience is consistent across all of them.

Popular Options Offered by Apache Kafka as a Service with Confluent Cloud

Using a Kafka as a Service provider is one way to ensure a professionally built and maintained Kafka deployment. Any managed Kafka service should include round-the-clock monitoring, preemptive maintenance, and a strong uptime guarantee. Confluent Cloud is built by Confluent, the company founded by the original creators of Apache Kafka. It’s completely managed for maximum deployment simplicity. Confluent Cloud is designed to enable enterprise-scale, mission-critical applications by being scalable, robust, and secure.

Let’s discover some of the popular Kafka as a Service options on the major Cloud providers, including Amazon’s own MSK and Confluent Cloud on GCP and Azure:

- Kafka as a Service with AWS Managed Streaming for Kafka (MSK)

- Kafka as a Service with Google Cloud Platform (GCP)

- Kafka as a Service with Microsoft Azure

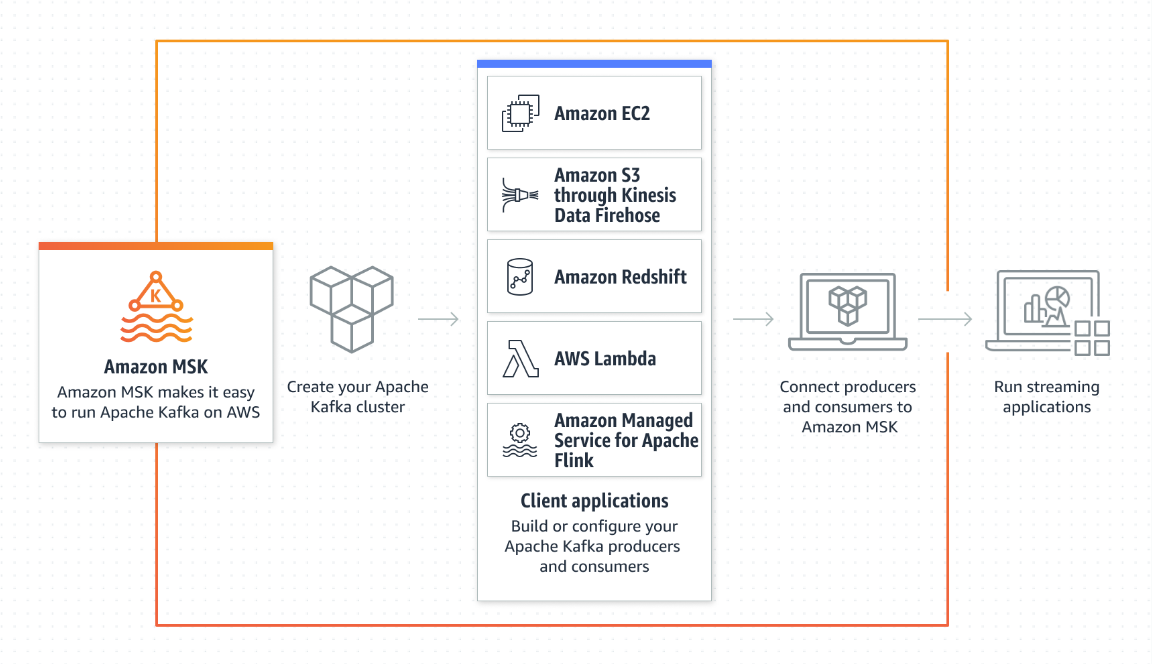

1) Kafka as a Service with AWS Managed Streaming for Kafka (MSK)

Amazon offers Managed Streaming for Apache Kafka (MSK) as a Kafka service for its AWS customers. AWS Developers can deploy Kafka on AWS and start building streaming pipelines with technologies like Spark Streaming in just a few minutes.

- They don’t have to worry about maintaining Kafka brokers, ZooKeeper, or anything else, so they can focus on developing streaming pipelines. Streaming development becomes a lot easier, and turnaround time is reduced.

- Pricing is somewhat convoluted, as with much of AWS, but a basic Kafka instance starts at $0.21 per hour.

2) Kafka as a Service with Google Cloud Platform (GCP)

Confluent Cloud on Google Cloud offers fully managed Apache Kafka as a Service, allowing you to focus on developing applications rather than cluster management.

Users may combine the premier Kafka service with GCP services to support a variety of use cases with the new Confluent Cloud on GCP. You can do the following:

- Analyze data in real-time and at a vast scale. You can stream data to BigQuery, Cloud Machine Learning Engine, and TensorFlow, which are all part of Google Cloud’s big data offerings.

- Create applications that are triggered by events. You can combine Google Cloud Functions, App Engine, and Kubernetes with Confluent Cloud pub/sub messaging services.

- Provide a robust connection to the Cloud. You can accelerate multi-Cloud adoption by creating a real-time data conduit between data centers and Google Cloud.

3) Kafka as a Service with Microsoft Azure

Confluent Cloud on Microsoft Azure allows Developers to build Event Streaming applications with Apache Kafka using Azure as the public cloud.

- Using Confluent Cloud on Azure, Developers can concentrate on designing applications rather than managing infrastructure.

- You can also integrate with Azure SQL Data Warehouse, Azure Data Lake, Azure Blob Storage, Azure Functions, and other Azure services using prebuilt Confluent connectors.

- Developers can use their existing billing service on Azure to get started with Confluent Cloud. With consumption-based pricing, you pay only for what you stream.

Managed Apache Kafka vs DIY: Pros & Cons

Following an IT audit, a company should have a better idea of the budget, time, and resources required to implement usable event streaming infrastructure and analytics. With this information in hand, the next step is to weigh the benefits and drawbacks of the various event-streaming implementation options.

Managed Cloud solutions are ideal for organizations with smaller IT teams that lack the time and resources to deploy and maintain custom event streaming infrastructure. They can instead pay a monthly subscription to have experts deploy, scale, log, and monitor their infrastructure. DIY approaches, on the other hand, can take months or even years to implement but provide greater configuration flexibility.

A) Pros

| Feature | Managed Cloud | DIY Apache Kafka |
| --- | --- | --- |
| Adoption Speed | Faster adoption of Apache Kafka for SMBs, enabling quicker implementation. | Customizable for organizations that can manage it on platforms like VMware, OpenStack, and Kubernetes. |
| Cost Efficiency | More affordable for organizations that do not want to manage in-house 24/7 data center teams. | Suitable for organizations with sufficient IT resources, especially with high-volume needs. |
| Best Practices & Security | Provides industry best practices and enterprise-grade security. | Allows for custom AI/ML processing with tools like Apache Storm, Spark, Flink, and Beam. |
| Persistent Storage & Real-Time Analytics | Offers persistent storage and real-time analytics as part of the managed service. | Supports embedded real-time analytics for high-performance web/mobile apps and IoT networks. |
| Custom Solutions | No need for custom infrastructure; focuses on using Kafka APIs efficiently. | Allows for building highly custom infrastructure for specific performance requirements. |

B) Drawbacks

| Feature | Managed Cloud | DIY Apache Kafka |
| --- | --- | --- |
| Recurring Costs | Ongoing costs may outweigh benefits for teams with the ability to manage infrastructure in-house. | Risk of increased costs and delays due to scope creep and complexity of implementation. |
| Use Case Limitations | Less ideal for use cases with extremely high event volumes or specialized performance needs. | Requires dedicated resources for deployment, scaling, logging, and monitoring. |
| Complexity | Simplified architecture management; provider handles infrastructure. | Higher complexity, requiring a skilled IT team for effective management and maintenance. |
| Scalability | Provider manages scalability, reducing user involvement. | Custom-built, requiring the IT team to manage and scale according to organizational needs. |
| Infrastructure Control | Less control over infrastructure but reduced overhead for management. | Full control over infrastructure, but at the cost of increased responsibility and resources. |

Conclusion

This article provided a holistic overview of Kafka as a Service. You learned more about Managed Services and how they differ from Hosted Solutions. In addition, you understood the need for Kafka as a service and explored its key features and benefits. Finally, you looked at the popular options for Apache Kafka as a Service, including Confluent Cloud.

However, extracting complex data from Apache Kafka can be quite challenging and cumbersome. If you are facing these challenges and are looking for some solutions, then check out a simpler alternative like Hevo.

Simplify your data analysis with Hevo today and sign up for a 14-day free trial now. Share your experience working with Kafka as a service with us in the comments section below!

FAQs

1. Is Confluent Kafka SaaS or PaaS?

Confluent Kafka is primarily offered as SaaS (Software as a Service), providing fully managed Kafka services in the cloud.

2. Why use Confluent instead of Kafka?

Confluent offers additional tools like monitoring, security, and connectors, making Kafka easier to manage and scale without requiring users to handle the complexities of Kafka infrastructure.

3. What type of software is Kafka?

Kafka is an open-source distributed event streaming platform used to build real-time data pipelines and streaming applications.