Are you struggling to load data into BigQuery? Are you confused about which method is best for loading data into BigQuery? If yes, this blog will answer your questions. In this article, you will learn how to load data into BigQuery and explore different ways to upload various file types, including CSV and JSON files.

You will also learn how to load data through the API and from other Google services. If you need to analyze terabytes of data in a few seconds, Google BigQuery is a cost-effective option.


What is Google BigQuery?

Google BigQuery is a serverless, highly scalable, cost-effective multi-cloud data warehouse designed for business agility.

Here are a few features of Google BigQuery:

- BigQuery lets you analyze petabytes of data quickly with zero operational overhead.

- There is no need to deploy clusters or virtual machines, set keys or indexes, or install software.

- You can stream millions of rows per second for real-time analysis.

- A single query can use thousands of cores.

- Storage and compute are separated, so each can scale independently.


Say goodbye to the hassle of writing complex ETL scripts! With Hevo’s no-code platform, you can seamlessly load data into BigQuery in real time, without any manual effort. With Hevo:

- Ingest data from 150+ sources, including databases, SaaS applications, and cloud storage.

- Automate schema mapping and transformation—no manual intervention needed.

- Ensure data accuracy with built-in monitoring and alerts.

Join 2000+ data professionals from companies like Postman and ThoughtSpot who trust Hevo for effortless data integration. Rated 4.4/5 on G2! Try Hevo today!

Types of Data Load in BigQuery

The following types of data loads are supported in Google BigQuery:

- You can load data from Cloud Storage or from a local file. Supported formats include Avro, CSV, newline-delimited JSON, ORC, and Parquet.

- Data exports from Firestore and Datastore can be loaded into Google BigQuery.

- You can load data from other Google services, such as Google Ad Manager and Google Analytics.

- You can stream individual records into BigQuery in real time using streaming inserts.

- You can also use Data Manipulation Language (DML) statements, such as INSERT, to add data in bulk.
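As a rough illustration of streaming inserts, the Python sketch below sends rows in batches with the `google-cloud-bigquery` client library's `Client.insert_rows_json` method. The table ID is up to you, and the batch size of 500 rows is an assumption (a commonly cited per-request guideline), not a hard limit; tune it for your row size. The library import sits inside the function so the pure batching helper can be used without it.

```python
from typing import Iterator


def chunked(rows: list[dict], size: int) -> Iterator[list[dict]]:
    """Yield consecutive slices of at most `size` rows."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def stream_rows(table_id: str, rows: list[dict], batch_size: int = 500) -> list:
    """Stream rows with the legacy streaming API and return any per-row errors."""
    # Imported lazily so chunked() works without the library installed
    # (pip install google-cloud-bigquery).
    from google.cloud import bigquery

    client = bigquery.Client()  # uses Application Default Credentials
    errors = []
    for batch in chunked(rows, batch_size):
        # insert_rows_json returns a list of error entries; empty means success.
        errors.extend(client.insert_rows_json(table_id, batch))
    return errors
```

Each entry in the returned list describes a row that failed, so an empty list means every batch was accepted.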

Loading data directly from Google Drive is not supported, but you can query data stored in Drive by defining an external table.

Data Ingestion Format

Choosing the right data ingestion format is essential for loading data successfully. The following factors play a key role in that choice:

- Schema Support: BigQuery can create a table schema automatically from the source data. Avro, ORC, and Parquet are self-describing formats, so BigQuery reads the schema from the files themselves. For JSON and CSV, you can either let BigQuery auto-detect the schema or provide an explicit schema.

- Flat Data, Nested, and Repeated Fields: Nested and repeated fields help express hierarchical data. Avro, JSON, ORC, Parquet, and Firestore exports support nested and repeated fields; CSV does not, so it can only represent flat data.

- Embedded Newlines: When data is loaded from JSON files, the rows must be newline-delimited. BigQuery expects newline-delimited JSON files to contain a single record per line.

- Encoding: BigQuery supports UTF-8 encoding for nested, repeated, and flat data. CSV files also support ISO-8859-1 encoding for flat data.
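The newline and encoding requirements are easy to meet before uploading. Here is a minimal Python sketch, using only the standard library, that converts a JSON array (a common export shape) into newline-delimited JSON and re-encodes ISO-8859-1 bytes as UTF-8. The function names are our own and purely illustrative.

```python
import json


def json_array_to_ndjson(array_text: str) -> str:
    """Convert a JSON array of objects into newline-delimited JSON (one record per line)."""
    records = json.loads(array_text)
    if not isinstance(records, list):
        raise ValueError("expected a top-level JSON array")
    lines = []
    for record in records:
        if not isinstance(record, dict):
            raise ValueError("every array element must be a JSON object")
        # json.dumps escapes newlines inside string values, so each record
        # occupies exactly one line. Nested objects and arrays are kept as-is.
        lines.append(json.dumps(record, ensure_ascii=False))
    return "".join(line + "\n" for line in lines)


def latin1_to_utf8(data: bytes) -> bytes:
    """Re-encode ISO-8859-1 (Latin-1) bytes as UTF-8."""
    return data.decode("iso-8859-1").encode("utf-8")


if __name__ == "__main__":
    exported = '[{"id": 1, "tags": ["a", "b"]}, {"id": 2, "note": "line 1\\nline 2", "tags": []}]'
    print(json_array_to_ndjson(exported), end="")
```

Write the result with `open(path, "w", encoding="utf-8")` so the file BigQuery receives is UTF-8.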

Methods To Load Data into BigQuery

Method 1: Loading Data Into BigQuery Using An Automated Data Pipeline

Method 2: Loading Data Into BigQuery Manually

Method 1: Loading Data Into BigQuery Using An Automated Data Pipeline

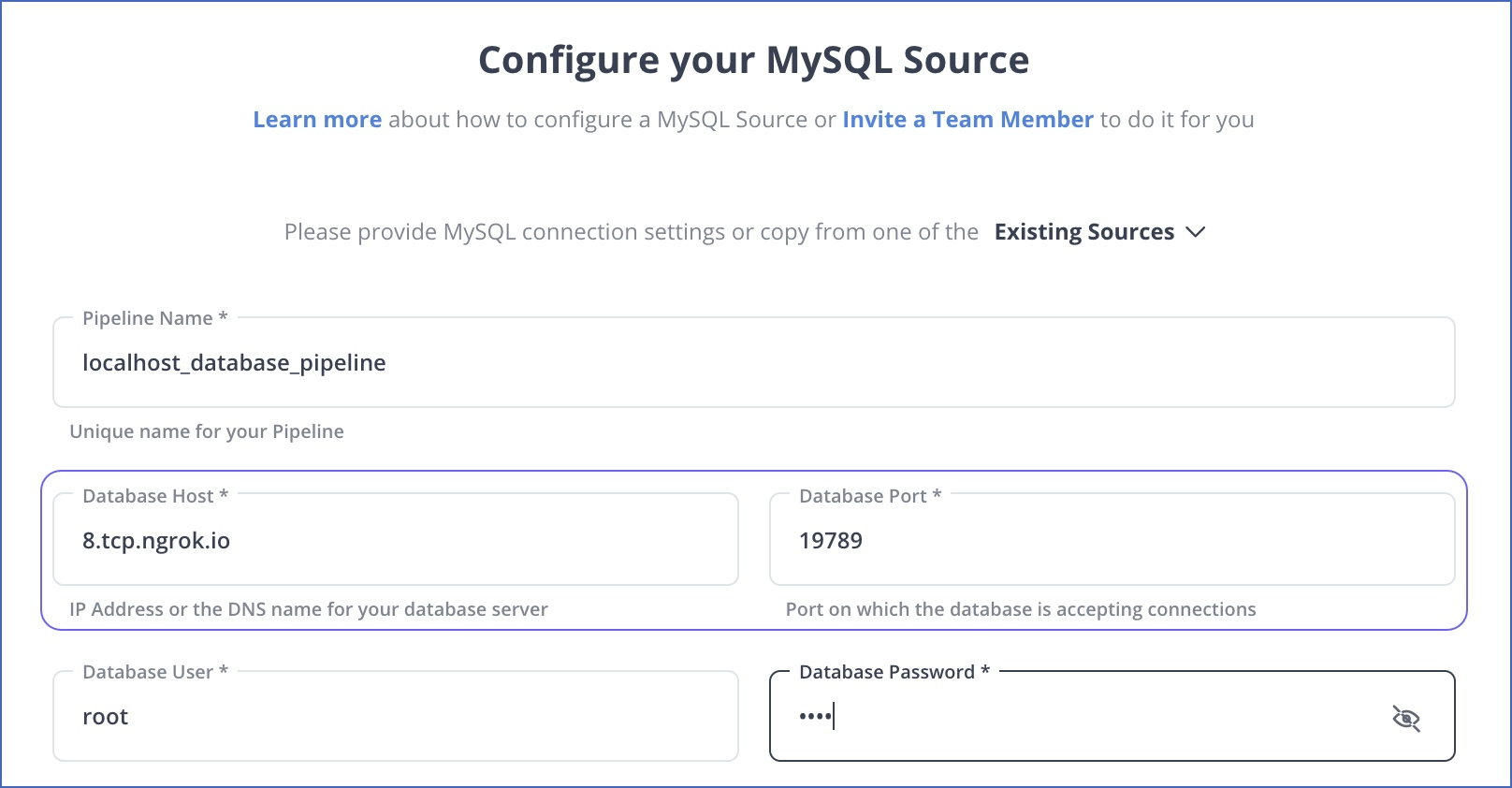

This method is simple, as it requires only two easy steps. Using Hevo, an automated, no-code solution, you can migrate your data from your source to BigQuery in a few minutes.

Step 1: Configure your source

For example, you can configure MySQL as the source.

Step 2: Configure your destination

Method 2: Loading Data Into BigQuery Manually

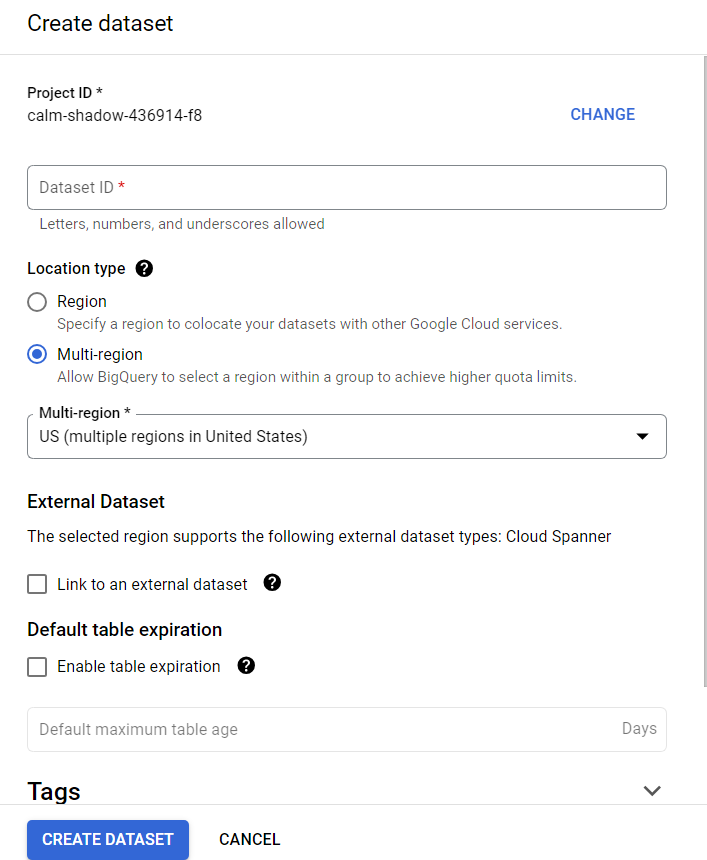

- Before you load any data, you need to create a dataset and a table in Google BigQuery. In the BigQuery console, select the project in which you want to create the dataset, and then choose Create dataset.

- In the Create dataset window, give your dataset an ID, select a data location, and set the default table expiration period.

Note: If you select “Never” for the default table expiration, tables in the dataset do not expire. For temporary tables, you can instead specify the number of days after which they are deleted. The data location cannot be changed after the dataset is created.

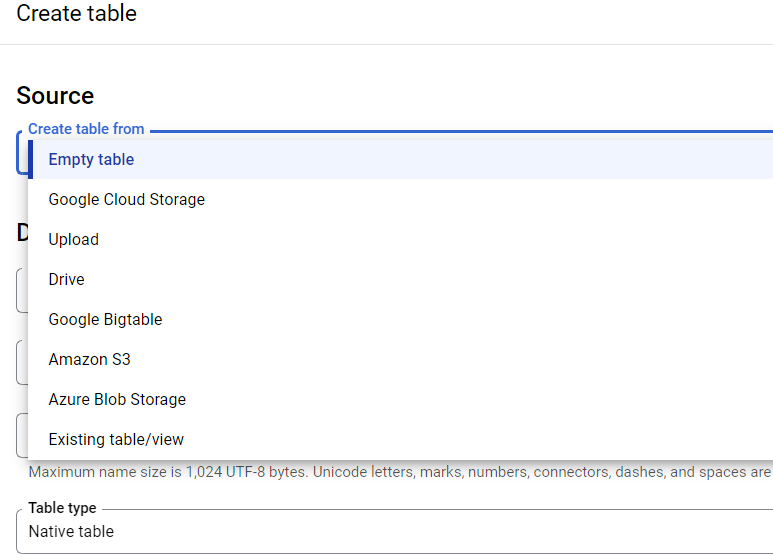

- Next, create a table in the dataset.
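The same two steps can also be scripted with the `bq mk` command from the bq command-line tool. The Python sketch below only builds the argument lists so you can inspect them before running anything; the dataset name, table name, location, expiration, and schema are hypothetical examples, and running the commands assumes the Google Cloud CLI (which includes `bq`) is installed and authenticated.

```python
import shlex
from typing import Optional

SECONDS_PER_DAY = 86_400


def mk_dataset_cmd(dataset: str, location: str, default_expiration_days: Optional[int] = None) -> list[str]:
    """Build a `bq mk --dataset` command; omit the expiration to keep tables forever ("Never")."""
    # --location is a global bq flag, so it goes before the mk command.
    cmd = ["bq", f"--location={location}", "mk", "--dataset"]
    if default_expiration_days is not None:
        # bq takes the default table expiration in seconds, not days.
        cmd.append(f"--default_table_expiration={default_expiration_days * SECONDS_PER_DAY}")
    cmd.append(dataset)
    return cmd


def mk_table_cmd(table: str, schema: dict[str, str]) -> list[str]:
    """Build a `bq mk --table` command with an inline "name:TYPE,..." schema."""
    inline_schema = ",".join(f"{name}:{field_type}" for name, field_type in schema.items())
    return ["bq", "mk", "--table", table, inline_schema]


if __name__ == "__main__":
    # Hypothetical names; replace them with your own dataset and table.
    print(shlex.join(mk_dataset_cmd("my_dataset", "US", default_expiration_days=7)))
    print(shlex.join(mk_table_cmd("my_dataset.customers", {"id": "INTEGER", "name": "STRING"})))
```

To execute a command, pass the list to `subprocess.run(cmd, check=True)`. Keeping each argument as a separate list item avoids shell-quoting problems.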

After creating the table, you can load data into BigQuery. Let’s explore the different ways to upload data into Google BigQuery:

- Upload Data from CSV File

- Upload Data from JSON Files

- Upload Data from Google Cloud Storage

- Upload Data from Other Google Services

- Download Data with the API

1. Upload Data from CSV File

To upload data from a CSV file, open the Create table window, select Upload as the source, and configure the following:

- File Selection: Choose the file and format (e.g., CSV, JSON).

- Destination: Choose the project, dataset, and table name in Google BigQuery.

- Table Type: Select between native and external tables.

- Table Structure:

  - Let BigQuery auto-detect the schema, or

  - Add fields manually, either by editing the schema as text or with the ‘+ Add field’ button.

- Advanced Options: Adjust parsing settings for CSV files as needed.
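If you define the schema manually, the edit-as-text box expects a JSON list of fields, each with a name, type, and mode. The sketch below builds such a schema and a matching `bq load` command for a local CSV file, with the parsing options from the Advanced Options step expressed as flags. The file, table, and field names are hypothetical; `--skip_leading_rows`, `--field_delimiter`, `--allow_quoted_newlines`, and `--autodetect` are standard `bq load` flags.

```python
import json
from typing import Optional


def schema_field(name: str, field_type: str, mode: str = "NULLABLE") -> dict:
    """One entry of a BigQuery JSON schema (the format used when editing the schema as text)."""
    return {"name": name, "type": field_type, "mode": mode}


def csv_load_cmd(table: str, source_path: str, schema_path: Optional[str] = None,
                 skip_leading_rows: int = 1, field_delimiter: str = ",",
                 allow_quoted_newlines: bool = False) -> list[str]:
    """Build a `bq load` command for a CSV file with a few common parsing options."""
    cmd = [
        "bq", "load", "--source_format=CSV",
        f"--skip_leading_rows={skip_leading_rows}",  # e.g. 1 to skip a header row
        f"--field_delimiter={field_delimiter}",
    ]
    if allow_quoted_newlines:
        cmd.append("--allow_quoted_newlines")  # quoted fields may contain line breaks
    if schema_path is None:
        # No schema supplied: let BigQuery infer one, like the console's auto-detect.
        cmd.append("--autodetect")
        return cmd + [table, source_path]
    return cmd + [table, source_path, schema_path]


if __name__ == "__main__":
    schema = [schema_field("id", "INTEGER", "REQUIRED"), schema_field("email", "STRING")]
    print(json.dumps(schema, indent=2))  # paste into the text editor or save as schema.json
    print(csv_load_cmd("my_dataset.customers", "./customers.csv", "./schema.json"))
```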

2. Upload Data from JSON Files

To upload data from JSON files, repeat the steps above to create or select the dataset and table you are working with, and then select JSON as the file format. The file must be newline-delimited JSON. You can upload a JSON file from your computer or from Google Cloud Storage; choosing Google Drive creates an external table instead of loading the data.

Further information about the JSON format is available on Google Cloud Documentation.
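Because each line must be a complete JSON record, checking the file first can save a failed load job. This standard-library Python sketch reports the line numbers that are not single JSON objects; it is our own helper, not part of BigQuery, and it flags blank lines conservatively. A file that passes can then be loaded with `bq load --source_format=NEWLINE_DELIMITED_JSON` followed by your table and file names.

```python
import json


def find_invalid_ndjson_lines(text: str) -> list[int]:
    """Return the 1-based numbers of lines that are not a single JSON object."""
    lines = text.split("\n")
    if lines and lines[-1] == "":
        lines.pop()  # a final trailing newline is expected, not an extra record
    bad = []
    for lineno, line in enumerate(lines, start=1):
        line = line.rstrip("\r")  # tolerate Windows line endings
        try:
            value = json.loads(line)
        except json.JSONDecodeError:
            # Malformed JSON, a record split across lines, or a blank line.
            bad.append(lineno)
            continue
        if not isinstance(value, dict):
            bad.append(lineno)  # valid JSON, but not an object (e.g. an array)
    return bad


if __name__ == "__main__":
    sample = '{"id": 1}\n{"id": 2, "tags": ["x"]}\n[3]\n'
    print(find_invalid_ndjson_lines(sample))  # → [3]
```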

3. Upload Data from Google Cloud Storage

Google Cloud Storage allows you to store and transfer data online securely. The following file formats can be uploaded from Google Cloud Storage to Google BigQuery:

- CSV

- JSON

- Avro

- Parquet

- ORC

- Cloud Datastore exports
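When loading from Cloud Storage, the `bq load` command's `--source_format` flag must match the file type. The sketch below infers it from the file extension for the formats listed above. The extension-to-format mapping is our own convention (you still pass the format explicitly to BigQuery), and the bucket and table names are hypothetical.

```python
import os

# File extension -> value for bq's --source_format flag.
SOURCE_FORMATS = {
    ".csv": "CSV",
    ".json": "NEWLINE_DELIMITED_JSON",  # the file must still be newline-delimited
    ".avro": "AVRO",
    ".parquet": "PARQUET",
    ".orc": "ORC",
    ".export_metadata": "DATASTORE_BACKUP",  # Datastore export metadata file
}

# Formats without an embedded schema; let BigQuery auto-detect one for them.
NEEDS_SCHEMA = {"CSV", "NEWLINE_DELIMITED_JSON"}


def gcs_load_cmd(table: str, uri: str) -> list[str]:
    """Build a `bq load` command for a Cloud Storage URI, inferring the format from its extension."""
    if not uri.startswith("gs://"):
        raise ValueError(f"expected a gs:// URI, got {uri!r}")
    extension = os.path.splitext(uri)[1].lower()
    source_format = SOURCE_FORMATS.get(extension)
    if source_format is None:
        raise ValueError(f"cannot infer a source format from {uri!r}")
    cmd = ["bq", "load", f"--source_format={source_format}"]
    if source_format in NEEDS_SCHEMA:
        cmd.append("--autodetect")
    return cmd + [table, uri]


if __name__ == "__main__":
    # Hypothetical bucket and table names.
    print(gcs_load_cmd("my_dataset.sales", "gs://my-bucket/sales/*.parquet"))
```

A wildcard such as `*.parquet` lets a single load job pick up every matching object.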


4. Upload Data from Other Google Services

- Configure the BigQuery Data Transfer Service:

  - Select or create a Google Cloud project.

  - Enable billing for the project (mandatory for certain services).

- Services Requiring Billing:

  - Campaign Manager

  - Google Ad Manager

  - Google Ads

  - YouTube – Channel Reports

  - YouTube – Content Owner Reports

- Starting the Service:

  - Go to the BigQuery home page and select “Transfers” from the left-hand menu.

  - You need permission to create transfers, for example through the BigQuery Admin role.

- Select Data Source:

  - Choose your desired data source in the subsequent window.

- Access Methods:

  - You can access the BigQuery Data Transfer Service through:

    - The Google Cloud console

    - The bq command-line tool

    - The BigQuery Data Transfer Service API

- Data Upload:

  - The service loads data into BigQuery automatically on a regular schedule.

  - Note: The service cannot be used to export data out of BigQuery.
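With the bq command-line tool, a transfer is created by `bq mk --transfer_config`. The sketch below assembles that command in Python, serializing the source-specific parameters to JSON. The data source ID and parameter names differ per service and are placeholders here; look up the exact values for your service in the BigQuery Data Transfer Service documentation before running it.

```python
import json
import shlex


def transfer_config_cmd(target_dataset: str, display_name: str,
                        data_source: str, params: dict) -> list[str]:
    """Build a `bq mk --transfer_config` command for the BigQuery Data Transfer Service."""
    return [
        "bq", "mk", "--transfer_config",
        f"--target_dataset={target_dataset}",
        f"--display_name={display_name}",
        f"--data_source={data_source}",
        # Source-specific settings are passed as a single JSON object.
        f"--params={json.dumps(params, sort_keys=True)}",
    ]


if __name__ == "__main__":
    # Placeholder values: replace the data source ID and params with the
    # ones documented for your service.
    cmd = transfer_config_cmd("marketing", "Daily import", "YOUR_DATA_SOURCE_ID",
                              {"example_param": "value"})
    print(shlex.join(cmd))
```

Passing the arguments as a list, rather than one shell string, keeps the JSON in `--params` from being mangled by shell quoting.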

5. Download Data with the API

With Cloud Client Libraries, you can use your favorite programming language to work with the Google BigQuery API.


To start, create or select the project you want to work with. Then, in the Google Cloud console, open APIs & Services and make sure the BigQuery API is enabled for that project.
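Once the API is enabled, a load job takes only a few lines with the Python client library (`google-cloud-bigquery`). The sketch below loads a CSV file from Cloud Storage and appends it to a table, assuming Application Default Credentials are configured; the table ID and URI you pass in are your own. The small `split_table_id` helper is ours, added to reject malformed IDs early, and the library import sits inside the function so the helper runs even where the library is not installed.

```python
def split_table_id(table_id: str) -> tuple[str, str, str]:
    """Split 'project.dataset.table'; rsplit keeps domain-scoped project IDs intact."""
    parts = table_id.rsplit(".", 2)
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected project.dataset.table, got {table_id!r}")
    return parts[0], parts[1], parts[2]


def load_csv_from_gcs(table_id: str, uri: str) -> int:
    """Load a CSV file from Cloud Storage into a table and return the table's row count."""
    split_table_id(table_id)  # fail fast on a malformed ID before calling the API
    # Imported here so the helper above works without the library installed
    # (pip install google-cloud-bigquery).
    from google.cloud import bigquery

    client = bigquery.Client()  # uses Application Default Credentials
    job_config = bigquery.LoadJobConfig(
        source_format=bigquery.SourceFormat.CSV,
        skip_leading_rows=1,  # skip the header row
        autodetect=True,
        write_disposition=bigquery.WriteDisposition.WRITE_APPEND,
    )
    load_job = client.load_table_from_uri(uri, table_id, job_config=job_config)
    load_job.result()  # blocks until the job finishes; raises if it failed
    return client.get_table(table_id).num_rows
```

For example, `load_csv_from_gcs("my-project.my_dataset.events", "gs://my-bucket/events.csv")` (hypothetical names) waits for the job and returns the table's new row count.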


Conclusion

In this blog, you learned about Google BigQuery and how to load data into it. You also explored different ways to upload data into Google BigQuery, including from CSV and JSON files. But if you want to automate your data flow, try Hevo.

Hevo is a no-code data pipeline. It supports pre-built integrations from 150+ data sources. You can load data into BigQuery from your desired data source in a few minutes.

Give Hevo a try by signing up for a 14-day free trial today and see how Hevo will suit your organization’s needs. Check out the pricing details to find the right plan for you.

Load data into BigQuery and share your experience with us in the comment section below.

Frequently Asked Questions

1. Is BigQuery a database or a data warehouse?

Google BigQuery is a fully managed, serverless data warehouse offered by Google Cloud.

2. What is the fastest way to load files into BigQuery?

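The fastest way to load files into BigQuery is generally to stage them in Google Cloud Storage and load them with the bq command-line tool or the API, using a compressed binary format such as Avro, which BigQuery can read in parallel. Partitioning and clustering the destination table don't speed up the load itself, but they make later queries faster and cheaper.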

3. How do I load data to Google BigQuery?

To load data to Google BigQuery, you can upload files directly (e.g., CSV, JSON) via the BigQuery web interface or use the BigQuery Data Transfer Service to import data from various Google services. Alternatively, you can use the bq command-line tool or BigQuery API for more advanced loading options.

4. How do I load data into an existing table in BigQuery?

To load data into an existing table in BigQuery, select the table in the BigQuery web interface, then upload your data file or configure a data transfer. You can also use the bq command-line tool's bq load command, specifying the table name and file source. By default, bq load appends rows to the existing table; add the --replace flag to overwrite its contents instead.
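To make the append-versus-overwrite choice explicit in scripts, the sketch below builds the `bq load` command with either `--noreplace` (append, the default behavior) or `--replace` (overwrite). The table and file names are hypothetical, and for a CSV file with a header row you would also add `--skip_leading_rows=1`.

```python
def load_existing_table_cmd(table: str, source: str, source_format: str = "CSV",
                            overwrite: bool = False) -> list[str]:
    """Build a `bq load` command that appends to, or overwrites, an existing table."""
    # bq load appends by default; --replace overwrites the table's existing data.
    # Spelling out --noreplace makes the append intent explicit.
    mode_flag = "--replace" if overwrite else "--noreplace"
    return ["bq", "load", f"--source_format={source_format}", mode_flag, table, source]
```

The existing table already has a schema, so no schema argument is needed here.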

5. How do I upload a database file to BigQuery?

To upload a database file to BigQuery, first export the data from your database into a supported format such as CSV, JSON, Avro, or Parquet, then import the file directly through the BigQuery web interface. Alternatively, you can use the bq command-line tool's bq load command, specifying the dataset and table for the upload.
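As one example of the export step, the standard-library Python sketch below dumps a table from a SQLite database to CSV with a header row, ready to upload. SQLite is just a stand-in here; most databases have their own export tools, and the table name is hypothetical.

```python
import csv
import io
import sqlite3
from typing import TextIO


def export_table_to_csv(conn: sqlite3.Connection, table: str, out: TextIO) -> int:
    """Write every row of `table` to `out` as CSV with a header row; return the row count."""
    # Table names can't be bound as SQL parameters, so only pass trusted names here.
    cursor = conn.execute(f'SELECT * FROM "{table}"')
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow([col[0] for col in cursor.description])  # header row
    count = 0
    for row in cursor:
        writer.writerow(row)  # None (SQL NULL) is written as an empty field
        count += 1
    return count


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER, name TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [(1, "Ana"), (2, "Bo, Jr.")])
    buffer = io.StringIO()
    export_table_to_csv(conn, "users", buffer)
    print(buffer.getvalue(), end="")
```

When writing to a file instead, open it with `newline=""` and `encoding="utf-8"` so the csv module controls line endings and BigQuery receives UTF-8.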