Key Takeaways

Loading data from MongoDB to Redshift can be done in two simple ways:

Method 1: Using Custom Scripts

Run mongoexport to export your collection to CSV or JSON

Upload the exported file to an S3 bucket with aws s3 cp

In Redshift, use the COPY command with your S3 path and IAM role to load the data into your table

Method 2: Using Hevo

Configure MongoDB as the source in Hevo by providing your connection URI and credentials

Select Redshift as the destination and enter your cluster, database, schema, and IAM role details

Easily move your data from MongoDB to Redshift to enhance your analytics capabilities. With Hevo’s intuitive pipeline setup, data flows in real-time—check out our 1-minute demo below to see the seamless integration in action!

If you are looking to move data from MongoDB to Redshift, I reckon that you are trying to upgrade your analytics setup to a modern data stack. Great move!

Kudos to you for taking up this mammoth of a task! In this blog, I have tried to share my two cents on how to make the data migration from MongoDB to Redshift easier for you.

Before we jump to the details, I feel it is important to understand a little bit about the nuances of how MongoDB and Redshift operate. This will ensure you understand the technical nuances that might be involved in MongoDB to Redshift ETL. In case you are already an expert at this, feel free to skim through these sections or skip them entirely.

What is MongoDB?

MongoDB distinguishes itself as a NoSQL database program. It uses JSON-like documents along with optional schemas. MongoDB is written in C++. MongoDB allows you to address a diverse set of data sets, accelerate development, and adapt quickly to change with key functionalities like horizontal scaling and automatic failover.

MongoDB is a strong choice of database when you have a huge volume of structured and unstructured data. Its features make scaling and flexibility smooth, and include data integration, load balancing, ad-hoc queries, sharding, indexing, and more.

Another advantage is that MongoDB also supports all common operating systems (Linux, macOS, and Windows). It also supports C, C++, Go, Node.js, Python, and PHP.

What is Amazon Redshift?

Amazon Redshift is a fully managed cloud data warehouse that lets companies store petabytes of data across easily accessible “Clusters” that you can query in parallel. Fully managed means that administrative tasks like maintenance, backups, configuration, and security are handled for you.

If you are a data practitioner who wants to use Amazon Redshift to work with Big Data, its modular node design makes your work easy to scale. It also allows you to gain more granular insight into datasets, because the compute nodes in a Redshift cluster are further divided into slices. Amazon Redshift’s multi-layered architecture allows multiple queries to be processed simultaneously, thus cutting down on waiting times. Apart from these, there are a few more benefits of Amazon Redshift you can unlock with the best practices in place.

Features of Amazon Redshift

- When you submit a query, Redshift checks the result cache for a valid, cached copy of the query result. If it finds a match, it does not execute the query and returns the cached result instead, which reduces the query’s runtime.

- Redshift’s Massively Parallel Processing (MPP) architecture lets you run even complicated queries over a large volume of data.

- Your data is stored in columnar format in Redshift tables. This reduces the number of disk I/O requests and improves analytical query performance.

Why perform MongoDB to Redshift ETL?

It is necessary to bring MongoDB’s data to a relational format data warehouse like AWS Redshift to perform analytical queries. It is simple and cost-effective to efficiently analyze all your data by using a real-time data pipeline. MongoDB is document-oriented and uses JSON-like documents to store data.

MongoDB doesn’t enforce schema restrictions while storing data, so application developers can quickly change the schema, add new fields, and drop older ones that are no longer used without worrying about tedious schema migrations. Because a MongoDB collection is schema-less, converting its data into a relational format is a non-trivial problem.

In my experience in helping customers set up their modern data stack, I have seen MongoDB be a particularly tricky database to run analytics on. Hence, I have also suggested an easier / alternative approach that can help make your journey simpler.

In this blog, I will talk about the two different methods you can use to set up a connection from MongoDB to Redshift in a seamless fashion: Using Custom ETL Scripts and with the help of a third-party tool, Hevo.

What Are the Methods to Move Data from MongoDB to Redshift?

These are the methods we can use to move data from MongoDB to Redshift in a seamless fashion:

- Method 1: Using Custom Scripts to Move Data from MongoDB to Redshift

- Method 2: Using an Automated Data Pipeline Platform to Move Data from MongoDB to Redshift

Hevo’s no-code data pipeline platform makes it effortless to move and transform your MongoDB data into Redshift—fast, reliable, and without writing a single line of code.

- Effortless Integrations: Integrate data from 150+ sources effortlessly, eliminating manual data transfers.

- Analytics-Ready in Redshift: Migrate and transform data seamlessly to make it analytics-ready.

- No-Code Automation: Automate real-time data loading with a smooth, reliable, and user-friendly platform.

Join 2,000+ happy customers who trust Hevo—like Meru, who cut costs by 70% and accessed insights 4x faster with Hevo Data.

Method 1: Using Custom Scripts to Move Data from MongoDB to Redshift

The following are the steps we can use to move data from MongoDB to Redshift using a Custom Script:

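- Step 1: Use mongoexport to export data. To get CSV output, pass --type=csv together with an explicit list of fields (field1, field2, and field3 below are placeholders for your own field names).

mongoexport --db=db_name --collection=collection_name --type=csv --fields=field1,field2,field3 --out=outputfile.csv

- Step 2: Upload the .csv file to the S3 bucket.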

2.1: Since MongoDB allows for varied schema, it might be challenging to comprehend a collection and produce an Amazon Redshift table that works with it. For this reason, before uploading the file to the S3 bucket, you need to create a table structure.

2.2: The AWS CLI lets you upload files from your local computer to S3. If you have already installed it, use the command below to upload the .csv file to the S3 bucket. Once the CSV file is in the S3 bucket, you can create the table schema from the command prompt.

aws s3 cp D:\outputfile.csv s3://S3bucket01/outputfile.csv

- Step 3: Create a table schema before loading the data into Redshift.

- Step 4: Using the COPY command, load the data from S3 to Redshift. Use the following COPY command to transfer files from the S3 bucket to Redshift if you’re following Step 2 (2.1).

COPY table_name

from 's3://S3bucket_name/table_name-csv.tbl'

iam_role 'arn:aws:iam::<aws-account-id>:role/<role-name>'

csv;

Use the COPY command to transfer files from the S3 bucket to Redshift if you’re following Step 2 (2.2). Add csv to the end of your COPY command in order to load files in CSV format, and ignoreheader 1 to skip the header row that mongoexport writes by default.

COPY db_name.table_name

FROM 's3://S3bucket_name/outputfile.csv'

iam_role 'arn:aws:iam::<aws-account-id>:role/<role-name>'

csv

ignoreheader 1;

We have successfully completed the MongoDB Redshift integration.
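If you script these steps, it can help to generate the COPY statement rather than type it by hand. The following is a minimal sketch, not a definitive implementation: the function name `build_copy_statement` and its parameters are hypothetical, and it assumes the CSV file came from mongoexport and therefore starts with a header row.

```python
def build_copy_statement(table, s3_path, iam_role_arn, skip_header=True):
    """Return a Redshift COPY statement that loads one CSV file from S3.

    Hypothetical helper: the caller supplies the table name, the S3 path,
    and the ARN of an IAM role that Redshift is allowed to assume.
    """
    if not s3_path.startswith("s3://"):
        raise ValueError("s3_path must start with 's3://'")
    lines = [
        f"COPY {table}",
        f"FROM '{s3_path}'",
        f"IAM_ROLE '{iam_role_arn}'",
        "CSV",
    ]
    if skip_header:
        # Assumption: the file has a header line (mongoexport's CSV default).
        lines.append("IGNOREHEADER 1")
    return "\n".join(lines) + ";"
```

You would pass the returned string to whichever SQL client you already use to talk to Redshift.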

For the scope of this article, we have highlighted the challenges faced while migrating data from MongoDB to Amazon Redshift. Towards the end of the article, a detailed list of advantages of using approach two is also given. You can check out Method 1 on our other blog and learn the detailed steps to migrate MongoDB to Amazon Redshift.

Limitations of Using Custom Scripts to Move Data from MongoDB to Redshift

Here is a list of limitations of using the manual method of moving data from MongoDB to Redshift:

- Schema Detection Cannot be Done Upfront: Unlike a relational database, a MongoDB collection doesn’t have a predefined schema. Hence, it is impossible to look at a collection and create a compatible table in Redshift upfront.

- Different Documents in a Single Collection: Different documents in a single MongoDB collection can have different sets of fields.

{

"name": "John Doe",

"age": 32,

"gender": "Male"

}

{

"first_name": "John",

"last_name": "Doe",

"age": 32,

"gender": "Male"

}

Different documents in a single collection can have incompatible field data types. Hence, the schema of the collection cannot be determined by reading one or a few documents.

Two documents in a single MongoDB collection can have fields with values of different types.

{

"name": "John Doe",

"age": 32,

"gender": "Male",

"mobile": "(424) 226-6998"

}

{

"name": "John Doe",

"age": 32,

"gender": "Male",

"mobile": 4242266998

}

The field mobile is a string and a number in the above documents respectively. It is a completely valid state in MongoDB. In Redshift, however, both these values will have to be converted to a string or a number before being persisted.
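One way to cope with varying fields and conflicting types is to scan every document before creating the table. The sketch below is only an illustration under stated assumptions: `infer_columns` and `redshift_type` are hypothetical names, the documents are already parsed into Python dicts, and the fallback of `VARCHAR(256)` is an arbitrary choice you would tune for your data.

```python
from collections import defaultdict


def redshift_type(py_types):
    """Pick one Redshift column type for the set of Python types seen in a field."""
    if py_types == {bool}:
        return "BOOLEAN"
    if py_types == {int}:
        return "BIGINT"
    if py_types and py_types <= {int, float}:
        return "DOUBLE PRECISION"
    # Mixed types (e.g. str and int) or strings: fall back to text.
    return "VARCHAR(256)"  # assumed default width


def infer_columns(documents):
    """Return {field: redshift_type} covering every field in every document."""
    seen = defaultdict(set)
    for doc in documents:
        for field, value in doc.items():
            types = seen[field]  # registers the field even if the value is null
            if value is not None:
                types.add(type(value))
    return {field: redshift_type(types) for field, types in seen.items()}
```

With the documents above, `mobile` appears as both a string and a number, so this sketch would map it to a text column, while `age` stays numeric.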

- New Fields can be Added to a Document at Any Point in Time: It is possible to add fields to a document in MongoDB by running a simple update to the document. In Redshift, however, the process is harder as you have to construct and run ALTER statements each time a new field is detected.

- Character Lengths of String Columns: MongoDB doesn’t put a limit on the length of string fields; its only limit is 16MB on the size of the entire document. In Redshift, however, it is a common practice to restrict string columns to a certain maximum length for better space utilization. Hence, each time you encounter a longer value than expected, you will have to resize the column.

- Nested Objects and Arrays in a Document: A document can have nested objects and arrays with a dynamic structure. The most complex of MongoDB ETL problems is handling nested objects and arrays.

{

"name": "John Doe",

"age": 32,

"gender": "Male",

"address": {

"street": "1390 Market St",

"city": "San Francisco",

"state": "CA"

},

"groups": ["Sports", "Technology"]

}

MongoDB allows nesting objects and arrays to several levels. In a complex real-life scenario, it may become a nightmare trying to flatten such documents into rows for a Redshift table.
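For simple cases, flattening can be sketched in a few lines. The example below is one possible approach, not the only one: the function name `flatten` and the underscore separator are assumptions, nested objects become prefixed columns, and arrays are kept as JSON text in a single column rather than being split into a child table.

```python
import json


def flatten(doc, parent_key="", sep="_"):
    """Flatten nested objects into prefixed column names, one level at a time."""
    flat = {}
    for key, value in doc.items():
        column = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            # Recurse so "address": {"city": ...} becomes "address_city".
            flat.update(flatten(value, column, sep))
        elif isinstance(value, list):
            # Arrays have no natural single-row shape; store them as JSON text.
            flat[column] = json.dumps(value)
        else:
            flat[column] = value
    return flat
```

Applied to the document above, this would produce columns such as `address_street`, `address_city`, and `address_state`, with `groups` stored as a JSON string. Deeply nested arrays of objects usually need a separate table instead.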

- Data Type Incompatibility between MongoDB and Redshift: Not all data types of MongoDB are compatible with Redshift. ObjectId, Regular Expression, and JavaScript are not supported by Redshift. While building an ETL solution to migrate data from MongoDB to Redshift from scratch, you will have to write custom code to handle these data types.
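Two of the limitations above, new fields appearing at any time and string values outgrowing their column, both end in ALTER statements. The sketch below shows how a script might plan them. It is a simplified illustration: `plan_alter_statements` is a hypothetical helper, it only handles string values, it assumes you track the current VARCHAR length of each column yourself, and the default width of 256 is arbitrary.

```python
MAX_VARCHAR = 65535  # Redshift's upper bound for VARCHAR, measured in bytes


def plan_alter_statements(table, varchar_lengths, row, default_length=256):
    """Return the ALTER statements needed before `row` can be loaded.

    varchar_lengths maps each existing string column to its current length.
    """
    statements = []
    for column, value in row.items():
        if not isinstance(value, str):
            continue  # non-string types are out of scope for this sketch
        needed = len(value.encode("utf-8"))  # VARCHAR lengths count bytes
        if needed > MAX_VARCHAR:
            raise ValueError(f"{column}: value exceeds the VARCHAR limit")
        current = varchar_lengths.get(column)
        if current is None:
            # Field not seen before: add a new column.
            length = max(default_length, needed)
            statements.append(
                f"ALTER TABLE {table} ADD COLUMN {column} VARCHAR({length});"
            )
        elif needed > current:
            # Existing column is too narrow: widen it to fit the value.
            statements.append(
                f"ALTER TABLE {table} ALTER COLUMN {column} TYPE VARCHAR({needed});"
            )
    return statements
```

In practice you would likely widen columns in larger steps than the exact byte count, so that you are not altering the table for every slightly longer value.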

Method 2: Using Third-Party ETL Tools to Move Data from MongoDB to Redshift

Here are the steps you can use to migrate data from MongoDB to Redshift effortlessly with Hevo.

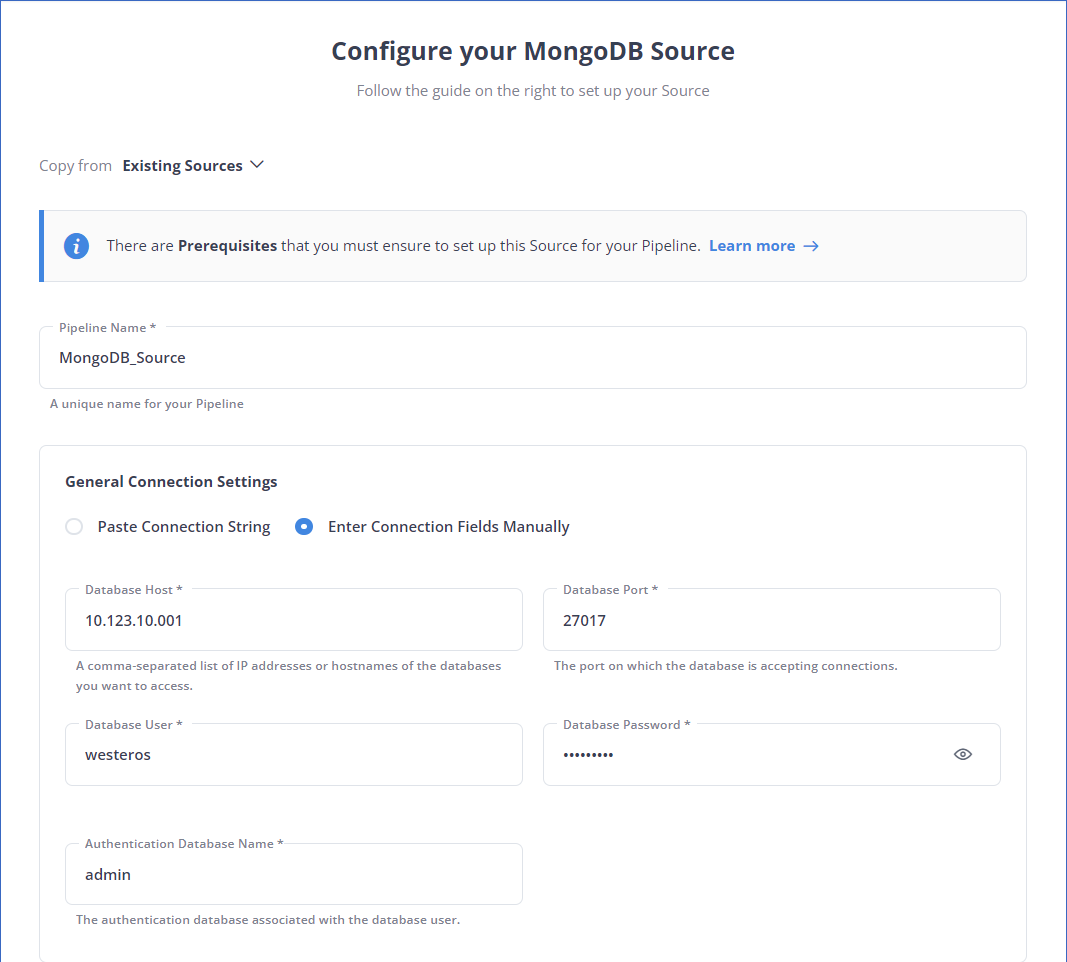

Step 1: Configure Your Source

Configure MongoDB as the source in Hevo by entering details like Database Host, Database Port, Database User, Database Password, Pipeline Name, Connection URI, and the connection settings.

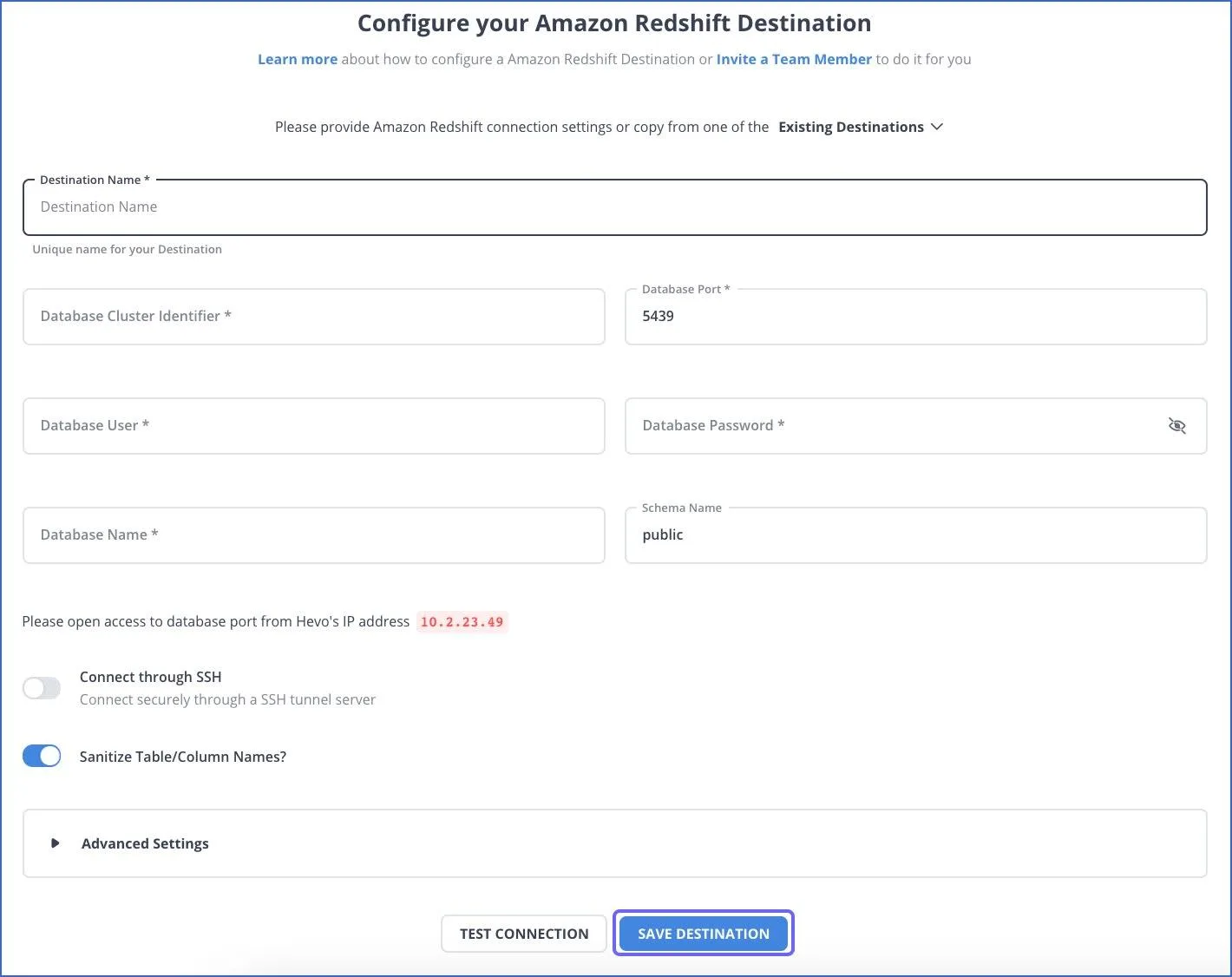

Step 2: Integrate Data

Load data from MongoDB to Redshift by providing your Redshift database credentials like Database Port, Username, Password, Name, Schema, and Cluster Identifier, along with the Destination Name.

Hevo supports 150+ data sources, including MongoDB, and destinations like Redshift, Snowflake, BigQuery, and much more. Hevo’s fault-tolerant and scalable architecture ensures that the data is handled in a secure, consistent manner with zero data loss.

Give Hevo a try, and you can seamlessly export MongoDB to Redshift in minutes.

What are the Common Challenges when Migrating from MongoDB to Redshift?

Are you thinking about moving your data from MongoDB to Amazon Redshift? You’re not alone. Many teams are shifting to Redshift for its powerful analytics capabilities. But the journey isn’t always smooth. MongoDB and Redshift are built on very different foundations, and that can create some friction during migration.

Here are a few common issues you might face and how to work around them.

1. Schema Differences Can Get Tricky

MongoDB offers flexibility and lacks a predefined schema, making it ideal for development, whereas Redshift requires structured and well-defined schemas. If your data contains nested documents or varying fields, the process can become complicated during the transfer.

Solution: Flatten your MongoDB data before importing it into Redshift. Use ETL tools such as Hevo or custom scripts to normalize the schema and convert nested JSON into flat tables compatible with Redshift.

2. Data Type Mismatches

MongoDB has its own data types (like ObjectId, embedded arrays, or timestamps) that don’t always play nicely with Redshift. Trying to insert these directly can cause errors or unexpected behavior.

Solution: During transformation, map and change data types. Use an ETL tool like Hevo, which intelligently detects and converts data types during the transfer process. Other tools like Talend or Apache NiFi can also help ensure your data types are properly aligned.
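If you go the custom-script route, the mapping step might look like the sketch below. It assumes the documents come from mongoexport's JSON output, which wraps MongoDB-specific types in Extended JSON objects such as `{"$oid": ...}` and `{"$date": ...}`. The function name `simplify` is hypothetical, only a handful of wrappers are handled, and dates are assumed to be ISO-8601 strings.

```python
from datetime import datetime, timezone


def simplify(value):
    """Replace Extended JSON wrappers with plain values a Redshift column can hold."""
    if isinstance(value, list):
        return [simplify(item) for item in value]
    if not isinstance(value, dict):
        return value
    keys = set(value)
    if keys == {"$oid"}:
        return value["$oid"]  # ObjectId becomes its 24-character hex string
    if keys == {"$date"} and isinstance(value["$date"], str):
        # Normalize to UTC in the 'YYYY-MM-DD HH:MM:SS' timestamp layout.
        parsed = datetime.fromisoformat(value["$date"].replace("Z", "+00:00"))
        return parsed.astimezone(timezone.utc).strftime("%Y-%m-%d %H:%M:%S")
    if keys == {"$regularExpression"}:
        regex = value["$regularExpression"]
        return f"/{regex['pattern']}/{regex['options']}"  # stored as text
    if keys == {"$code"}:
        return value["$code"]  # JavaScript source stored as text
    # Ordinary nested object: simplify each member.
    return {key: simplify(item) for key, item in value.items()}
```

Anything this sketch does not recognize is passed through unchanged, so you would still need a flattening or validation step afterwards.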

3. Performance Issues with Large Datasets

Bulk migrating a massive MongoDB collection can lead to slow transfers or timeouts, especially if you’re inserting rows directly into Redshift.

Solution: A more efficient approach is to initially export your data to Amazon S3 (in either CSV or Parquet format) and then utilize Redshift’s COPY command for bulk data loading. It’s quicker, more dependable, and designed for large data transfers.

4. Inconsistent or Missing Data

Since MongoDB doesn’t enforce a strict schema, it’s common to have missing or inconsistent fields across documents. Redshift, however, needs clean, well-structured data.

Solution: Perform a data validation step before the migration. Fill in missing values with sensible defaults, and make sure each field aligns with your target schema in Redshift.
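A validation step of this kind can be quite small. The following sketch is one way to do it under stated assumptions: `validate_row` is a hypothetical helper, the target schema is expressed as a mapping from column name to a Python type, and rows that cannot be coerced are reported rather than silently dropped.

```python
def validate_row(row, target_schema, defaults):
    """Align one document with the target schema.

    Returns (clean_row, errors). Missing values take the column default,
    mismatched values are coerced when possible, and failures are recorded.
    """
    clean, errors = {}, []
    for column, expected in target_schema.items():
        value = row.get(column)
        if value is None:
            value = defaults.get(column)  # may still be None (loads as NULL)
        if value is not None and not isinstance(value, expected):
            try:
                value = expected(value)
            except (TypeError, ValueError):
                errors.append(
                    f"{column}: cannot convert {value!r} to {expected.__name__}"
                )
                value = None
        clean[column] = value
    return clean, errors
```

Fields that are not in the target schema are ignored here; whether to drop them or add columns for them is a separate decision.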

Additional Resources for MongoDB Integrations and Migrations

- Stream data from MongoDB Atlas to BigQuery

- Move Data from MongoDB to MySQL

- Connect MongoDB to Snowflake

- Connect MongoDB to Tableau

Conclusion

In this blog, I have talked about the two different methods you can use to set up a connection from MongoDB to Redshift in a seamless fashion: Using Custom ETL Scripts and with the help of a third-party tool, Hevo.

Beyond MongoDB, you can use Hevo to migrate data from an array of different sources: databases, cloud applications, SDKs, and more. This gives you the flexibility to replicate data from any supported source, like MongoDB, to Redshift.

You can additionally model your data, build complex aggregates and joins to create materialized views for faster query executions on Redshift. You can define the interdependencies between various models through a drag-and-drop interface with Hevo’s Workflows to convert MongoDB data to Redshift.

FAQs

1. How to migrate MongoDB to Redshift?

There are two ways to migrate MongoDB to Redshift:

Custom Scripts: Manually extract, transform, and load data.

Hevo: Use an automated pipeline like Hevo for a no-code, real-time data migration with minimal effort and automatic sync.

2. How can I deploy MongoDB on AWS?

You can deploy MongoDB on AWS using two options:

Manual Setup: Launch an EC2 instance, install MongoDB, and configure security settings.

Amazon DocumentDB: Use Amazon’s managed service, compatible with MongoDB, for easier setup and maintenance.

3. How do I transfer data to Redshift?

You can transfer data to Redshift using:

COPY Command: Load data from S3, DynamoDB, or an external source.

ETL Tools: Use services like AWS Glue or Hevo for automated, real-time data transfer.