Businesses use Amazon RDS to simplify MySQL database management, enhance performance, and ensure high availability. MySQL on Amazon RDS to BigQuery migration offers a robust and scalable solution for businesses to perform advanced data analytics. You can leverage the capabilities of BigQuery data warehouse to analyze your marketing, customer support, and sales data from MySQL on Amazon RDS data.

While MySQL excels in creating snappy transactional databases, it may not meet the requirements for efficient analytical work. The advanced analytical capabilities of BigQuery can offer a competitive advantage with practical insights when dealing with multiple data sources. This article discusses the two methods to load data from MySQL on Amazon RDS to BigQuery.

Method 1: Move Data from MySQL on Amazon RDS Using CSV Files

Export your data manually from MySQL on Amazon RDS into CSV files and then upload them to BigQuery for further processing.

Method 2: Migrate from MySQL on Amazon RDS to BigQuery Using Hevo

Use Hevo’s automated, no-code pipeline to seamlessly transfer data from MySQL on Amazon RDS to BigQuery, ensuring real-time sync and pre/post-load transformations.

Table of Contents

Method 1: Move Data from MySQL on Amazon RDS to BigQuery using CSV Export/Import

Use mysqldump command to create a database backup file in the CSV format. With this command, you can extract data from MySQL on Amazon RDS and then load it to Google BigQuery. The command creates a single MySQL database backup file in CSV format.

Follow these steps to connect MySQL on Amazon RDS to BigQuery:

Step 1.1: Perform a MySQL Dump to Extract Data in CSV format

In a MySQL database, you must run the mysqldump command to create a database backup in CSV format.

Here is the basic syntax of the mysqldump command:

mysqldump -u [username] -p -t -T/path/to/directory --fields-terminated-by=, [database] [tableName]- -u [username]: It specifies the MySQL username to connect to the database.

- -p: It tells mysqldump to prompt for the MySQL user’s password. After entering the password, mysqldump will proceed with the export.

- -t: It instructs mysqldump to exclude the CREATE TABLE statements from the output. It is often used when exporting data only.

- -T /path/to/directory: It specifies the target directory where the data will be exported. In this case, the data will be written to files in CSV format in the specified directory on your local system.

- [database]: This is the name of the MySQL database from which the data will be exported.

- [tableName]: This is the name of the specific table within the database from which the data will be exported.

Once you execute this command, the MySQL database backup file will be downloaded in CSV format.

Step 1.2: Load the extracted CSV files to BigQuery

After extracting data from MySQL in the CSV format, you can load it into BigQuery with a batch-load job using Python.

To execute the process, you must run the following code:

from Google.cloud import bigquery

job_config = bigquery.LoadJobConfig(

source_format=bigquery.SourceFormat.CSV, skip_leading_rows=1,

autodetect=True,

)

with open(file_path, "rb") as source_file:

job = client.load_table_from_file(source_file, table_id,

job_config=job_config)

job.result()

table = client.get_table(table_id)

print(

"Loaded {} rows and {} columns to {}".format(

table.num_rows, len(table.schema), table_id

)

)

Although this method is time-consuming and effort-intensive, it is suitable for the following use cases:

- One-Time Data Integration: This method is ideal if you’re seeking a one-time data migration from Amazon RDS MySQL to BigQuery and don’t require real-time data synchronization.

- Low Volume Data: Using CSV files for data migration can be cost-effective when the dataset size is relatively small. This method eliminates the need for complex data integration tools.

- Data Security: Businesses with strict data privacy requirements may choose this method to retain complete control over their data without relying on third-party apps.

Limitations of using CSV files for MySQL on Amazon RDS to BigQuery Migration

- Not for Large Volume Data: This method involves manual export, transfer, and loading of CSV files, which can be time-consuming and labor-intensive, especially for large datasets.

- Multiple Manual Steps: The manual nature of this integration process makes it prone to errors like data duplication, causing data consistency issues.

- No Real-Time Data Synchronization: There is no automated real-time data synchronization since it’s involves several manual steps. As a result, the CSV file method allows businesses only to move historical data without real-time updates.

Method 2: Automating the Data Migration Process using a No-Code Tool

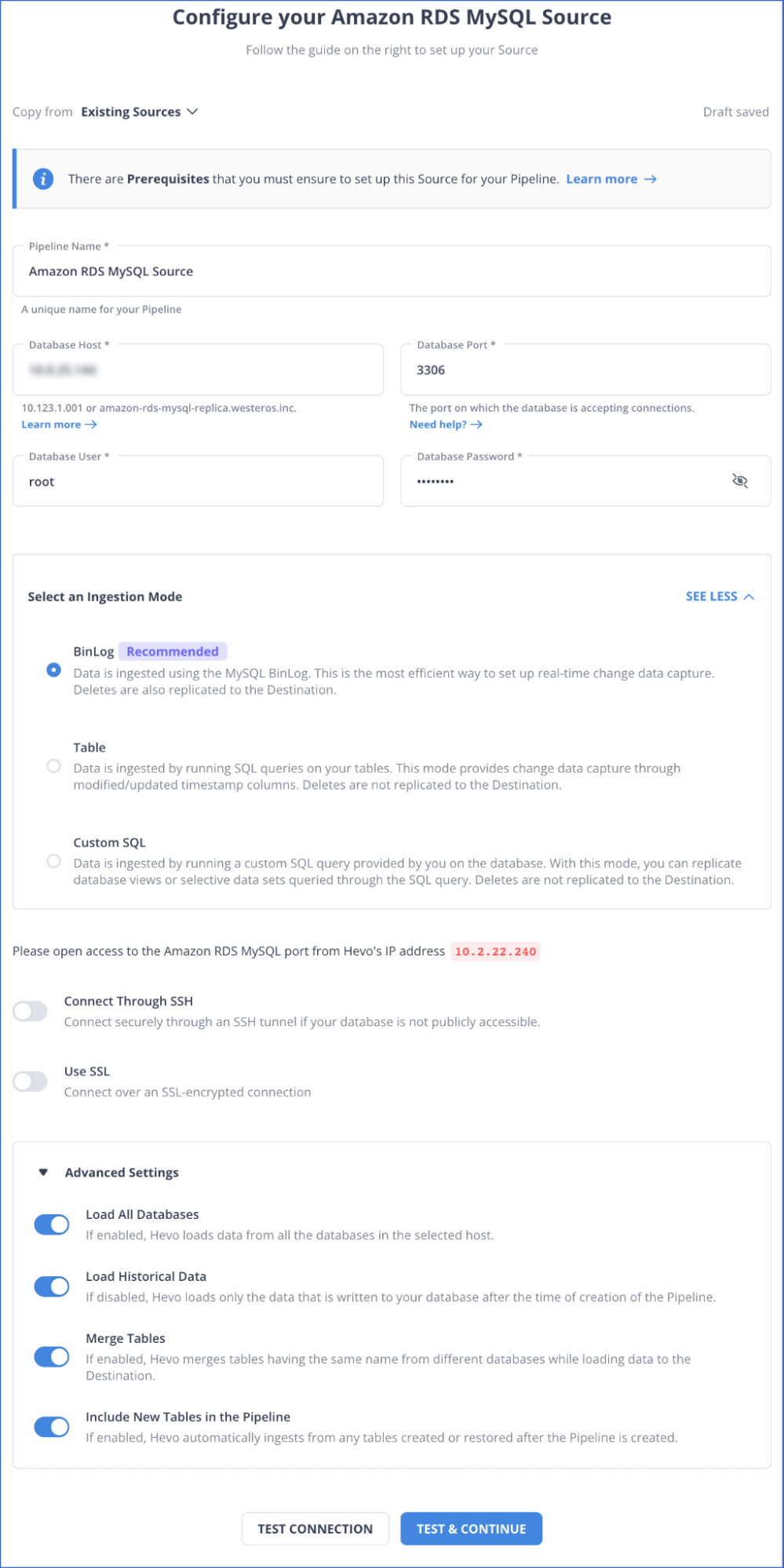

Step 2.1: Configure MySQL on Amazon RDS as your Source

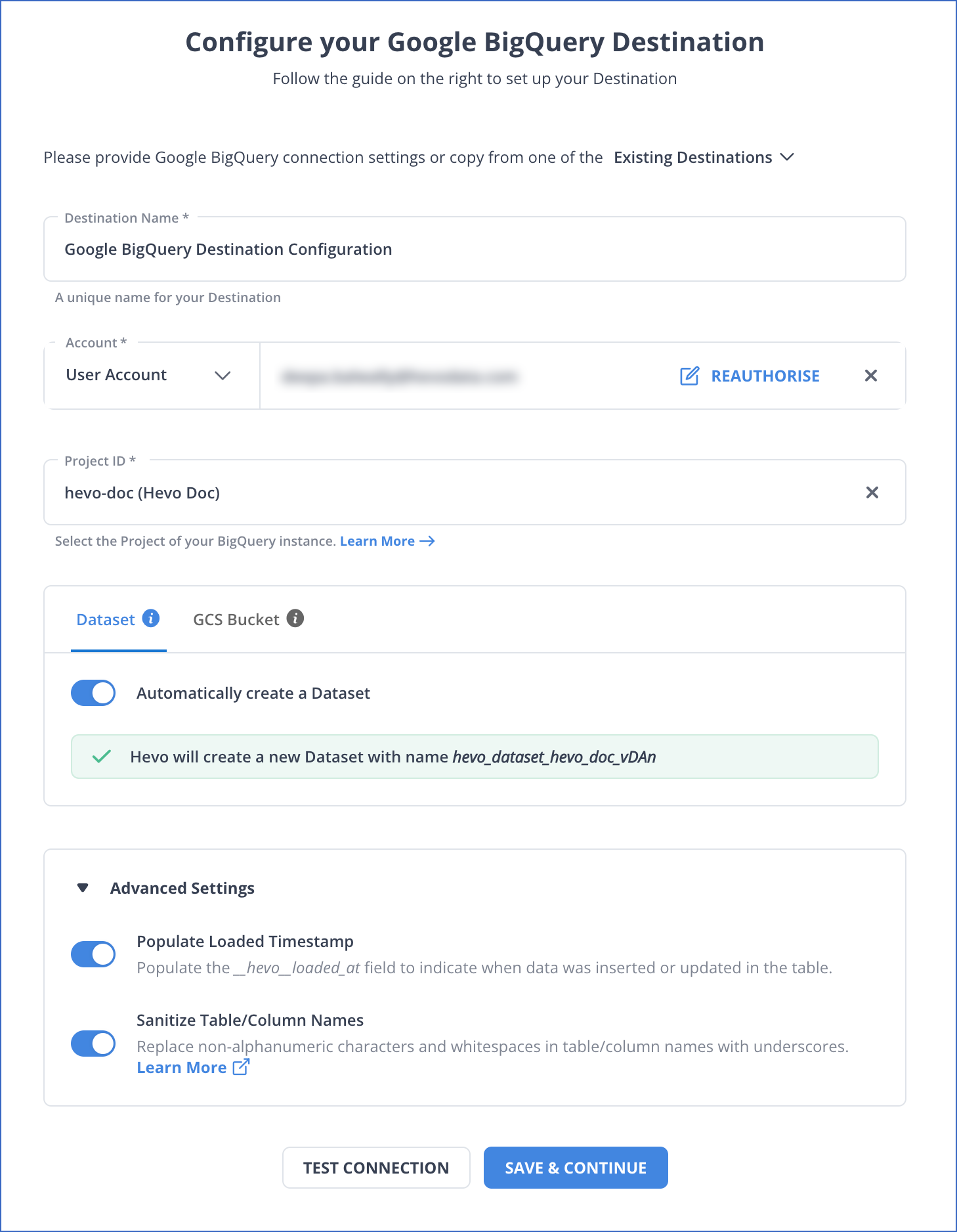

Step 2.2: Configure BigQuery as your Destination

With the two simple steps, you successfully connected MySQL on Amazon RDS with BigQuery. There are numerous reasons to choose Hevo as your no-code ETL tool for integrating MySQL on Amazon RDS with BigQuery.

Significant Features of Hevo

- Fully Managed Service & Live Support: Hevo manages the entire ETL process, from data extraction to loading, ensuring flawless execution. Additionally, Hevo provides round-the-clock support for your data integration queries via email, call, or live chat.

- Pre-Built Connectors: Hevo offers 150+ pre-built connectors for various data sources, enabling you to establish an ETL pipeline quickly.

- Live Monitoring: Hevo provides live monitoring support, allowing you to check the data flow at any point in time. You can also receive instant notifications about your data transfer pipelines across devices.

- Transformation: Hevo provides a drag-and-drop transformation feature, a user-friendly method of performing simple data transformations. Alternatively, you can use the Python interface for specific data transformations.

What Can You Achieve by Integrating Data from MySQL on Amazon RDS to BigQuery?

Here are some of the analyses you can perform after MySQL on Amazon RDS to Bigquery replication:

- Examine the different phases of your sales funnel to gain a deeper understanding of your customer’s journey.

- Identify high-value customers who contribute the most to revenue generation, and prioritize these customers by offering personalized incentives to build stronger relationships.

- Use data from various sources, such as project management tools, HR systems, and communication platforms, to define key performance indicators (KPIs) for team performance.

- Integrate transactional data from diverse functional groups, such as Sales, Marketing, Product, and Human Resources for insights and answers to critical questions. For instance:

- Identify top-selling products and customer buying patterns.

- Assess process inefficiencies while purchasing products or services.

- Identify at-risk customers and implement retention strategies.

Here are some other migrations that you may need:

- MySQL on Amazon RDS to Databricks

- MySQL on Amazon RDS to Redshift

- MySQL on Amazon RDS to Snowflake

- MySQL on Amazon RDS to Snowflake

Conclusion

With BigQuery’s advanced data warehousing and analytical capabilities, you can run several advanced analytics on the centralized MySQL on Amazon RDS data. Establishing a MySQL on Amazon RDS to BigQuery ETL pipeline enables seamless data migration and critical analysis from multiple sources. This, in turn aids in improved decision-making to accelerate business growth.

The two methods for MySQL on Amazon RDS to BigQuery migration are using CSV export/import and using no-code tools. The CSV file export/import method is a labor-intensive process requiring multiple manual steps. On the other hand, no-code tools, like Hevo Data, simplify the integration process by building fully automated and managed ETL pipelines.

Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

Frequently Asked Questions (FAQs)

1. How to transfer data from MySQL to BigQuery?

You can transfer data from MySQL to BigQuery using ETL tools like Hevo, which allows real-time data migration with no coding required. Alternatively, you can export MySQL data to CSV and import it to BigQuery.

2. How to connect BigQuery to MySQL database?

To connect BigQuery to MySQL, you can use tools like Hevo, which offer seamless integration. Otherwise, you can use Google Cloud Dataflow or manually extract and upload data using CSV or other file formats.

3. Is Amazon RDS compatible with MySQL?

Yes, Amazon RDS fully supports MySQL. You can use Amazon RDS to host MySQL databases with ease and leverage its management features like backups, scaling, and security.