Easily move your data from MySQL on Amazon RDS to Databricks to enhance your analytics capabilities. With Hevo’s intuitive pipeline setup, data flows in real time.

Are you looking to migrate your data from MySQL on Amazon RDS to Databricks? If so, you’ve come to the right place. Migrating data from MySQL on Amazon RDS to Databricks can be a complex process, especially when handling large datasets and different database structures. However, moving data from MySQL on Amazon RDS to Databricks can help you leverage analytical capabilities, obtain better insights, and enhance reporting efficiency.

MySQL on Amazon RDS is a fully managed, cloud-based database service that offers scalability, high availability, and automated backups for seamless data management. Databricks is a unified data analytics platform that combines data engineering, data science, and machine learning capabilities, empowering you to process and analyze large-scale data with ease and efficiency.

So, let’s look into the two popular methods for migrating data from MySQL on Amazon RDS to Databricks.

Method 1: Move Data from MySQL on Amazon RDS Using CSV Files

Export your data manually from MySQL on Amazon RDS into CSV files and then upload them to Databricks for further processing.

Method 2: Migrate from MySQL on Amazon RDS to Databricks Using Hevo

Use Hevo’s automated, no-code pipeline to seamlessly transfer data from MySQL on Amazon RDS to Databricks, ensuring real-time sync and pre/post-load transformations.

Method 1: Move Data from MySQL on Amazon RDS Using CSV Files

Using CSV files to load data from MySQL on Amazon RDS to Databricks involves the following steps:

Step 1: Export the Data on Amazon RDS MySQL into a CSV File

- Open the terminal or command prompt on your system. Enter the following command, replacing your_mysql_username with your actual MySQL username and your-host.eu-west-2.rds.amazonaws.com with the endpoint of your Amazon RDS MySQL instance:

    mysql -h your-host.eu-west-2.rds.amazonaws.com -u your_mysql_username -p

Press Enter, and you will be prompted for your MySQL password. Type your password and press Enter. You will then have access to the MySQL command-line interface, where you can start executing MySQL commands.

- To export your MySQL data from Amazon RDS into a CSV file, run the following command from your system shell (not from the MySQL prompt):

    mysql your_database --user=your_username --password=your_password --host=your-host.eu-west-2.rds.amazonaws.com --batch -e "SELECT * FROM table_name" | sed 's/\t/","/g;s/^/"/;s/$/"/;s/\n//g' > path/output.csv

Replace your_database, your_username, your_password, and table_name with your actual details. Ensure you provide the correct file path where you want to save the CSV file. Additionally, replace your-host.eu-west-2.rds.amazonaws.com with the specific endpoint of your Amazon RDS MySQL instance.

- Once you execute the command, your MySQL data on Amazon RDS will be saved in the output.csv file at the specified location on your system.
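
The sed pipeline above wraps every field in quotes, but it does not escape double quotes that occur inside values, and it leaves in place the backslash escapes that mysql --batch emits for newline, tab, NUL, and backslash. As a possible alternative, the following Python sketch converts the tab-separated output to CSV with the standard csv module. The function names unescape_field and tsv_to_csv are illustrative only and are not part of any MySQL tooling; NULL values arrive as the literal text NULL and are passed through unchanged.

```python
import csv

# Escape sequences that `mysql --batch` writes inside field values.
_ESCAPES = {"n": "\n", "t": "\t", "0": "\0", "\\": "\\"}


def unescape_field(field):
    """Turn the backslash escapes of batch mode back into real characters."""
    out = []
    i = 0
    while i < len(field):
        ch = field[i]
        if ch == "\\" and i + 1 < len(field) and field[i + 1] in _ESCAPES:
            out.append(_ESCAPES[field[i + 1]])
            i += 2
        else:
            out.append(ch)
            i += 1
    return "".join(out)


def tsv_to_csv(tsv_lines, out_file):
    """Write each tab-separated line as a fully quoted CSV row.

    Returns the number of rows written (including the header row).
    """
    writer = csv.writer(out_file, quoting=csv.QUOTE_ALL)
    count = 0
    for line in tsv_lines:
        fields = line.rstrip("\n").split("\t")
        writer.writerow([unescape_field(f) for f in fields])
        count += 1
    return count
```

To use it in place of sed, pipe the output of the mysql command into a small script that calls tsv_to_csv(sys.stdin, sys.stdout) and redirect the result to path/output.csv.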

Step 2: Import the MySQL on Amazon RDS CSV File into Databricks

- Log in to your Databricks account. Go to the Data tab and click on Add Data to begin the process of adding new data to your Databricks workspace.

- Now, you can click the file browser button to open a file browser window. Use it to navigate through your local storage and select the files you want to import. Alternatively, you can directly drag and drop the files onto the Databricks workspace.

- After uploading the CSV file or files, Databricks will automatically process the data and display a preview. You can now create your own table to store this data.
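
The steps above use the Databricks UI. If the CSV file is already in storage that your workspace can read, a notebook can perform a similar load. The following is a minimal sketch, not the only way to do it: it assumes spark is the SparkSession that Databricks notebooks provide, and the function name, file path, and table name are hypothetical placeholders.

```python
def load_csv_to_table(spark, csv_path, table_name):
    """Read a CSV file that has a header row and save it as a table."""
    df = (
        spark.read
        .option("header", True)       # first line holds the column names
        .option("inferSchema", True)  # let Spark infer column types
        .csv(csv_path)
    )
    # "overwrite" replaces the table if it already exists.
    df.write.mode("overwrite").saveAsTable(table_name)
    return df


# Example call inside a notebook (path and table name are placeholders):
# df = load_csv_to_table(spark, "/path/to/output.csv", "rds_mysql_export")
```

Schema inference requires an extra pass over the file, so for large exports it may be preferable to supply an explicit schema instead.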

Using CSV files for data migration is indeed a time-consuming approach. However, the manual approach is particularly well-suited for one-time migrations. For occasional or infrequent data transfers, manual transfer can often be more straightforward compared to setting up complex automated processes.

While the manual method offers certain benefits, it also has several limitations. Some of these limitations include:

- Lack of Real-Time Integration: Manually transferring data from Amazon RDS MySQL to Databricks using CSV files provides no real-time integration. Any changes made in the MySQL database after the last CSV transfer will not be reflected in Databricks until a new export is uploaded manually.

- Inability to Handle Large Data Volumes: Using the CSV-based method to transfer data from Amazon RDS MySQL to Databricks is not ideal for handling large datasets. It is a time-consuming and resource-intensive process, making the migration less efficient and causing data transfer delays.

- Data Security Concerns: Storing the CSV files temporarily on the local system during migration can raise security concerns, particularly when handling sensitive or confidential data. It’s essential to implement proper security measures like data encryption and access control.
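
As one small illustration of the access-control point above, the following Python sketch creates the temporary export file so that only its owner can read or write it, and removes it after upload. It assumes a POSIX system (the permission bits have limited effect on Windows), it does not encrypt the data, and the helper names open_private_export and remove_export are hypothetical.

```python
import os


def open_private_export(path):
    """Create `path` with owner-only permissions and return a text file object."""
    # O_EXCL makes the call fail if the file already exists,
    # so a file created earlier with looser permissions is never reused.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    return os.fdopen(fd, "w", newline="")


def remove_export(path):
    """Delete the temporary export once it has been uploaded."""
    if os.path.exists(path):
        os.remove(path)
```

A typical flow would write the CSV through the returned file object, upload it to Databricks, and then call remove_export on the same path.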

Method 2: Automating the Data Replication Process Using a No-Code Tool

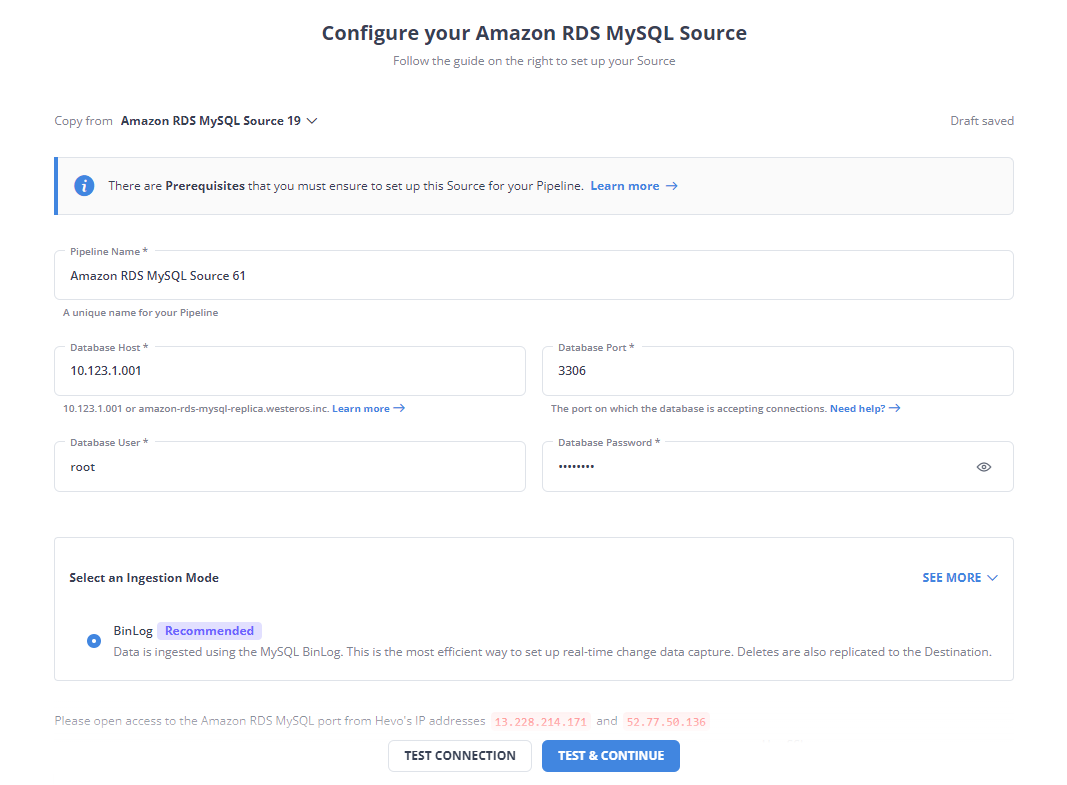

Step 1: Configure MySQL on Amazon RDS as Source

- Step 1.1: Click PIPELINES in the Asset Palette.

- Step 1.2: Next, click +CREATE in the Pipelines List View.

- Step 1.3: Then, on the Select Source Type page, select Amazon RDS MySQL.

- Step 1.4: On the Configure your Amazon RDS MySQL Source page, enter the required connection settings.

- Step 1.5: Click TEST & CONTINUE.

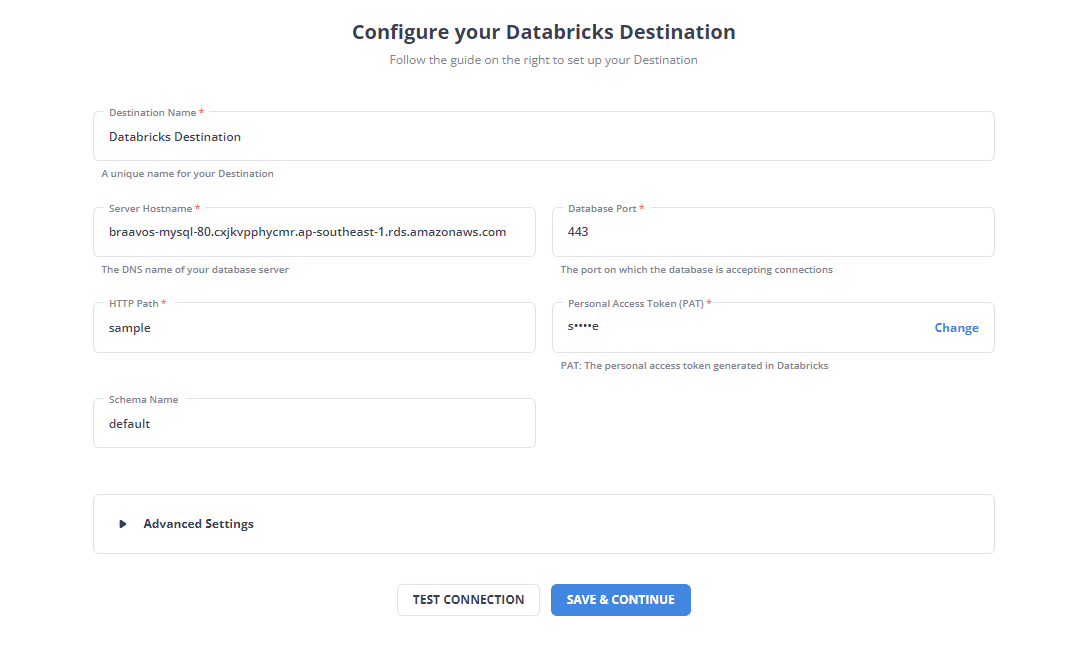

Step 2: Configure Databricks as Destination

- Step 2.1: In the Asset Palette, select DESTINATIONS.

- Step 2.2: In the Destinations List View, click + CREATE.

- Step 2.3: Select Databricks from the Add Destination page.

- Step 2.4: Set the required parameters on the Configure your Databricks Destination page.

- Step 2.5: To test connectivity with the Databricks warehouse, click Test Connection.

- Step 2.6: When the test is complete, select SAVE DESTINATION.

Your migration from Amazon RDS to Databricks is now complete.

Here are some of the benefits of using Hevo’s no-code tool for seamless data integration between MySQL on Amazon RDS and Databricks:

- Pre-Built Connectors: Hevo offers over 150 ready-to-use pre-built integrations, enabling easy connections to various data sources, including popular SaaS applications, payment gateways, advertising platforms, and analytics tools.

- Drag-and-Drop Transformations: Hevo’s user-friendly platform allows effortless basic data transformations like filtering and mapping with simple drag-and-drop actions. For more complex transformations, Hevo provides Python and SQL capabilities to cater to your specific business needs.

- Real-Time Data Replication: Hevo uses Change Data Capture (CDC) technology for real-time MySQL on Amazon RDS to Databricks ETL. This ensures that your Databricks database is always up-to-date without impacting the performance of your Amazon RDS database.

- Live Support: Hevo Data provides 24/7 support via email, chat, and voice calls, ensuring you have access to dedicated assistance whenever you require help with your integration project.

What Can You Achieve by Migrating Data From MySQL on Amazon RDS to Databricks?

Here are some of the analyses you can perform after MySQL on Amazon RDS to Databricks integration:

- Explore the various stages of your sales funnel to uncover valuable insights.

- Unlock deeper customer insights by analyzing every email touchpoint.

- Analyze employee performance data from Human Resources to understand your team’s performance, behavior, and efficiency.

- Integrate transactional data from different functional groups (Sales, Marketing, Product, Human Resources) to answer cross-team questions. For example:

- Measure the ROI of different marketing campaigns to identify the most cost-effective ones.

- Monitor website traffic data to identify the most popular product categories among customers.

- Evaluate customer feedback and sentiment analysis to understand overall customer satisfaction levels.

See how to connect PostgreSQL on Amazon RDS to Databricks for improved data processing. Explore our guide for easy setup and optimized performance.

Here are some other migrations that you may need:

- Connect MySQL on Amazon RDS to BigQuery

- MySQL on Amazon RDS to Snowflake

- MySQL on Amazon RDS to Redshift

- GCP MySQL to Databricks

- Amazon RDS to Databricks

Conclusion

When it comes to integrating MySQL data on Amazon RDS with Databricks, both the manual approach and using a no-code tool have their advantages. While the manual CSV-based method provides certain benefits, such as one-time migration capabilities and no third-party tool dependency, it also has limitations like a lack of real-time synchronization, scalability issues, and data security risks.

However, for organizations seeking a simplified and efficient data integration solution, Hevo Data is an ideal choice with its user-friendly interface, pre-built connectors, real-time data streaming, and data transformation capabilities. Hevo Data empowers you to seamlessly integrate and analyze data from MySQL on Amazon RDS to Databricks, enabling you to make data-driven decisions with ease.

Want to take Hevo for a spin? SIGN UP for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

FAQ on MySQL on Amazon RDS to Databricks

How to connect RDS to Databricks?

To connect Amazon RDS to Databricks:

1. Ensure the RDS instance is publicly accessible or accessible from the VPC where Databricks is deployed.

2. Obtain the RDS endpoint, username, password, and database details.

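3. Use JDBC to connect, like so:

    # JDBC URL built from the RDS endpoint and database name
    jdbc_url = "jdbc:mysql://<rds-endpoint>:3306/<database>"
    connection_properties = {
        "user": "your-username",
        "password": "your-password",
        # Driver class name used by MySQL Connector/J 8.x
        "driver": "com.mysql.cj.jdbc.Driver"
    }
    # Read the table into a Spark DataFrame
    df = spark.read.jdbc(jdbc_url, "<table-name>", properties=connection_properties)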
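
Step 1 of this answer depends on network reachability, which can be checked before debugging JDBC settings. The following Python sketch attempts a plain TCP connection to the MySQL port. The function name can_reach is hypothetical, and a successful connection only shows that the port is open from where the code runs; it does not validate credentials.

```python
import socket


def can_reach(host, port=3306, timeout=5.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


# Example call from a Databricks notebook cell (endpoint is a placeholder):
# can_reach("<rds-endpoint>")
```

If this returns False from a notebook, the usual suspects are the RDS security group rules or the network path between the Databricks deployment and the RDS instance.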

How to connect MySQL database to Databricks?

To connect MySQL to Databricks:

1. Install the MySQL JDBC driver on your Databricks cluster.

2. Use the JDBC connection string and specify MySQL database details:

    jdbc_url = "jdbc:mysql://<mysql-host>:3306/<database>"
    connection_properties = {
        "user": "your-username",
        "password": "your-password",
        "driver": "com.mysql.cj.jdbc.Driver"
    }
    df = spark.read.jdbc(jdbc_url, "<table-name>", properties=connection_properties)
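
Both answers above hardcode the connection details. One way to keep them in a single place is a small helper that builds the URL and properties from parameters; this is a sketch, and build_mysql_jdbc_config is a hypothetical name rather than a Databricks or Spark API.

```python
def build_mysql_jdbc_config(host, database, user, password, port=3306):
    """Return (jdbc_url, connection_properties) suitable for spark.read.jdbc."""
    jdbc_url = f"jdbc:mysql://{host}:{port}/{database}"
    connection_properties = {
        "user": user,
        "password": password,
        "driver": "com.mysql.cj.jdbc.Driver",  # MySQL Connector/J 8.x class name
    }
    return jdbc_url, connection_properties


# Example use in a notebook (all values are placeholders):
# jdbc_url, props = build_mysql_jdbc_config("<mysql-host>", "<database>", user, password)
# df = spark.read.jdbc(jdbc_url, "<table-name>", properties=props)
```

On Databricks, the password can be read at run time with dbutils.secrets.get(scope=..., key=...) instead of being written into the notebook; the scope and key names depend on your workspace.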

Is Amazon RDS compatible with MySQL?

Yes, Amazon RDS is compatible with MySQL. You can run MySQL as one of the database engines in RDS, allowing you to leverage MySQL features with managed services such as backups, scaling, and monitoring.