Oracle data load is an essential process for organizations that need to import and manage large volumes of data in Oracle databases. It keeps Oracle Cloud applications, Oracle E-Business Suite (EBS), and Oracle Autonomous Database up to date with the latest data.

Various tools with user-friendly interfaces can streamline this process. They simplify loading new and updated data and configurations into Oracle databases, which can reduce the time and cost of these activities. These tools handle everything from simple data entry to complex data migration projects.

This guide highlights Oracle’s own data load tool and other external tools for loading data into Oracle databases from external sources.


Understanding Oracle Data Load

Oracle Data Load is a tool for loading data from various external sources into an Oracle database. Using the Data Load dashboard in Oracle Database Actions, you can load data from local files, cloud storage, or connected file systems. The Data Load page lets you load, link, and feed data into your Oracle Autonomous Database.

For faster data loading, Oracle recommends uploading source files to Oracle Cloud Infrastructure (OCI) Object Storage before loading the data into your database.

How to Import Data into Your Oracle Database Using the Oracle Data Load Dashboard

Here are the steps to import data from different sources into your Oracle database:

Step 1: Access the Data Load Dashboard Page

- Launch Database Actions from within Oracle Autonomous Database Cloud Services.

- Navigate to the Data Studio home page from the Database Actions home page.

- Click Data Load in the left navigation pane of the Data Studio page.

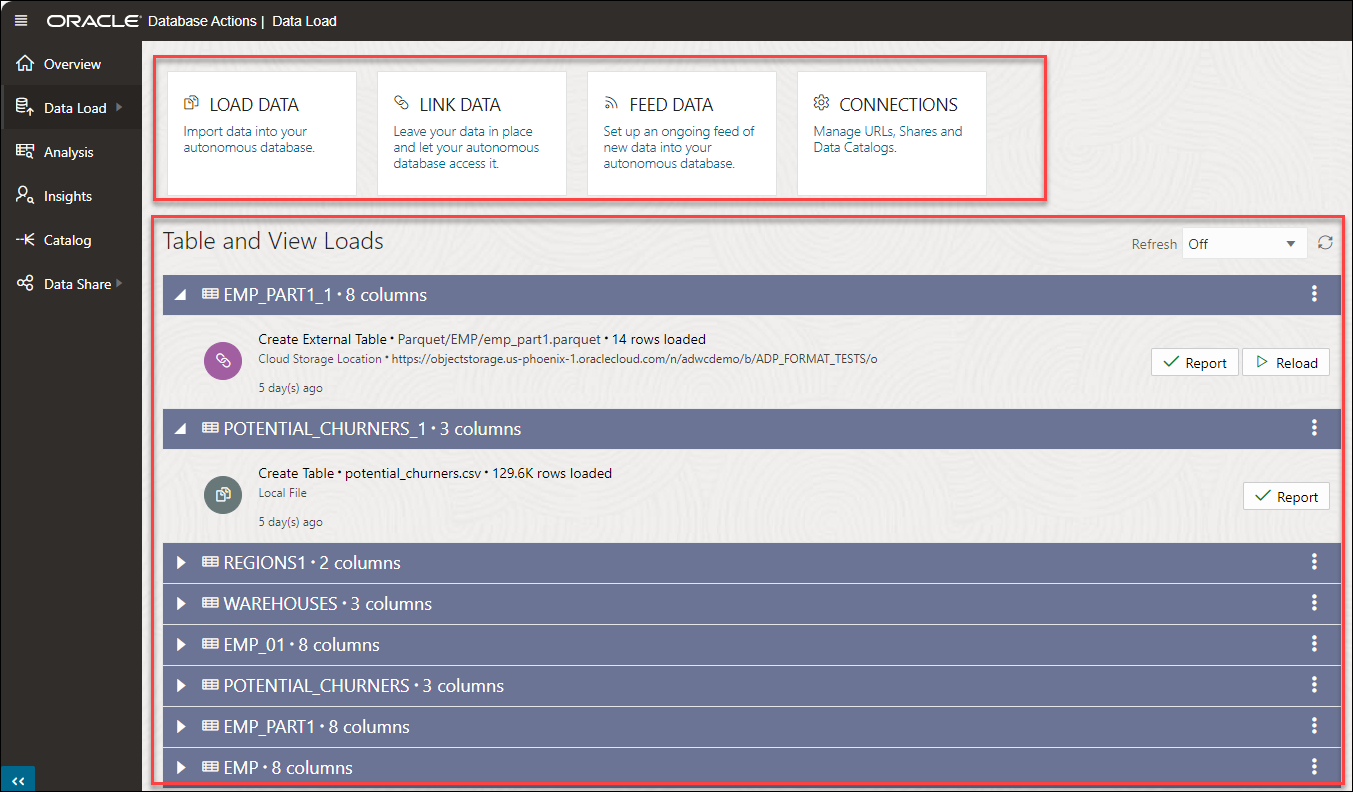

- The top section of the Data Load dashboard displays four options: LOAD DATA, LINK DATA, FEED DATA, and CONNECTIONS.

Step 2: Loading Data into Oracle Autonomous Database

You can import data from the following data sources into your Oracle Autonomous Database:

- Loading Data from Local Files and Databases

- Choose LOAD DATA > LOCAL FILE from the Data Load page and perform one of the following:

- Drag one or more files from your local system and drop them into the Data Load Cart.

- Click Select Files, choose the desired files, and click Open to add them to the Data Load Cart.

- Use the Settings icon next to a file in the Data Load Cart and specify the required processing options.


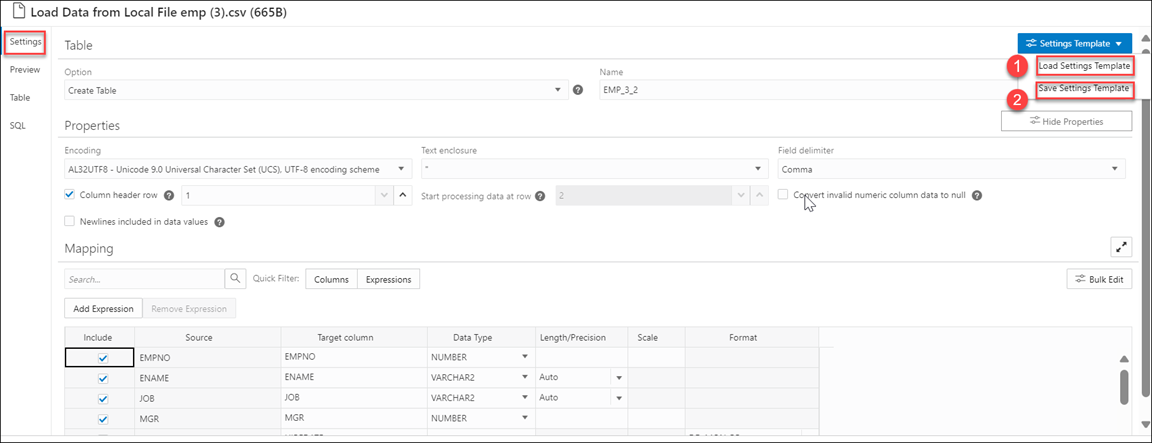

- Open the Settings Template and choose Save Settings Template to save the configuration settings in JSON format. Alternatively, click Load Settings Template to use an existing template from your local system.

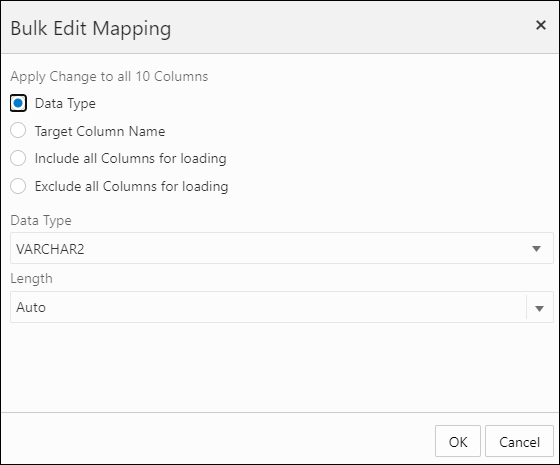

- To modify all columns in the mapping table at once, click the Bulk Edit icon.

- Use the Quick Filter field to filter the results in the mapping table based on Columns or Expressions.

- Preview the source and target tables by clicking the Load Preview tab.

- In the Data Load Cart menu, click the Start icon to open the Run Data Load Job dialog box.

- Click Start in the Run Data Load Job dialog box to begin execution.

- To stop the data load job at any time, click Stop.

- When the data load job completes, the Data Load dashboard page displays the results.

- Click Report to view the number of processed and rejected rows and the start time.

For more information, refer to the Oracle documentation for loading data from local files.

To load data from a database, follow the same steps, but choose DATABASE instead of LOCAL FILE on the LOAD DATA page.
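The dashboard handles these loads through its UI. If you need a scripted equivalent, the following is a minimal Python sketch, not an Oracle tool. It assumes the target table already exists, the CSV file has a header row, and `conn` is any DB-API 2.0 connection that accepts named binds (the python-oracledb driver does; the demo uses the standard library's sqlite3 only as a stand-in). The `load_csv` function and its `mapping` argument are hypothetical names that loosely mirror the Data Studio mapping table and the processed/rejected counts in the job report.

```python
import csv
import io
import sqlite3
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical mapping: source CSV header -> (target column, value converter).
Mapping = Dict[str, Tuple[str, Callable[[str], object]]]


def load_csv(conn, table: str, lines: Iterable[str], mapping: Mapping,
             batch_size: int = 1000) -> Dict[str, int]:
    """Insert mapped CSV rows in batches and report processed/rejected counts.

    Table and column names are interpolated into the SQL text, so they must
    come from trusted configuration, never from the data file itself.
    """
    columns = [target for target, _ in mapping.values()]
    sql = (f"INSERT INTO {table} ({', '.join(columns)}) "
           f"VALUES ({', '.join(':' + c for c in columns)})")
    report = {"processed": 0, "rejected": 0}
    batch = []
    cursor = conn.cursor()
    for record in csv.DictReader(lines):
        try:
            # Rename each source column and convert its value.
            row = {target: convert(record[source])
                   for source, (target, convert) in mapping.items()}
        except (KeyError, TypeError, ValueError):
            report["rejected"] += 1  # Unconvertible row: count it and move on.
            continue
        batch.append(row)
        if len(batch) >= batch_size:
            cursor.executemany(sql, batch)  # One round trip per batch.
            report["processed"] += len(batch)
            batch.clear()
    if batch:  # Flush the final partial batch.
        cursor.executemany(sql, batch)
        report["processed"] += len(batch)
    conn.commit()
    return report


if __name__ == "__main__":
    # Demo against an in-memory SQLite table; with python-oracledb you would
    # pass an oracledb connection instead.
    demo = sqlite3.connect(":memory:")
    demo.execute("CREATE TABLE employees (emp_id INTEGER, name TEXT)")
    data = io.StringIO("id,full_name\n1,Ada\nx,Bob\n3,Cy\n")
    print(load_csv(demo, "employees", data,
                   {"id": ("emp_id", int), "full_name": ("name", str)}))
    # {'processed': 2, 'rejected': 1}
```

Batching with `executemany` keeps the number of database round trips low, which matters far more for large files than per-row tuning.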

- Loading Data from Cloud Storage

- Launch Database Actions and navigate to the Data Load page.

- Select LOAD DATA > Cloud Store and click Create Cloud Store Location to establish a connection with your chosen cloud storage. For details, see the Oracle documentation on managing cloud storage links for data load jobs.

- Prepare your source data and, if you are loading into an existing table, the target table so that the data load job runs correctly.

- Add the desired number of files or folders from the cloud store to the cart.

- Enter the data load job details in the Load Data from Cloud Storage pane. Then click Actions > Settings.

- Configure the Table, Properties, and Mappings sections of the Settings tab, along with the Settings Template and Bulk Edit settings, as you would when loading data from local files.

- The Preview tab in the Load Preview menu shows the source data in tabular form.

- In the Load Data from Cloud Storage pane, save your configurations by clicking the Close button.

- In the Data Load Cart menu, click the Start icon to begin the data load job.

- In the Run Data Load Job dialog box that appears, click Start.

To learn more about loading data from cloud storage, read the Oracle documentation on loading from cloud storage.

To load data from a file system, follow the same steps, but choose File System instead of Cloud Store on the LOAD DATA page.
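Outside the dashboard, Autonomous Database also provides the `DBMS_CLOUD` PL/SQL package, whose `COPY_DATA` procedure loads files from Object Storage into an existing table. The Python sketch below calls it through python-oracledb's `Cursor.callproc`; it is an illustration, not necessarily the mechanism Data Studio uses. It assumes a credential (named by `credential_name`) was created beforehand, for example with `DBMS_CLOUD.CREATE_CREDENTIAL`, and that the files are CSV with one header row. The table name, credential name, and URI in the usage comment are placeholders.

```python
import json
from typing import List


def copy_from_object_storage(cursor, table_name: str, credential_name: str,
                             file_uris: List[str], skip_headers: int = 1) -> None:
    """Load CSV objects into an existing table with DBMS_CLOUD.COPY_DATA.

    `cursor` is a python-oracledb cursor (or anything with the same
    callproc signature). Named arguments map to the procedure's parameters.
    """
    # COPY_DATA takes its format options as a JSON document.
    fmt = {"type": "csv", "skipheaders": str(skip_headers)}
    cursor.callproc(
        "DBMS_CLOUD.COPY_DATA",
        keyword_parameters={
            "table_name": table_name,
            "credential_name": credential_name,
            # file_uri_list is a single comma-separated string of URIs.
            "file_uri_list": ",".join(file_uris),
            "format": json.dumps(fmt),
        },
    )


# Usage sketch (requires python-oracledb and network access to the database;
# every name and URI below is a placeholder):
#
#   import oracledb
#   with oracledb.connect(user="ADMIN", password="...", dsn="mydb_high") as conn:
#       copy_from_object_storage(
#           conn.cursor(), "SALES", "OBJ_STORE_CRED",
#           ["https://objectstorage.us-ashburn-1.oraclecloud.com"
#            "/n/my_namespace/b/my_bucket/o/sales.csv"])
```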

- Loading Data from AI Source

Before you begin, configure your AI profile. You can then load data from an AI source with the Data Studio tools as follows:

- Launch Database Actions.

- From the Data Load dashboard, choose Load Data > AI Source.

To run the data load job with AI source data, follow the same steps as for loading data from cloud storage.

- Loading Data from Share Provider

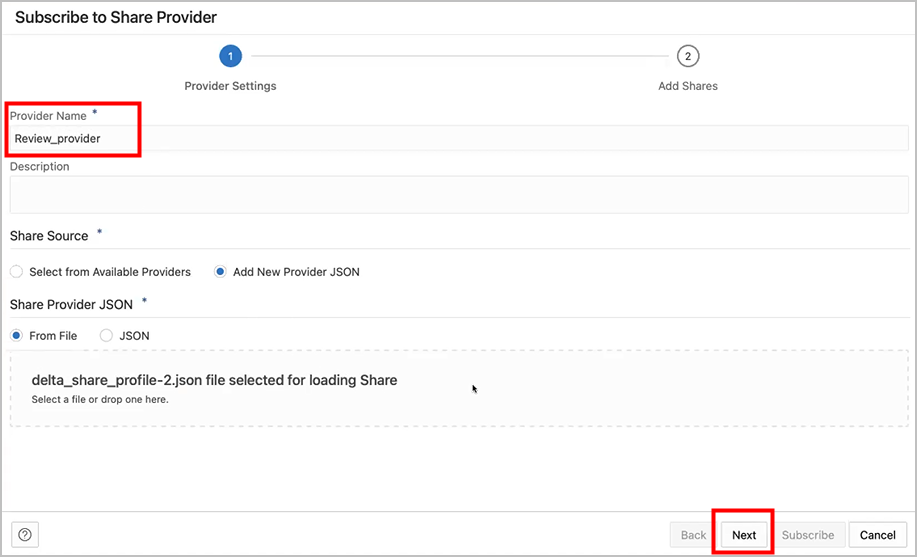

- On the Data Load dashboard, click Load Data > Share.

- Click + Subscribe to Share Provider and specify the required information.

- Use the Share REST endpoint to select the network access level from your database to the host, then click Run.

- Register the shares available to you by moving them from Available Shares to Selected Shares, then click Subscribe.

- Create external tables based on the tables selected from the data share.

- Click the SQL tile in Database Actions and select the external table.

- Drag the external table into the worksheet. A SQL SELECT statement for the table appears automatically; run it to query the shared data.

Tools to Perform Data Load in Different Oracle Interfaces

Let’s examine a few tools for loading data into other Oracle environments, such as Oracle CRM On Demand, Oracle Cloud applications, and Oracle EBS.

1. Oracle Data Loader On Demand

The Oracle Data Loader On Demand client helps you import data into Oracle Customer Relationship Management (CRM) On Demand from external sources. It supports the following operations:

- Insert Operation

The insert operation involves adding records from an external file to Oracle CRM On Demand.

- Update Operation

The update operation helps you modify existing records in Oracle CRM On Demand using data from an external source. The records in the external source must have a unique External System ID or Row ID to match records in Oracle CRM On Demand. Since these identifiers are used to locate the target record, the External System ID and Row ID fields cannot be modified using Oracle Data Loader On Demand.

- Upsert Operation

The upsert operation combines the Insert and Update functionalities. It checks if an external record already exists in the Oracle CRM On Demand database and updates it if present or inserts it if not.
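The three operations differ only in how they treat a record whose key already exists in the target. The Python sketch below models that logic on an in-memory dictionary keyed by External System ID; it is not the Data Loader On Demand client or its API. The names `apply_operation` and `external_system_id` are hypothetical, and counting an insert of an existing key (or an update of a missing one) as rejected is an assumption made for illustration; the real client may handle such records differently.

```python
from typing import Dict, List

Record = Dict[str, object]
OPERATIONS = ("insert", "update", "upsert")


def apply_operation(target: Dict[object, Record], incoming: List[Record],
                    operation: str, key: str = "external_system_id") -> Dict[str, int]:
    """Apply insert, update, or upsert semantics to `target`, keyed on `key`."""
    if operation not in OPERATIONS:
        raise ValueError(f"unknown operation: {operation!r}")
    counts = {"inserted": 0, "updated": 0, "rejected": 0}
    for record in incoming:
        record_id = record[key]
        exists = record_id in target
        if exists and operation in ("update", "upsert"):
            # The key locates the record, so it is never overwritten.
            changes = {k: v for k, v in record.items() if k != key}
            target[record_id].update(changes)
            counts["updated"] += 1
        elif not exists and operation in ("insert", "upsert"):
            target[record_id] = dict(record)
            counts["inserted"] += 1
        else:
            # Insert of an existing key, or update of a missing one.
            counts["rejected"] += 1
    return counts


if __name__ == "__main__":
    accounts = {"A1": {"external_system_id": "A1", "name": "Acme"}}
    batch = [{"external_system_id": "A1", "name": "Acme Corp"},
             {"external_system_id": "B2", "name": "Beta Ltd"}]
    print(apply_operation(accounts, batch, "upsert"))
    # {'inserted': 1, 'updated': 1, 'rejected': 0}
```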

2. DataLoad

DataLoad is a data entry tool that enables your organization’s team members to load new or updated data and configuration into Oracle Cloud applications. Its easy-to-use interface reduces the time and cost of loading configuration and data.

Here are some key features of DataLoad:

- Available in Classic and Professional editions, covering everything from simple to highly complex data loading tasks.

- Fully supports the Unicode standard, so DataLoad can handle any language your PC supports.

- Loads data into Self Service (browser-based) forms, professional forms, and directly into databases.

3. Forms Data Loader

Forms Data Loader (FDL) is a data load tool that helps you cost-effectively import data from Excel or CSV files into Oracle Apps 11i/R12 via front-end forms. While primarily built for Oracle, FDL can also load data into any Windows-based application form, such as SAP.

Because data is loaded through the front end, FDL doesn’t require special technical expertise, which makes it accessible to non-technical users.

Here are a few key features of Forms Data Loader:

- Reduces data entry errors, because the Oracle application forms verify data as it is entered.

- It enables rapid loading of hundreds of rows into the target applications.

- It comes with pre-built sample spreadsheet templates to help you learn how to build data loads.

How to Optimize Large Oracle Data Loads

When optimizing a large Oracle data load, size the entire environment to handle the load effectively. Consider these key factors:

- Verify the client configuration to ensure that the data is transferred at an optimal speed.

- Inspect the data thoroughly to avoid errors.

- Check for data quality issues, such as validating required fields and pick list values.

- Ensure the log level is tailored to the specific load, avoiding unnecessary logging.

- Conduct test runs and gather metrics for each test run.

- Reconfigure the environment iteratively to obtain the desired throughput rates.

- For better performance during the load, temporarily deactivate workflows that can be deferred.
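Two of these practices, checking required fields and pick list values and gathering metrics from test runs, are easy to automate before a full load. The following is a minimal Python sketch, assuming rows arrive as dictionaries of strings; `validate_rows`, `measure_throughput`, and the example field names are hypothetical and not part of any Oracle tool.

```python
import time
from typing import Callable, Dict, Iterable, List, Set, Tuple

Row = Dict[str, str]


def validate_rows(rows: Iterable[Row], required: List[str],
                  picklists: Dict[str, Set[str]]) -> Tuple[List[Row], List[Tuple[int, List[str]]]]:
    """Split rows into loadable rows and (row number, problems) pairs."""
    valid, errors = [], []
    for row_no, row in enumerate(rows, start=1):
        problems = [f"missing required field '{field}'"
                    for field in required if not row.get(field)]
        for field, allowed in picklists.items():
            value = row.get(field)
            # Blank values are left to the required-field check above.
            if value and value not in allowed:
                problems.append(f"'{value}' is not a valid {field}")
        if problems:
            errors.append((row_no, problems))
        else:
            valid.append(row)
    return valid, errors


def measure_throughput(load: Callable[[List[Row]], None], rows: List[Row]) -> float:
    """Time one test run of `load` and return rows per second."""
    start = time.perf_counter()
    load(rows)
    elapsed = time.perf_counter() - start
    return len(rows) / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    sample = [{"name": "Acme", "status": "Active"},
              {"name": "", "status": "Active"},
              {"name": "Beta", "status": "Pending"}]
    good, bad = validate_rows(sample, ["name"], {"status": {"Active", "Inactive"}})
    print(len(good), bad)
```

Wrap whatever actually performs the load in `measure_throughput` and record the result for each test run, so you can compare throughput after each reconfiguration.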

How Does Hevo Help in the Oracle Data Load Process?

As your organizational data grows, consider consolidating new data into an Oracle database. Hevo Data enables you to efficiently extract, load, and transform up-to-date data into your Oracle database.

Here are some key features of Hevo that are helpful in the Oracle data load process:

- Data Transformation: Hevo provides two analyst-friendly transformation features: Python-based scripts and drag-and-drop blocks. These help you clean and standardize your data according to your specific requirements before it is loaded into Oracle, so the data arrives in the right format for analysis and reporting.

- Incremental Data Load: Hevo Data allows the near-real-time transfer of up-to-date data, ensuring efficient bandwidth utilization on both ends of the data pipeline. Instead of reloading entire datasets, you can load only new or modified data into the Oracle database, saving time.

- Automatic Schema Management: Hevo Data’s auto-mapping enables you to reduce the need for manual schema management. This feature automatically identifies the structure of the source schema and replicates it to the target Oracle database schema. This reduces the risk of schema mismatches and data loading errors. Depending on your replication requirements, you can utilize full or incremental mappings.
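How Hevo tracks changes internally is not described here, so the following Python sketch shows a generic, swapped-in technique for the incremental-load idea above rather than Hevo's implementation: a timestamp watermark. Each run selects only the rows whose `updated_at` value is later than the highest value seen in the previous run, then merges them into the target by key. The function names, the `id` key, and the `updated_at` column are hypothetical.

```python
from datetime import datetime
from typing import Dict, List, Optional, Tuple

Row = Dict[str, object]


def changed_since(rows: List[Row], watermark: Optional[datetime],
                  ts_field: str = "updated_at") -> Tuple[List[Row], Optional[datetime]]:
    """Return rows modified after `watermark` and the new watermark.

    A watermark of None means there was no previous run, so every row is a
    change (the initial full load).
    """
    if watermark is None:
        changes = list(rows)
    else:
        changes = [row for row in rows if row[ts_field] > watermark]
    new_watermark = max((row[ts_field] for row in changes), default=watermark)
    return changes, new_watermark


def merge_into(target: Dict[object, Row], changes: List[Row], key: str = "id") -> None:
    """Upsert each changed row into the target, keyed by `key`."""
    for row in changes:
        target[row[key]] = dict(row)


if __name__ == "__main__":
    source = [
        {"id": 1, "status": "new", "updated_at": datetime(2024, 5, 1, 9, 0)},
        {"id": 2, "status": "new", "updated_at": datetime(2024, 5, 1, 9, 5)},
    ]
    target: Dict[object, Row] = {}
    changes, mark = changed_since(source, None)   # First run: full load.
    merge_into(target, changes)
    source[0] = {"id": 1, "status": "shipped", "updated_at": datetime(2024, 5, 1, 10, 0)}
    changes, mark = changed_since(source, mark)   # Later run: deltas only.
    merge_into(target, changes)
    print(len(changes), target[1]["status"], mark)
    # 1 shipped 2024-05-01 10:00:00
```

A strict `>` comparison can miss rows that share the watermark timestamp but are committed after the run, and timestamps never reveal deleted rows; these are common reasons pipelines use log-based change data capture instead.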


Conclusion

By leveraging Oracle data load tools, your organization can maintain vast datasets within Oracle databases and keep your applications accurate and up to date. Whether you are loading data into Oracle Cloud applications, Oracle EBS, or an Autonomous Database, these tools streamline the process, reduce costs, and minimize errors.


Alternative tools such as DataLoad, Oracle Data Loader On Demand, and Forms Data Loader can simplify importing data from various sources into Oracle applications and databases. With Hevo Data, you can also build a data pipeline that automates data extraction from multiple databases and refines the data before loading it into your Oracle database.

Sign up for a 14-day free trial with Hevo; no credit card is required. Check out our unbeatable pricing or get a custom quote for your requirements.

Frequently Asked Questions (FAQs)

1. What is SQL*Loader?

SQL*Loader is a tool for importing data from external files into Oracle database tables. It features a robust data parsing engine that imposes minimal restrictions on the data format in the data file.
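SQL*Loader reads its instructions from a control file. As an illustration, the Python sketch below produces a basic control file for a comma-separated file with a header row. The helper `sqlldr_control_file` and the table, file, and column names are hypothetical, and real loads often need per-column data types or date masks that this sketch does not generate.

```python
from typing import List


def sqlldr_control_file(table: str, data_file: str, columns: List[str],
                        delimiter: str = ",", skip_header: bool = True) -> str:
    """Return the text of a basic SQL*Loader control file."""
    lines = []
    if skip_header:
        lines.append("OPTIONS (SKIP=1)")  # Skip the CSV header record.
    lines += [
        "LOAD DATA",
        f"INFILE '{data_file}'",
        "APPEND",  # Add rows even if the table already contains data.
        f"INTO TABLE {table}",
        f"FIELDS TERMINATED BY '{delimiter}' OPTIONALLY ENCLOSED BY '\"'",
        "TRAILING NULLCOLS",  # Treat missing trailing fields as NULL.
        "(" + ", ".join(columns) + ")",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(sqlldr_control_file("EMPLOYEES", "employees.csv",
                              ["EMPLOYEE_ID", "FIRST_NAME", "LAST_NAME"]))
    # Save the output as employees.ctl, then run for example:
    #   sqlldr USERID=hr CONTROL=employees.ctl LOG=employees.log
    # (sqlldr prompts for the password when it is not supplied.)
```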

2. What is Oracle Data Pump?

Oracle Data Pump enables high-speed transfer of data and metadata from one database to another. It consists of three components: the expdp and impdp command-line clients, the DBMS_DATAPUMP PL/SQL package (the Data Pump API), and the DBMS_METADATA PL/SQL package (the Metadata API).
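The expdp and impdp clients take their settings as `PARAMETER=value` arguments. The Python sketch below assembles a schema-mode export or import command as an argument list you could pass to `subprocess.run`. The helper name, user, schema, and file names are hypothetical, and `DATA_PUMP_DIR` is assumed to be a directory object the connecting user can access.

```python
from typing import List, Optional


def data_pump_command(mode: str, user: str, schema: str, dump_file: str,
                      directory: str = "DATA_PUMP_DIR",
                      remap_to: Optional[str] = None) -> List[str]:
    """Build an expdp/impdp argument list for a schema-mode job."""
    if mode not in ("export", "import"):
        raise ValueError("mode must be 'export' or 'import'")
    if remap_to and mode == "export":
        raise ValueError("REMAP_SCHEMA applies only to imports")
    args = [
        "expdp" if mode == "export" else "impdp",
        user,  # The client prompts for this user's password.
        f"DIRECTORY={directory}",  # Directory object for dump and log files.
        f"DUMPFILE={dump_file}",
        f"LOGFILE={schema.lower()}_{mode}.log",
        f"SCHEMAS={schema}",
    ]
    if remap_to:
        # Load the exported objects into a different schema.
        args.append(f"REMAP_SCHEMA={schema}:{remap_to}")
    return args


if __name__ == "__main__":
    print(" ".join(data_pump_command("export", "hr", "HR", "hr.dmp")))
    # expdp hr DIRECTORY=DATA_PUMP_DIR DUMPFILE=hr.dmp LOGFILE=hr_export.log SCHEMAS=HR
    print(" ".join(data_pump_command("import", "system", "HR", "hr.dmp",
                                     remap_to="HR_TEST")))
    # To execute: subprocess.run(data_pump_command(...), check=True)
```

Returning a list instead of a single string lets `subprocess.run` start the client without a shell, so file and schema names are never reinterpreted by shell quoting rules.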