You’re busy managing your organization’s data infrastructure when a new request comes in: replicate data from PagerDuty to Redshift for deeper analysis. Performing this manually can be challenging, but the good news is there are efficient ways to approach it.

In this article, you will explore two methods to integrate PagerDuty with Redshift: writing custom ETL scripts or using an automated tool to streamline the process. This guide will help you choose the right approach for your needs and ensure smooth data replication.


What is PagerDuty?

- PagerDuty is an incident management and on-call scheduling platform that helps teams detect critical issues, triage them, and resolve them in real time.

- It integrates with many monitoring tools to alert the right people when a problem occurs and automates response workflows, so teams can respond rapidly to outages or system failures.

- PagerDuty is used predominantly in DevOps and IT operations to improve uptime, reliability, and teamwork during incidents.

Method 1: Simplify PagerDuty To Redshift Connection Using Hevo

Hevo Data, an Automated Data Pipeline, provides a hassle-free solution to connect PagerDuty with Redshift within minutes with an easy-to-use, no-code interface. Hevo is fully managed and completely automates the process of not only loading data from PagerDuty but also enriching the data and transforming it into an analysis-ready form without having to write a single line of code.

Method 2: Using Custom Code to Move Data from PagerDuty to Redshift

This method is time-consuming and somewhat tedious to implement. You will have to write custom code for two processes: extracting data from PagerDuty and loading it into Redshift. This method is suitable for users with a technical background.

What is Redshift?

- Amazon Redshift is a cloud data warehouse service from Amazon Web Services (AWS). It’s commonly used for large-scale data storage and analysis and for large database migrations.

- Amazon Redshift uses columnar storage and massively parallel processing (MPP) to deliver fast query performance and scalable data processing.

- Redshift also integrates with a range of analytics tools, provides compliance features, and supports machine learning workloads (for example, through Amazon Redshift ML).

- Moving data from PagerDuty to Redshift lets businesses analyze incident data alongside data from their other systems in one place.

How to Connect PagerDuty to Redshift?

To integrate PagerDuty with Redshift, you can either use CSV files or a no-code automated solution.

Method 1: Simplify PagerDuty To Redshift Connection Using Hevo

Step 1: Configure PagerDuty as a Source

Step 2: Configure Redshift as a Destination

That’s it! You have connected PagerDuty to Redshift in just two steps. These were the only inputs required from your end; Hevo takes care of everything else. By default, it automatically replicates new and updated data from PagerDuty to Redshift every hour, and you can set a custom interval anywhere from 1 to 24 hours to suit your requirements.

Data Replication Frequency

| Default Pipeline Frequency | Minimum Pipeline Frequency | Maximum Pipeline Frequency | Custom Frequency Range (Hrs) |
| --- | --- | --- | --- |
| 1 Hr | 1 Hr | 24 Hrs | 1-24 Hrs |

You can also refer to Hevo’s official documentation for PagerDuty as a source and Redshift as a destination for in-depth details about the process.

In minutes, you can complete this no-code, automated approach to connecting PagerDuty to Redshift using Hevo and start analyzing your data.

Why Integrate Automatically Using Hevo?

- Live Support: The support team is available round the clock to extend exceptional customer support through chat, email, and support calls.

- Data Transformation: Hevo provides a simple interface to cleanse, modify, and transform your data through drag-and-drop features and Python scripts. It can accommodate multiple use cases with its pre-load and post-load transformation capabilities.

- Schema Management: Hevo’s auto schema mapping feature automatically detects the schema of incoming data and maps it to the destination schema.

- Scalable Infrastructure: With the increase in the number of sources and volume of data, Hevo can automatically scale horizontally, handling millions of records per minute with minimal latency.

- Transparent pricing: You can select your pricing plan based on your requirements. You can adjust your credit limits and spend notifications for increased data flow.

Method 2: Using Custom Code to Move Data from PagerDuty to Redshift

In this method, you will learn how to integrate PagerDuty with Redshift using CSV files.

PagerDuty to CSV

Follow these steps to export PagerDuty reports as CSV files:

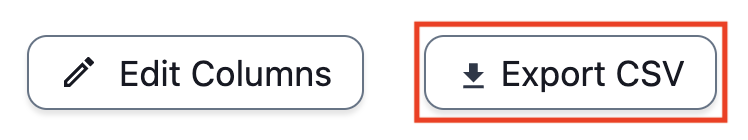

- Step 1: Click Export CSV to generate a file of the current view, with results determined by your search and filter selections.

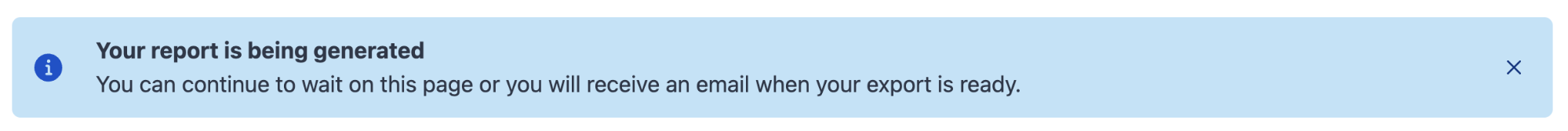

NOTE: Large reports, such as the Incident Activity Report, are generated in the background and delivered to the requesting user by email.

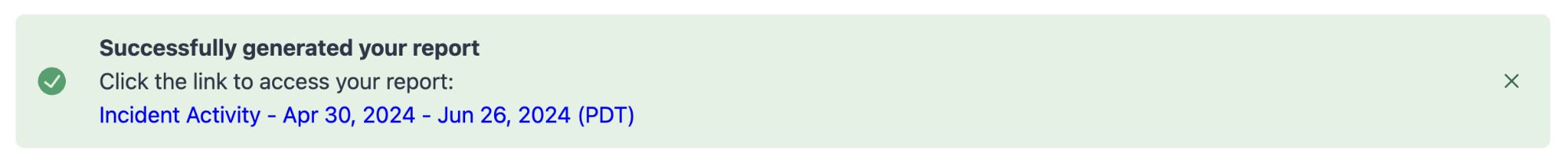

- Step 2: A success banner is shown on the Insights page until the report is delivered.

- Step 3: Click the link in the success banner or email to view your report in .csv format.
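If you need these exports on a schedule, the manual download can be replaced by a small script. The Python sketch below is one way to do it, assuming the PagerDuty REST API v2 `GET /incidents` endpoint with offset-based pagination (the response's `more` flag signals further pages) and an API token you supply. The chosen CSV columns are an illustrative subset, and the sketch sets no date-range or status filters, so check the PagerDuty API reference for the query parameters your report actually needs.

```python
import csv
import json
import urllib.parse
import urllib.request
from typing import Callable, Dict, Iterable, Iterator, TextIO

API_URL = "https://api.pagerduty.com/incidents"

# Illustrative subset of incident fields; nested objects (service, assignments) are skipped.
FIELDS = ["id", "incident_number", "title", "status", "urgency", "created_at"]


def fetch_page(api_token: str, offset: int, limit: int = 100) -> Dict:
    """Fetch one page of incidents from the PagerDuty REST API (v2)."""
    query = urllib.parse.urlencode({"offset": offset, "limit": limit})
    request = urllib.request.Request(
        f"{API_URL}?{query}",
        headers={
            "Authorization": f"Token token={api_token}",
            "Accept": "application/vnd.pagerduty+json;version=2",
        },
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def iter_incidents(get_page: Callable[[int], Dict]) -> Iterator[Dict]:
    """Yield incidents page by page until the API reports no more results."""
    offset = 0
    while True:
        page = get_page(offset)
        incidents = page.get("incidents", [])
        yield from incidents
        if not page.get("more") or not incidents:
            break
        offset += len(incidents)


def write_incidents_csv(incidents: Iterable[Dict], out: TextIO) -> int:
    """Write a header row plus one CSV row per incident; return the row count."""
    writer = csv.DictWriter(out, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    count = 0
    for incident in incidents:
        writer.writerow(incident)
        count += 1
    return count
```

To run it for real, you might open `incidents.csv` with `open("incidents.csv", "w", newline="", encoding="utf-8")` and pass it to `write_incidents_csv(iter_incidents(lambda off: fetch_page(token, off)), f)`, where `token` is your own PagerDuty REST API key. Passing the page fetcher in as a function keeps the pagination logic testable without network access.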

CSV to Redshift

Staging files in an Amazon S3 bucket is one of the most straightforward ways to load CSV files into Amazon Redshift. It takes two stages: first, upload the CSV files to S3; then, load the data from S3 into Amazon Redshift.

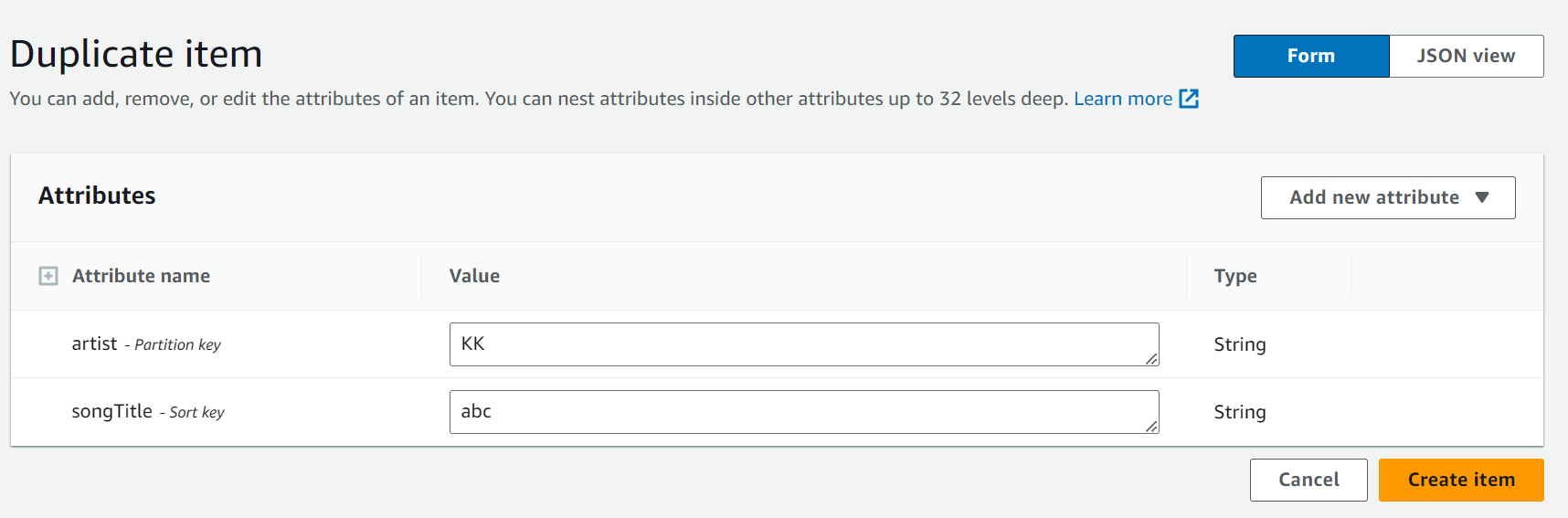
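The first stage, staging files in S3, can be scripted as well. The Python sketch below gzips local CSV exports, uploads them, and then uploads a Redshift COPY manifest: a JSON file whose `entries` list the S3 URL of each data file. It assumes a boto3-style S3 client (`upload_file(Filename, Bucket, Key)` and `put_object(Bucket=..., Key=..., Body=...)`); the bucket, prefix, and manifest object name are hypothetical placeholders.

```python
import gzip
import json
import os
import shutil
from typing import Any, Dict, List


def gzip_file(src_path: str) -> str:
    """Compress src_path into src_path + '.gz' and return the new path."""
    dst_path = src_path + ".gz"
    with open(src_path, "rb") as src, gzip.open(dst_path, "wb") as dst:
        shutil.copyfileobj(src, dst)
    return dst_path


def build_manifest(bucket: str, keys: List[str]) -> Dict[str, Any]:
    """Return a COPY manifest that lists the S3 URL of every data file."""
    # "mandatory": True makes COPY fail if a listed file is missing.
    return {"entries": [{"url": f"s3://{bucket}/{key}", "mandatory": True} for key in keys]}


def stage_files(s3_client: Any, bucket: str, prefix: str, csv_paths: List[str]) -> str:
    """Gzip and upload each CSV, then upload the manifest; return its S3 URL."""
    keys = []
    for path in csv_paths:
        gz_path = gzip_file(path)
        key = f"{prefix}/{os.path.basename(gz_path)}"
        s3_client.upload_file(gz_path, bucket, key)  # boto3-style signature
        keys.append(key)
    manifest_key = f"{prefix}/pagerduty.manifest"  # hypothetical object name
    body = json.dumps(build_manifest(bucket, keys), indent=2).encode("utf-8")
    s3_client.put_object(Bucket=bucket, Key=manifest_key, Body=body)
    return f"s3://{bucket}/{manifest_key}"
```

With real AWS credentials you might call `stage_files(boto3.client("s3"), "my-bucket", "load", ["incidents.csv"])`; the returned URL is what goes into the `FROM` clause of a manifest-based COPY command.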

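- Step 1: Upload the CSV files to S3, preferably gzip-compressed. If you are loading several files with one command, also create a manifest file (a JSON file that lists the S3 URL of each data file) and upload it to S3 as well.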

- Step 2: Once the files are in S3, run the COPY command to load them from S3 into the desired table. If you have gzipped the files and created a manifest, your command will have the following structure:

```sql
COPY <schema-name>.<table-name> (<ordered-list-of-columns>)
FROM '<manifest-file-s3-url>'
CREDENTIALS 'aws_access_key_id=<key>;aws_secret_access_key=<secret-key>'
CSV GZIP MANIFEST;
```

Here, the CSV keyword tells Amazon Redshift to parse the files as comma-separated values; without it, COPY expects pipe-delimited text by default. You can also list the target columns in the order they appear in the file and skip header rows, as shown below:

```sql
COPY table_name (col1, col2, col3, col4)
FROM 's3://<your-bucket-name>/load/file_name.csv'
CREDENTIALS 'aws_access_key_id=<Your-Access-Key-ID>;aws_secret_access_key=<Your-Secret-Access-Key>'
CSV;

-- Skip the first line (the header row)
COPY table_name (col1, col2, col3, col4)
FROM 's3://<your-bucket-name>/load/file_name.csv'
CREDENTIALS 'aws_access_key_id=<Your-Access-Key-ID>;aws_secret_access_key=<Your-Secret-Access-Key>'
CSV
IGNOREHEADER 1;
```

Once the COPY command completes, your CSV data is loaded into the Amazon Redshift table.
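The COPY step can also be run from a script. The Python sketch below assembles a manifest-based COPY for gzipped CSV files and executes it over any DB-API 2.0 connection (for example, one opened with `redshift_connector` or `psycopg2`). It swaps the access-key CREDENTIALS clause for IAM_ROLE, the role-based authorization that AWS recommends; the table, column, and role names are hypothetical. Values are interpolated directly into the SQL, so pass only trusted configuration, never user input.

```python
from typing import Any, List


def build_copy_sql(table: str, columns: List[str], manifest_url: str,
                   iam_role_arn: str, ignore_header: int = 1) -> str:
    """Assemble a COPY command for gzipped CSV files listed in a manifest."""
    column_list = ", ".join(columns)
    return (
        f"COPY {table} ({column_list})\n"
        f"FROM '{manifest_url}'\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        "CSV GZIP MANIFEST\n"
        # IGNOREHEADER skips this many leading lines in every file the manifest lists.
        f"IGNOREHEADER {ignore_header};"
    )


def run_copy(connection: Any, sql: str) -> None:
    """Execute the COPY on a DB-API 2.0 connection and commit it."""
    cursor = connection.cursor()
    try:
        cursor.execute(sql)
        connection.commit()
    finally:
        cursor.close()
```

For instance, `build_copy_sql("pagerduty.incidents", ["id", "incident_number", "title"], manifest_url, role_arn)` produces a statement equivalent to the manual command above, with the column list matching the CSV header order.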

When is Connecting Manually the Ideal Method?

Using CSV files and SQL queries is a great way to replicate data from PagerDuty to Redshift. It is ideal in the following situations:

- One-Time Data Replication: When your business teams require these PagerDuty files quarterly, annually, or for a single occasion, manual effort and time are justified.

- No Transformation of Data Required: This strategy offers limited data transformation options. Therefore, it is ideal if the data in your spreadsheets is accurate, standardized, and presented in a suitable format for analysis.

- Fewer Files: Downloading CSV files and writing SQL queries to load each of them is time-consuming, especially if you need to build a 360-degree view of the business by merging spreadsheets that contain data from multiple departments across the organization.

Limitations of Connecting Manually

- You face a challenge when your business teams require fresh data from multiple reports every few hours. For them to make sense of this data in various formats, it must be cleaned and standardized.

- This requires you to devote substantial engineering bandwidth to creating new data connectors.

- To ensure a replication with zero data loss, you must monitor any changes to these connectors and fix data pipelines on an ad hoc basis.

- These additional tasks can consume as much as forty to fifty percent of the time you could have spent on your primary engineering objectives.
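Refreshing data every few hours also raises the problem of duplicates: COPY appends rows, so if consecutive exports overlap, the same incidents are loaded twice. One common Redshift pattern is to COPY each batch into a temporary staging table, delete the matching rows from the target, and insert the staged rows, all within one transaction. The Python sketch below generates those statements and runs them over a DB-API 2.0 connection. It assumes a single key column (such as the incident `id`) and plain, unqualified table names; the table names used are hypothetical.

```python
from typing import Any, List


def build_merge_statements(target: str, staging: str, key: str,
                           copy_into_staging: str) -> List[str]:
    """Return the statements for a staging-table merge keyed on `key`."""
    return [
        f"CREATE TEMP TABLE {staging} (LIKE {target});",
        copy_into_staging,  # a COPY command whose target is the staging table
        # Remove target rows that are about to be replaced by fresher copies.
        f"DELETE FROM {target} USING {staging} WHERE {target}.{key} = {staging}.{key};",
        f"INSERT INTO {target} SELECT * FROM {staging};",
        f"DROP TABLE {staging};",
    ]


def run_in_one_transaction(connection: Any, statements: List[str]) -> None:
    """Execute all statements, committing once; roll back if any statement fails."""
    cursor = connection.cursor()
    try:
        for statement in statements:
            cursor.execute(statement)
        connection.commit()
    except Exception:
        connection.rollback()
        raise
    finally:
        cursor.close()
```

Committing only once means a failed load leaves the target table untouched, which helps avoid both partial loads and duplicates. This relies on the driver not running in autocommit mode.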

What can you hope to achieve by replicating data from PagerDuty to Redshift?

- You can centralize the data for your project. Using data from your company, you can create a single customer view to analyze your projects and team performance.

- Get more detailed customer insights. Combine all data from all channels to comprehend the customer journey and produce insights that may be used at various points in the sales funnel.

- You can also boost customer satisfaction. Analyze customer interactions through email, chat, phone, and other channels, and combine this data with customer touchpoints from other channels to identify what drives customer satisfaction.

Key Takeaways

- Replicating data from PagerDuty to Redshift can effectively fulfill data requests from your marketing and product teams.

- If data replication must occur every few hours, you will have to switch to a custom data pipeline. This is crucial for marketers, as they require continuous updates on the ROI of their marketing campaigns and channels.

- Instead of spending months developing and maintaining such data integrations, you can enjoy a smooth ride with Hevo’s 150+ plug-and-play integrations (including 40+ free sources such as PagerDuty).

- Redshift, available both as provisioned clusters and as Amazon Redshift Serverless, is built for scalability and query speed, so you can scale and run ad hoc analyses without managing your own server infrastructure. Hevo makes this even simpler by automating the data replication process.

Take Hevo’s 14-day free trial to experience a better way to manage your data pipelines. You can also check out the unbeatable pricing, which will help you choose the right plan for your business needs.

FAQs

1. How do I transfer data to Redshift?

To transfer data to Redshift, you can use the COPY command to load data from sources such as Amazon S3, Amazon DynamoDB, Amazon EMR, or remote hosts over SSH.

2. Is Amazon Redshift serverless?

Yes, Amazon Redshift offers a serverless option, allowing you to run and scale data warehousing without managing infrastructure.

3. How do I upload a file to Redshift?

To upload a file to Redshift, first place it in an S3 bucket, then use the `COPY` command to load the file into your Redshift table.