Easily move your data from PostgreSQL to Databricks to enhance your analytics capabilities. With Hevo’s intuitive pipeline setup, data flows in real-time—check out our 1-minute demo below to see the seamless integration in action!

Undoubtedly, PostgreSQL is fantastic because of its ability to be used for both OLTP and OLAP data processing needs. And most experienced developers and DBAs prefer working on PostgreSQL because of the high familiarity with its workings, making it a compelling option to use as a data warehouse.

But for OLAP-style processing, which centers on storing historical data and running ETL processes, PostgreSQL might not be the best fit because it is primarily an OLTP database. For BI workloads, columnar analytics platforms like Databricks, Redshift, BigQuery, and Snowflake are the go-to options.

In this post, you will learn two ways to replicate data from PostgreSQL to Databricks. The first method uses Hevo, a no-code automated data pipeline, and the second is a manual approach using a JDBC driver. Let’s begin.


What is PostgreSQL?

PostgreSQL is an open-source, general-purpose, object-relational database management system (ORDBMS). It is widely used and combines advanced features with standards compliance. These features include complex queries, foreign keys, triggers, and views, all backed by transactional integrity with full ACID compliance. It is also highly extensible, with support for custom data types, functions, and operators.

Use Cases

- It can power dynamic web applications with its robust performance and scalability.

- Through its PostGIS extension, PostgreSQL can deal with geospatial data in an exemplary manner. This makes it very well-suited for applications of spatial data analyses, including mapping, location-based services, and geographic information systems.

- PostgreSQL is used in the financial sector for its strong transactional support and data integrity features.

What is Databricks?

Databricks is a unified data analytics platform that simplifies big data processing and machine learning. It integrates tightly with Apache Spark, an open-source analytics engine, and offers a cloud-based environment that streamlines the data pipeline from ingestion to analysis. In particular, Databricks provides collaborative notebooks, automated cluster management, and advanced analytics capabilities that help data engineers and data scientists work together on big data projects.

Use Cases

Some key use cases of Databricks are listed below:

- Databricks excels in data engineering workloads like ETL processes, data cleaning, and data transformation.

- Databricks comes with out-of-the-box machine learning capabilities that are tightly integrated into popular libraries to help you in end-to-end machine learning workflows.

- The integrated development environment, with collaborative notebooks, is available across the platform so that data scientists can work together seamlessly.

Method 1: Replicating Data from PostgreSQL to Databricks using Hevo

With Hevo, you can replicate your data from PostgreSQL to Databricks in a hassle-free manner without writing a single line of code. All you need to do is provide credentials to your database or data warehouse, and Hevo takes care of the rest, with features such as:

- Real-time data integration with 150+ pre-built connectors ensures your data is constantly updated and available.

- The fault-tolerant architecture ensures no data is ever lost.

- Pre-load and post-load transformations ensure data is always analysis ready.

- Cost-effective pricing makes sure you only pay for what you use.

- HIPAA, GDPR, and SOC2 compliance ensure data is always secure.

Method 2: Connecting PostgreSQL to Databricks using JDBC Driver

You can also replicate your data from PostgreSQL to Databricks manually using a JDBC driver. However, this method is complex and requires technical expertise.

Why Integrate PostgreSQL to Databricks?

Let’s start by understanding why you might need to integrate PostgreSQL with Databricks. Time complexity, feature differences, and data compression are the main factors.

1) Time Complexity

It may take longer than expected to ensure a seamless PostgreSQL replication effort. Unexpected problems frequently require further investigation, which can slow down data replication. It is therefore crucial to budget time for ad hoc research into unforeseen problems in the project timeline from the start.

With Databricks, your teams can immediately query the data through a simple-to-use interface without time-consuming operations. By separating storage from compute and offering elastic scalability, Databricks democratizes data access and shortens the time to results.

2) Difference in features on the cloud vs. on-premises

Working with PostgreSQL in the cloud is different from working with it locally. Cloud-hosted PostgreSQL databases lack many Postgres extensions, and providers sometimes lock default settings, which restricts Postgres configuration and functionality. To ensure scalability, several businesses have been forced to move back from cloud to on-premises Postgres.

Databricks is a fully managed solution that supports workloads like big data processing and machine learning. It uses the unified Spark engine to support machine learning, graph processing, and SQL queries. The libraries in Databricks increase developers’ productivity.

3) Data Compression

Databricks stores tables in a columnar format rather than row by row. Because values in the same column are more likely to be similar, this layout aids data compression. PostgreSQL’s default row-oriented storage lacks this capability.
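To make the compression argument concrete, here is a minimal sketch that compresses the same toy table twice: once laid out row by row and once column by column. The table contents, the use of zlib, and the CSV-style serialization are all assumptions chosen for illustration, not how either system stores data internally.

```python
import random
import zlib

# Toy table (made-up values): one high-entropy numeric column
# plus two repetitive text columns.
rng = random.Random(0)
regions = ["us-east", "eu-west", "ap-south"]
rows = [
    (str(rng.randrange(10_000, 100_000)), regions[i % 3], "active")
    for i in range(1000)
]

# Row-oriented layout: the fields of each record sit next to each other.
row_bytes = "\n".join(",".join(r) for r in rows).encode()

# Column-oriented layout: all values of one column sit together,
# and each column is compressed on its own.
columns = list(zip(*rows))
column_blobs = ["\n".join(col).encode() for col in columns]

row_compressed = len(zlib.compress(row_bytes))
col_compressed = sum(len(zlib.compress(blob)) for blob in column_blobs)

print(f"raw size:          {len(row_bytes)} bytes")
print(f"row-wise, zlib:    {row_compressed} bytes")
print(f"column-wise, zlib: {col_compressed} bytes")
```

On this toy data the column-wise total should come out smaller, because the repetitive columns shrink to almost nothing once their values are adjacent. Actual ratios depend heavily on the data and on the codec in use.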

Databricks supports easy and quick access to information, and data compression is not a concern. It also provides additional features for building streaming apps with production-quality machine learning and for expanding the use of data science in decision-making.

To take advantage of these features and overcome the limitations above, you can transfer data from PostgreSQL to Databricks.

How to Connect PostgreSQL to Databricks?

Now let’s walk through how to build this connection, first using Hevo and then using the JDBC driver.

Method 1: Replicating Data from PostgreSQL to Databricks using Hevo

Using Hevo, you can effortlessly replicate your data from PostgreSQL to Databricks in just two easy steps.

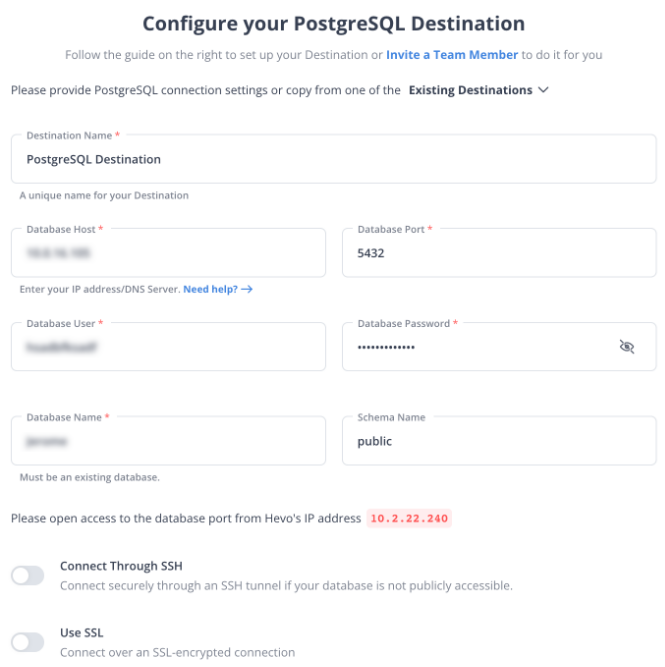

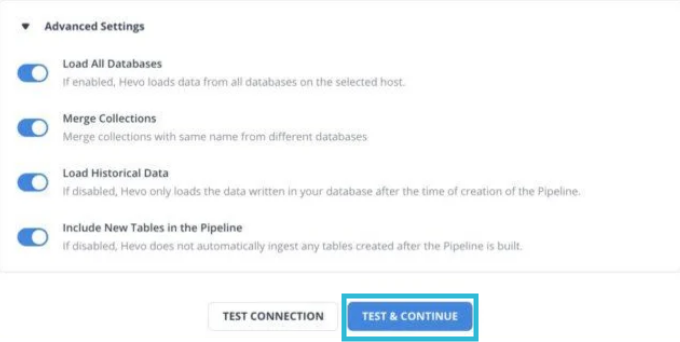

Step 1: Configure PostgreSQL as the Source

You can choose PostgreSQL as your source and provide credentials to the database that contains the data to be replicated.

Step 1.1: Select PostgreSQL as your Source.

Step 1.2: Provide credentials to your PostgreSQL database, such as the host name, username, password, and database name.

Step 1.3: Click on Test & Continue.

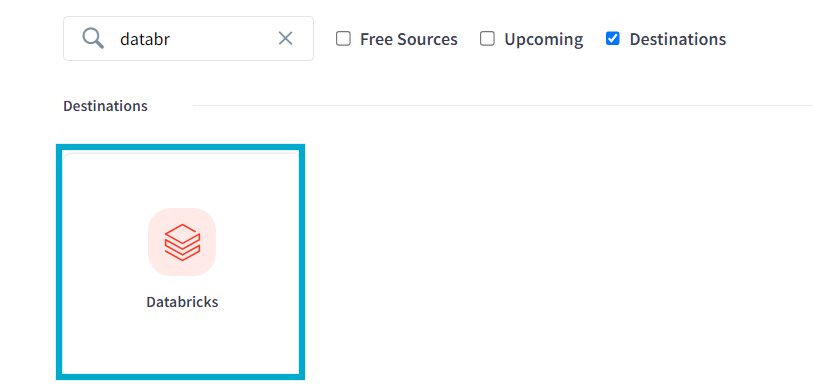

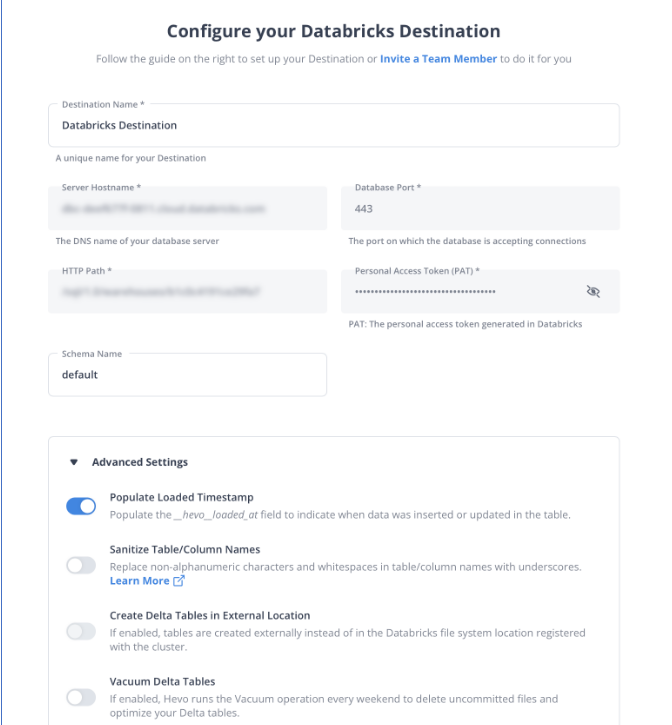

Step 2: Configure Databricks as your Destination

You can select Databricks as your Destination to replicate your data into it.

Step 2.1: Select Databricks as your destination.

Step 2.2: Configure the destination by filling in the required details.

Step 2.3: Click on Save & Continue.

That’s how easy it is for you to replicate your data using Hevo.

Method 2: Connecting PostgreSQL to Databricks using JDBC Driver

- Step 1: Download and install the JDBC driver. In the Databricks administration interface, navigate to the destination cluster and select it. Under the Libraries tab, click “Install New”.

- Step 2: Choose “Jar” for the library type and “Upload” for the library source. From the installation folder, upload the JDBC JAR file (cdata.jdbc.postgresql.jar).

- Step 3: By referencing the JDBC Driver class and creating a connection string to be used in the JDBC URL, you can connect to PostgreSQL. In the JDBC URL, you also need to set the RTK property (unless you are using a Beta driver). The installation’s licensing file includes instructions on how to set this property, which you can examine.

driver = "cdata.jdbc.postgresql.PostgreSQLDriver"

url = "jdbc:postgresql:RTK=5246...;User=postgres;Password=admin;Database=postgres;Server=127.0.0.1;Port=5432;"

- Step 4: Use the connection string designer integrated with the PostgreSQL JDBC Driver for help creating the JDBC URL. Double-click the JAR file or run it from the command line to start it. The connection string should be copied to the clipboard after you complete the connection properties.

- Step 5: Set the User and Password you want to use to authenticate to the server along with the Server, Port (the default port is 5432), and Database connection settings to establish a connection to PostgreSQL. The data provider connects to the user’s default database if the Database attribute is left empty.

- Step 6: Once the connection has been set up, you can use the JDBC Driver and the connection details to load PostgreSQL data as a data frame.
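As a rough sketch of Steps 3 to 6, the snippet below assembles a JDBC URL in the same semicolon-separated style and reads a table through Spark’s JDBC data source. The helper names `build_cdata_url` and `load_table` and the table name `orders` are hypothetical. The credentials mirror the sample URL from Step 3, and the sketch assumes it runs in a Databricks notebook where `spark` is already defined.

```python
def build_cdata_url(server, database, user, password, port=5432, rtk=None):
    """Assemble a JDBC URL in the semicolon-separated style used in Step 3.

    `rtk` is the runtime key from the driver's licensing file; it is left
    out of the URL when not provided (for example, with a Beta driver).
    """
    props = []
    if rtk:
        props.append(f"RTK={rtk}")
    props += [
        f"User={user}",
        f"Password={password}",
        f"Database={database}",
        f"Server={server}",
        f"Port={port}",
    ]
    return "jdbc:postgresql:" + ";".join(props) + ";"


def load_table(spark, url, table, driver="cdata.jdbc.postgresql.PostgreSQLDriver"):
    """Read one PostgreSQL table into a Spark DataFrame over JDBC."""
    return (
        spark.read.format("jdbc")
        .option("url", url)
        .option("driver", driver)
        .option("dbtable", table)
        .load()
    )


# Sample values taken from the URL in Step 3; replace them with your own.
url = build_cdata_url("127.0.0.1", "postgres", "postgres", "admin")

# In a Databricks notebook, where `spark` is predefined:
# orders_df = load_table(spark, url, "orders")  # "orders" is a hypothetical table
# display(orders_df)
```

Keeping the URL builder separate from the Spark call makes it easy to check the connection string before a cluster is involved.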

Challenges Faced While Replicating Data

You can face some challenges while replicating your data from PostgreSQL to Databricks using JDBC Driver.

- This method requires technical expertise and consumes engineering bandwidth, and the setup itself can be complex.

- Setting up data pipelines across multiple environments is expensive. The configuration of a pipeline may change after it gets deployed into multiple settings.

Why Use Hevo to Connect PostgreSQL with Databricks?

Here’s how Hevo challenges the normal to beget the new ‘exceptional.’

- Reliability at Scale – With Hevo, you get a world-class fault-tolerant architecture that scales with zero data loss and low latency.

- Monitoring and Observability – Monitor pipeline health with intuitive dashboards that reveal every stat of pipeline and data flow. Bring real-time visibility into your ELT with Alerts and Activity Logs.

- Stay in Total Control – When automation isn’t enough, Hevo offers flexibility – data ingestion modes, ingestion and load frequency, JSON parsing, destination workbench, custom schema management, and much more – for you to have total control.

- Auto-Schema Management – Correcting improper schema after the data is loaded into your warehouse is challenging. Hevo automatically maps source schema with destination warehouse so that you don’t face the pain of schema errors.

- 24×7 Customer Support – With Hevo you get more than just a platform, you get a partner for your pipelines. Discover peace with round-the-clock “Live Chat” within the platform. What’s more, you get 24×7 support even during the 14-day full-feature free trial.

- Transparent Pricing – Say goodbye to complex and hidden pricing models. Hevo’s Transparent Pricing brings complete visibility to your ELT spend. Choose a plan based on your business needs. Stay in control with spend alerts and configurable credit limits for unforeseen spikes in data flow.

Additional Resources for PostgreSQL Integrations and Migrations

- How to load data from PostgreSQL to BigQuery

- PostgreSQL on Google Cloud SQL to BigQuery

- MySQL to Databricks

- Migrate Data from Postgres to MySQL

- How to migrate Data from PostgreSQL to SQL Server

Let’s Put It All Together

In this article, you learned why you might transfer data from PostgreSQL to Databricks and the methods you can use to carry out the process.

Don’t forget to share your experience of building a data pipeline from PostgreSQL to Databricks using Hevo in the comment section.

Check out this video to learn how Hevo seamlessly replicates data from a wide range of data sources. See how to migrate data from Azure PostgreSQL to Databricks for powerful data analytics and reporting.

Initiate your journey with Hevo today and enjoy fully automated, hassle-free data replication for 150+ sources. Hevo’s free trial gives you limitless free sources and models to pick from, support for up to 1 million events per month, and a spectacular live chat service supported by an incredible 24/7 support team to help you get started. Sign up for Hevo’s 14-day free trial and experience seamless data migration.

FAQs on replicating data from PostgreSQL to Databricks

1. How do I connect Postgres to Databricks?

To connect PostgreSQL to Databricks, use the JDBC driver to establish a connection. Configure a Databricks cluster with the PostgreSQL JDBC driver, and then use Spark’s JDBC API to read from or write to the PostgreSQL database.
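Here is a minimal sketch of that read and write flow. It assumes the community PostgreSQL JDBC driver (class `org.postgresql.Driver`, URL form `jdbc:postgresql://host:port/database`) is available on the cluster, which is a different driver from the CData one used in Method 2. The helper names, host, database, table names, and credentials are all hypothetical.

```python
def postgres_jdbc_options(host, database, table, user, password, port=5432):
    """Options for Spark's JDBC data source with the community PostgreSQL driver."""
    return {
        "url": f"jdbc:postgresql://{host}:{port}/{database}",
        "driver": "org.postgresql.Driver",
        "dbtable": table,
        "user": user,
        "password": password,
    }


def read_table(spark, options):
    """Read the table named in `options` into a DataFrame."""
    return spark.read.format("jdbc").options(**options).load()


def append_to_table(df, options):
    """Append a DataFrame's rows to the table named in `options`."""
    df.write.format("jdbc").options(**options).mode("append").save()


# Hypothetical connection details; replace them with your own.
opts = postgres_jdbc_options(
    "db.example.com", "sales", "public.orders", "reader", "secret"
)

# In a Databricks notebook, where `spark` is predefined:
# orders_df = read_table(spark, opts)
# append_to_table(orders_df, {**opts, "dbtable": "public.orders_copy"})
```

In practice you would likely pull the password from a secret store rather than hard-coding it as this sketch does.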

2. How do we migrate data from SQL Server to Databricks?

You can migrate data from SQL Server to Databricks by exporting the SQL Server data to a file format like CSV or Parquet. Upload this file to cloud storage (e.g., AWS S3 or Azure Blob Storage), and then use Databricks to read the data from the cloud storage and load it into Databricks.
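The last step of that flow could look roughly like the sketch below, which assumes the SQL Server data has already been exported as Parquet files and uploaded to an S3 bucket. The bucket name, key prefix, table name, and helper functions are hypothetical, and the cluster is assumed to have read access to the bucket.

```python
def s3_uri(bucket, key):
    """Build an s3:// URI for an object or prefix in a bucket."""
    return f"s3://{bucket}/{key.lstrip('/')}"


def load_parquet_into_table(spark, source_uri, table_name):
    """Read Parquet files from cloud storage and save them as a table."""
    df = spark.read.parquet(source_uri)
    df.write.mode("overwrite").saveAsTable(table_name)
    return df


# Hypothetical location of the exported files.
exported = s3_uri("my-export-bucket", "sqlserver/orders/")

# In a Databricks notebook, where `spark` is predefined:
# load_parquet_into_table(spark, exported, "orders")
```

Parquet keeps column types from the export, so it usually needs less schema handling on load than CSV does.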

3. Can I use Databricks as a data warehouse?

Yes, you can use Databricks as a data warehouse. Databricks provides a unified analytics platform with robust support for large-scale data processing and analytics.