PostgreSQL offers numerous advantages, including compatibility with various data types and a reliable environment with features like Multi-Version Concurrency Control (MVCC). However, if your use case requires capabilities such as long-term data retention and advanced data analysis, migrating to MySQL can be beneficial.

This article provides a detailed guide on four easy methods to seamlessly migrate your data from PostgreSQL to MySQL, ensuring a smooth transition for your organization.

Table of Contents

What is PostgreSQL?

It is written in C and has a monolithic architecture, which means that all of the components are connected and work in a symmetrical and systematic manner. It has a vast community and provides a lot of community assistance as well as extra help to its paying members.

Healthcare, banking, and manufacturing are just a few of the businesses that employ it. It also makes it possible to install new backup solutions. Monolithic architecture implies that all of the components are linked and work in a logical order. It offers both community support and additional assistance to some of its paid customers. It is frequently used in healthcare, banking, and industrial areas due to its innovative backup methods.

What is MySQL?

MySQL is an open-source and free database software that is supported by Oracle and is released under the GNU license.

When compared to Microsoft SQL Server and Oracle Database, MySQL is a faster, more scalable, and easier-to-use Database Management System. It is based on the Client-Server concept, which implies that the database is normally hosted on a server and data is accessed via network clients and workstations.

The server responds to the clients’ Graphical User Interface (GUI) queries with the desired result. MySQL works with a variety of operating systems and languages, including PHP, PERL, JAVA, C++, and C.

Methods to Connect PostgreSQL to MySQL

Facing challenges migrating your data from PostgreSQL to MySQL? Migrating your data can become seamless with Hevo’s no-code intuitive platform. With Hevo, you can:

- Automate Data Extraction: Effortlessly pull data from PostgreSQL(and other 60+ free sources).

- Transform Data effortlessly: Use Hevo’s drag-and-drop feature to transform data with just a few clicks.

- Seamless Data Loading: Quickly load your transformed data into your desired destinations, such as MySQL.

Try Hevo and join a growing community of 2000+ data professionals who rely on us for seamless and efficient migrations.

Get Started with Hevo for FreeMethod 1: Using Automated Data Pipeline Platforms

The following steps can be implemented to connect PostgreSQL to MySQL using Hevo:

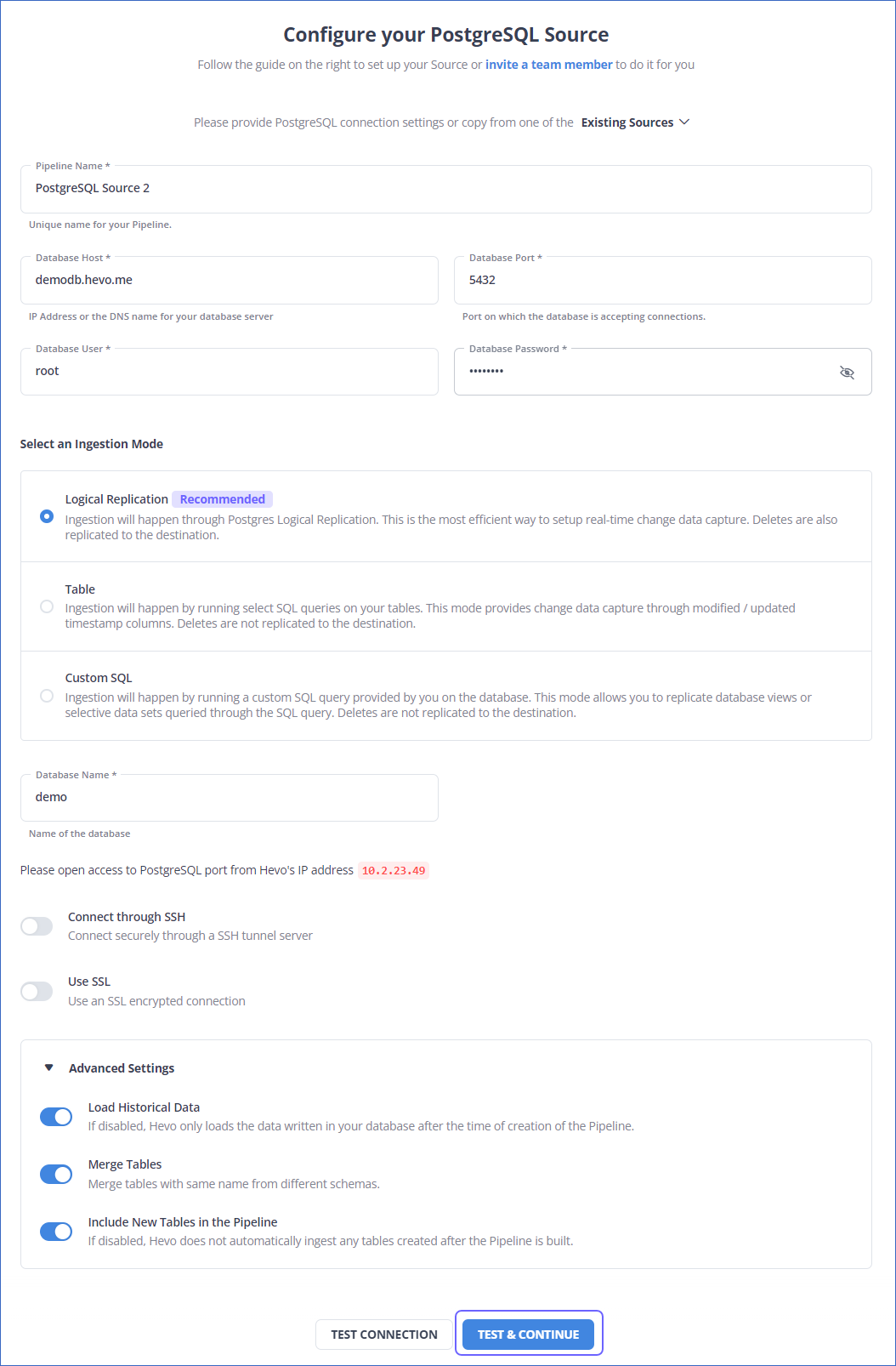

Step 1: Configure PostgreSQL as your Source

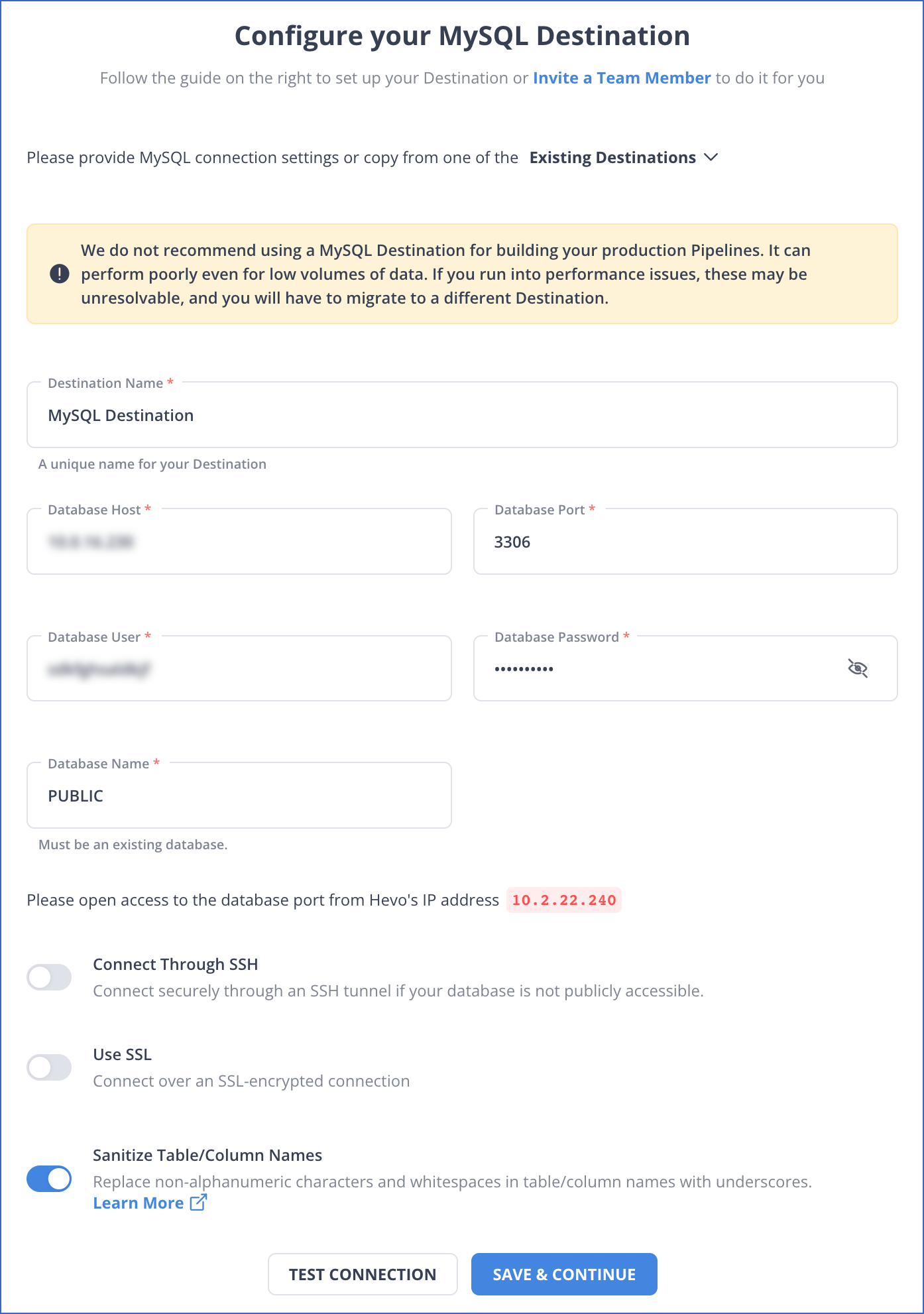

Step 2: Configure MySQL as your Destination

We have now successfully moved PostgreSQL to MySQL Migration using Hevo.

Here are more reasons to try Hevo:

- Data Transformation: It provides a simple interface to perfect, modify, and enrich the data you want to transfer.

- Schema Management: Hevo can automatically detect the schema of the incoming data and map it to the destination schema.

- Incremental Data Load: Hevo allows the transfer of data that has been modified in real time. This ensures efficient utilization of bandwidth on both ends.

- Live Monitoring: Advanced monitoring gives you a one-stop view to watch all the activities that occur within Data Pipeline.

Method 2: Manually Connect PostgreSQL to MySQL using pg2mysql php Script

Step 1: Downloading the Script and PHP

- Downloading the script and unpacking the archive is the first step to connecting PostgreSQL to MySQL using the pg2mysql php script.

wget http://www.lightbox.ca/pg2mysql/pg2mysql-1.9.tar.bz2 (contains original script from the author)

wget https://www.easyredmine.com/ER/media/knowledge-base/pg2mysql-1.9.zip (contains our modified script for php version 7.4)

tar -xvf pg2mysql-1.9.tar.bz2(in case of original script from the author)

unzip pg2mysql-1.9.zip (in case of our modified script for php version 7.4)- You can install PHP using the command given below:

sudo apt install php7.4-cli (in case of our modified script)Step 2: Creating Dump Database for PostgreSQL and running it

- Make a .sql dump of your PostgreSQL database, being sure to use the “—format p —inserts” option.

sudo -u postgres pg_dump --format p --inserts DBNAME_HERE > /path/to/file.sql- Navigate to the pg2mysql-1.9 folder.

cd pg2mysql-1.9/- Run this file which we have created.

php pg2mysql_cli.php /path/to/pd/dump/file.sql /path/where/to/save/mysql/file.sqlThere will be some lines like this.

Completed! 30820 lines 5539 sql chunks

Notes:

- No its not perfect

- Yes it discards ALL stored procedures

- Yes it discards ALL queries except for CREATE TABLE and INSERT INTO

- Yes you can email us suggestsions: info[AT]lightbox.org

- In emails, please include the Postgres code, and the expected MySQL code

- If you're having problems creating your postgres dump, make sure you use "--format p --inserts"

- Default output engine if not specified is MyISAM"- The corrected sql dump file will now be created. It’s recommended that you read it and replace MyISAM with InnoDB wherever possible.

- At this point, you can restore the dump to a clean MySQL database.

- Since the script does not save indexes (mentioned by the creator), you must manually add them to each table (it will work without indexes but it may cause serious performance issues). You must manually add them or use your own custom script.

Learn More About: 3 Best Methods to Export MySQL Database

Limitations of Manually Connecting PostgreSQL to MySQL

- The data transfer from PostgreSQL to MySQL is only one-way. Two-way sync is essential to keep both tools up to date.

- Since the records must be updated on a regular basis, the manual approach takes time. This wastes time and resources that could be better spent on more important business tasks.

- Updating current data and maintaining workflows on multiple platforms necessitates a significant amount of engineering bandwidth, which can be a source of frustration for many users.

- During data transfer, there is no room for transformation. For firms who wish to update their data before exporting it from PostgreSQL to MySQL, this might be a considerable drawback.

Method 3: Migrate Postgres to MySQL using the MySQL Workbench Migration Wizard

Step 1: Download and install the PostgreSQL ODBC driver

Once we install the PostgreSQL, we need to install an ODBC driver wherever we have installed MySQL workbench.

Step 2: Open MySQL Workbench and start the migration wizard

- On the MySQL workbench screen, Click on the Database > Migrate on the main screen to start the Migration wizard.

- We can see an overview page for the Migration wizard below.

Step 3: Set up the parameters required for connecting to the source database

- Click on the Start Migration button to connect to the Source Selection page.

- Specify all the necessary information and click the Test Connection button to check the connection with PostgreSQL.

- Click on the Next button to move to the destination selection page.

Step 4: Set up the parameters required for connecting to the destination database

- Specify the required parameters on the destination setup page to connect to the MySQL Server instance.

- Click on the Test Connection button to verify the connection and click on the Next button to move to the Schema setup page.

Step 5: Select the schema for migration

The migration wizard communicates with the PostgreSQL database to fetch the schema data into the source database.

- Verify the tasks and click on the Next button to finish the schema setup for migration.

- Verify the tasks and click on the Next button to finish the schema setup for migration.

Step 6: Select the objects for migration

- Now, the Retrieved schema from the source will be reverse-engineered to determine its structure.

- In the source objects page, we can see the list of objects retrieved for migration.

- Click the Show Selection button to see the table objects in the source database. Click on the Next button to review the proposed migration.

Step 7: Review the proposed migration

In this step, the Migration wizard converts the objects into equivalent objects in MySQL and creates a MySQL code for the destination server.

- On the Manual Editing tab on the left side navigation panel. We can double-click on the Pagila row and rename the resultant database. It will also show the table columns, default values, and other attributes.

Step 8: Run the MySQL code to create the database objects

- Click on the Target Creation Options tab on the left side navigation pane. We will be given the option to run the generated code in the target RDBMS. Click on the Next button and move to the transfer data setup page.

- Transfer data setup page.

- The progress of the migration can be seen on the Create Schemata tab on the left-side navigation pane.

- Once the migration is done, click on the Next button and move to the transfer setup page.

Step 9: Transfer the data to MySQL database

The data transfer setup page allows you to configure the transfer process, as given below.

- Configure the transfer process

- Select the first option of live transfer of data and click on the Next button. Once it is done, we can see the summary of the transfer process on the report page and click on the Finish button to close the Migration wizard.

Factors to Consider for Postgres to MySQL Migration

A few differences between Postgres and MySQL should be considered before moving from Postgres to MySQL. A few of these are mentioned below.

- Complexity brought on by certain data models: Numerous data types are supported by both Postgres and MySQL. This covers a wide range of data formats, including JSON, XML, and TEXT, as well as conventional SQL types like String, Boolean, Integer, and Timestamp. It’s important to remember, though, that MySQL does not handle all Postgres data types.

- Differences between SQL and database capabilities: There are several operations that are performed differently in Postgres and MySQL databases. Additionally, some features could be supported in one but not in the other. Understanding this might help you steer clear of certain typical traps.

- Stored procedures: The process must be written in the regular SQL syntax according to MySQL. In comparison, the procedures in Postgres are function-based and may be written in a variety of languages, including Ruby, Perl, SQL, Python, JavaScript, and others.

- Extensions for Postgres: It can complicate a migration if you use Postgres extensions; thus, before you migrate, be sure to audit each one separately.

- Case sensitivity and support for IF/IFNULL: If a Postgres table or column name is enclosed in double quotations, it is case-sensitive. The names of MySQL tables and columns, however, are not case-sensitive. For this reason, bear this in mind while doing a migration. Additionally, Postgres does not support IF and IFNULL statements for condition evaluation, but MySQL does.

Related: If you are looking to streamline your PostgreSQL workflow, do read our blog on PostgreSQL import CSV, saving you time and effort. And if you’re interested in optimizing performance and achieving high availability, don’t miss our guide on setting up PostgreSQL clusters [+clustering options].

Postgres vs MySQL schema- Key Differences

- VARCHAR in Postgres vs MySQL

- For the varchar type, you need to set up a max value in MySQL while this is not required for Postgres. Instead of this, you can use the text type in MySQL, or have the same varchar type. But, you should specify a max length for the same. If you go for the varchar option,keep in mind the max value so that there won’t be any troubles while migrating your data.

- SERIAL in Postgres vs MySQL

- When you set the serial type in MySQL, the underlying data type by default will be bigint unsigned auto_increment. Overall, this wouldn’t have an impact on how you store your data. But, its good to know this as the data type will be different based on the records you have.

- Spatial data in Postgres vs MySQL: POINT

- Using spatial data is straightforward in PostgreSQL. When you define the location column as a type of Point, it is possible to insert spatial data by providing the required coordinates. In MySQL, spatial data types have different behavior. Based on the version of your MySQL, you might need to have a different approach to store spatial data.

Use Cases for PostgreSQL to MySQL Integration

- MySQL enables complex data analysis and insights beyond Postgres’ capabilities alone through powerful querying and processing.

- Centralizing data from Postgres and other sources in MySQL provides a unified view across systems. This consolidated data can enable change data capture to prevent future discrepancies.

- MySQL’s capabilities for long-term data retention facilitate historical trend analysis over time, without Postgres’ limits.

- Syncing Postgres data to MySQL leverages MySQL’s robust security features for advanced data governance and compliance.

- MySQL scales to handle large, growing data volumes from Postgres without performance impacts.

- With Postgres data in MySQL, machine learning for predictive analytics, customer segmentation and more is enabled.

- Visualization tools like Tableau, PowerBI and Looker that connect to MySQL enhance business intelligence, augmenting Postgres’ reporting.

Additional Resources for PostgreSQL Integrations and Migrations

- Connect PostgreSQL to MongoDB

- Connect PostgreSQL to Redshift

- Integrate Postgresql to Databricks

- Replicate Postgres to Snowflake

- Export a PostgreSQL Table to a CSV File

Conclusion

In this article, we got a glimpse of how to connect PostgreSQL to MySQL after a brief introduction to the salient features, and use cases. The manual methods talked about in this article are using CSV files, pg2mysql php script, and MySQL Workbench. The process can be a bit difficult for beginners. Moreover, you will have to update the data each and every time it is updated and this is where Hevo saves the day!

Hevo Data provides its users with a simpler platform for integrating data from 150+ data sources for Analysis. It is a No-code Data Pipeline that can help you combine data from multiple sources like PostgreSQL. You can use it to transfer data from multiple data sources into your Data Warehouses, Database, or a destination of your choice such as MySQL .

It provides you with a consistent and reliable solution to managing data in real-time, ensuring that you always have Analysis-ready data in your desired destination. Sign up for a 14-day free trial and experience the feature-rich Hevo suite firsthand.

FAQ on Postgres to MySQL

Can you convert PostgreSQL to MySQL?

1. Export data from PostgreSQL.

2. Convert the schema and data types.

3. Import the data into MySQL.

How to import data from PostgreSQL to MySQL?

1. Export data from PostgreSQL

2. Create corresponding tables in MySQL

3. Load CSV data into MySQL

Can I run PostgreSQL in MySQL?

1. Run Both Databases Simultaneously: Install and run both PostgreSQL and MySQL on the same server or different servers.

2. Use Middleware or Database Abstraction Layer: Use tools or libraries that support multiple databases to abstract database-specific features.