Struggling to manage massive volumes of data for analysis? Many companies turn to Amazon S3 for storage and Snowflake for powerful querying. But what happens when you need to offload unused data? This is where Snowflake Unload to S3 comes in, making it easy to move data efficiently. This guide explores how to easily set up Snowflake Unload to S3 quickly.

Table of Contents

Introduction to Snowflake

- Snowflake is a cloud data warehouse built on top of AWS (Amazon Web Services) to help companies store and analyze massive amounts of data. It is a Software-as-a-Service (SaaS) platform, which means you don’t need hardware to select, install, configure, or manage it.

- Snowflake handles all software updates, maintenance, management, upgrades, and tuning. It separates computation and storage and charges for the service independently.

- Snowflake is highly scalable, allowing users to easily scale up and down computation and storage to satisfy their business needs.

- It delivers faster query performance and can handle terabytes of data with its help, which involves virtual compute instances.

Key Features of Snowflake

Some of the main features of Snowflake are listed below:

- SQL Support: Snowflake wants its users to easily access and manipulate data by offering SQL language support, including DDL, DML, and other advanced commands.

- Data Import and Export: Snowflake supports bulk import and export of data, including character encoding, compressed files, delimited data files, etc.

- Integrations: Snowflake easily integrates with many third-party apps and services that companies use. It enables users to sync data easily between the platform and Snowflake.

Hevo Data, a No-code Data Pipeline, helps load data from any data source, such as Databases, SaaS applications, Cloud Storage, SDK, and Streaming Services, and simplifies the ETL process. It supports 150+ data sources and is a three-step process that involves selecting the data source, providing valid credentials, and choosing the destination. Hevo not only loads the data onto the desired Data Warehouse/destination but also enriches it and transforms it into an analysis-ready form without writing a single line of code.

Check out why Hevo is the Best:

- Schema Management: Hevo takes away the tedious task of schema management & automatically detects the schema of incoming data and maps it to the destination schema.

- Hevo Is Built To Scale: As the number of sources and the volume of your data grows, Hevo scales horizontally, handling millions of records per minute with very little latency.

- Incremental Data Load: Hevo allows the transfer of data that has been modified in real-time. This ensures efficient utilization of bandwidth on both ends.

- Live Support: The Hevo team is available round the clock to extend exceptional customer support through chat, E-Mail, and support calls.

- Live Monitoring: Hevo allows you to monitor the data flow and check where your data is at a particular time.

Introduction to Amazon S3

- Amazon S3 or Amazon Simple Storage Service, offered by AWS (Amazon Web Services), allows companies to store their data as object storage with a web service interface.

- It can store any type of object, which makes it highly adaptable and scalable. It can be used for backup and recovery, disaster recovery, internet applications, etc.

- Amazon S3 stores data as independent objects, complete metadata, and a unique object identifier. Companies like Netflix, Amazon, and Twitter widely use it.

Key Features of Amazon S3

Some of the main features of Amazon S3 are listed below.

- Access Management: Amazon comes with various data access features to secure data, including features for auditing and managing access to objects and businesses.

- Analytics and Insights: Amazon allows users to gain visibility on the data stored on Amazon, making it easier for them to understand, analyze, and optimize their storage at scale.

- Data Processing: Amazon automates your data transformation activities by offering AWS Lambda and other features.

Prerequisites

- An active Snowflake account.

- An active AWS account with S3.

Steps for Snowflake Unload to S3

Now that you have understood Snowflake and Amazon S3, you will learn the Snowflake Unload to S3 process in this section. The following steps to easily perform Snowflake Unload to S3 are listed below:

- Step 1: Allowing the Virtual Private Cloud IDs

- Step 2: Configuring an Amazon S3 Bucket

- Step 3: Unloading Data into an External Stage

Step 1: Allowing the Virtual Private Cloud IDs

The foremost step for Snowflake Unload to S3 is to explicitly grant Snowflake access to your AWS S3 storage account. For this, the location or region for both Amazon S3 located in your AWS account and Snowflake should be the same.

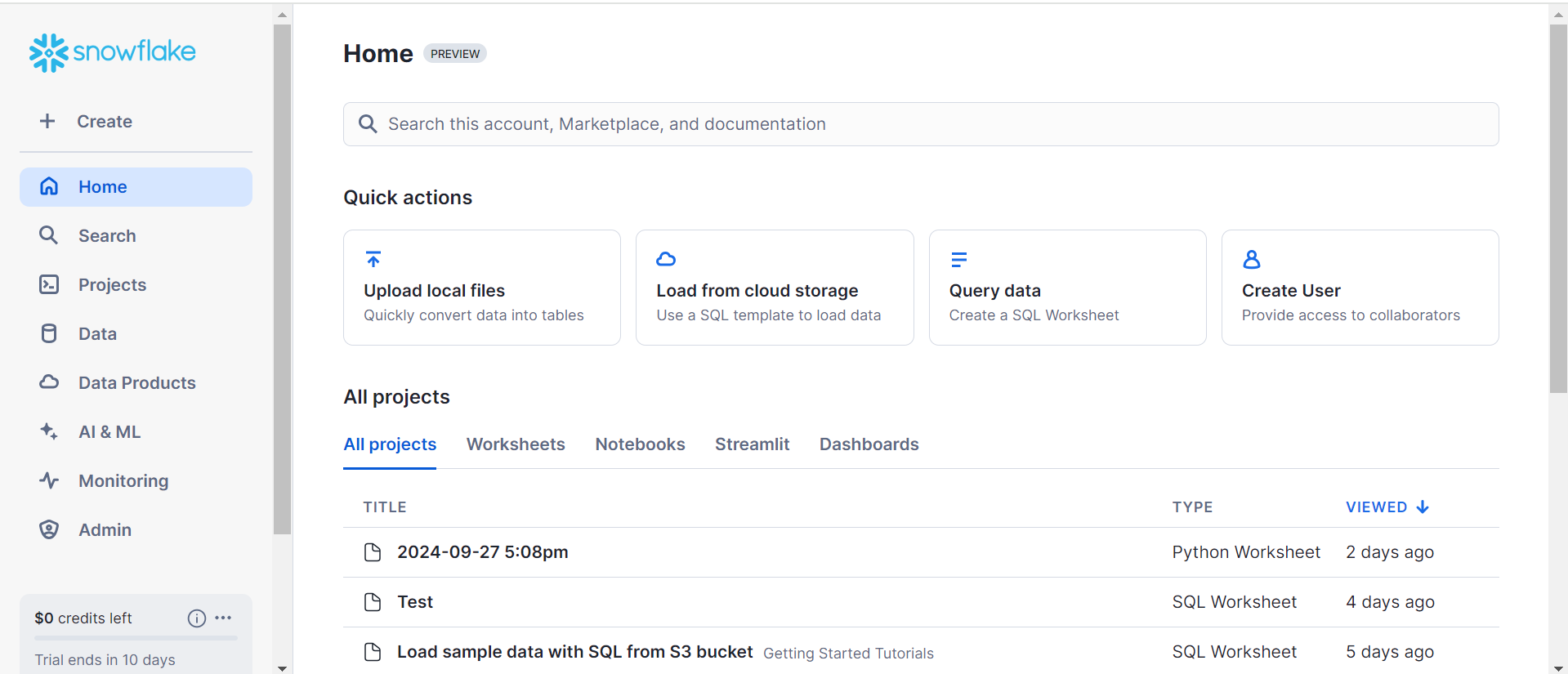

- First, log in to your Snowflake account.

- Then, set the ACCOUNTADMIN as an active role for the user session by executing the following code in the Snowflake console.

use role accountadmin;- Now, retrieve the IDs of the AWS Virtual Network (VNet) by querying the SYSTEM$GET_SNOWFLAKE_PLATFORM_INFO function in the console. You can follow the following code given below.

select system$get_snowflake_platform_info();- After getting the query result from the above replace the VPC IDs.

- Allow the VPC IDs by creating an Amazon S3 policy for a specific VPC.

- Now, lets’s provide an AWS Identify and Access Management (IAM) role to Snowflake so that you can access the Amazon S3 Bucket.

Step 2: Configuring an Amazon S3 Bucket

- You must allow Snowflake to access your Amazon S3 Bucket and folder to create new files. The following permissions are required for Snowflake Unload to S3:

- s3:DeleteObject

- s3:PutObject

- First, you have to create an IAM policy by logging in to your AWS Management Console and from the dashboard navigating to the Identity & Access Management (IAM) options under Security, Identity and Compliance section, as shown in the image below.

- Now, choose the “Account Settings” option from the left side navigation bar.

- Here, just expand the “Security Token Service Regions” list corresponding to the region your AWS account is located.

- Then, “Activate” the status if “Inactive“.

- Choose the “Policies” option from the left-hand navigation bar.

- Then, click on the “Create Policy” option, as shown in the image below.

- Click on the “JSON” tab and add a policy document to allow Snowflake Unload to S3.

- A sample policy document in JSON format is given below.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:GetObject",

"s3:GetObjectVersion",

"s3:DeleteObject",

"s3:DeleteObjectVersion"

],

"Resource": "arn:aws:s3:::<bucket>/<prefix>/*"

},

{

"Effect": "Allow",

"Action": [

"s3:ListBucket",

"s3:GetBucketLocation"

],

"Resource": "arn:aws:s3:::<bucket>",

"Condition": {

"StringLike": {

"s3:prefix": [

"<prefix>/*"

]

}

}

}

]

}- In the above code replace the <bucket> and <prefix> with the bucket name and folder path prefix.

- Then, click on the “Review policy” button and enter the policy name eg.- snowflake_access.

- Click on the “Create policy” button, as shown in the image below.

- Now, it’s time to create the IAM Role in AWS for Snowflake unload to S3.

- Choose the “Roles” from the left navigation bar and click on the “Create role” button, as shown in the image below.

- Here, select the “Another AWS account” as the trusted entity type.

- In the Account ID field, provide your AWS account ID.

- Then, select the “Require external ID” option. For now, enter the dummy ID as “0000“. You will need to modify it later to grant Snowflake Unload to S3.

- Click on the “Next” button, locate the policy that you created and again click on the “Next” button, as shown in the image below.

- Create the role by providing a valid name and description. You will have an IAM policy for the bucket, IAM role, and attached policy.

- After this, you have to record the “Role ARN” value located on the role summary page, as shown in the image below.

- Now, create a Snowflake Unload to S3 integration that references this role.

- Next, you need to create a Cloud Storage integration in Snowflake using the “CREATE STORAGE INTEGRATION” command.

- Open up the console and enter the following code given below.

CREATE STORAGE INTEGRATION <integration_name>

TYPE = EXTERNAL_STAGE

STORAGE_PROVIDER = S3

ENABLED = TRUE

STORAGE_AWS_ROLE_ARN = '<iam_role>'

[ STORAGE_AWS_OBJECT_ACL = 'bucket-owner-full-control' ]

STORAGE_ALLOWED_LOCATIONS = ('s3://<bucket>/<path>/', 's3://<bucket>/<path>/')

[ STORAGE_BLOCKED_LOCATIONS = ('s3://<bucket>/<path>/', 's3://<bucket>/<path>/') ]- Everything is ready for Snowflake Unload to S3, now you have to retrieve the AWS IAM user for your Snowflake account.

- For this, execute the following command given below to retrieve the ARN.

DESC INTEGRATION <integration_name>;- Here, provide the “integration_name” that you created.

- For example, the sample command is given below with the output.

desc integration s3_int;

+---------------------------+---------------+================================================================================+------------------+

| property | property_type | property_value | property_default |

+---------------------------+---------------+================================================================================+------------------|

| ENABLED | Boolean | true | false |

| STORAGE_ALLOWED_LOCATIONS | List | s3://mybucket1/mypath1/,s3://mybucket2/mypath2/ | [] |

| STORAGE_BLOCKED_LOCATIONS | List | s3://mybucket1/mypath1/sensitivedata/,s3://mybucket2/mypath2/sensitivedata/ | [] |

| STORAGE_AWS_IAM_USER_ARN | String | arn:aws:iam::123456789001:user/abc1-b-self1234 | |

| STORAGE_AWS_ROLE_ARN | String | arn:aws:iam::001234567890:role/myrole | |

| STORAGE_AWS_EXTERNAL_ID | String | MYACCOUNT_SFCRole=2_a123456/s0aBCDEfGHIJklmNoPq= | |

+---------------------------+---------------+================================================================================+------------------+- Record the “STORAGE_AWS_IAM_USER_ARN” and “STORAGE_AWS_EXTERNAL_ID” values from the output.

- Next, click on the “Trust relationships” from the “Roles” option located at the side navigation bar.

- Then, click on the “Edit trust relationship” button.

- Now, you can modify the policy document with the “DESC STORAGE INTEGRATION” output values you just recorded.

- The sample policy document for Snowflake Unload to S3 is given below.

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "",

"Effect": "Allow",

"Principal": {

"AWS": "<snowflake_user_arn>"

},

"Action": "sts:AssumeRole",

"Condition": {

"StringEquals": {

"sts:ExternalId": "<snowflake_external_id>"

}

}

}

]

}- In the above document, replace the recorded values.

- Then, click on the “Update Trust Policy” button.

- Now, you have to create an external stage for Snowflake Unload to S3 that references the storage integration you created earlier.

- You have to create the stage using the “CREATE STAGE” command as given below.

grant create stage on schema public to role myrole;

grant usage on integration s3_int to role myrole;- Now, set the “mydb.public” as the current Database and schema for the user session by creating the named stage “my_s3_stage”. You can follow the sample code given below.

use schema mydb.public;

create stage my_s3_stage

storage_integration = s3_int

url = 's3://bucket1/path1'

file_format = my_csv_format;Step 3: Unloading Data into an External Stage

- Create an external named stage using the web interface by clicking on the “Databases” option then, “<db_name>” and selecting then Stages.

- You can also do the same thing using the SQL command given below.

CREATE STAGE- The following sample code will create an external stage for Snowflake Unload to S3 given below.

create or replace stage my_ext_unload_stage url='s3://unload/files/'

storage_integration = s3_int

file_format = my_csv_unload_format;- In the above code, the name of the external stage is “my_ext_unload_stage” with bucket name as “unload” and folder “path” as files.

- Here, the data to unload as the file format object is “my_csv_unload_format“.

- Now, use the COPY INTO command for Snowflake Unload to S3 from a table using the external stage.

- The code for Snowflake Unload to S3 using the stage is given below.

copy into @my_ext_unload_stage/d1 from mytable;- In the above code, all the rows in “mytable” table are Snowflake Unload to S3 bucket. A “d1” filename prefix is applied to the files using the code given below.

- Then, retrieve the objects from the S3 bucket using the S3 console from the output.

That’s it! You have successfully performed Snowflake Unload to S3.

Key Takeaways

- In this article, you learned about Snowflake, Amazon S3, and the simple steps to Snowflake Unload to S3.

- The process of Snowflake Unload to S3 consists of accessing the required permission from the AWS console and Snowflake account.

- Configuring the staging area along with the data that needs to be unloaded from Snowflake to S3.

- Snowflake Data Unloading to S3 helps companies cut the cost of storing data that is not needed in the Data Warehouse or create a storage area with Snowflake.

Companies have business data available in multiple sources, and it’s a tedious process to load data manually from data sources to Snowflake. Hevo Data is a No-code Data Pipeline that can help you transfer data from any data source to the desired Snowflake. It fully automates the process to load and transform data from 150+ sources to a destination of your choice without writing a single line of code.

Want to take Hevo for a spin?

Sign up for a 14-day free trial and simplify your data integration process. Check out the pricing details to understand which plan fulfills all your business needs.

Share your experience of learning about Snowflake Unload to S3 in the comments section below!

Frequently Asked Questions

1. How do I give Snowflake access to S3?

To give Snowflake access to S3, create an IAM role in AWS, attach the required S3 permissions, and then link this role to Snowflake using an external ID.

2. What is unload in Snowflake?

UNLOAD in Snowflake is a command used to export data from a Snowflake table into external storage like Amazon S3 in a structured format, such as CSV or Parquet.

3. Which formats are supported for unloading data from Snowflake?

Snowflake supports formats like CSV, JSON, Parquet, ORC, and Avro for unloading data.