Business organizations receive data from many sources and can generate petabytes of it on a regular basis. With traditional databases, it is difficult to keep track of this data or to access it flexibly. This is where Google BigQuery helps, offering fast query performance and high availability at that scale. BigQuery lets you specify the Schema of a table when you load data into it or when you create an empty table. Alternatively, you can use Auto-Schema detection for supported data formats.

By the end of this article, you will have a working understanding of Google BigQuery and of the different ways you can specify a BigQuery Schema. Read along to learn more about BigQuery Schema and its components!

Introduction to Google BigQuery

Think of BigQuery as a powerful, serverless data warehouse that can handle anything from a data set the size of an Excel spreadsheet to petabytes of data, all running on Google Cloud infrastructure. It is built for very fast analysis of extremely large data sets, but keep in mind that it is not a transactional (OLTP) database.

Imagine you are a data scientist who has to handle terabytes of data in MySQL. There, you would have to worry about infrastructure, such as how much RAM or storage you need. With BigQuery, you just upload CSV or JSON files as a batch and start running queries. With no infrastructure to manage, you are free to concentrate on the actual work: data analysis.

A fully managed No-code Data Pipeline platform like Hevo Data helps you integrate and load data from 150+ different sources (including 60+ free sources) to a Data Warehouse such as Google BigQuery or Destination of your choice in real-time in an effortless manner. Check out some of the cool features of Hevo:

- Risk management and security framework for cloud-based systems with SOC2 Compliance.

- Always up-to-date data with real-time data sync.

- 24/5 Live Support will extend exceptional support to you.

Don’t just take our word for it. Try Hevo and experience why industry leaders like Whatfix say, “We’re extremely happy to have Hevo on our side.”

Introduction to BigQuery Schemas

In the general sense, a schema is a framework that helps organize and interpret information. For a BigQuery table, the Schema describes the table's columns: the name, data type, mode, and optional description of each one.

BigQuery also provides Information Schemas, a set of Metadata views that give comprehensive data on consumption and performance across the entire organization. The performance monitoring block built on them lets you analyze patterns of consumption to optimize slot capacity distribution.

Additionally, it identifies the troublesome queries and average query time across all projects in the organization.

Note: BigQuery lets you specify a table’s Schema either when you load data into a table or when you create an empty one.

In the next section of this article, you will learn about the key components of BigQuery Schemas. Read about BigQuery Information Schema to get more clarity on BigQuery metadata.

Key Components of BigQuery Schema

There are a total of 4 key components in BigQuery Schema. They are as follows:

1) Column Description

Each column can carry an optional description of up to 1024 characters. The description is useful for explaining what a particular column means.

2) Column Names

The Column Name identifies what each column holds, such as Date, User_Id, or Products.

A Column Name must contain only Latin letters (a-z, A-Z), numbers (0-9), or underscores (_), and it must start with a letter or an underscore.

Additionally, the maximum length of a Column Name is 300 characters.

NOTE: A Column Name cannot start with any of these prefixes: _TABLE_, _FILE_, _PARTITION. Duplicate names are not allowed, either.
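The naming rules above can be checked before a schema is ever sent to BigQuery. Below is a minimal sketch in plain Python; the function name column_name_problems is hypothetical, the prefix list covers only the three prefixes named above, and treating prefixes and duplicates as case-insensitive is an assumption rather than something this article states.

```python
import re

# Letters, digits, and underscores only; first character is a letter or underscore.
NAME_PATTERN = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")
# Only the prefixes listed in this article.
RESERVED_PREFIXES = ("_TABLE_", "_FILE_", "_PARTITION")
MAX_NAME_LENGTH = 300


def column_name_problems(names):
    """Return a list of readable problems found in a list of column names."""
    problems = []
    seen = set()
    for name in names:
        if len(name) > MAX_NAME_LENGTH:
            problems.append(f"{name[:20]}...: longer than {MAX_NAME_LENGTH} characters")
        if not NAME_PATTERN.fullmatch(name):
            problems.append(f"{name!r}: invalid characters or invalid first character")
        # Assumption: reserved prefixes are matched regardless of case.
        if name.upper().startswith(RESERVED_PREFIXES):
            problems.append(f"{name!r}: starts with a reserved prefix")
        # Assumption: names differing only in case count as duplicates.
        key = name.lower()
        if key in seen:
            problems.append(f"{name!r}: duplicate column name")
        seen.add(key)
    return problems


for problem in column_name_problems(["Date", "User_Id", "1st_item", "_TABLE_copy", "date"]):
    print(problem)
```

A check like this is only a convenience for catching mistakes early; BigQuery itself remains the authority on which names it accepts.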

3) Modes

BigQuery supports three Modes: Nullable, Required, and Repeated.

- In the Nullable Mode, null values are allowed.

- The Required Mode doesn’t allow any null value.

- Finally, in the Repeated Mode, the column holds an array of values of the specified type.

NOTE: Specifying a Mode is optional. If no Mode is specified, the column defaults to the Nullable Mode.
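To make the three Modes concrete, here is a small sketch of how a value could be checked against a column's Mode before loading. The helper check_value is hypothetical and does not validate data types; rejecting None inside a Repeated array is an assumption made for this example.

```python
def check_value(mode, value):
    """Return True if `value` is acceptable for a column with the given mode."""
    # An unspecified mode falls back to NULLABLE, as described above.
    mode = (mode or "NULLABLE").upper()
    if mode == "NULLABLE":
        return True  # any value, including None
    if mode == "REQUIRED":
        return value is not None
    if mode == "REPEATED":
        # The column holds an array; assumption: elements must not be None.
        return isinstance(value, list) and all(item is not None for item in value)
    raise ValueError(f"unknown mode: {mode}")


print(check_value(None, None))               # no mode given, so NULLABLE applies
print(check_value("REQUIRED", None))         # a REQUIRED column rejects None
print(check_value("REPEATED", ["a", "b"]))   # a list of values is accepted
```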

4) Data Type

Every column must also have a Data Type. Some of the most commonly used types are INT64, FLOAT64, NUMERIC, BOOLEAN (also written BOOL), and STRING.
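The four components fit naturally into one small record per column. The sketch below uses a hypothetical Column dataclass (it is not part of any BigQuery client library) to show the defaults and limits described above: the Mode defaults to NULLABLE and the description is capped at 1024 characters.

```python
from dataclasses import dataclass, asdict

VALID_MODES = ("NULLABLE", "REQUIRED", "REPEATED")


@dataclass
class Column:
    """One schema entry: name, data type, mode, and optional description."""
    name: str
    type: str
    mode: str = "NULLABLE"   # default when no mode is given
    description: str = ""    # optional

    def __post_init__(self):
        if self.mode not in VALID_MODES:
            raise ValueError(f"mode must be one of {VALID_MODES}")
        if len(self.description) > 1024:
            raise ValueError("description is limited to 1024 characters")

    def to_dict(self):
        """Return the column as a plain dict, ready to serialize as JSON."""
        return asdict(self)


columns = [
    Column("user_id", "INT64", mode="REQUIRED", description="Internal user identifier"),
    Column("products", "STRING", mode="REPEATED"),
    Column("date", "STRING"),
]
for column in columns:
    print(column.to_dict())
```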

3 Ways to Specify a Schema in BigQuery

1) Specifying Schemas using the API

- To specify a Schema when loading data, you need to call the jobs.insert method and configure the Schema property in the JobConfigurationLoad resource.

- On the other hand, if you want to specify a Schema using the API when you create a table, you need to call the tables.insert method and configure the Schema property in the Table resource.
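The two request bodies differ mainly in where the Schema sits. The following sketch only builds the JSON bodies as Python dicts and sends nothing over the network; the helper names, the project, dataset, and table IDs, and the Cloud Storage URI are all hypothetical, and the CSV source format is an assumption for the example.

```python
import json


def schema_property(columns):
    """Wrap column definitions in the shape used by the schema property."""
    return {"fields": [dict(column) for column in columns]}


def table_reference(project_id, dataset_id, table_id):
    return {"projectId": project_id, "datasetId": dataset_id, "tableId": table_id}


def tables_insert_body(project_id, dataset_id, table_id, columns):
    """Body for tables.insert: a Table resource carrying the schema."""
    return {
        "tableReference": table_reference(project_id, dataset_id, table_id),
        "schema": schema_property(columns),
    }


def jobs_insert_body(project_id, dataset_id, table_id, source_uris, columns):
    """Body for jobs.insert: the schema lives in the load configuration."""
    return {
        "configuration": {
            "load": {
                "sourceUris": list(source_uris),
                "sourceFormat": "CSV",  # assumption for this example
                "destinationTable": table_reference(project_id, dataset_id, table_id),
                "schema": schema_property(columns),
            }
        }
    }


columns = [
    {"name": "user_id", "type": "INT64", "mode": "REQUIRED"},
    {"name": "date", "type": "STRING", "mode": "NULLABLE"},
]
# All identifiers below are placeholders.
create_body = tables_insert_body("my-project", "mydataset", "mytable", columns)
load_body = jobs_insert_body(
    "my-project", "mydataset", "mytable", ["gs://my-bucket/data.csv"], columns
)
print(json.dumps(create_body, indent=2))
print(json.dumps(load_body, indent=2))
```

In practice an authenticated client would send these bodies; this sketch stops at constructing them so the placement of the Schema is easy to compare.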

2) Specifying Schemas using JSON Files

The next method is to define the BigQuery Schema in a JSON file.

- A JSON Schema file contains a JSON array in which each entry gives a column's Name, Mode, Data Type, and Description.

- Create the JSON Schema file in any text editor and enter the JSON array as follows:

[
  {
    "description": "[DESCRIPTION]",
    "name": "[NAME]",
    "type": "[TYPE]",
    "mode": "[MODE]"
  },
  {
    "description": "[DESCRIPTION]",
    "name": "[NAME]",
    "type": "[TYPE]",
    "mode": "[MODE]"
  }
]

- Once you create a JSON file, you can specify it using the bq command-line tool.

Pro tips:

- You can’t use a Schema file with the Cloud Console or the API.

- When you supply your JSON Schema file, store it in a locally readable location.

- You cannot specify a JSON Schema file that is stored in Cloud Storage or Google Drive.
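As an illustration of the JSON file method, the sketch below writes a Schema file to a local path and assembles a bq load command that passes the file as the last argument. The column definitions, the table name mydataset.mytable, and the source file ./mydata.csv are placeholders; the command is only built and printed here, not executed.

```python
import json
import os
import tempfile


def write_schema_file(columns, path):
    """Write the column definitions to `path` as a JSON array."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(columns, handle, indent=2)
    return path


def bq_load_command(table, source_path, schema_path, source_format="CSV"):
    """Build the argument list for a bq load call that uses a schema file."""
    return ["bq", "load", f"--source_format={source_format}",
            table, source_path, schema_path]


columns = [
    {"description": "Internal user identifier", "name": "user_id",
     "type": "INT64", "mode": "REQUIRED"},
    {"description": "Purchase date", "name": "date",
     "type": "STRING", "mode": "NULLABLE"},
]

# The schema file must live somewhere locally readable.
schema_path = os.path.join(tempfile.mkdtemp(), "myschema.json")
write_schema_file(columns, schema_path)

command = bq_load_command("mydataset.mytable", "./mydata.csv", schema_path)
print(" ".join(command))
```

Building the command as a list makes it straightforward to hand to subprocess.run later, once the bq tool is installed and authenticated.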

3) Specifying Schemas Manually

You can also specify the BigQuery Schema manually.

- When specifying a Schema manually in BigQuery, you can use either the Cloud Console or the bq command-line tool.

- BigQuery only supports manually specifying a Schema when you load CSV and JSON (newline delimited) files.

- In contrast, when you load Avro, Parquet, ORC, Firestore Export Data, or Datastore Export Data, the Schema is retrieved automatically from the self-describing data source.
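With the bq command-line tool, a manual Schema is typically written inline as comma-separated name:type pairs. The sketch below builds such a string from column definitions. The helper inline_schema is hypothetical, and it rests on the assumption that the inline form carries no Mode or description, so it refuses columns that need either and points to a JSON Schema file instead.

```python
def inline_schema(columns):
    """Build an inline schema string such as 'qtr:STRING,sales:FLOAT'."""
    parts = []
    for column in columns:
        mode = column.get("mode", "NULLABLE").upper()
        # Assumption: the inline form cannot express modes or descriptions.
        if mode != "NULLABLE" or column.get("description"):
            raise ValueError(
                f"column {column['name']!r} needs a JSON schema file "
                "to keep its mode or description"
            )
        parts.append(f"{column['name']}:{column['type']}")
    return ",".join(parts)


columns = [
    {"name": "qtr", "type": "STRING"},
    {"name": "sales", "type": "FLOAT"},
    {"name": "year", "type": "STRING"},
]
schema = inline_schema(columns)
# Placeholder table and file names; the command is printed, not run.
print(f"bq load --source_format=CSV mydataset.mytable ./myfile.csv {schema}")
```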

Follow the steps given below to manually specify the BigQuery Schema using Cloud Console:

- Step 1: Open the BigQuery page in the Cloud Console.

- Step 2: In the Explorer panel, expand your Project and select a dataset.

- Step 3: Expand the “Actions” option and click on “Open”.

- Step 4: Click “Create Table” in the details panel.

- Step 5: On the Create Table page, select “Empty Table” in the source section.

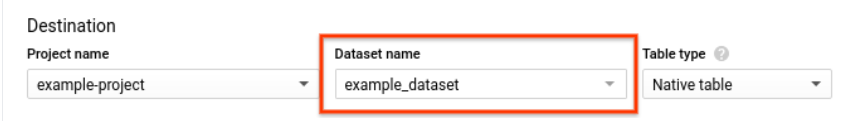

- Step 6: Go to the destination section on the Create Table page. Choose the appropriate dataset, enter the name of the table you want to create, and verify that the table type is set to Native table.

Conclusion

In this article, you learned about the key components of a BigQuery Schema and three ways to specify one. You can use BigQuery for various purposes such as setting up end-to-end analytics, evaluating customer acquisition channels, and even segmenting your customers.

Integrating and analyzing your data from a diverse set of data sources can be challenging and this is where Hevo Data comes into the picture.

Want to take Hevo for a spin? Sign Up for a 14-day free trial and experience the feature-rich Hevo suite firsthand. You can also have a look at our unbeatable pricing that will help you choose the right plan for your business needs!

Share your experience of learning about BigQuery Schemas. Let us know in the comments section below!

FAQs

1. What is the difference between schema and query?

A schema is a blueprint of your data structure, including which tables and fields to expect. Conversely, a query can be thought of as a request to pull or manipulate that data from the tables.

2. Does BigQuery have an information schema?

Yes, BigQuery does have an information schema. It is a series of views that give BigQuery users access to metadata about tables, views, datasets, routines, reservations, jobs, and streaming data. You can query it to get some insight into how your data is organized.

3. Is BigQuery a relational database or NoSQL?

BigQuery is a relational, SQL-driven data warehouse. Unlike most traditional relational databases, it is designed for analyzing large data sets rather than for transactional processing.